
Recommended paper: Segmentation and classification of breast tumors in ultrasound images based on deep adversarial learning

Conditional GAN (cGAN), Atrous Convolution (AC), Channel Attention with Channel Weighting (CAW).

This paper proposes a method for segmenting and classifying breast tumors in ultrasound images (cGAN AC CAW) based on deep adversarial learning. Although the paper dates from 2019, using a GAN for segmentation was a novel idea at the time. The paper integrates essentially every technique that could be combined at the time and achieves very good results, so it is well worth reading. In addition, the paper proposes a loss function that combines the typical adversarial loss, an L1-norm loss and an SSIM loss.

Semantic segmentation using cGAN AC CAW

Generator G

The generator network consists of an encoder with seven convolutional layers (En1 to En7) and a decoder with seven deconvolutional layers (Dn1 to Dn7).

An atrous convolution block is inserted between En3 and En4, with dilation rates of 1, 6 and 9, a 3×3 kernel and a stride of 2.
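To see what the three dilation rates buy, the NumPy sketch below implements a single-channel dilated (atrous) 2-D convolution and reports each rate's effective kernel span, k + (k - 1)(d - 1). Treating the three rates as parallel branches and padding each side by d pixels are assumptions (the article states neither); with that padding, all three stride-2 branches produce maps of the same size, so they could be concatenated. The function names are illustrative, not taken from the paper.

```python
import numpy as np

def effective_kernel_size(k: int, d: int) -> int:
    """Span (in pixels) covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)

def atrous_conv2d(x: np.ndarray, w: np.ndarray, dilation: int, stride: int) -> np.ndarray:
    """Single-channel dilated 2-D cross-correlation with zero padding.

    Padding of dilation * (k - 1) // 2 per side is an assumption chosen so
    that every dilation rate yields the same output size for a given stride.
    """
    k = w.shape[0]
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, pad)
    span = effective_kernel_size(k, dilation)
    out_h = (xp.shape[0] - span) // stride + 1
    out_w = (xp.shape[1] - span) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            # Sample the input on a grid spaced `dilation` pixels apart
            patch = xp[r:r + span:dilation, c:c + span:dilation]
            out[i, j] = np.sum(patch * w)
    return out

# Hypothetical parallel branches: 3x3 kernels, dilations 1, 6, 9, stride 2
rng = np.random.default_rng(0)
feature_map = rng.standard_normal((64, 64))
kernel = rng.standard_normal((3, 3))
branches = [atrous_conv2d(feature_map, kernel, d, stride=2) for d in (1, 6, 9)]
for d, b in zip((1, 6, 9), branches):
    print(f"dilation={d}: span={effective_kernel_size(3, d)}, output shape={b.shape}")
```

A 3×3 kernel with dilation 9 covers a 19×19 window while still using only nine weights, which is why atrous convolution is a cheap way to enlarge the receptive field.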

A channel attention with channel weighting (CAW) block is placed between En7 and Dn1.

The CAW block combines a channel attention module (from DANet) with a channel weighting block (from SENet), increasing the representational power of the generator's highest-level features.
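The article does not say how the two parts of CAW are wired together. The NumPy sketch below assumes, purely for illustration, that a DANet-style channel attention module (softmax over channel-to-channel similarities plus a residual connection) is applied first and an SENet-style squeeze-and-excitation weighting second. The weights are random placeholders, and `caw_block` and the other names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(feat, gamma):
    """DANet-style channel attention on a (C, H, W) feature map."""
    c, h, w = feat.shape
    a = feat.reshape(c, h * w)               # (C, N) with N = H * W
    attn = softmax(a @ a.T, axis=-1)         # (C, C) channel-to-channel weights
    out = (attn @ a).reshape(c, h, w)        # re-mix channels by attention
    return gamma * out + feat                # residual; gamma is learnable in DANet, fixed here

def squeeze_excitation(feat, w1, w2):
    """SENet-style channel weighting on a (C, H, W) feature map."""
    z = feat.mean(axis=(1, 2))               # squeeze: global average pool -> (C,)
    hidden = np.maximum(w1 @ z, 0.0)         # FC + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden))) # FC + sigmoid -> (C,) weights in (0, 1)
    return feat * s[:, None, None], s

def caw_block(feat, gamma, w1, w2):
    """Hypothetical CAW composition: channel attention first, then SE weighting."""
    return squeeze_excitation(channel_attention(feat, gamma), w1, w2)

rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 2                      # toy sizes; r is the SE reduction ratio
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y, weights = caw_block(x, gamma=0.5, w1=w1, w2=w2)
print(y.shape, weights.round(3))
```

Both parts preserve the (C, H, W) shape, so a block like this can sit between the encoder and decoder without changing the surrounding layers.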

Discriminator D

It is a sequence of convolutional layers.

The input to the discriminator is a concatenation of the image and a binary mask marking the tumor region.

The output of the discriminator is a 10×10 matrix with values ranging from 0.0 (completely fake) to 1.0 (real).
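The NumPy sketch below shows how the discriminator's input pair can be built and how a 10×10 output map is commonly scored with binary cross-entropy against all-ones (real) or all-zeros (fake) targets. It assumes a single-channel ultrasound image, a channels-first layout and a 128×128 input; the random score maps only stand in for the network's output, and the helper names are hypothetical.

```python
import numpy as np

def discriminator_input(image, mask):
    """Stack a grayscale image (H, W) and a binary mask (H, W) into a (2, H, W) array."""
    return np.stack([image.astype(np.float32), mask.astype(np.float32)], axis=0)

def patch_bce(scores, target, eps=1e-7):
    """Mean binary cross-entropy between a score map and a constant target (0 or 1)."""
    p = np.clip(scores, eps, 1.0 - eps)
    t = np.full_like(p, float(target))
    return float(np.mean(-(t * np.log(p) + (1 - t) * np.log(1 - p))))

def discriminator_loss(real_scores, fake_scores):
    """Every patch of a real pair should score 1.0, every patch of a generated pair 0.0."""
    return patch_bce(real_scores, 1) + patch_bce(fake_scores, 0)

rng = np.random.default_rng(0)
image = rng.random((128, 128))
mask = (rng.random((128, 128)) > 0.5).astype(np.uint8)
pair = discriminator_input(image, mask)
real_scores = rng.uniform(0.6, 1.0, size=(10, 10))  # placeholder D output on a real pair
fake_scores = rng.uniform(0.0, 0.4, size=(10, 10))  # placeholder D output on a generated pair
print(pair.shape, round(discriminator_loss(real_scores, fake_scores), 4))
```

Because each of the 100 outputs judges a separate region of the input, the discriminator penalizes local errors along the mask boundary rather than only a single global verdict.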

Loss function

The loss function of generator G consists of three terms: an adversarial loss (binary cross-entropy), an L1-norm loss that promotes the learning process, and an SSIM loss that improves the shape of the segmentation mask boundaries.
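Written in the usual conditional-GAN notation, with x the ultrasound image, y the ground-truth mask, G(x, z) the predicted mask and λ, α weighting factors, a loss of this description can be expressed as follows. The symbols λ and α and the use of 1 - SSIM as the SSIM loss are assumptions, not necessarily the paper's exact formulation.

```latex
\ell_{G}(G, D) = \mathbb{E}_{x,y,z}\big[-\log D\big(x, G(x,z)\big)\big]
  + \lambda\,\mathbb{E}_{x,y,z}\big[\lVert y - G(x,z)\rVert_{1}\big]
  + \alpha\,\mathbb{E}_{x,y,z}\big[1 - \mathrm{SSIM}\big(y, G(x,z)\big)\big]
```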

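Here, z is a random variable. The loss function of discriminator D is the corresponding adversarial loss, which drives D to score real image-mask pairs as 1.0 and generated pairs as 0.0.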
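The three generator terms can be sketched in NumPy as follows. This is an illustration under assumptions: SSIM is computed over the whole mask as a single window (common implementations use local Gaussian windows), the constants c1 and c2 follow the usual choice for data in [0, 1], and the weights `lam` and `alpha` are placeholders, not values from the paper.

```python
import numpy as np

EPS = 1e-7

def global_ssim(a, b, c1=0.01 ** 2, c2=0.03 ** 2):
    """Single-window SSIM between two arrays with values in [0, 1]."""
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))

def generator_loss(d_fake_scores, pred_mask, true_mask, lam=1.0, alpha=1.0):
    """Adversarial BCE + lam * L1 + alpha * (1 - SSIM); lam and alpha are placeholders."""
    # Adversarial term: G wants D to score its output as real (1.0) on every patch
    adv = float(np.mean(-np.log(np.clip(d_fake_scores, EPS, 1.0))))
    l1 = float(np.mean(np.abs(true_mask - pred_mask)))
    ssim_term = 1.0 - float(global_ssim(pred_mask, true_mask))
    return adv + lam * l1 + alpha * ssim_term, (adv, l1, ssim_term)

rng = np.random.default_rng(0)
true_mask = np.zeros((64, 64))
true_mask[20:44, 16:48] = 1.0
pred_mask = np.clip(true_mask + 0.1 * rng.standard_normal((64, 64)), 0.0, 1.0)
d_scores = rng.uniform(0.3, 0.7, size=(10, 10))  # placeholder 10x10 D output
total, parts = generator_loss(d_scores, pred_mask, true_mask)
print(round(total, 4), [round(p, 4) for p in parts])
```

The L1 term penalizes per-pixel differences, while the SSIM term compares means, variances and covariance, so it reacts to structural changes such as a distorted boundary even when the pixel error is small.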

Classification using a random forest

Each image is fed into the trained generator network to obtain the tumor boundary, from which 13 statistical features are computed: fractal dimension, lacunarity, convex hull, convexity, circularity, area, perimeter, centroid, minor and major axis lengths, smoothness, Hu moments (6) and central moments (up to order 3).
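Several of these features can be computed directly from a binary tumor mask. The NumPy sketch below covers area, centroid, a pixel-edge perimeter, circularity (4πA/P²) and a box-counting estimate of the boundary's fractal dimension. These are common textbook definitions assumed for illustration; the paper's exact formulas, and the remaining features (lacunarity, convexity, Hu moments and so on), may differ.

```python
import numpy as np

def boundary_pixels(mask):
    """Foreground pixels with at least one background 4-neighbour."""
    m = mask.astype(bool)
    p = np.pad(m, 1)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return m & ~interior

def perimeter_edges(mask):
    """Perimeter as the number of foreground/background pixel edges (crude but simple)."""
    p = np.pad(mask.astype(np.int8), 1)
    return int(np.abs(np.diff(p, axis=0)).sum() + np.abs(np.diff(p, axis=1)).sum())

def box_counting_dimension(boundary, sizes=(2, 4, 8, 16)):
    """Slope of log(occupied boxes) against log(1 / box size)."""
    counts = []
    for s in sizes:
        h, w = (boundary.shape[0] // s) * s, (boundary.shape[1] // s) * s
        blocks = boundary[:h, :w].reshape(h // s, s, w // s, s)
        counts.append(np.count_nonzero(blocks.any(axis=(1, 3))))
    slope, _ = np.polyfit(np.log(1.0 / np.asarray(sizes)), np.log(counts), 1)
    return float(slope)

def shape_features(mask):
    m = mask.astype(bool)
    area = int(m.sum())
    rows, cols = np.nonzero(m)
    perimeter = perimeter_edges(m)
    return {
        "area": area,
        "centroid": (float(rows.mean()), float(cols.mean())),
        "perimeter": perimeter,
        "circularity": 4 * np.pi * area / perimeter ** 2,  # 1.0 for an ideal continuous circle
        "fractal_dimension": box_counting_dimension(boundary_pixels(m)),
    }

mask = np.zeros((64, 64), dtype=np.uint8)
mask[16:48, 16:48] = 1  # a 32 x 32 square "tumour"
print(shape_features(mask))
```

Smooth, compact outlines (more typical of benign tumors) give a fractal dimension close to 1, while irregular, spiculated outlines push it higher; the box-counting estimate is only rough on small masks.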

An exhaustive feature selection (EFS) algorithm is then used to select the optimal feature subset. EFS identifies fractal dimension, lacunarity, convex hull and centroid as the four optimal features.
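Exhaustive feature selection scores every non-empty subset of the candidate features and keeps the best one, which is feasible for 13 features (2^13 - 1 = 8191 subsets). In the sketch below the scoring function is a stand-in: in practice it would be, for example, the cross-validated accuracy of the classifier trained on that subset. `toy_score` and its utilities are invented purely so the search has a known answer.

```python
from itertools import combinations
from typing import Callable, Optional, Sequence, Tuple

def exhaustive_feature_selection(
    features: Sequence[str],
    score: Callable[[Tuple[str, ...]], float],
    max_size: Optional[int] = None,
) -> Tuple[Tuple[str, ...], float]:
    """Score every non-empty subset (up to max_size features) and return the best one."""
    max_size = max_size or len(features)
    best_subset: Tuple[str, ...] = ()
    best_score = float("-inf")
    for k in range(1, max_size + 1):
        for subset in combinations(features, k):
            s = score(subset)
            if s > best_score:  # strict '>' keeps the earlier (smaller) subset on ties
                best_subset, best_score = subset, s
    return best_subset, best_score

FEATURES = [
    "fractal_dimension", "lacunarity", "convex_hull", "convexity", "circularity",
    "area", "perimeter", "centroid", "minor_axis_length", "major_axis_length",
    "smoothness", "hu_moments", "central_moments",
]

# Invented utilities standing in for classifier accuracy (not data from the paper)
TOY_UTILITY = {f: 0.05 for f in FEATURES}
TOY_UTILITY.update(fractal_dimension=0.30, lacunarity=0.25, convex_hull=0.20, centroid=0.15)

def toy_score(subset):
    # Reward useful features and penalise subset size by 0.1 per feature
    return sum(TOY_UTILITY[f] for f in subset) - 0.1 * len(subset)

best, best_score = exhaustive_feature_selection(FEATURES, toy_score)
print(best, round(best_score, 3))
```

The cost grows as 2^n, so exhaustive search is only practical for small candidate sets like this one; larger sets usually call for greedy forward or backward selection.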

These selected features are fed into a random forest classifier, which is then trained to distinguish between benign and malignant tumors.

Result Comparison

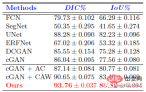

Segmentation

The dataset contains ultrasound images of 150 malignant and 100 benign tumors. For training the model, it was randomly divided into a training set (70%), a validation set (10%) and a test set (20%).
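Assuming one image per tumor (250 images in total), a 70/10/20 split gives 175 training, 25 validation and 50 test images. A minimal sketch of such a random split follows; the seed and the use of NumPy's permutation are our choices, and the paper may have split differently (for example, stratified by class).

```python
import numpy as np

def random_split(n_items, fractions=(0.7, 0.1, 0.2), seed=0):
    """Shuffle indices 0..n_items-1 and cut them into train/val/test parts."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_items)
    n_train = round(fractions[0] * n_items)
    n_val = round(fractions[1] * n_items)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

labels = np.array([1] * 150 + [0] * 100)  # 1 = malignant, 0 = benign
train, val, test = random_split(len(labels))
print(len(train), len(val), len(test))    # 175 25 50
```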

The proposed model (cGAN AC CAW) outperforms the other models on all metrics, with Dice and IoU scores of 93.76% and 88.82%, respectively.
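For a predicted mask P and a ground-truth mask G, Dice = 2|P∩G| / (|P| + |G|) and IoU = |P∩G| / |P∪G|. The sketch below computes both, along with false-negative and false-positive pixel counts. For a single image the two scores are linked by Dice = 2·IoU / (1 + IoU); averages over a test set need not satisfy this relation exactly. The function name is illustrative.

```python
import numpy as np

def segmentation_scores(pred, truth):
    """Dice, IoU, false-negative and false-positive pixel counts for binary masks.

    Assumes at least one mask is non-empty (otherwise the ratios are undefined).
    """
    p, g = pred.astype(bool), truth.astype(bool)
    inter = np.logical_and(p, g).sum()
    union = np.logical_or(p, g).sum()
    dice = 2.0 * inter / (p.sum() + g.sum())
    iou = inter / union
    fn = int(np.logical_and(~p, g).sum())  # tumour pixels the model missed
    fp = int(np.logical_and(p, ~g).sum())  # background pixels labelled as tumour
    return float(dice), float(iou), fn, fp

truth = np.zeros((10, 10), dtype=np.uint8)
truth[2:8, 2:8] = 1  # 36-pixel square
pred = np.zeros((10, 10), dtype=np.uint8)
pred[3:9, 2:8] = 1   # same square shifted down by one row
dice, iou, fn, fp = segmentation_scores(pred, truth)
print(round(dice, 4), round(iou, 4), fn, fp)  # 0.8333 0.7143 6 6
```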

Box plots compare the IoU and Dice scores of the proposed model with those of segmentation models such as FCN, SegNet, ERFNet and U-Net.

For the proposed model, the Dice coefficient ranges from 88% to 94% and IoU from 80% to 89%, whereas the other deep segmentation methods (FCN, SegNet, ERFNet and U-Net) show wider ranges.

As the segmentation results in the figure above show, SegNet and ERFNet produced the worst results, with large false-negative areas (red) and some false-positive areas (green).

While U-Net, DCGAN, and cGAN provide good segmentation, the model proposed in the paper provides more accurate breast tumor boundary segmentation.

Classification

The proposed breast tumor classification method outperforms [9], with an overall accuracy of 85%.

The above is the detailed content of Recommended paper: Segmentation and classification of breast tumors in ultrasound images based on deep adversarial learning. For more information, please follow other related articles on the PHP Chinese website!

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM人工智能Artificial Intelligence(AI)、机器学习Machine Learning(ML)和深度学习Deep Learning(DL)通常可以互换使用。但是,它们并不完全相同。人工智能是最广泛的概念,它赋予机器模仿人类行为的能力。机器学习是将人工智能应用到系统或机器中,帮助其自我学习和不断改进。最后,深度学习使用复杂的算法和深度神经网络来重复训练特定的模型或模式。让我们看看每个术语的演变和历程,以更好地理解人工智能、机器学习和深度学习实际指的是什么。人工智能自过去 70 多

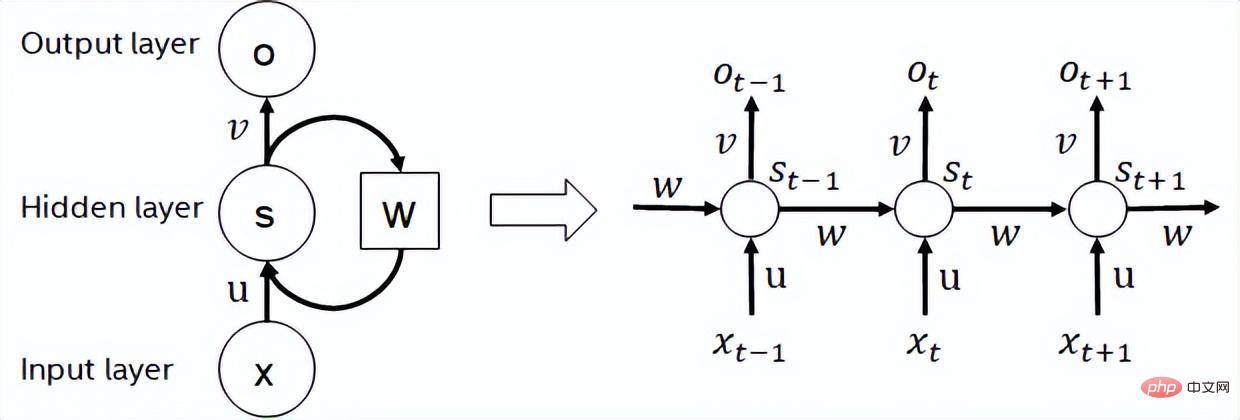

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM

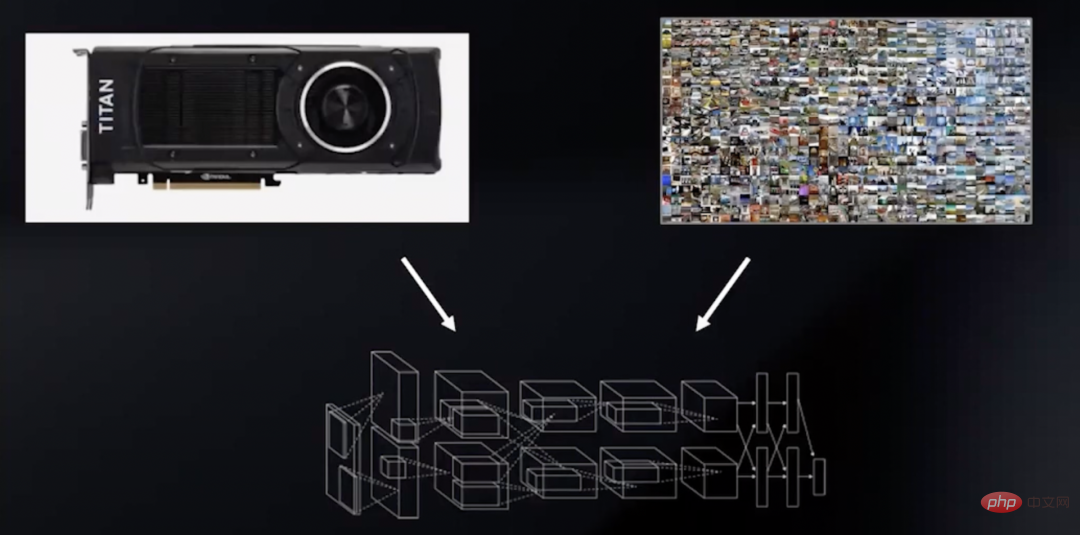

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM众所周知,在处理深度学习和神经网络任务时,最好使用GPU而不是CPU来处理,因为在神经网络方面,即使是一个比较低端的GPU,性能也会胜过CPU。深度学习是一个对计算有着大量需求的领域,从一定程度上来说,GPU的选择将从根本上决定深度学习的体验。但问题来了,如何选购合适的GPU也是件头疼烧脑的事。怎么避免踩雷,如何做出性价比高的选择?曾经拿到过斯坦福、UCL、CMU、NYU、UW 博士 offer、目前在华盛顿大学读博的知名评测博主Tim Dettmers就针对深度学习领域需要怎样的GPU,结合自

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM一. 背景介绍在字节跳动,基于深度学习的应用遍地开花,工程师关注模型效果的同时也需要关注线上服务一致性和性能,早期这通常需要算法专家和工程专家分工合作并紧密配合来完成,这种模式存在比较高的 diff 排查验证等成本。随着 PyTorch/TensorFlow 框架的流行,深度学习模型训练和在线推理完成了统一,开发者仅需要关注具体算法逻辑,调用框架的 Python API 完成训练验证过程即可,之后模型可以很方便的序列化导出,并由统一的高性能 C++ 引擎完成推理工作。提升了开发者训练到部署的体验

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM深度学习 (DL) 已成为计算机科学中最具影响力的领域之一,直接影响着当今人类生活和社会。与历史上所有其他技术创新一样,深度学习也被用于一些违法的行为。Deepfakes 就是这样一种深度学习应用,在过去的几年里已经进行了数百项研究,发明和优化各种使用 AI 的 Deepfake 检测,本文主要就是讨论如何对 Deepfake 进行检测。为了应对Deepfake,已经开发出了深度学习方法以及机器学习(非深度学习)方法来检测 。深度学习模型需要考虑大量参数,因此需要大量数据来训练此类模型。这正是

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PM

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PMPart 01 概述 在实时音视频通信场景,麦克风采集用户语音的同时会采集大量环境噪声,传统降噪算法仅对平稳噪声(如电扇风声、白噪声、电路底噪等)有一定效果,对非平稳的瞬态噪声(如餐厅嘈杂噪声、地铁环境噪声、家庭厨房噪声等)降噪效果较差,严重影响用户的通话体验。针对泛家庭、办公等复杂场景中的上百种非平稳噪声问题,融合通信系统部生态赋能团队自主研发基于GRU模型的AI音频降噪技术,并通过算法和工程优化,将降噪模型尺寸从2.4MB压缩至82KB,运行内存降低约65%;计算复杂度从约186Mflop

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM导读深度学习已在面向自然语言处理等领域的实际业务场景中广泛落地,对它的推理性能优化成为了部署环节中重要的一环。推理性能的提升:一方面,可以充分发挥部署硬件的能力,降低用户响应时间,同时节省成本;另一方面,可以在保持响应时间不变的前提下,使用结构更为复杂的深度学习模型,进而提升业务精度指标。本文针对地址标准化服务中的深度学习模型开展了推理性能优化工作。通过高性能算子、量化、编译优化等优化手段,在精度指标不降低的前提下,AI模型的模型端到端推理速度最高可获得了4.11倍的提升。1. 模型推理性能优化

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM今天的主角,是一对AI界相爱相杀的老冤家:Yann LeCun和Gary Marcus在正式讲述这一次的「新仇」之前,我们先来回顾一下,两位大神的「旧恨」。LeCun与Marcus之争Facebook首席人工智能科学家和纽约大学教授,2018年图灵奖(Turing Award)得主杨立昆(Yann LeCun)在NOEMA杂志发表文章,回应此前Gary Marcus对AI与深度学习的评论。此前,Marcus在杂志Nautilus中发文,称深度学习已经「无法前进」Marcus此人,属于是看热闹的不

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM过去十年是深度学习的“黄金十年”,它彻底改变了人类的工作和娱乐方式,并且广泛应用到医疗、教育、产品设计等各行各业,而这一切离不开计算硬件的进步,特别是GPU的革新。 深度学习技术的成功实现取决于三大要素:第一是算法。20世纪80年代甚至更早就提出了大多数深度学习算法如深度神经网络、卷积神经网络、反向传播算法和随机梯度下降等。 第二是数据集。训练神经网络的数据集必须足够大,才能使神经网络的性能优于其他技术。直至21世纪初,诸如Pascal和ImageNet等大数据集才得以现世。 第三是硬件。只有

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Atom editor mac version download

The most popular open source editor