To save an AI that has made a mistake, you cannot just rely on beatings and scoldings.

Many studies have found that AI has, rather shamelessly, learned to discriminate by gender.

What can be done about it?

Recently, a study by Tsinghua & Fudan gave suggestions for this:

If you want to drag AI back from the road of sexism, a scolding will not be effective.

The better way is to understand why the child behaves this way, then prescribe the right remedy and reason with it.

Because if you only lecture without reasoning and correct it by brute force, the AI will be scared stupid (its performance will drop)!

Oh my, raising a four-legged gold-eating beast is hard enough. Is raising (training) a cyber child just as hard?

Let’s take a look at what suggestions this group of AI “nanny dads” offer for training the child.

Reason with it, and AI can reduce gender discrimination

Before this, it is not that nobody had grabbed the wayward AI by the ear and tried to rid it of its habit of favoring men over women.

However, most current debiasing methods degrade the model's performance on other tasks.

For example, if you make an AI weaken its gender discrimination, you get this annoying result:

It either can no longer tell whether "dad" is male or female, or it makes grammatical errors, such as forgetting the -s on a verb that follows a third-person singular subject.

What’s even more annoying is that this degradation mechanism is not yet well understood.

So one option is to simply abandon models with obvious gender bias.

In 2018, Amazon noticed that the model it used to automatically screen resumes discriminated against female job seekers, so it shelved the system.

The other option is to put up with the performance degradation.

Does this mean that if you want AI to stop being a wayward, problematic AI, it must inevitably lose its wits?

The Tsinghua & Fudan study says no.

Their research focuses on pre-trained language models.

That is because these models perform remarkably well across NLP tasks and are used in many practical scenarios.

Gender bias becomes a real problem when such models are deployed in socially sensitive applications such as online advertising, automated resume screening, and education.

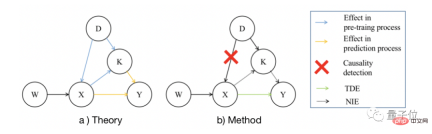

The study proposes a causal framework, a theoretical account of where AI gender bias comes from, which explains how data imbalance during pre-training leads to gender bias in the model.

They define the gender bias of a pre-trained model on a specific prediction task by comparing the model's prediction with the ground truth.

Here, M is the model, Y is the word predicted by M, and B is the degree of M's gender bias.

Y0|W is the ground truth, under which the word is male-related or female-related with probability one-half each, and Y|W is M's prediction.

If M's predicted Y is distributed unevenly between the genders, then model M exhibits gender bias when predicting Y0 from W.
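To make the idea concrete, here is a minimal sketch of measuring how far a prediction departs from the balanced ground truth of one-half. It is not the paper's definition of B; the function name `gender_bias` and the input probabilities are assumptions made purely for illustration.

```python
def gender_bias(p_male: float, p_female: float) -> float:
    """Toy bias score: how far the predicted male share is from 1/2.

    Assumption: p_male and p_female are the probability mass a model puts
    on male-related and female-related words for the same context W.
    A positive score leans male, a negative score leans female.
    """
    total = p_male + p_female
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    return p_male / total - 0.5


# Hypothetical predictions, not taken from any real model.
balanced = gender_bias(0.5, 0.5)   # matches the ground truth
skewed = gender_bias(0.75, 0.25)   # leans male
print(balanced, skewed)
```

A score of zero corresponds to the balanced case described above; anything else indicates that the prediction is spread unevenly between the genders.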

During pre-training, the optimization algorithm determines the parameters of the embedding part and K from the pre-training data D.

Therefore, an imbalance in the data D misleads the model into learning incorrect parameters.

For example, if the word "doctor" in the training data is more often associated with male vocabulary, the model will take it for granted that "doctor" is associated with "male gender".
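The kind of imbalance described here can be illustrated by counting co-occurrences in a tiny corpus. This is only a sketch: the word lists, the toy sentences, and the helper `cooccurrence` are all made up for illustration and are not from the study.

```python
from collections import Counter

# Hypothetical word lists; real studies use much larger curated lists.
MALE_WORDS = {"he", "him", "his", "man"}
FEMALE_WORDS = {"she", "her", "hers", "woman"}


def cooccurrence(sentences, target):
    """Count gendered words in sentences that contain the target word."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        if target not in tokens:
            continue
        counts["male"] += sum(t in MALE_WORDS for t in tokens)
        counts["female"] += sum(t in FEMALE_WORDS for t in tokens)
    return counts


# Toy corpus in which "doctor" appears more often with male words.
toy_corpus = [
    "the doctor said he would call",
    "the doctor finished his shift",
    "the doctor said she was busy",
    "the nurse said she would help",
]
counts = cooccurrence(toy_corpus, "doctor")
print(counts["male"], counts["female"])
```

A model trained on data skewed like this toy corpus would see "doctor" next to male words twice as often as next to female words.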

Have you seen this triangle? Let’s use it to explain why the current method of correcting AI will make it stupid.

When the pre-trained model is applied to predict Y from W, it first converts W into the extracted representation X, and then determines the mean of Y from X and K.

Because the parameters in the embedding part were misled, W is converted into an incorrect X, and K is incorrect as well.

Put together, the wrong X and the wrong K lead to an error in Y.

These errors, and their interaction, lead to gender bias through three potential mechanisms.

In other words, at this point, gender bias has arisen.

How do current debiasing methods for "educating" AI work?

All current debiasing methods intervene in one or more of the three mechanisms.

The details are as follows:

- Data augmentation intervenes on D and therefore in all three mechanisms.

- Eliminating the geometric projection of X onto the gender space in K cuts off the D→X→K→Y path.

- Gender-equality regularization distorts either the relationship between D and X or the relationship between D and K, so this type of method interferes with the D→X→Y and D→X→K→Y mechanisms.
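As an illustration of the projection idea in the second item, here is a simplified sketch that removes a vector's component along a single gender direction. Real methods typically work with a learned gender subspace; the 3-dimensional vectors, the `gender_direction` value, and the helper names are assumptions for this example only.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def remove_projection(x, direction):
    """Return x minus its projection onto `direction`."""
    norm_sq = dot(direction, direction)
    if norm_sq == 0:
        raise ValueError("direction must be non-zero")
    scale = dot(x, direction) / norm_sq
    return [a - scale * d for a, d in zip(x, direction)]


# Hypothetical gender direction and embedding, chosen for readability.
gender_direction = [1.0, 0.0, 0.0]
doctor = [0.8, 0.3, -0.5]

debiased = remove_projection(doctor, gender_direction)
print(debiased, dot(debiased, gender_direction))
```

After the projection is removed, the vector has no component left along the gender direction, while its other coordinates are unchanged.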

After explaining the bias-performance dilemma existing in current debiasing methods, the team tried to propose a fine-tuning method.

They found that among the three mechanisms, D→X→Y is the only one that leads to gender bias without involving the transformer.

If the fine-tuning method only corrects the bias through D→X→Y, it can reduce gender bias while maintaining the performance of the model.

Based on the decomposition theorem, the team conducted numerical experiments.

It turns out that this approach can bring double dividends:

Reduce some gender bias while avoiding performance degradation.

Through experiments, the team located the sources of AI gender bias in two components of the pre-trained model: the word embeddings and the transformer.

Accordingly, the research team proposed the C4D method, which reduces gender bias by adjusting the token embeddings.

The core idea of this method is to reduce the TDE function by correcting the misled X, thereby reducing the total bias.

Although the team does not know what the correct token embeddings are, they developed a gradient-based method to infer the underlying ground truth.
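The following toy sketch shows the general pattern of adjusting only an embedding by gradient descent while everything downstream stays frozen. It is not the C4D algorithm: the objective (a squared male-versus-female logit gap), the vectors `frozen_k` and `doctor_x`, and the function `adjust_embedding` are all assumptions made for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def adjust_embedding(x, k, lr=0.1, steps=50):
    """Gradient descent on the embedding x only, with k held fixed.

    Toy objective: (k . x)^2, where k . x stands in for the gap between
    the model's male and female logits. Its gradient w.r.t. x is 2*(k . x)*k.
    """
    x = list(x)
    for _ in range(steps):
        gap = dot(k, x)
        grad = [2.0 * gap * kj for kj in k]
        x = [xj - lr * gj for xj, gj in zip(x, grad)]
    return x


# Hypothetical frozen downstream weights and a biased embedding.
frozen_k = [1.0, -0.5]
doctor_x = [0.9, 0.2]

adjusted_x = adjust_embedding(doctor_x, frozen_k)
gap_before = dot(frozen_k, doctor_x)
gap_after = dot(frozen_k, adjusted_x)
print(gap_before, gap_after)
```

Because only the embedding is updated, the downstream weights are untouched, which mirrors the motivation for correcting bias through the D→X→Y path alone.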

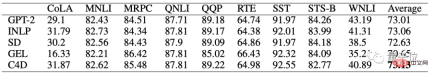

With everything in place, the team applied the C4D method to GPT-2 to test its debiasing results.

The results show that among all tested methods, C4D has the lowest perplexity on the small, medium, and extra-large GPT-2 models.

On the large GPT-2 model, C4D’s perplexity ranked second, only 0.4% worse than the best score.

Moreover, the best-scoring method on that model debiases gender discrimination less effectively than C4D.
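For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-probability a model assigns to the observed tokens, and lower is better. The sketch below shows that standard formula and how a relative gap such as "0.4% worse" is computed; the probabilities and the 100.0 and 100.4 values are invented placeholders, not results from the paper.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability of the observed tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


def relative_gap(ppl, best_ppl):
    """How much worse `ppl` is than `best_ppl`, as a fraction."""
    return (ppl - best_ppl) / best_ppl


# A model that is uniformly unsure among 4 choices has perplexity 4.
uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])

# Hypothetical scores illustrating a 0.4% gap.
gap = relative_gap(100.4, 100.0)
print(uniform_ppl, gap)
```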

On the GLUE dataset, the C4D method obtained the highest average score.

This shows that C4D can significantly reduce gender bias and maintain model performance.

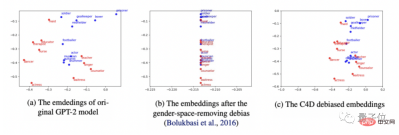

After listening to so many theoretical introductions, let’s look at an illustration to get an intuitive feel.

In the three figures below, the blue dots represent embeddings with male bias, and the red dots represent embeddings with female bias.

Figure (a) is the AI's original understanding; Figure (b) is the AI's understanding after humans scolded it aimlessly; Figure (c) is the AI's understanding after humans found the cause and patiently explained it.

In figures (b) and (c), the embedding of male bias and female bias is more concentrated, which means the level of bias is lower.

At the same time, it can be noted that the embedding in Figure (c) still maintains the topology in Figure (a), which is why the C4D method can maintain model performance.

Researcher: It may also be able to reduce other biases in AI

"Although this method can effectively alleviate AI's gender bias in language models, it is still not enough to completely eliminate it."

The researchers candidly pointed out this limitation.

If you want to further correct AI bias without reducing AI performance, you need to better understand the mechanism of language models.

How can we understand it better?

On the one hand, the “C4D method” proposed in this study can be used to test other biases in AI.

The main subject of this study's experiments is gender bias in the workplace.

In fact, because AI has been learning from all kinds of information and takes in everything indiscriminately, it has also picked up other deep-rooted social problems, such as religious discrimination and favoring white people over Black people...

So it would be worth testing on GPT-2 how well the method removes these other biases.

On the other hand, you can try the "C4D method" on a variety of large models.

In addition to GPT-2 used in this study, the classic NLP pre-training model BERT developed by Google is also a good test scenario.

However, if you want to port it to other models, you need to regenerate the correction template, and you may need to use the multi-variable TDE (Template Driven Extraction) function.

By using the TDE function, you can directly put content into the index without modifying the document structure.

Some netizens chimed in jokingly, doge emoji in tow:

Generally speaking, becoming a “wayward AI” is inevitable once it enters society.

But if you want the prodigal "AI that has made a mistake" to turn back, finding the right method and reasoning with it still works well~

In addition, Yu Yang of Tsinghua University, a member of the research team, said on his personal Weibo that a website for querying gender discrimination in AI models will launch within the next two days.

You can look forward to it!

Paper address: https://arxiv.org/abs/2211.07350

Reference link: https://weibo.com/1645372340/Mi4E43PUY#comment
