
From papers to code, from cutting-edge research to industrial implementation: a comprehensive look at BEV perception


What exactly is BEV perception? Which aspects of BEV perception are academia and industry in autonomous driving paying attention to? This article answers these questions.

In autonomous driving, training perception models to learn powerful bird's-eye-view (BEV) representations has become a clear trend and has attracted widespread attention from industry and academia. Most earlier autonomous driving models performed detection, segmentation, and tracking in the front view or perspective view. By contrast, a BEV representation lets the model better identify occluded vehicles and makes it easier to develop and deploy downstream modules such as planning and control.

BEV perception research therefore has a large potential impact on autonomous driving and deserves long-term attention and investment from academia and industry. Below, we explain what BEV perception is and what academic and industrial leaders in autonomous driving are focusing on, through the lens of the BEVPerception Survey.

BEVPerception Survey is the practical companion to the paper "Delving into the Devils of Bird's-eye-view Perception: A Review, Evaluation and Recipe", a collaboration between the OpenDriveLab autonomous driving team at Shanghai Artificial Intelligence Laboratory and SenseTime Research. It is divided into two major sections: an up-to-date literature review of BEV perception, and an open-source BEV perception toolbox based on PyTorch.

- Paper address: https://arxiv.org/abs/2209.05324

- Project address: https://github.com/OpenPerceptionX/BEVPerception-Survey-Recipe


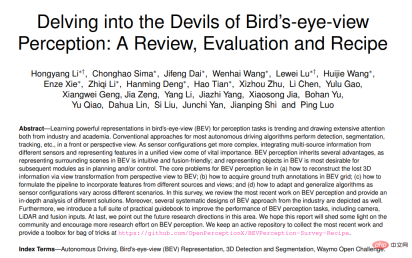

The literature review in BEVPerception Survey covers three parts: BEV camera, BEV LiDAR, and BEV fusion.

BEV camera refers to vision-only or vision-centric algorithms for 3D object detection or segmentation from multiple surrounding cameras; BEV LiDAR covers detection or segmentation tasks that take point clouds as input; BEV fusion covers detection or segmentation from the fused input of multiple sensors, such as cameras, LiDAR, GNSS, odometry, HD maps, and the CAN bus.

The BEV Perception Toolbox is a platform for camera-based BEV 3D object detection. Built on the Waymo dataset, it provides an experimental platform for hands-on tutorials and experiments on small-scale datasets.


BEVPerception Literature Review

BEV Camera

BEV camera perception consists of three parts: a 2D feature extractor, a view transformer, and a 3D decoder. The figure below shows the BEV camera perception flow chart. In view transformation, there are two ways to encode 3D information: one predicts depth information from 2D features; the other samples 2D features from 3D space.

Figure 2: BEV camera perception flow chart
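The first route, predicting depth from 2D features, can be illustrated with a minimal NumPy sketch in the spirit of Lift-Splat-Shoot (one representative method, chosen here as an assumption; the survey covers many variants). The network predicts a categorical distribution over discrete depth bins for every pixel, and each pixel's feature is spread along its camera ray in proportion to that distribution. The function names, shapes, and bin count are illustrative; the later "splat" step that pools the frustum into the BEV grid is not shown.

```python
import numpy as np

def softmax(x, axis):
    # Numerically stable softmax along the given axis.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def lift_features(feats, depth_logits):
    """Lift 2D image features into a camera frustum (hypothetical helper).

    feats:        (C, H, W) image features from the 2D backbone.
    depth_logits: (D, H, W) unnormalized scores over D discrete depth bins.
    Returns a (D, C, H, W) frustum in which each pixel's feature is spread
    along its ray, weighted by the predicted depth distribution.
    """
    depth_prob = softmax(depth_logits, axis=0)               # (D, H, W), sums to 1 over D
    return depth_prob[:, None, :, :] * feats[None, :, :, :]  # outer product over D and C

rng = np.random.default_rng(0)
frustum = lift_features(rng.normal(size=(64, 8, 22)), rng.normal(size=(41, 8, 22)))
print(frustum.shape)  # (41, 64, 8, 22)
```

Because the depth probabilities sum to one, summing the frustum over the depth axis recovers the original feature map; the depth distribution only decides where along the ray each feature lands.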

For the 2D feature extractor, much of the experience gained on 2D perception tasks carries over to 3D perception tasks, for example in the form of backbone pre-training.

The view transformation module is where 3D perception differs most from 2D perception systems. As shown in the figure above, there are generally two ways to perform view transformation: from 3D space to 2D space, and from 2D space to 3D space. Both rely either on physical prior knowledge of the 3D space or on additional 3D information for supervision. Note that not all 3D perception methods have a view transformation module; for example, some methods detect objects in 3D space directly from features in 2D space.
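To make the 3D-to-2D direction concrete, here is a hedged sketch: predefined 3D reference points (for example, points above each BEV cell) are projected into an image with a pinhole camera model, and image features are sampled at the projected pixels. Real methods typically use bilinear or deformable sampling across several cameras; the single camera, nearest-neighbour sampling, the z-forward camera convention, and the function names are all simplifying assumptions.

```python
import numpy as np

def project_points(points_ego, intrinsics, ego_to_cam):
    """Project (N, 3) ego-frame points to pixel coordinates (hypothetical helper).

    intrinsics: (3, 3) pinhole camera matrix K.
    ego_to_cam: (4, 4) rigid transform from the ego frame to the camera frame,
                where the camera looks along its +z axis.
    Returns (N, 2) pixel coordinates (u, v) and an (N,) mask of points in front of the camera.
    """
    homo = np.concatenate([points_ego, np.ones((len(points_ego), 1))], axis=1)  # (N, 4)
    cam = (ego_to_cam @ homo.T).T[:, :3]   # points in the camera frame
    in_front = cam[:, 2] > 1e-3            # keep points with positive depth
    pix = (intrinsics @ cam.T).T           # (N, 3) homogeneous pixel coordinates
    uv = pix[:, :2] / np.clip(pix[:, 2:3], 1e-3, None)
    return uv, in_front

def sample_features(feats, uv, valid):
    """Nearest-neighbour sampling of (C, H, W) features at pixel locations.

    Points outside the image or behind the camera receive zero features.
    """
    C, H, W = feats.shape
    u = np.round(uv[:, 0]).astype(int)
    v = np.round(uv[:, 1]).astype(int)
    inside = valid & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    out = np.zeros((len(uv), C))
    out[inside] = feats[:, v[inside], u[inside]].T  # (M, C) features for visible points
    return out
```

Each 3D point ends up with the image feature found where it projects, which is how this family of methods pulls 2D evidence into a 3D or BEV grid without predicting depth explicitly.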

The 3D decoder receives features in 2D or 3D space and outputs 3D perception results. Most 3D decoders are borrowed from LiDAR-based perception models and perform detection in BEV space, but some 3D decoders use features in 2D space and directly regress the locations of 3D objects.
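As one example of a decoder that works in BEV space, the sketch below extracts object centers from a BEV heatmap in the style of center-based LiDAR detectors such as CenterPoint. This particular head, the grid resolution, and the grid origin are assumptions for illustration rather than the survey's implementation; a full head would also regress box size, height, and yaw at each peak.

```python
import numpy as np

def decode_bev_heatmap(heatmap, score_thresh=0.3, cell_size=0.5, origin=(-50.0, -50.0)):
    """Extract object centers from a (H, W) BEV heatmap (hypothetical helper).

    A cell is kept if it is the maximum of its 3x3 neighbourhood and its score
    exceeds score_thresh. Returns a list of (x, y, score) with x, y in metres.
    """
    H, W = heatmap.shape
    padded = np.pad(heatmap.astype(float), 1, mode="constant", constant_values=-np.inf)
    # Stack the 9 shifted views of the padded map and take the max: a 3x3 max filter.
    neighbourhood = np.stack(
        [padded[dy:dy + H, dx:dx + W] for dy in range(3) for dx in range(3)]
    )
    is_peak = (heatmap >= neighbourhood.max(axis=0)) & (heatmap > score_thresh)
    rows, cols = np.nonzero(is_peak)
    return [
        (origin[0] + (c + 0.5) * cell_size,  # column index -> x (metres, cell center)
         origin[1] + (r + 0.5) * cell_size,  # row index -> y (metres, cell center)
         float(heatmap[r, c]))
        for r, c in zip(rows, cols)
    ]
```

The 3x3 max filter acts as a cheap non-maximum suppression: only local peaks survive, so no box-overlap computation is needed at this stage.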

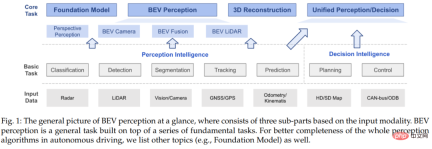

BEV LiDAR

The common BEV LiDAR perception pipeline mainly uses two branches to convert point cloud data into a BEV representation. The figure below shows the BEV LiDAR perception flow chart. The upper branch extracts point cloud features in 3D space, which yields more accurate detection results. The lower branch extracts BEV features in 2D space, which yields a more efficient network. Besides point-based methods that operate on raw point clouds, voxel-based methods voxelize points into a discrete grid, which gives a more efficient representation by discretizing continuous 3D coordinates. On top of this discrete voxel representation, 3D convolution or 3D sparse convolution can be used to extract point cloud features.

Figure 3: BEV LiDAR perception flow chart
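The voxel-based route can be sketched as follows: points are bucketed into a regular 3D grid, and each occupied voxel gets a simple feature (here the mean point position) that 3D or sparse 3D convolutions would then consume. The voxel size, the point cloud range, and the mean-pooling feature are illustrative assumptions; learned voxel encoders replace the mean in practice.

```python
import numpy as np

def voxelize(points, voxel_size, pc_range):
    """Assign (N, 3+) points to a regular voxel grid (hypothetical helper).

    voxel_size: (vx, vy, vz) in metres.
    pc_range:   (x_min, y_min, z_min, x_max, y_max, z_max).
    Returns the unique integer voxel coordinates (M, 3), the mean xyz of the
    points in each voxel (M, 3), and the grid shape.
    """
    lo = np.asarray(pc_range[:3], dtype=float)
    hi = np.asarray(pc_range[3:], dtype=float)
    size = np.asarray(voxel_size, dtype=float)
    grid_shape = np.round((hi - lo) / size).astype(int)
    xyz = points[:, :3]
    keep = np.all((xyz >= lo) & (xyz < hi), axis=1)   # drop points outside the range
    coords = np.floor((xyz[keep] - lo) / size).astype(int)
    coords = np.minimum(coords, grid_shape - 1)       # guard against round-off at the border
    uniq, inverse = np.unique(coords, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)                     # inverse shape differs across NumPy versions
    counts = np.bincount(inverse, minlength=len(uniq))
    sums = np.zeros((len(uniq), 3))
    np.add.at(sums, inverse, xyz[keep])               # accumulate xyz per voxel
    return uniq, sums / counts[:, None], grid_shape
```

Only occupied voxels are returned, which mirrors why sparse 3D convolution pays off: most of the grid around a vehicle is empty.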

BEV Fusion

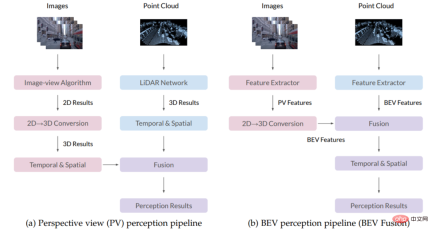

BEV fusion algorithms follow two kinds of pipelines, PV (perspective view) perception and BEV perception, both of which are used in academia and industry. The figure below compares the PV and BEV perception flow charts. The main difference between them lies in the 2D-to-3D conversion and the fusion module. In the PV perception pipeline, the results of different algorithms are first transformed into 3D space and then fused using prior knowledge or hand-designed rules. In the BEV perception pipeline, PV feature maps are transformed into the BEV perspective and then fused in BEV space to obtain the final result, which preserves as much of the original feature information as possible and avoids excessive hand design.

Figure 4: PV perception (left) and BEV perception (right) flow charts
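A minimal sketch of the fusion step in the BEV pipeline, assuming camera and LiDAR features have already been transformed onto the same BEV grid: the feature maps are concatenated along the channel axis and mixed by a 1x1 convolution followed by ReLU. Concatenation plus a 1x1 convolution is one simple choice adopted here for illustration; published fusion modules often use deeper convolutional or attention-based blocks. The function and parameter names are hypothetical.

```python
import numpy as np

def fuse_bev(camera_bev, lidar_bev, weight, bias):
    """Fuse camera and LiDAR BEV features that share the same (H, W) grid.

    camera_bev: (C_cam, H, W); lidar_bev: (C_lidar, H, W).
    weight: (C_out, C_cam + C_lidar) and bias: (C_out,) form a 1x1 convolution.
    """
    assert camera_bev.shape[1:] == lidar_bev.shape[1:], "BEV grids must be aligned"
    stacked = np.concatenate([camera_bev, lidar_bev], axis=0)  # (C_cam + C_lidar, H, W)
    # A 1x1 convolution is a per-cell linear map over channels.
    fused = np.einsum("oc,chw->ohw", weight, stacked) + bias[:, None, None]
    return np.maximum(fused, 0.0)                              # ReLU
```

Because both inputs live on the same grid, fusion reduces to a per-cell operation, with none of the hand-written matching rules that PV-side result fusion needs.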

Datasets suitable for BEV perception models

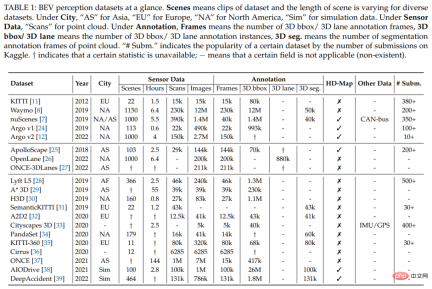

There are many datasets for BEV perception tasks. A dataset typically consists of multiple scenes, and scene length varies from dataset to dataset. The table below summarizes the datasets commonly used in the academic community. The Waymo dataset has more diverse scenes and richer 3D bounding box annotations than the other datasets.

Table 1: List of BEV perception datasets

However, there is currently no publicly available software in the academic community for BEV perception tasks on the Waymo dataset. We therefore chose to build on the Waymo dataset, hoping to promote the development of BEV perception tasks on it.

Toolbox - BEV perception toolbox

BEVFormer is a commonly used BEV perception method. It uses a spatiotemporal transformer to convert the features that the backbone network extracts from multi-view input into BEV features, and then feeds the BEV features into a detection head to obtain the final detection results. BEVFormer has two notable properties: it converts 2D image features into 3D features accurately, and the BEV features it extracts can be used with different detection heads. We further improved BEVFormer's view transformation quality and final detection performance through a series of methods.
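The idea of turning multi-view image features into BEV features with attention can be sketched as cross-attention in which each BEV grid cell owns a query that attends over image features. This is a deliberately simplified, dense, single-head version: BEVFormer itself uses deformable attention that samples only around the projected 3D reference points of each query, plus temporal self-attention over the previous frame's BEV features, and neither is shown here. The dimensions and weight names are assumptions.

```python
import numpy as np

def cross_attention(bev_queries, img_feats, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention from BEV queries to image features.

    bev_queries: (Q, d)  one query per BEV grid cell.
    img_feats:   (N, d)  flattened multi-view image features.
    w_q, w_k, w_v: (d, d) projection matrices.
    Returns updated BEV features of shape (Q, d).
    """
    q = bev_queries @ w_q
    k = img_feats @ w_k
    v = img_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])      # (Q, N) similarity of each query to each location
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)      # softmax over image locations
    return bev_queries + attn @ v                # residual connection

rng = np.random.default_rng(0)
d = 32
bev = cross_attention(rng.normal(size=(50 * 50, d)), rng.normal(size=(6 * 100, d)),
                      *(rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3)))
print(bev.shape)  # (2500, 32)
```

Because the output is indexed by BEV cell rather than by camera, any head that consumes a BEV feature map can sit on top of it, which is the property the paragraph above highlights.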

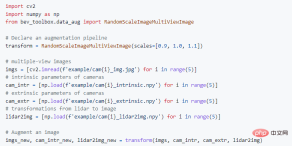

After winning first place in the CVPR 2022 Waymo Challenge with BEVFormer, we released Toolbox, the BEV Perception Toolbox. It provides a set of easy-to-use data processing tools for the Waymo Open Dataset, integrates a series of methods that can significantly improve model performance (including but not limited to data augmentation, detection heads, loss functions, and model ensembling), and is compatible with open-source frameworks widely used in the field, such as mmdetection3d and detectron2. Compared with working on the raw Waymo dataset, the toolbox streamlines and improves the workflow for different types of developers. The figure below shows an example of using the BEV perception toolbox on the Waymo dataset.

Figure 5: Toolbox usage example based on Waymo data set
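Data augmentation is one of the components listed above. As a hedged illustration of one common BEV-space augmentation (a global rotation about the vertical axis, as used in BEVDet-style pipelines; not necessarily what the toolbox implements), the sketch rotates 3D box labels by a random angle. The (x, y, z, length, width, height, yaw) box layout is an assumption, and for a camera-based model the same rotation must also be applied to the BEV features or the ego-to-camera transforms so that images and labels stay consistent; that part is not shown.

```python
import numpy as np

def rotate_boxes_bev(boxes, angle):
    """Rotate 3D boxes about the ego z-axis (hypothetical helper).

    boxes: (N, 7) array of (x, y, z, length, width, height, yaw).
    angle: rotation in radians, counter-clockwise when viewed from above.
    Returns a new array; the input is left unchanged.
    """
    c, s = np.cos(angle), np.sin(angle)
    out = boxes.copy()
    out[:, 0] = c * boxes[:, 0] - s * boxes[:, 1]  # rotate box centers in the BEV plane
    out[:, 1] = s * boxes[:, 0] + c * boxes[:, 1]
    # Add the rotation to the heading and wrap it back into [-pi, pi).
    out[:, 6] = (boxes[:, 6] + angle + np.pi) % (2 * np.pi) - np.pi
    return out

rng = np.random.default_rng(0)
boxes = np.array([[10.0, 2.0, 0.5, 4.5, 1.9, 1.6, 0.3]])
augmented = rotate_boxes_bev(boxes, rng.uniform(-np.pi / 8, np.pi / 8))
```

Height, size, and z are untouched because the rotation is about the vertical axis; only the BEV position and heading change.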

Summary

- BEVPerception Survey summarizes the state of BEV perception research in recent years, from high-level concepts to in-depth, detailed discussions. Its comprehensive analysis of the BEV perception literature covers core issues such as depth estimation, view transformation, sensor fusion, and domain adaptation, and it explains in greater depth how BEV perception is applied in industrial systems.

- Beyond its theoretical contributions, BEVPerception Survey also provides a practical toolbox for improving the performance of camera-based 3D bird's-eye-view (BEV) object detection. It includes training data augmentation strategies, efficient encoder design, loss function design, test-time augmentation, and model ensembling strategies, as well as implementations of these techniques on the Waymo dataset. We hope it lets more researchers use these techniques out of the box and makes work easier for researchers in the autonomous driving industry.
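Test-time augmentation, mentioned in the list above, can be sketched with a horizontal flip: the model runs on the original input and on a flipped copy, the second prediction is flipped back into the original frame, and the two are averaged. The sketch assumes a generic model that maps a (C, H, W) BEV input to an (H, W) score map; for camera inputs, flipping also requires flipping the camera geometry, which is not shown. The helper name is hypothetical.

```python
import numpy as np

def flip_tta(model, bev_input):
    """Average predictions over the original and a horizontally flipped input.

    model: any callable mapping a (C, H, W) BEV input to an (H, W) score map.
    The flip is along the W axis; the prediction on the flipped input is
    flipped back before averaging so that both maps share the same frame.
    """
    pred = model(bev_input)
    pred_flipped = model(bev_input[:, :, ::-1])[:, ::-1]
    return 0.5 * (pred + pred_flipped)

def toy_model(x):
    # Stand-in model: sums features over channels.
    return x.sum(axis=0)

x = np.random.default_rng(0).normal(size=(3, 4, 6))
scores = flip_tta(toy_model, x)
```

A flip-equivariant model like `toy_model` gains nothing from TTA; the averaging only helps when the trained network responds slightly differently to mirrored scenes, which is what smooths out its errors.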

We hope that BEVPerception Survey will not only help users easily adopt high-performance BEV perception models but also serve as a good starting point for newcomers to BEV perception. We are committed to pushing the boundaries of research and development in autonomous driving, and we look forward to exchanging views with the academic community to keep exploring the real-world potential of autonomous driving research.

The above is the detailed content of From papers to code, from cutting-edge research to industrial implementation, comprehensively understand BEV perception. For more information, please follow other related articles on the PHP Chinese website!

Claude vs Gemini: The Comprehensive Comparison - Analytics VidhyaApr 13, 2025 am 09:20 AM

Claude vs Gemini: The Comprehensive Comparison - Analytics VidhyaApr 13, 2025 am 09:20 AMIntroduction Within the quickly changing field of artificial intelligence, two language models, Claude and Gemini, have become prominent competitors, each providing distinct advantages and skills. Although both models can mana

Mutable vs Immutable Objects in Python - Analytics VidhyaApr 13, 2025 am 09:15 AM

Mutable vs Immutable Objects in Python - Analytics VidhyaApr 13, 2025 am 09:15 AMIntroduction Python is an object-oriented programming language (or OOPs).In my previous article, we explored its versatile nature. Due to this, Python offers a wide variety of data types, which can be broadly classified into m

11 YouTube Channels to Learn Tableau For Free - Analytics VidhyaApr 13, 2025 am 09:14 AM

11 YouTube Channels to Learn Tableau For Free - Analytics VidhyaApr 13, 2025 am 09:14 AMIntroduction Tableau is considered one of the most robust data visualization tools currently in use by companies and individuals globally for efficient data analysis and presentation. With its user-friendly interface and exten

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

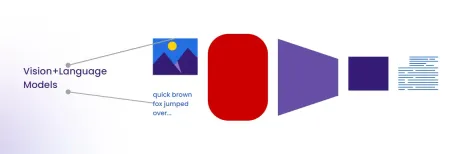

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Mac version

God-level code editing software (SublimeText3)