From AI painting and AI music arrangement to AI-generated video, increasingly “smart” AI has brought a new model of content production: AIGC (AI-generated content).

Over the past few decades, the content people consume could be roughly divided into two categories: PGC (professionally generated content) and UGC (user-generated content). The emergence of AIGC has once again diversified content production models and, at the same time, has subtly deepened humanity's dependence on the digital world.

According to IDC statistics, global VR/AR device shipments reached 11.23 million units in 2021. With VR/AR devices, the gateway to the metaverse, now selling in the tens of millions, people have begun to ask how to produce metaverse content, which is far more complex than Internet content.

The emergence of AIGC offers a new approach to producing content for the metaverse.

However, in 2022, with the metaverse still in its infancy and AIGC still evolving, some new problems have begun to surface amid the AIGC boom.

A lifeline for AI

In 2016, AlphaGo defeated top Go player Lee Sedol, and the third wave of artificial intelligence, led by deep learning, reached its peak. Afterwards, AI went quiet again; under the global economic downturn in particular, its flame began to dim.

“Some leading AI companies that we were originally optimistic about did not have smooth listings during this period, and many AI companies have had to face operating pressure,” said Shi Lin, deputy director of the Content Technology Department of the Cloud Computing and Big Data Institute at the China Academy of Information and Communications Technology, looking back on how AI companies have developed over the past few years.

At this point, AI urgently needed a breakout product to revive the entire industry. AIGC arrived just in time to become the “good medicine” that kept AI alive.

AIGC, in short, is technology that uses AI algorithms to generate content automatically.

AIGC has been around for a long time. As early as 2011, the Los Angeles Times began developing Quakebot, a news-writing robot for earthquake coverage. In March 2014, Quakebot drew immediate public attention when it was the first to report a magnitude 4.4 earthquake in Southern California. Reuters, Bloomberg, The Washington Post, and The New York Times later introduced their own writing robots, and automated news became the earliest application of AIGC.
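The article does not describe how Quakebot works internally. A common approach in early automated journalism was to fill a fixed sentence template from structured data, and the minimal sketch below illustrates that idea under stated assumptions: the alert fields, the template wording, and the magnitude threshold are all hypothetical, and this is not the Los Angeles Times' code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuakeAlert:
    """Hypothetical structured earthquake alert; field names are illustrative."""
    magnitude: float
    place: str
    time_local: str
    depth_km: float


# Fixed sentence skeleton: the "robot" only fills in the blanks.
TEMPLATE = (
    "A magnitude {magnitude:.1f} earthquake was reported {time_local} "
    "{place}, at a depth of {depth_km:.1f} kilometers. "
    "This story was generated automatically from a structured alert."
)


def write_story(alert: QuakeAlert, min_magnitude: float = 3.0) -> Optional[str]:
    """Return a short news item, or None if the quake is below the threshold."""
    if alert.magnitude < min_magnitude:
        return None  # too small to be newsworthy under this assumed rule
    return TEMPLATE.format(**vars(alert))


if __name__ == "__main__":
    alert = QuakeAlert(4.4, "near a hypothetical town", "on Monday morning", 8.0)
    print(write_story(alert))
```

The design keeps the language fixed and lets only the data vary, which is why such robots could publish within minutes of an alert but could not write anything the template did not anticipate.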

At the 2022 Colorado State Fair fine arts competition in the United States, game designer Jason Allen won first place in the Digital Arts/Digitally Manipulated Photography category with an AI-generated work. As soon as the result was announced, it drew widespread public attention.

This was not the only time AIGC trended worldwide this year.

On December 5, 2022, OpenAI CEO Sam Altman posted on social media that ChatGPT, the large language model trained by OpenAI, had passed 1 million users. ChatGPT had been live for only five days; by comparison, Facebook, one of Silicon Valley's four giants, took 10 months to reach its first million registered users.

Ma Zhibo, chief scientist of Peking Data, observed: “OpenAI itself is a non-profit organization, yet the ChatGPT it released gained millions of users within a week. Even if a stunned capital market cannot yet put a valuation on it, as long as a company can deliver technical services or a technology business well, the capital market will still design a valuation system to capture this wave of dividends.”

Capital and technology have always developed together, and only capital can pave the way for technology to reach commercial applications quickly.

Four limitations of AIGC

From automated news to ChatGPT, AIGC has been evolving for ten years. However, Li Xuan, director of digital learning at the School of Continuing Education at Tsinghua University, believes that if AIGC's development is divided into five stages (prototype, standard, complete, superb, and ultimate), today's AIGC is only beginning to take shape.

A major reason for AIGC's popularity this year is the open-sourcing of the Stable Diffusion model. When Stability AI released Stable Diffusion in August 2022, it also open-sourced the model's weights and code.

Tang Kangqi, senior solution architect at NVIDIA, said: “The Stable Diffusion model is very small, only about a dozen GB. It needs only a 20-series GPU to run, and generating an image from text takes only about one minute (about ten seconds if you deploy the open-source model yourself), which was unimaginable before.”

However, Tang Kangqi also pointed out that AIGC still faces four limitations before it can be deployed at scale for commercial use:

First, computing power. Although Stable Diffusion is very convenient to use, training the full model is still very expensive. Training this type of model generally requires 516 top-of-the-line Ampere GPUs and hundreds of thousands of hours of training time, with costs generally on the order of millions of dollars;

Second, data sources. Stable Diffusion is currently trained on LAION-5B, the world's largest open image-text pair dataset, while ChatGPT's training data comes from Wikipedia and some question-and-answer forums. Who owns the property rights to this data? Will the data's “producers” later restrict how it is used? These issues will need to be clarified in the future;

Third, the precise use of trigger words. Stable Diffusion requires input trigger words (prompts) that are accurate and express their meaning clearly; only then does it readily produce the content users want;

Fourth, 3D model generation. Once metaverse content is actually produced, 3D models will inevitably be involved. 3D model generation still has much room for improvement, including in its command of specialized CG (computer graphics) knowledge.
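To make the first limitation concrete, training cost can be estimated as total GPU-hours multiplied by the price of one GPU-hour. The sketch below assumes the quoted “hundreds of thousands of hours” means total GPU-hours and picks 300,000 GPU-hours at a hypothetical $3.00 per GPU-hour; neither figure comes from the source, and real prices vary widely by provider and contract. Only the GPU count of 516 is taken from the text.

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Total rental cost: GPU-hours times the hourly price of one GPU."""
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Elapsed days if the GPU-hours are spread evenly across num_gpus GPUs."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    GPU_HOURS = 300_000  # assumption: "hundreds of thousands of hours" of GPU time
    PRICE = 3.0          # assumption: cloud price per GPU-hour, in USD
    NUM_GPUS = 516       # GPU count quoted in the text
    print(f"cost: ${training_cost_usd(GPU_HOURS, PRICE):,.0f}")           # cost: $900,000
    print(f"time: {wall_clock_days(GPU_HOURS, NUM_GPUS):.1f} days")       # time: 24.2 days
```

Under these assumptions the run costs about $900,000 and takes roughly 24 days; doubling either the price or the GPU-hours pushes the bill into the millions the text cites.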

These four limitations mean AIGC still has a long way to go before true large-scale commercialization, especially in producing content that is truly native to the metaverse.
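The third limitation, precise trigger words, is often handled in practice by writing Stable Diffusion prompts as comma-separated fragments that spell out the subject, details, and style explicitly. The helper below is hypothetical: it only assembles such a string and does not call any model or library API.

```python
from typing import Iterable


def build_prompt(subject: str, details: Iterable[str] = (), style: str = "",
                 quality: Iterable[str] = ()) -> str:
    """Join prompt fragments with commas, dropping blanks and repeats."""
    seen = set()
    parts = []
    for fragment in [subject, *details, style, *quality]:
        text = fragment.strip()
        key = text.lower()
        if text and key not in seen:  # skip empty and duplicate fragments
            seen.add(key)
            parts.append(text)
    return ", ".join(parts)


if __name__ == "__main__":
    vague = build_prompt("a fox")
    precise = build_prompt("a red fox", ["sitting in fresh snow", "soft morning light"],
                           "watercolor painting", ["highly detailed"])
    print(vague)    # a fox
    print(precise)  # a red fox, sitting in fresh snow, soft morning light, watercolor painting, highly detailed
```

Separating the fields makes it harder to forget the parts that pin down what the user actually wants, which is the point the limitation raises.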

New AI Skills, New Challenges for Humanity

Although AIGC still has a long way to go before large-scale commercialization, its path to becoming a productivity tool of the future is starting to become clear.

On the future of AIGC, and of AI technology as a whole, Li Xuan believes: “Just as in science fiction films, scenarios in which physical or mental labor in the real world is taken over by robots, and physical or mental labor in the virtual world is taken over by virtual humans, may arrive in the not-too-distant future. In the future job market, only work that requires a sense of lived experience will still need human participation.”

Li Xuan also pointed out that as AIGC brings us more and more AI tools, our lives and work are now being “obscured” in several ways:

First, information is “obscured”. While AI helps us make “choices”, information cocoons gradually form. For example, the apps we use often keep pushing us the content we like to see; as that information piles up, the barriers around us grow higher and the information cocoon grows larger;

Second, our senses are “obscured”. In the future, spatiotemporal information flows such as VR and AR will keep growing in density and volume until a “colloid” of information forms, in which information is refracted, distorted, and blurred;

Third, interaction is “obscured”. As AI and robots develop, people interact more and more with platforms. Such interaction is really interaction with non-humans, and it may lead to capital control or to platforms maximizing their control.
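The information cocoon in the first point is a feedback loop: the feed shows what you already engage with, and engagement makes that topic even more likely to be shown again. A toy greedy recommender illustrates the dynamic; the function, topics, starting weights, and boost value are invented for illustration, and real recommender systems are far more complex.

```python
from typing import Dict


def simulate_feed(interest: Dict[str, float], rounds: int,
                  boost: float = 0.5) -> Dict[str, float]:
    """Toy greedy recommender; returns each topic's share of the user's interest."""
    weights = dict(interest)  # copy so the caller's dict is untouched
    for _ in range(rounds):
        shown = max(weights, key=weights.get)  # push what the user already likes most
        weights[shown] += boost                # engagement reinforces that topic
    total = sum(weights.values())
    return {topic: w / total for topic, w in weights.items()}


if __name__ == "__main__":
    start = {"sports": 1.2, "science": 1.0, "music": 1.0}
    before = simulate_feed(start, rounds=0)
    after = simulate_feed(start, rounds=10)
    print(round(before["sports"], 3))  # 0.375
    print(round(after["sports"], 3))   # 0.756
```

A slight initial preference is enough: because the recommender always shows the current favorite, that topic's share roughly doubles after ten rounds while the others shrink.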

Facing this coming new world, how should we break out of the “cocoon”, avoid being “obscured”, and live better in a metaverse full of AI?

Li Xuan's answer: embrace change, keep learning throughout life, break out of the cocoon, and step beyond the shadow. With systems thinking, open-source technologies and tools, and a lifelong-learning mindset, we can make better progress as the future unfolds.

The above is the detailed content of The eve of AIGC producing content for the Metaverse. For more information, please follow other related articles on the PHP Chinese website!

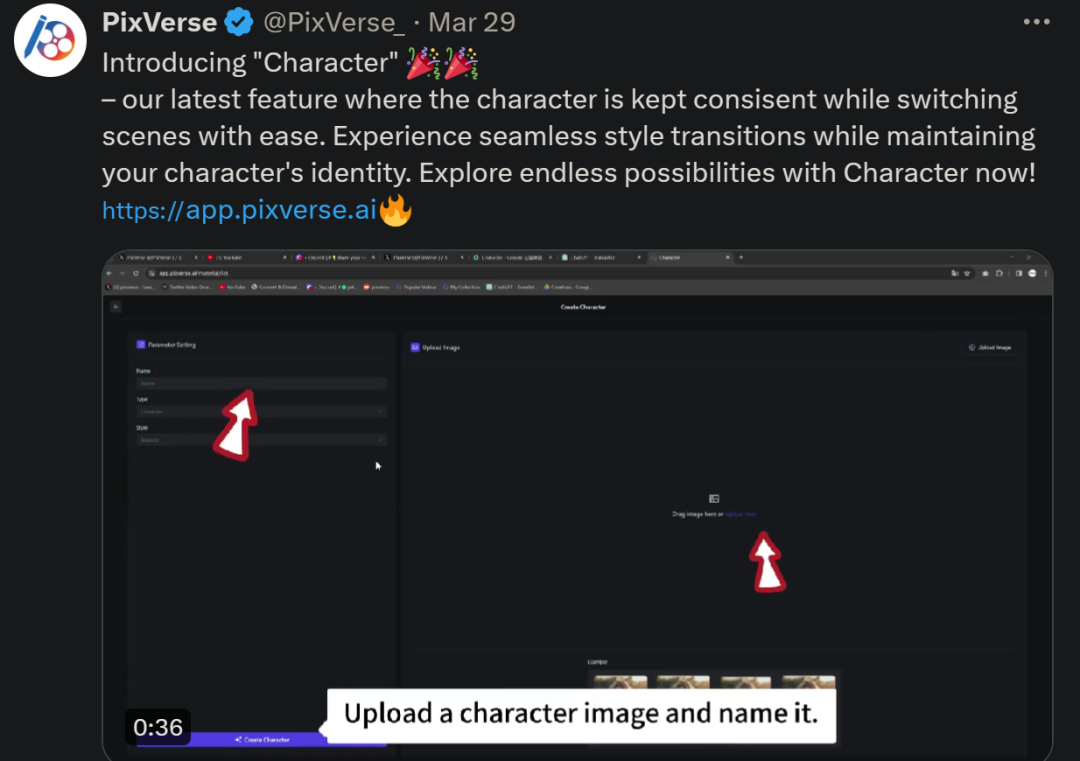

统一角色、百变场景,视频生成神器PixVerse被网友玩出了花,超强一致性成「杀招」Apr 01, 2024 pm 02:11 PM

统一角色、百变场景,视频生成神器PixVerse被网友玩出了花,超强一致性成「杀招」Apr 01, 2024 pm 02:11 PM又双叒叕是一个新功能的亮相。你是否会遇见过想要给图片角色换个背景,但是AI总是搞出「物非人也非」的效果。即使在Midjourney、DALL・E这样成熟的生成工具中,保持角色一致性还得有些prompt技巧,不然人物就会变来变去,根本达不到你想要的结果。不过,这次算是让你遇着了。AIGC工具PixVerse的「角色-视频」新功能可以帮你实现这一切。不仅如此,它能生成动态视频,让你的角色更加生动。输入一张图,你就能够得到相应的动态视频结果,在保持角色一致性的基础上,丰富的背景元素和角色动态让生成结果

ChatGPT克星,介绍五款免费又好用的AIGC检测工具May 22, 2023 pm 02:38 PM

ChatGPT克星,介绍五款免费又好用的AIGC检测工具May 22, 2023 pm 02:38 PM简介ChatGPT推出后,犹如潘多拉魔盒被打开了。我们现在正观察到许多工作方式的技术转变。人们正在使用ChatGPT创建网站、应用程序,甚至写小说。随着AI生成工具的大肆宣传和引入,我们也已经看到了不良行为者的增加。如果你关注最新消息,你一定曾听说ChatGPT已经通过了沃顿商学院的MBA考试。迄今为止,ChatGPT通过的考试涵盖了从医学到法律学位等多个领域。除了考试之外,学生们正在用它来提交作业,作家们正在提交生成性内容,而研究人员只需输入提示语就能产生高质量的论文。为了打击生成性内容的滥用

小米相册 AIGC 编辑功能正式上线:支持智能扩图、魔法消除 ProMar 14, 2024 pm 10:22 PM

小米相册 AIGC 编辑功能正式上线:支持智能扩图、魔法消除 ProMar 14, 2024 pm 10:22 PM3月14日消息,小米官方今日宣布,小米相册AIGC编辑功能正式上线小米14Ultra手机,并将在本月内全量上线小米14、小米14Pro和RedmiK70系列手机。AI大模型为小米相册带来两个新功能:智能扩图与魔法消除Pro。AI智能扩图支持对构图不好的图片进行扩展和自动构图,操作方式为:打开相册编辑-进入裁切旋转-点击智能扩图。魔法消除Pro能够对游客照中的路人进行无痕消除,使用方式为:打开相册编辑-进入魔法消除-点击右上角的Pro。目前,小米14Ultra机器已经上线智能扩图与魔法消除Pro功

营销效果大幅提升,AIGC视频创作就该这么用Jun 25, 2024 am 12:01 AM

营销效果大幅提升,AIGC视频创作就该这么用Jun 25, 2024 am 12:01 AM经过一年多的发展,AIGC已经从文字对话、图片生成逐步向视频生成迈进。回想四个月前,Sora的诞生让视频生成赛道经历了一场洗牌,大力推动了AIGC在视频创作领域的应用范围和深度。在人人都在谈论大模型的时代,我们一方面惊讶于视频生成带来的视觉震撼,另一方面又面临着落地难问题。诚然,大模型从技术研发到应用实践还处于一个磨合期,仍需结合实际业务场景进行调优,但理想与现实的距离正在被逐步缩小。营销作为人工智能技术的重要落地场景,成为了很多企业及从业者想要突破的方向。掌握了恰当方法,营销视频的创作过程就会

AIGC革新客户服务,维音构建“1+5”生成式AI智能产品矩阵Sep 15, 2023 am 11:57 AM

AIGC革新客户服务,维音构建“1+5”生成式AI智能产品矩阵Sep 15, 2023 am 11:57 AM由自然语言处理、语音识别、语音合成、机器学习等技术组成的人工智能技术,应用于各行各业获得广泛认可。置身于AI应用的前沿,从2022年底开始,维音不断见证AIGC技术所带来的惊喜,也有幸参与到这场覆盖全球的技术浪潮。经过训练、测试、调优和应用,维音将其丰富的客户服务行业经验与强大的大模型能力相结合,开发出了适用于坐席端和业务端的生成式AI客服机器人。同时,维音还将底层能力与维音Vision系列智能产品相互连接,最终形成了“1+5”维音生成式AI智能产品矩阵其中,“1”是维音自主训练的大模型服务平台

实测7款「Sora级」视频生成神器,谁有本事登上「铁王座」?Aug 05, 2024 pm 07:19 PM

实测7款「Sora级」视频生成神器,谁有本事登上「铁王座」?Aug 05, 2024 pm 07:19 PM机器之能报道编辑:杨文谁能成为AI视频圈的King?美剧《权力的游戏》中,有一把「铁王座」。传说,它由巨龙「黑死神」熔掉上千把敌人丢弃的利剑铸成,象征着无上的权威。为了坐上这把铁椅子,各大家族展开了一场场争斗和厮杀。而自Sora出现以来,AI视频圈也掀起了一场轰轰烈烈的「权力的游戏」,这场游戏的玩家主要有大洋彼岸的RunwayGen-3、Luma,国内的快手可灵、字节即梦、智谱清影、Vidu、PixVerseV2等。今天我们就来测评一下,看看究竟谁有资格登上AI视频圈的「铁王座」。-1-文生视频

美图公司AIGC落地B端新场景,“AI海报”进一步提升设计效率May 25, 2023 pm 09:11 PM

美图公司AIGC落地B端新场景,“AI海报”进一步提升设计效率May 25, 2023 pm 09:11 PM5月16日,美图公司旗下美图设计室上线“AI海报”功能,该功能旨在降低设计门槛,提高制作效率。在AIGC的加持下,让更多非专业人士也能轻松制作出高质量海报。传统的海报制作方式包括使用Photoshop专业设计工具和使用海报模板这类便捷设计工具。PS需要专业设计师才能熟练操作,但即使是专业设计师,也需要花费较多时间不断调整尺寸、配色等细节,耗费大量时间和精力。没有设计基础的人只能使用现成的海报模板来完成设计,但选择模板、替换图片、替换文本同样消耗时间,而且即便用户花了大量时间,有时候也无法达到理想

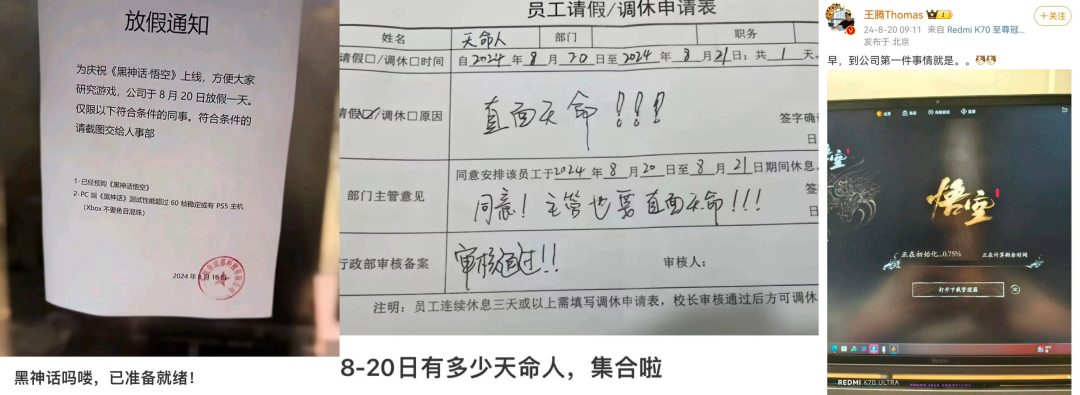

AI在用 | 川普魂穿《黑神话》,3D「魔改」悟空……一只黑猴勾起多少种AI玩法?Aug 21, 2024 pm 10:50 PM

AI在用 | 川普魂穿《黑神话》,3D「魔改」悟空……一只黑猴勾起多少种AI玩法?Aug 21, 2024 pm 10:50 PM机器之能报道编辑:杨文以大模型、AIGC为代表的人工智能浪潮已经在悄然改变着我们生活及工作方式,但绝大部分人依然不知道该如何使用。因此,我们推出了「AI在用」专栏,通过直观、有趣且简洁的人工智能使用案例,来具体介绍AI使用方法,并激发大家思考。我们也欢迎读者投稿亲自实践的创新型用例。投稿邮箱:content@jiqizhixin.com这两天被一只黑猴子刷了屏。这热度高得有多离谱?抖音、微博、公众号,只要一划拉,全在聊这款国产游戏《黑神话:悟空》,甚至官媒都下场开直播。还有公司直接放假,让员工在

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver CS6

Visual web development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

WebStorm Mac version

Useful JavaScript development tools

Atom editor mac version download

The most popular open source editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.