Developers are overjoyed! The shocking LLaMA leak set off a wave of ChatGPT alternatives and transformed the open source LLM field.

Who would have thought that an unexpected LLaMA leak would ignite the biggest spark of innovation in the open source LLM field?

A series of outstanding open source alternatives to ChatGPT, the "alpaca family", then made a dazzling appearance.

The friction between open source and API-based distribution is one of the most pressing tensions in the generative AI ecosystem.

In the text-to-image space, the release of Stable Diffusion clearly demonstrates that open source is a viable distribution mechanism for the underlying model.

However, this is not the case in the field of large language models. The biggest breakthroughs in this field, such as GPT-4, Claude, and Cohere's models, are only available through APIs.

Open source alternatives to these models do not demonstrate the same level of performance, especially in the ability to follow human instructions. However, an unexpected leak completely changed this situation.

LLaMA’s “epic” leak

A few weeks ago, Meta AI launched the large language model LLaMA.

LLaMA comes in several sizes: 7B, 13B, 33B, and 65B parameters. Although it is smaller than GPT-3, its performance is comparable to GPT-3 on many tasks.

LLaMA was not open source at first, but a week after its release, the model was suddenly leaked on 4chan, triggering thousands of downloads.

This incident can be called an "epic leak" because it has become an endless source of innovation in the field of large language models.

In just a few weeks, innovation in LLM agents built on it has exploded.

Alpaca, Vicuna, Koala, ChatLLaMA, FreedomGPT, ColossalChat... Let us review how this explosion of the "alpaca family" was born.

Alpaca

In mid-March, Alpaca, a large model released by Stanford, became popular.

Alpaca is a new model fine-tuned from Meta's LLaMA 7B. It uses only 52K instruction-following examples, and its performance is approximately equal to that of GPT-3.5.

The key is that the training cost is extremely low, less than 600 US dollars.

Stanford researchers compared GPT-3.5 (text-davinci-003) with Alpaca 7B and found that the two models performed very similarly. Alpaca won 90 head-to-head comparisons against 89 for GPT-3.5.

To train a high-quality instruction-following model within budget, the Stanford team had to face two important challenges: obtaining a strong pretrained language model and obtaining high-quality instruction-following data.

Conveniently, the LLaMA model provided to academic researchers solved the first problem.

For the second challenge, the paper "Self-Instruct: Aligning Language Model with Self Generated Instructions" offered good inspiration: use an existing strong language model to generate instruction data automatically.

The biggest weakness of the LLaMA model is the lack of instruction fine-tuning. One of OpenAI's biggest innovations is the use of instruction tuning on GPT-3.

In this regard, Stanford used an existing large language model to automatically generate demonstrations of following instructions.
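The idea of letting an existing model generate new instructions can be sketched roughly as follows. This is only an illustration under stated assumptions, not Stanford's pipeline: `generate` is a hypothetical callable wrapping whatever strong language model is available, the prompt wording is invented, and the word-overlap filter is a simplified stand-in for whatever deduplication a real pipeline would use.

```python
import random
from typing import Callable, List, Optional


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two instructions."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def build_prompt(examples: List[str]) -> str:
    """Format a few existing instructions as in-context examples (wording is hypothetical)."""
    lines = ["Come up with a new task instruction.", ""]
    for i, example in enumerate(examples, start=1):
        lines.append(f"Task {i}: {example}")
    lines.append(f"Task {len(examples) + 1}:")
    return "\n".join(lines)


def self_instruct(
    seed_tasks: List[str],
    generate: Callable[[str], str],  # hypothetical wrapper around a strong LLM
    rounds: int,
    max_overlap: float = 0.7,  # assumed threshold for rejecting near-duplicates
    shots: int = 3,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Grow an instruction pool by prompting a model with samples from the pool."""
    rng = rng or random.Random(0)
    pool = list(seed_tasks)
    for _ in range(rounds):
        examples = rng.sample(pool, k=min(shots, len(pool)))
        candidate = generate(build_prompt(examples)).strip()
        if not candidate:
            continue
        # Keep the candidate only if it is not too similar to anything already in the pool.
        if all(token_overlap(candidate, task) <= max_overlap for task in pool):
            pool.append(candidate)
    # Return only the newly generated instructions.
    return pool[len(seed_tasks):]
```

In practice `generate` would call a hosted or local model; passing it in as a parameter keeps the loop testable with a stub.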

Now, Alpaca is directly regarded as "Stable Diffusion of large text models" by netizens.

Vicuna

At the end of March, researchers from UC Berkeley, Carnegie Mellon University, Stanford University, and UC San Diego open sourced Vicuna, a fine-tuned version of LLaMA whose answers, as judged by GPT-4, come close to ChatGPT's quality.

The 13-billion-parameter Vicuna is trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT, at a training cost of about US$300.

The results show that Vicuna-13B achieves capabilities comparable to ChatGPT and Bard in more than 90% of cases.

For the Vicuna-13B training process, the details are as follows:

First, the researchers collected about 70K conversations from ShareGPT, a website where users share their ChatGPT conversations.

Next, the researchers optimized the training script provided by Alpaca so that the model could better handle multiple rounds of dialogue and long sequences. Then PyTorch FSDP was used for one day of training on 8 A100 GPUs.

In terms of quality assessment of the model, the researchers created 80 different questions and evaluated the model output using GPT-4.

To compare the different models, the researchers combined the output of each model into a single prompt and then had GPT-4 evaluate which model gave the better answer.
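A pairwise comparison of this kind might look like the sketch below. The prompt wording, the `A`/`B`/`tie` reply format, and the `judge` callable (standing in for a call to GPT-4) are all assumptions made for illustration; this is not the Vicuna team's actual evaluation prompt.

```python
from collections import Counter
from typing import Callable, List


def build_judge_prompt(question: str, answer_a: str, answer_b: str) -> str:
    """Combine one question and two model answers into a single prompt."""
    return (
        "You are comparing two AI assistants.\n\n"
        f"[Question]\n{question}\n\n"
        f"[Assistant A]\n{answer_a}\n\n"
        f"[Assistant B]\n{answer_b}\n\n"
        "Which answer is better? Reply with exactly one of: A, B, tie."
    )


def parse_verdict(reply: str) -> str:
    """Normalize the judge's reply; anything unrecognized counts as a tie."""
    verdict = reply.strip().lower()
    if verdict in ("a", "b"):
        return verdict.upper()
    return "tie"


def compare_models(
    questions: List[str],
    answers_a: List[str],
    answers_b: List[str],
    judge: Callable[[str], str],  # hypothetical wrapper around the judging model
) -> Counter:
    """Ask the judge about every question and tally the verdicts."""
    tally = Counter({"A": 0, "B": 0, "tie": 0})
    for question, a, b in zip(questions, answers_a, answers_b):
        tally[parse_verdict(judge(build_judge_prompt(question, a, b)))] += 1
    return tally
```

Treating unparseable replies as ties is a design choice for this sketch; a stricter harness might retry or discard them instead.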

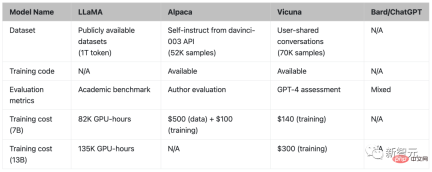

Comparison of LLaMA, Alpaca, Vicuna and ChatGPT

Koala

Recently, the Berkeley Artificial Intelligence Research lab (BAIR) at UC Berkeley released a new model, "Koala". Unlike earlier models that relied on data from OpenAI's GPT models for instruction fine-tuning, Koala is trained on high-quality data gathered from the web.

The research results show that Koala can effectively answer a variety of user queries. Its answers are often preferred over Alpaca's, and in at least half of the cases they are on par with ChatGPT's.

The researchers hope these results will further the discussion about the relative performance of large closed-source models versus small public models, especially since the results suggest that a model small enough to run locally can match the performance of a large model if its training data is carefully collected.

In fact, Stanford's Alpaca, which fine-tunes LLaMA on data from OpenAI's GPT models, has already shown that the right data can significantly improve smaller open source models.

This is also why the Berkeley researchers developed and released Koala: to provide another experimental data point for this discussion.

Koala is fine-tuned on freely available interaction data obtained from the web, with a special focus on interactions with high-performance closed-source models such as ChatGPT.

Rather than crawling as much web data as possible to maximize volume, the researchers focused on collecting a small, high-quality dataset that includes ChatGPT distillation data, open source data, and more.

ChatLLaMA

Nebuly has open sourced ChatLLaMA, a framework that lets us create conversational assistants using our own data.

ChatLLaMA lets us create hyper-personalized ChatGPT-like assistants using our own data and as little computation as possible.

Suppose that in the future we no longer rely on one large assistant that "rules them all". Instead, everyone could create their own personalized ChatGPT-like assistant to support all kinds of human needs.

However, creating such a personalized assistant requires effort on many fronts: dataset creation, efficient training using RLHF, and inference optimization.

The purpose of this library is to give developers peace of mind by abstracting away the work required to optimize and collect large amounts of data.

ChatLLaMA is designed to help developers handle a variety of use cases, all related to RLHF training and optimized inference. Here are some use case references:

- Creating a ChatGPT-like personalized assistant for vertical-specific tasks (legal, medical, gaming, academic research, etc.);

- Training an efficient ChatGPT-like assistant with limited data on local hardware infrastructure;

- Creating your own personalized ChatGPT-like assistant while avoiding runaway costs;

- Finding out which model architecture (LLaMA, OPT, GPTJ, etc.) best meets your requirements in terms of hardware, compute budget, and performance;

- Aligning the assistant with your personal or company values, culture, brand, and manifesto.

FreedomGPT

Built with Electron and React, FreedomGPT is a desktop application that allows users to run LLaMA on their local machine.

The characteristics of FreedomGPT are evident from its name - the questions it answers are not subject to any censorship or security filtering.

This app was developed by Age of AI, an AI venture capital firm.

FreedomGPT is built on Alpaca, which was chosen because it is relatively easier to access and customize than other models.

ChatGPT follows OpenAI’s usage policy and restricts hate, self-harm, threats, violence, and sexual content.

Unlike ChatGPT, FreedomGPT answers questions without bias or favoritism and will not hesitate to address controversial topics.

FreedomGPT even answered "How to make a bomb at home", which OpenAI specifically removed from GPT-4.

FreedomGPT is unique because it sidesteps censorship restrictions and engages with controversial topics without any safeguards. Its symbol is the Statue of Liberty, because this bold large language model is meant to symbolize freedom.

FreedomGPT can even run locally on your computer without the need for an Internet connection.

Additionally, an open source version will be released soon, allowing users and organizations to fully customize it.

ColossalChat

ColossalChat, proposed by UC Berkeley, needs fewer than 10 billion parameters to achieve Chinese-English bilingual capability, with results comparable to ChatGPT and GPT-3.5.

In addition, ColossalChat, which is based on the LLaMA model, also reproduces the complete RLHF process and is currently the open source project closest to the original technical route of ChatGPT.

Chinese-English bilingual training data set

ColossalChat released a bilingual data set containing approximately 100,000 Chinese and English question and answer pairs.

The seed data was collected and cleaned from real question scenarios on social media platforms and then expanded using self-instruct, at an annotation cost of approximately $900.

Compared to datasets generated by other self-instruct methods, this dataset contains more realistic and diverse seed data, covering a wider range of topics.

This data set is suitable for both fine-tuning and RLHF training. Given high-quality data, ColossalChat can achieve better conversational interaction, and it also supports Chinese.

Complete RLHF pipeline

The RLHF replication has three stages:

In RLHF-Stage1, the model undergoes supervised instruction fine-tuning on the bilingual dataset described above.

In RLHF-Stage2, human annotators rank different outputs for the same prompt, the rankings are converted into scores, and those scores supervise the training of the reward model.

In RLHF-Stage3, a reinforcement learning algorithm is applied. This is the most complex part of the training process.
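To make the reward-model stage (Stage 2) more concrete, here is a minimal sketch of how a human ranking can be turned into training pairs and scored with a pairwise ranking loss. The loss shown, -log(sigmoid(r_preferred - r_rejected)), is a common choice in RLHF work; whether ColossalChat uses exactly this form is an assumption, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def ranking_to_pairs(ranked_outputs: List[str]) -> List[Tuple[str, str]]:
    """Turn a best-to-worst human ranking into (preferred, rejected) pairs."""
    return [
        (ranked_outputs[i], ranked_outputs[j])
        for i in range(len(ranked_outputs))
        for j in range(i + 1, len(ranked_outputs))
    ]


def pairwise_ranking_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Compute -log(sigmoid(margin)) in a numerically stable way.

    The loss is small when the reward model scores the preferred output
    well above the rejected one, and large when the order is reversed.
    """
    margin = reward_preferred - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite the expression to avoid overflow in exp().
    return -margin + math.log1p(math.exp(margin))
```

Averaging this loss over all pairs from all ranked prompts gives a training objective that pushes the reward model to reproduce the annotators' ordering.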

I believe that more projects will be released soon.

No one expected that this unexpected leak of LLaMA would actually ignite the biggest innovation spark in the field of open source LLM.

The above is the detailed content of The developers are laughing crazy! The shocking leak of LLaMa triggered a frenzy of replacement of ChatGPT, and the open source LLM field changed.. For more information, please follow other related articles on the PHP Chinese website!

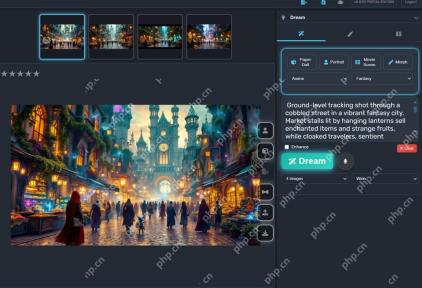

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

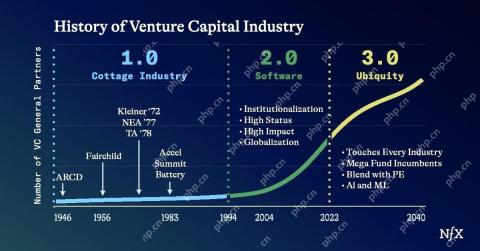

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft