
Berkeley open sourced the first high-definition data set and prediction model in parking scenarios, supporting target recognition and trajectory prediction.

As autonomous driving technology continues to evolve, predicting vehicle behavior and trajectories is critical for efficient and safe driving. Traditional trajectory prediction methods, such as dynamic model propagation and reachability analysis, have a clear form and are highly interpretable, but their ability to model interactions between the environment and objects is limited in complex traffic environments. In recent years, therefore, much research and many applications have turned to deep learning methods (such as LSTM, CNN, Transformer, and GNN), and datasets such as BDD100K, nuScenes, Stanford Drone, ETH/UCY, INTERACTION, and ApolloScape have emerged to support the training and evaluation of deep neural network models. Many state-of-the-art models, such as GroupNet, Trajectron, and MultiPath, have shown good performance.
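To make the "dynamic model" style of prediction concrete, here is a minimal sketch that rolls a kinematic bicycle model forward under constant speed and steering. It is not taken from the paper; the function name `rollout_bicycle`, the wheelbase of 2.7 m, and the 0.04 s time step are all assumptions chosen for illustration.

```python
import math


def rollout_bicycle(x, y, heading, speed, steer, wheelbase=2.7, dt=0.04, steps=25):
    """Predict future poses by integrating a kinematic bicycle model.

    Speed (m/s) and steering angle (rad) are held constant, which is
    exactly why this kind of model struggles with interactive scenes.
    """
    path = []
    for _ in range(steps):
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading += speed / wheelbase * math.tan(steer) * dt
        path.append((x, y, heading))
    return path


# A vehicle driving straight ahead at 2 m/s for one second.
straight = rollout_bicycle(0.0, 0.0, 0.0, speed=2.0, steer=0.0)
# The same vehicle with a constant left steering angle.
turning = rollout_bicycle(0.0, 0.0, 0.0, speed=2.0, steer=0.3)
```

The rollout is easy to interpret, but it knows nothing about other vehicles, pedestrians, or parking spaces, which is the limitation the learned models aim to address.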

The models and datasets above focus on normal road driving scenarios and make full use of infrastructure and features such as lane lines and traffic lights to assist prediction; because of traffic regulations, the movement patterns of most vehicles are also relatively clear. However, in the "last mile" of autonomous driving, the autonomous parking scenario, we face many new difficulties:

- Traffic rules and lane markings are not strictly enforced in parking lots, and vehicles often drive freely and "take shortcuts"

- To complete a parking task, vehicles must perform more complex maneuvers, including frequent reversing, stopping, and steering. With an inexperienced driver, parking can become a lengthy process

- Parking lots contain many obstacles and much clutter, and vehicles are close together, so a small lapse in attention can cause collisions and scratches

- Pedestrians often walk through the parking lot at will, so vehicles need to take more avoidance actions

In such scenarios, simply applying existing trajectory prediction models rarely achieves ideal results, and retraining them is hampered by a lack of suitable data. Existing parking-scene datasets such as CNRPark+EXT and CARPK are designed only for free parking space detection. Their images are taken from the viewpoint of surveillance cameras, have low sampling rates, and contain many occlusions, so they cannot be used for trajectory prediction.

At the recently concluded 25th IEEE International Conference on Intelligent Transportation Systems (IEEE ITSC 2022), held in October 2022, researchers from the University of California, Berkeley released the first high-definition video and trajectory dataset for parking scenes and, based on this dataset, proposed a trajectory prediction model called "ParkPredict" that uses CNN and Transformer architectures.

- Paper link: https://arxiv.org/abs/2204.10777

- Dataset home page, trial, and download application: https://sites.google.com/berkeley.edu/dlp-dataset (if you cannot access it, try the alternative page https://www.php.cn/link/966eaa9527eb956f0dc8788132986707)

- Dataset Python API: https://github.com/MPC-Berkeley/dlp-dataset

The dataset was collected by drone, with a total duration of 3.5 hours, a video resolution of 4K, and a sampling rate of 25 Hz. The view covers a parking lot area of approximately 140 m x 80 m, with about 400 parking spaces in total. The dataset is accurately annotated and contains the trajectories of 1216 motor vehicles, 3904 bicycles, and 3904 pedestrians.
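For a rough sense of scale, the figures above can be turned into a back-of-the-envelope estimate. This sketch assumes every second of the 3.5 hours is sampled at the full 25 Hz and treats the covered area as an exact rectangle, so the results are approximations rather than official dataset statistics.

```python
duration_hours = 3.5
sampling_rate_hz = 25
area_m2 = 140 * 80          # approximate field of view in square meters
parking_spaces = 400        # approximate count

# Upper-bound estimate of sampled frames across all recordings.
total_frames = int(duration_hours * 3600 * sampling_rate_hz)

# Average ground area per parking space, including driving aisles.
area_per_space_m2 = area_m2 / parking_spaces

print(total_frames)         # 315000
print(area_per_space_m2)    # 28.0
```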

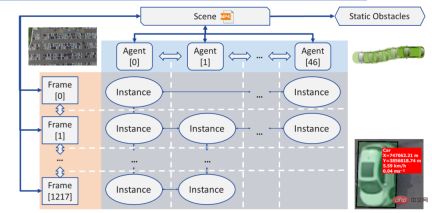

After processing, the trajectory data can be read as JSON and loaded into a graph data structure with the following components:

- Agent: Each agent is an object moving in the current scene. It has attributes such as geometric shape and type, and its trajectory is stored as a linked list of instances

- Instance: Each instance is the state of an agent in a single frame, including its position, heading angle, speed, and acceleration. Each instance holds pointers to the same agent's instances in the previous and next frames

- Frame: Each frame is a sampling point in time. It contains all instances visible at that time, along with pointers to the previous and next frames

- Obstacle: Obstacles are objects that do not move at all during the recording. Each is described by its position, heading angle, and geometric size

- Scene: Each scene corresponds to one recorded video file and contains pointers to the first and last frames of the recording, all agents, and all obstacles
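The linked structure described above can be sketched with a few Python dataclasses. This is an illustration only and is not the dlp-dataset API: the class fields, `link_instances`, and `trajectory` are hypothetical names, and acceleration, frames, obstacles, and scenes are left out to keep the example short.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional, Tuple


@dataclass(eq=False)
class Instance:
    """State of one agent in one frame (hypothetical field names)."""
    frame_index: int
    position: Tuple[float, float]
    heading: float
    speed: float
    # Pointers to the same agent's state in the neighboring frames.
    prev: Optional["Instance"] = field(default=None, repr=False)
    next: Optional["Instance"] = field(default=None, repr=False)


@dataclass(eq=False)
class Agent:
    """A moving object whose trajectory is a linked list of instances."""
    agent_type: str
    size: Tuple[float, float]
    first_instance: Optional[Instance] = None


def link_instances(
    states: List[Tuple[Tuple[float, float], float, float]]
) -> Optional[Instance]:
    """Build a doubly linked list from time-ordered (position, heading, speed)."""
    head = prev = None
    for i, (position, heading, speed) in enumerate(states):
        inst = Instance(i, position, heading, speed)
        if prev is None:
            head = inst
        else:
            prev.next, inst.prev = inst, prev
        prev = inst
    return head


def trajectory(agent: Agent) -> Iterator[Tuple[float, float]]:
    """Walk the linked list from the first instance and yield positions."""
    inst = agent.first_instance
    while inst is not None:
        yield inst.position
        inst = inst.next


states = [((0.0, 0.0), 0.0, 1.0), ((0.04, 0.0), 0.0, 1.0), ((0.08, 0.0), 0.0, 1.0)]
car = Agent("Car", (4.5, 1.8), link_instances(states))
print(list(trajectory(car)))  # [(0.0, 0.0), (0.04, 0.0), (0.08, 0.0)]
```

Frames and scenes would follow the same pattern: a frame holds the instances visible at one time step plus pointers to its neighbors, and a scene holds the first and last frames together with all agents and obstacles.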

The dataset is provided in two download formats:

JSON only (recommended): The JSON files contain the types, shapes, trajectories, and other information for all agents. They can be read directly, previewed, and used to generate semantic images through the open-source Python API. If the research goal is only trajectory and behavior prediction, the JSON format meets all needs.

Original video and annotations: If the research concerns machine vision topics based on raw camera images, such as target detection, segmentation, and tracking, you may need to download the original video and annotations. In that case, the research needs must be clearly described in the dataset application. In addition, you will need to parse the annotation files yourself.

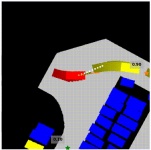

Behavior and trajectory prediction model: ParkPredict

As an application example, in the IEEE ITSC 2022 paper "ParkPredict: Multimodal Intent and Motion Prediction for Vehicles in Parking Lots with CNN and Transformer", the research team used this dataset to predict vehicle intent and trajectory in parking lot scenes with CNN and Transformer architectures.

The team used a CNN model to predict the probability distribution over vehicle intents from constructed semantic images. The model needs only local environmental information around the vehicle, and the number of available intents can change continuously with the current environment.
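One way to picture a distribution over a changing set of intents is a softmax over whatever candidates are currently available. The sketch below is only an illustration of that idea and does not reproduce the paper's method; `intent_distribution`, the candidate names, and the scores (stand-ins for per-candidate model outputs) are all hypothetical.

```python
import math
from typing import Dict


def intent_distribution(scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize per-candidate scores into probabilities with a softmax.

    The dictionary may hold any number of candidates, so the same
    function works as parking spaces fill up or come into view.
    """
    if not scores:
        return {}
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(value - peak) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: value / total for name, value in exps.items()}


# Three candidates are available at this moment.
probs = intent_distribution({"spot_12": 2.0, "spot_13": 2.0, "exit": 0.0})

# Later, spot_13 is taken, and the distribution is renormalized over two.
probs_later = intent_distribution({"spot_12": 2.0, "exit": 0.0})
```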

The team also adapted the Transformer model, feeding it the intent prediction results, the vehicle's motion history, and a semantic map of the surrounding environment as inputs to achieve multimodal intent and behavior prediction.

Summary

- As the first high-precision dataset for parking scenarios, the Dragon Lake Parking (DLP) dataset provides data and API support for research on large-scale target recognition and tracking, free parking space detection, vehicle and pedestrian behavior and trajectory prediction, imitation learning, and more

- Using CNN and Transformer architectures, the ParkPredict model shows good capability in behavior and trajectory prediction in parking scenarios

- The Dragon Lake Parking (DLP) dataset is open for trial and application. Visit the dataset home page https://sites.google.com/berkeley.edu/dlp-dataset for more information (if you cannot access it, try the alternative page https://www.php.cn/link/966eaa9527eb956f0dc8788132986707)

The above is the detailed content of Berkeley open sourced the first high-definition data set and prediction model in parking scenarios, supporting target recognition and trajectory prediction.. For more information, please follow other related articles on the PHP Chinese website!

Newest Annual Compilation Of The Best Prompt Engineering TechniquesApr 10, 2025 am 11:22 AM

Newest Annual Compilation Of The Best Prompt Engineering TechniquesApr 10, 2025 am 11:22 AMFor those of you who might be new to my column, I broadly explore the latest advances in AI across the board, including topics such as embodied AI, AI reasoning, high-tech breakthroughs in AI, prompt engineering, training of AI, fielding of AI, AI re

Europe's AI Continent Action Plan: Gigafactories, Data Labs, And Green AIApr 10, 2025 am 11:21 AM

Europe's AI Continent Action Plan: Gigafactories, Data Labs, And Green AIApr 10, 2025 am 11:21 AMEurope's ambitious AI Continent Action Plan aims to establish the EU as a global leader in artificial intelligence. A key element is the creation of a network of AI gigafactories, each housing around 100,000 advanced AI chips – four times the capaci

Is Microsoft's Straightforward Agent Story Enough To Create More Fans?Apr 10, 2025 am 11:20 AM

Is Microsoft's Straightforward Agent Story Enough To Create More Fans?Apr 10, 2025 am 11:20 AMMicrosoft's Unified Approach to AI Agent Applications: A Clear Win for Businesses Microsoft's recent announcement regarding new AI agent capabilities impressed with its clear and unified presentation. Unlike many tech announcements bogged down in te

Selling AI Strategy To Employees: Shopify CEO's ManifestoApr 10, 2025 am 11:19 AM

Selling AI Strategy To Employees: Shopify CEO's ManifestoApr 10, 2025 am 11:19 AMShopify CEO Tobi Lütke's recent memo boldly declares AI proficiency a fundamental expectation for every employee, marking a significant cultural shift within the company. This isn't a fleeting trend; it's a new operational paradigm integrated into p

IBM Launches Z17 Mainframe With Full AI IntegrationApr 10, 2025 am 11:18 AM

IBM Launches Z17 Mainframe With Full AI IntegrationApr 10, 2025 am 11:18 AMIBM's z17 Mainframe: Integrating AI for Enhanced Business Operations Last month, at IBM's New York headquarters, I received a preview of the z17's capabilities. Building on the z16's success (launched in 2022 and demonstrating sustained revenue grow

5 ChatGPT Prompts To Stop Depending On Others And Trust Yourself FullyApr 10, 2025 am 11:17 AM

5 ChatGPT Prompts To Stop Depending On Others And Trust Yourself FullyApr 10, 2025 am 11:17 AMUnlock unshakeable confidence and eliminate the need for external validation! These five ChatGPT prompts will guide you towards complete self-reliance and a transformative shift in self-perception. Simply copy, paste, and customize the bracketed in

AI Is Dangerously Similar To Your MindApr 10, 2025 am 11:16 AM

AI Is Dangerously Similar To Your MindApr 10, 2025 am 11:16 AMA recent [study] by Anthropic, an artificial intelligence security and research company, begins to reveal the truth about these complex processes, showing a complexity that is disturbingly similar to our own cognitive domain. Natural intelligence and artificial intelligence may be more similar than we think. Snooping inside: Anthropic Interpretability Study The new findings from the research conducted by Anthropic represent significant advances in the field of mechanistic interpretability, which aims to reverse engineer internal computing of AI—not just observe what AI does, but understand how it does it at the artificial neuron level. Imagine trying to understand the brain by drawing which neurons fire when someone sees a specific object or thinks about a specific idea. A

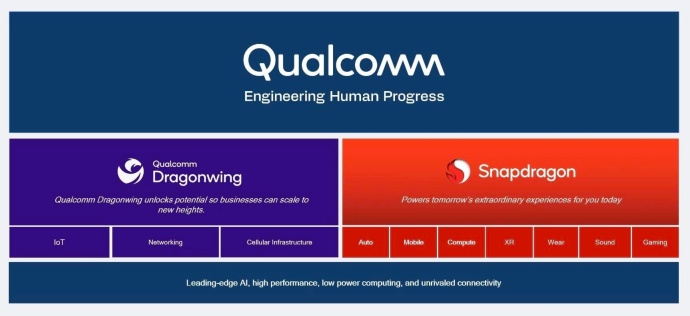

Dragonwing Showcases Qualcomm's Edge MomentumApr 10, 2025 am 11:14 AM

Dragonwing Showcases Qualcomm's Edge MomentumApr 10, 2025 am 11:14 AMQualcomm's Dragonwing: A Strategic Leap into Enterprise and Infrastructure Qualcomm is aggressively expanding its reach beyond mobile, targeting enterprise and infrastructure markets globally with its new Dragonwing brand. This isn't merely a rebran

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Zend Studio 13.0.1

Powerful PHP integrated development environment

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 Chinese version

Chinese version, very easy to use