Is this the prototype of the Meta version of ChatGPT? Open source, can run on a single GPU, beats GPT-3 with 1/10 the number of parameters

Very large models with hundreds of billions or trillions of parameters need to be studied, and so do large models with billions or tens of billions of parameters.

Just now, Meta chief AI scientist Yann LeCun announced that Meta has "open sourced" a new series of large models, LLaMA (Large Language Model Meta AI), with parameter counts ranging from 7 billion to 65 billion. These models perform very well: the 13-billion-parameter LLaMA model outperforms GPT-3 (175 billion parameters) "on most benchmarks" and can run on a single V100 GPU, while the largest, 65-billion-parameter LLaMA model is comparable to DeepMind's Chinchilla-70B and Google's PaLM-540B.

As we all know, parameters are the variables that a machine learning model uses to predict or classify based on input data. The number of parameters in a language model is a key factor affecting its performance. Larger models are usually able to handle more complex tasks and produce more coherent outputs, which Richard Sutton calls a "bitter lesson." In the past few years, major technology giants have launched an arms race around large models with hundreds of billions and trillions of parameters, greatly improving the performance of AI models.

However, this “deep pockets” research race is unfriendly to ordinary researchers who do not work for technology giants, and it hinders their research into how large models work, what problems they may have, and how those problems might be solved. Moreover, in practical applications, more parameters take up more memory and require more computing resources to run, which makes large models expensive to deploy. Therefore, if a model with fewer parameters can achieve the same results as a larger one, it represents a significant gain in efficiency. Such a model is far more accessible to ordinary researchers and easier to deploy in real environments. That is the point of Meta’s research.

"I now think that within a year or two we will be running language models with a substantial portion of ChatGPT's capabilities on our (top-of-the-line) phones and laptops," independent artificial intelligence researcher Simon Willison wrote in his analysis of the impact of Meta's new AI model.

To train the models while remaining compatible with open sourcing and reproducibility, Meta used only publicly available datasets, unlike most large models, which rely on non-public data. Those models are often not open source and are the private assets of large technology companies. To improve model performance, Meta trained on more tokens: LLaMA 65B and LLaMA 33B were trained on 1.4 trillion tokens, and even the smallest model, LLaMA 7B, was trained on 1 trillion tokens.

On Twitter, LeCun also showed some examples of text continued by the LLaMA model. The model was asked to continue: "Did you know Yann LeCun released a rap album last year? We listened to it and here's what we thought: ____ "

However, on the question of commercial use, discrepancies between Meta's blog post and LeCun's tweets have caused some controversy.

Meta stated in the blog post that, to maintain integrity and prevent misuse, it will release the models under a noncommercial license focused on research use cases. Access to the models will be granted on a case-by-case basis to academic researchers, organizations affiliated with government, civil society and academia, and industry research laboratories around the world. Those interested can apply at the following link:

https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform

LeCun said that Meta is committed to open research and releases all models to the research community under the GPL v3 license (GPL v3 allows commercial use).

This statement proved controversial because he did not make clear whether "model" here refers to the code, the weights, or both. In the opinion of many researchers, the model weights are much more important than the code.

In response, LeCun clarified that what is released under the GPL v3 license is the model code.

Some people believe that this level of openness is not true “AI democratization.”

Meta has uploaded the paper to arXiv, and some content has also been uploaded to the GitHub repository, where you can browse it.

- Paper link: https://research.facebook.com/publications/llama-open-and-efficient-foundation-language-models/

- GitHub link: https://github.com/facebookresearch/llama

Large language models (LLMs) trained on large-scale text corpora have shown the ability to perform new tasks from text instructions or from a small number of examples. These few-shot properties first emerged when models were scaled to a sufficient size, spawning a line of work focused on scaling these models further.

These efforts are based on the assumption that more parameters will lead to better performance. However, recent work by Hoffmann et al. (2022) shows that for a given computational budget, the best performance is achieved not by the largest models, but by smaller models trained on more data.

The scaling laws proposed by Hoffmann et al. (2022) aim to determine how best to scale dataset and model sizes for a given training compute budget. However, this objective ignores the inference budget, which becomes critical when serving language models at scale. In that setting, given a target performance level, the preferred model is not the one that is fastest to train but the one that is fastest at inference. While it may be cheaper to train a large model to reach a certain level of performance, a smaller model that is trained for longer will ultimately be cheaper at inference. For example, although Hoffmann et al. (2022) recommend training a 10B model on 200B tokens, the LLaMA researchers found that the performance of a 7B model continued to improve even after 1T tokens.
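The trade-off between training cost and inference cost can be made concrete with a rough calculation. The sketch below is not from the paper: it assumes the common rule of thumb that training costs about 6·N·D FLOPs and inference about 2·N FLOPs per token (N parameters, D training tokens), and the function names are hypothetical. It only counts compute and says nothing about whether the two models reach the same quality.

```python
# Rule-of-thumb cost model (an assumption, not taken from the article):
#   training FLOPs  ~ 6 * N * D   (N = parameters, D = training tokens)
#   inference FLOPs ~ 2 * N       per processed or generated token

def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total FLOPs to train a model once."""
    return 6.0 * n_params * n_tokens

def inference_flops_per_token(n_params: float) -> float:
    """Approximate FLOPs to process one token at inference time."""
    return 2.0 * n_params

def break_even_served_tokens(small, large) -> float:
    """Number of served tokens after which the smaller, longer-trained
    model has a lower total (training + inference) cost than the larger one.
    Each argument is a (n_params, n_train_tokens) tuple."""
    extra_training = training_flops(*small) - training_flops(*large)
    saving_per_token = (inference_flops_per_token(large[0])
                        - inference_flops_per_token(small[0]))
    return extra_training / saving_per_token

B, T = 1e9, 1e12
llama_7b = (7 * B, 1 * T)          # 7B parameters, 1T tokens (from the article)
chinchilla_10b = (10 * B, 200 * B)  # 10B parameters, 200B tokens (from the article)

tokens = break_even_served_tokens(llama_7b, chinchilla_10b)
print(f"break-even after about {tokens:.1e} served tokens")
```

Under these assumptions the extra training compute of the 7B model is repaid after roughly 5 trillion served tokens, which illustrates why the inference budget matters for models deployed at scale.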

This work focuses on training a family of language models that achieve the best possible performance at a range of inference budgets by training on more tokens than is typically used. The resulting models, called LLaMA, range from 7B to 65B parameters and perform competitively with the best existing LLMs. For example, despite being 10 times smaller than GPT-3, LLaMA-13B outperforms GPT-3 on most benchmarks.

The researchers say this model will help democratize LLM research because it can run on a single GPU. At the higher end of the scale, the 65B-parameter LLaMA model is also comparable to the best large language models such as Chinchilla or PaLM-540B.

Unlike Chinchilla, PaLM or GPT-3, this model uses only publicly available data, which makes the work compatible with open sourcing, while most existing models rely on data that is either not publicly available or undocumented (e.g. Books-2TB or social media conversations). There are some exceptions, notably OPT (Zhang et al., 2022), GPT-NeoX (Black et al., 2022), BLOOM (Scao et al., 2022) and GLM (Zeng et al., 2022), but none of them can compete with PaLM-62B or Chinchilla.

The remainder of this article outlines the researchers’ modifications to the transformer architecture and their training method. Model performance is then presented and compared with other large language models on a set of standard benchmarks. Finally, the researchers expose some of the biases and toxicity encoded in the models, using some of the latest benchmarks from the responsible AI community.

Method Overview

The training method used by the researchers is similar to the methods described in previous work such as Brown et al. (2020) and Chowdhery et al. (2022), and is inspired by the Chinchilla scaling laws (Hoffmann et al., 2022). The researchers trained large transformers on a large quantity of text data using a standard optimizer.

Pre-training data

As shown in Table 1, the training dataset for this study is a mixture of several sources covering a diverse set of domains. For the most part, the researchers reuse data sources that have been used to train other large language models, with the restriction that only publicly available data compatible with open sourcing can be used. The data mixture and the percentage each source represents in the training set are as follows:

- English CommonCrawl [67%];

- C4 [15%];

- Github [4.5%];

- Wikipedia [4.5%];

- Gutenberg and Books3 [4.5%];

- ArXiv [2.5%];

- Stack Exchange [2%].

The entire training dataset contains approximately 1.4T tokens after tokenization. For most of the training data, each token is used only once during training, except for the Wikipedia and Books domains, on which the researchers perform approximately two epochs.
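As a rough illustration of what these percentages mean in practice, the sketch below converts the sampling proportions into an approximate number of tokens seen per source. It assumes that each proportion applies directly to the full 1.4T-token budget, which is a simplification, and the variable and function names are hypothetical.

```python
# Sampling proportions as listed in the article.
SAMPLING_PROPORTIONS = {
    "CommonCrawl": 0.67,
    "C4": 0.15,
    "Github": 0.045,
    "Wikipedia": 0.045,
    "Books": 0.045,        # Gutenberg and Books3
    "ArXiv": 0.025,
    "StackExchange": 0.02,
}
TOTAL_TOKENS = 1.4e12  # approximate size of the training set after tokenization

def tokens_seen_per_source(proportions, total_tokens):
    """Approximate tokens drawn from each source over the whole run
    (assumption: proportion * total budget)."""
    return {name: share * total_tokens for name, share in proportions.items()}

def approx_unique_tokens(tokens_seen, epochs):
    """Approximate distinct tokens in a source that is repeated `epochs` times."""
    return tokens_seen / epochs

seen = tokens_seen_per_source(SAMPLING_PROPORTIONS, TOTAL_TOKENS)
for name, count in seen.items():
    print(f"{name:>13}: {count / 1e9:7.1f}B tokens seen")

# Wikipedia is repeated for roughly two epochs, so its distinct token count
# is about half of what is seen during training (under the assumption above).
wikipedia_unique = approx_unique_tokens(seen["Wikipedia"], epochs=2)
```

With these numbers, Wikipedia contributes roughly 63B tokens seen during training, which at about two epochs would correspond to a little over 30B distinct tokens.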

Architecture

Following recent work on large language models, this study also uses the transformer architecture. The researchers drew on various improvements that were proposed later and used in different models, such as PaLM. In the paper, they describe the main differences from the original architecture:

- Pre-normalization [GPT3]. To improve training stability, the researchers normalize the input of each transformer sub-layer instead of normalizing the output. They use the RMSNorm normalization function proposed by Zhang and Sennrich (2019).

- SwiGLU activation function [PaLM]. The researchers replace the ReLU nonlinearity with the SwiGLU activation function proposed by Shazeer (2020) to improve performance. They use a hidden dimension of (2/3)·4d instead of 4d as in PaLM.

- Rotary embeddings [GPTNeo]. The researchers remove the absolute positional embeddings and instead add the rotary positional embeddings (RoPE) proposed by Su et al. (2021) at each layer of the network. The hyperparameters of the different models are detailed in Table 2.
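The three architectural changes above can be sketched in a few lines of plain Python. This is a minimal illustration of the published techniques on single vectors, not Meta's implementation: the function names, the epsilon value, the RoPE base of 10000, and the choice of rotating adjacent pairs of dimensions are assumptions made for this example.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """RMSNorm: divide x by its root mean square, then apply a per-feature gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]

def pre_norm_residual(x, gain, sublayer):
    """Pre-normalization: normalize the sub-layer input, then add the residual."""
    y = sublayer(rms_norm(x, gain))
    return [a + b for a, b in zip(x, y)]

def silu(z):
    """SiLU (Swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: W_down (SiLU(W_gate x) * (W_up x))."""
    gate = [silu(v) for v in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)

def swiglu_hidden_dim(d_model):
    """Hidden size (2/3) * 4d, before any rounding a real implementation may apply."""
    return (2 * 4 * d_model) // 3

def rope(x, position, base=10000.0):
    """Rotary embedding: rotate each pair (x[2i], x[2i+1]) by position * base^(-2i/d)."""
    d = len(x)
    out = list(x)
    for i in range(d // 2):
        theta = position * base ** (-2.0 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out
```

In an attention layer, `rope` would be applied to the query and key vectors; because each pair is rotated by an angle proportional to its position, the dot product between a rotated query and a rotated key depends only on their relative offset.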

Experimental results

Common sense reasoning

In Table 3, the researchers compare LLaMA with existing models of various sizes and report the numbers from the corresponding papers. First, LLaMA-65B outperforms Chinchilla-70B on all reported benchmarks except BoolQ. Similarly, it surpasses PaLM-540B everywhere except on BoolQ and WinoGrande. The LLaMA-13B model also outperforms GPT-3 on most benchmarks despite being 10 times smaller.

Closed-book question answering

Table 4 shows performance on NaturalQuestions and Table 5 shows performance on TriviaQA. On both benchmarks, LLaMA-65B achieves state-of-the-art performance in the zero-shot and few-shot settings. What's more, LLaMA-13B is also competitive on these benchmarks despite being one-fifth to one-tenth the size of GPT-3 and Chinchilla, and it runs on a single V100 GPU during inference.
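A back-of-the-envelope calculation shows why a 13B-parameter model is plausible on a single V100. The sketch below is an illustration under stated assumptions, not a statement about how Meta ran the model: it assumes 16-bit weights (2 bytes per parameter) and the 32 GB variant of the V100, and it ignores activations and the attention cache. The names are hypothetical.

```python
V100_MEMORY_GB = 32.0  # assumption: the 32 GB V100 variant

def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes).
    The default of 2 bytes per parameter assumes 16-bit weights."""
    return n_params * bytes_per_param / 1e9

def fits_on_gpu(n_params: float, gpu_memory_gb: float = V100_MEMORY_GB) -> bool:
    """True if the weights alone fit; activations and caches need extra room."""
    return weight_memory_gb(n_params) < gpu_memory_gb

# Model sizes mentioned in the article.
for name, n_params in [("LLaMA-7B", 7e9), ("LLaMA-13B", 13e9), ("LLaMA-65B", 65e9)]:
    print(f"{name}: {weight_memory_gb(n_params):.0f} GB of weights, "
          f"fits on one GPU: {fits_on_gpu(n_params)}")
```

Under these assumptions the 13B model needs about 26 GB for its weights, which leaves some headroom on a 32 GB card, while the 65B model would need about 130 GB and therefore several GPUs.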

Reading comprehension

The researchers also evaluated the model on the RACE reading comprehension benchmark (Lai et al., 2017). The evaluation setup of Brown et al. (2020) is followed here, and Table 6 shows the evaluation results. On these benchmarks, LLaMA-65B is competitive with PaLM-540B, and LLaMA-13B outperforms GPT-3 by several percentage points.

Mathematical Reasoning

In Table 7, the researchers compare LLaMA with PaLM and Minerva (Lewkowycz et al., 2022). On GSM8k, they observe that LLaMA-65B outperforms Minerva-62B, although it has not been fine-tuned on mathematical data.

Code generation

As Table 8 shows, for a similar number of parameters, LLaMA outperforms other general models such as LaMDA and PaLM, which are not trained or fine-tuned specifically for code. On HumanEval and MBPP, LLaMA models with 13B parameters or more outperform LaMDA 137B. LLaMA 65B also outperforms PaLM 62B, even when the latter is trained for longer.

Massive multitask language understanding

The researchers evaluated the models in the 5-shot setting, using the examples provided by the benchmark, and report the results in Table 9. On this benchmark, LLaMA-65B trails Chinchilla-70B and PaLM-540B by a few percentage points on average and across most domains. One potential explanation is that the pre-training data contains a limited amount of books and academic papers, namely ArXiv, Gutenberg, and Books3, which total only 177GB, while those models were trained on up to 2TB of books. The large quantity of books used by Gopher, Chinchilla, and PaLM may also explain why Gopher outperforms GPT-3 on this benchmark while being on par with it on other benchmarks.

Performance changes during training

During training, the researchers tracked the performance of the LLaMA models on several question answering and common sense benchmarks; the results are shown in Figure 2. Performance improves steadily on most benchmarks and tracks the model's training perplexity (see Figure 1).

##

The above is the detailed content of Is this the prototype of the Meta version of ChatGPT? Open source, can run on a single GPU, beats GPT-3 with 1/10 the number of parameters. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

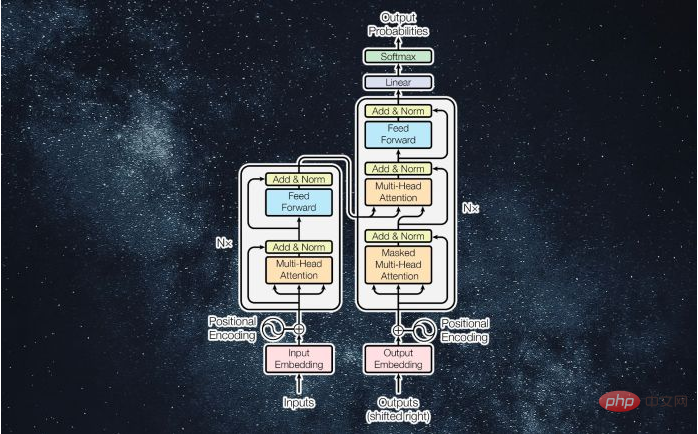

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

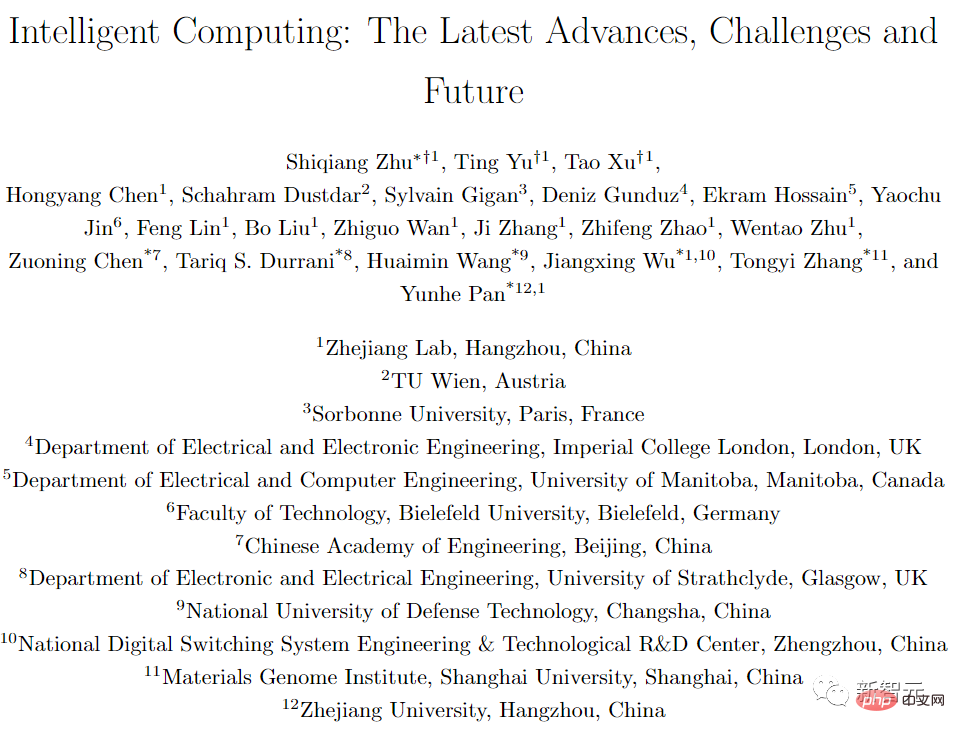

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

WebStorm Mac version

Useful JavaScript development tools

Atom editor mac version download

The most popular open source editor

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment