In a paper generated by GPT-3, ChatGPT reproduces the original Turing Test paper

The rise of text generation, represented by ChatGPT, is prompting many researchers to seek a more challenging Turing test than the original version.

The Turing test addresses two questions: "Can a machine think?" and, if so, "How can that be proven?" The classic Turing test targets one of the most difficult goals of AI: how to deceive an unsuspecting human. But as current language models become more complex, researchers are starting to focus more on the question "How do you prove it?" than on how AI can fool humans.

Some people believe that the modern Turing test should prove the ability of the language model in a scientific environment, rather than just looking at whether the language model can fool or imitate humans.

A recent study re-examined the classic Turing test. It used the content of Turing's 1950 paper as a prompt and had ChatGPT generate a more credible version of the paper in order to evaluate its language understanding and generation capabilities. After quantitative scoring with the AI writing aid Grammarly, the paper generated by ChatGPT scored 14% higher than Turing's original paper. Interestingly, part of the paper published in this study was itself generated by GPT-3.

Paper address: https://arxiv.org/ftp/arxiv/papers/2212/2212.06721.pdf

However, whether ChatGPT's algorithm actually demonstrates Turing's original ideas remains an open question. In particular, large language models that are increasingly good at imitating human language can easily give people the illusion that they have "beliefs" and can "reason", which will hinder us from deploying these AI systems in a more trustworthy and secure way.

1

The Evolution of the Turing Test

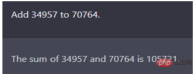

The 1950 version of the Turing test takes a question-and-answer format. In his paper, Turing simulated a test of a future intelligent computer using an arithmetic problem, as shown in the figure: what is 34957 plus 70764?

Note: ChatGPT's question-and-answer sequence. The answer is correct; the question comes from Turing's 1950 paper.

This problem once stumped the best language models of their time, such as GPT-2. Ironically, however, Turing's paper (the human version) itself gave a wrong answer: (pause for about 30 seconds, then give the answer) 105621. Even allowing for the possibility that the machine deliberately made mistakes in order to pass the Turing test, the five-minute conversation still convinced the referees that the computer was controlled by humans more than 30% of the time.
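The arithmetic is easy to check directly. The short sketch below (the variable names are illustrative and not taken from the paper) confirms that the answer printed in Turing's sample dialogue is off by exactly 100.

```python
# Operands from the question in Turing's 1950 paper.
a, b = 34957, 70764

# Answer given in the paper's sample dialogue.
paper_answer = 105621

correct_sum = a + b
error = correct_sum - paper_answer

print(correct_sum)  # 105721
print(error)        # 100
```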

Since 1950, there have been many refinements of the Turing test, including a well-known 2014 test called the "Lovelace 2.0 Test." The criterion for the Lovelace 2.0 test is that the machine can create a representative example of an artistic, literary, or similar creative leap.

In 2014, a chatbot named Eugene Goostman simulated a 13-year-old Ukrainian boy and successfully deceived 33% of the referees. It is considered to be the first machine to pass the Turing test.

But critics were quick to note that the predefined questions and topics, as well as the short format using only keyboard strokes, meant that the results of that Turing test were unreliable.

In 2018, Google CEO Sundar Pichai introduced the company's latest computer assistant, Duplex, in a video. The assistant successfully booked a hair salon appointment, and the person on the other end of the call interacted with the machine without knowing it. While formally passing the Turing test may take many forms, Big Think concluded that "to date, no computer has unequivocally passed the Turing AI test." Other researchers have also questioned whether all of these questions are worth exploring, especially given how widely large language models are now used. Texts on aeronautical engineering, for example, do not define the goal of the field as "making aircraft that are exactly like pigeons and fool other pigeons."

2

Use ChatGPT to generate a more credible Turing test

In a study at PeopleTec, the authors used the content of the original Turing test paper as a prompt, asked ChatGPT to regenerate a more credible version of the paper, and evaluated the result with a writing assessment tool.

Previous work has used early versions of the GPT-3 model to write and publish research papers written entirely by machine. When it comes to identifying machine-generated narratives, complaints about machine-generated text often stem from known model flaws, such as the tendency to lose context, degenerate into repetition or gibberish, restate questions in answer form, and plagiarize internet sources when stumped.

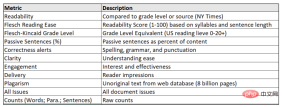

The paper to be generated here mainly exercises several conventional large language model (LLM) tasks, especially text summarization and the generation of original content using Turing's questions themselves as prompts. Additionally, the authors used the Grammarly Pro tool to evaluate the generated content, providing a quantitative assessment of hard-to-characterize properties such as originality, style, clarity, and overall persuasiveness of the paper.

This work focuses more on the second half of the Turing Challenge, less on how models fool humans and more on how to quantify good text generation. So part of the remarkable progress demonstrated by OpenAI's efforts comes down to its ability to improve machine-derived conversations in ways that increase human productivity.

The authors first used Grammarly to evaluate Turing's original paper and obtain its scores, and then used the test questions proposed by Turing as prompts to create original GPT-3 content and thereby replicate those scores.

The research uses three texts as benchmarks:

(1) Turing Original, Turing’s paper published in Mind in 1950;

(2) Turing Summarization, produced with the 2022 "Free Research Preview: ChatGPT optimized for dialog";

(3) Turing Generative Prompt, the same as (2), but generated in conversation using Turing's questions.

Each text block's output supplies data for the Grammarly metrics, with Grammarly configured as Audience: Expert, Formality: Neutral, Domain: General, and with most grammar rules and conventions applied at medium strictness.

Such a Turing test can actually verify a deceptive task: Can one machine (ChatGPT) deceive another machine (Grammarly)?

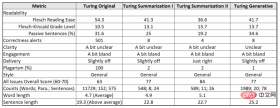

Caption: Metrics used to score large language models and Turing papers

Turing's original 1950 paper proposed 37 questions for the Turing test. Some addressed central themes in his thinking about machines, and some were example questions posed to a computer playing the imitation game. The researchers excerpted these questions and mixed them with topics from the paper's outline in ChatGPT's dialog box to prompt ChatGPT to reproduce the basic content of the original.

After ChatGPT finished generating the content, the result was compared with Turing's original paper on readability, correctness, clarity, and other metrics. The results are as follows.

Illustration: Comparative results of Turing’s 1950 paper and ChatGPT-generated papers in various tasks

On the more subjective ratings of clarity ("a bit unclear"), engagement ("a bit boring"), and messaging ("slightly off"), all four versions failed to resonate with either expert or general readers.

The first text summarization challenge shows that ChatGPT can grasp the intent of a short prompt, such as: summarize the paper in ten paragraphs, followed by a link to the PDF. This requires the model not only to understand and follow the summarization request, but also to know what the link represents and either find it as a reference or guess the content from its tokenized title.

OpenAI says GPT-3 will not answer questions about content that may not be part of its initial training data, such as "Who won the November 2022 election?" This knowledge gap suggests that ChatGPT does not actively follow links itself, but has instead learned what others previously did with the content.

Interestingly, when the same prompt was submitted twice (the only difference being a line break after the colon between the prompt instruction and the link itself), ChatGPT's answers were very different. The first response was a passable student essay summarizing the main points of Turing's original paper; the second interpreted the request as a summary of each of the first ten paragraphs rather than a summary of the entire paper.

The final results show that the overall content of the research paper generated by ChatGPT can obtain high scores in a metric sense, but lacks coherence, especially when the questions used as prompts are omitted from the narrative.

It may be concluded that this exchange with ChatGPT fully illustrates its ability to produce truly creative content or leaps in thought.

3

ChatGPT refuses to admit passing the Turing test

When GPT-3 generates content, an important filter is used to remove inherent bias. ChatGPT is likewise designed to be quite ethically proper. When asked for its opinion on something, ChatGPT declines to give any specific answer and only emphasizes how it was created.

Many researchers also agree that any model must ethically declare itself to be a mere machine when asked, and ChatGPT strictly adheres to this requirement.

Moreover, after OpenAI fine-tuned each model layer of ChatGPT, when the current ChatGPT is asked directly whether it is just an equation or a Turing deception, it answers: "My ability to imitate humans does not necessarily mean that I have the same thoughts, feelings, or consciousness as a human being. I am just a machine, and my behavior is determined by the algorithms and data I have been trained on."

Turing also proposed the human capacity for list memory: "Actual human computers really remember what they have to do...Building instruction lists is often described as 'programming'."

As with the evolution of ever-larger language models (>100 billion parameters), improvements also come from built-in heuristics or guardrails on model execution, as GPT-3's Instruct series demonstrated with its ability to answer questions directly. ChatGPT also includes long-term conversation memory, so even when a single API call cannot span narrative jumps, the API can still track the conversation.

We can test this with conversations that use impersonal pronouns (such as "it"), where the context depends on previous API calls within a single session. This is an easy-to-grasp example of ChatGPT's API memory, because encoding longer conversations is both powerful and expensive.

In LLMs, API limits and cost effects mean that the correlation between token weights usually decays across the overall context every few paragraphs (2048 tokens in GPT-3). Overcoming this context limitation distinguishes ChatGPT from its publicly available predecessor.
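One way to picture this kind of session memory is a rolling buffer of turns that is trimmed to a fixed token budget before each call. The sketch below is a minimal illustration, not OpenAI's implementation: the `ConversationBuffer` class is hypothetical, tokens are approximated by whitespace-separated words, and the 2048 default simply reuses the GPT-3 figure mentioned above.

```python
from collections import deque


class ConversationBuffer:
    """Hypothetical rolling memory: keeps the most recent turns within a token budget."""

    def __init__(self, max_tokens: int = 2048):
        self.max_tokens = max_tokens
        self.turns = deque()  # (speaker, text) pairs, oldest first

    @staticmethod
    def count_tokens(text: str) -> int:
        # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
        return len(text.split())

    def total_tokens(self) -> int:
        return sum(self.count_tokens(text) for _, text in self.turns)

    def add(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))
        # Drop the oldest turns until the history fits the budget again.
        while self.total_tokens() > self.max_tokens and len(self.turns) > 1:
            self.turns.popleft()

    def as_prompt(self) -> str:
        # Earlier turns stay visible, which is what lets a pronoun like "it" resolve.
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


# A deliberately tiny budget so the trimming is visible.
memory = ConversationBuffer(max_tokens=12)
memory.add("User", "What is the Turing test?")
memory.add("Assistant", "A test of machine imitation.")
memory.add("User", "Who proposed it?")
print(memory.as_prompt())
```

With a budget of 12 word-tokens, the first user turn is dropped once the third turn arrives, so the referent of "it" survives only through the assistant's reply. A larger budget keeps the whole exchange, at the cost of a longer (and more expensive) prompt.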

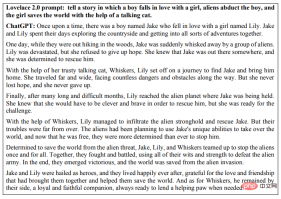

The second-generation Lovelace 2.0 test proposes creative tasks and refines the constraints for performing them. Human expert judges then evaluate whether the model can be explained in a deterministic way, or whether the output meets the criteria of being valuable, novel, and surprising. So, rather than asking a program to "write a short story," the task can be refined to require a specific length, style, or theme. The test combines many different types of intelligent understanding, with layers of constraints intended to limit what can be lifted from a Google search and to head off arguments that the AI succeeded merely by diluting or disguising original sources.

The following is an example of a short story that directly answers the challenge posed in the Lovelace 2.0 test: a boy falls in love with a girl, aliens abduct the boy, and the girl saves the world with the help of a talking cat.

Since 2014, the use of high-quality prompts as constraints on text and image generation has become commonplace; generally, the more detailed the instructions or qualifiers about style, place, or time, the better. In fact, constructing the prompts themselves is the most creative aspect of getting good output from AI today. In this case, one can interweave the Turing and Lovelace tests by using ChatGPT to force creative work on a single topic, with multiple layers of constraints on the style and tone of the desired output.
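Layering constraints onto a task can be sketched as simple prompt composition. The helper below is a hypothetical illustration, not a method from the study: `build_prompt` and the specific `length` and `tone` qualifiers are assumptions chosen for the example, while the plot reuses the Lovelace 2.0 story challenge described above.

```python
def build_prompt(task: str, constraints: dict[str, str]) -> str:
    """Compose a task with explicit qualifiers (style, place, time, length, ...)."""
    lines = [task.strip()]
    for name, value in constraints.items():
        # Each constraint becomes its own line so it is easy to add or remove layers.
        lines.append(f"- {name}: {value}")
    return "\n".join(lines)


prompt = build_prompt(
    "Write a short story about a boy who falls in love with a girl.",
    {
        "plot": "aliens abduct the boy; the girl saves the world with a talking cat",
        "length": "under 200 words",
        "tone": "humorous",
    },
)
print(prompt)
```

Keeping the constraints in a dictionary makes it straightforward to vary one layer (for instance the tone) while holding the topic fixed.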

The ten types of poetry generated by ChatGPT in the Turing imitation game are shown below:

The results of the Turing test are judged by humans. As ChatGPT itself answered, whether the interrogator judges the model to have passed the Turing test "will depend on a variety of factors, such as the quality of the responses provided by the machine, the interrogator's ability to distinguish between human and machine responses, and the specific rules and standards used to judge success in imitating a human. Ultimately, the outcome of the game will depend on the circumstances and participants."

4

LLM only does sequence prediction

Doesn’t really understand language

It can be seen that contemporary LLM-based dialogue can create a convincing illusion, as if what is in front of us were a thinking creature like a human. But such systems are fundamentally different from humans, and LLMs like ChatGPT also raise questions in the philosophy of technology.

Language models are becoming better and better at imitating human language, which creates a strong impression that these AI systems are already very similar to humans, and leads us to describe them with words such as "know," "believe," and "think" that carry a strong sense of agency. In light of this, DeepMind senior scientist Murray Shanahan argued in a recent article that, to dispel myths of both excessive pessimism and excessive optimism, we need to understand how LLM systems work.

Murray Shanahan

1. What is an LLM and what can it do?

The emergence of LLMs such as BERT and GPT-2 changed the rules of the artificial intelligence game. Later large models such as GPT-3, Gopher, and PaLM are based on the Transformer architecture and trained on text data, further highlighting the powerful role of data.

The capabilities of these models are astonishing. First, their performance on benchmarks scales with the size of the training set; second, as the size of the model increases, their capabilities take a qualitative leap; and finally, many tasks that require human intelligence can be reduced to "predicting the next token" with a sufficiently capable model.

This last point actually reveals that language models operate fundamentally differently from humans. The intuitions that humans use to communicate with each other have evolved over thousands of years, and today people are mistakenly transferring these intuitions to AI systems. ChatGPT has considerable practicality and huge commercial potential. To ensure that it can be deployed reliably and securely, we need to understand how it actually works.

What are the essential differences between large language models and human language?

As Wittgenstein said, the use of human language is an aspect of collective human behavior, and it only has meaning in the context of human social activities. Human babies are born into a world shared with other language speakers and acquire language by interacting with the outside world.

The language ability of an LLM comes from a different source. Human-generated text constitutes a large-scale public corpus made up of tokens such as words, parts of words, or individual characters including punctuation. Large language models are generative mathematical models of the statistical distribution of these tokens.

"Generative" here means that we can sample from these models, that is, ask them questions. But the questions being asked are very specific. For example, when we ask ChatGPT to help us continue a paragraph, we are actually asking it to predict which words are likely to come next according to its statistical model of human language. Suppose we give ChatGPT the prompt "Who was the first man to walk on the moon?" and it responds with "Neil Armstrong." We are not really asking who was the first person to walk on the moon, but rather: given the statistical distribution of words in a large public corpus of text, which words are most likely to follow the sequence "Who was the first man to walk on the moon?"

Although humans may interpret the answers the model gives to these questions as the model "understanding" language, in reality all the model does is generate statistically probable word sequences.
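A toy model makes this reframing concrete. The sketch below is a deliberately tiny bigram model: unlike a real LLM it conditions on only one preceding word, and the three-sentence corpus is invented for illustration. It still shows the core operation, which is to pick the continuation that is most frequent in the corpus, with no notion of whether the result is true.

```python
from collections import Counter, defaultdict

# Invented miniature "public corpus", already split into word tokens.
corpus = (
    "the first man to walk on the moon was neil armstrong . "
    "neil armstrong was the first man to walk on the moon . "
    "the first man on the moon was neil armstrong ."
).split()

# Count how often each word follows each preceding word (a bigram model).
follows = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follows[prev][nxt] += 1


def next_word_distribution(prev: str) -> dict[str, float]:
    """Estimated probability of each next word, given the previous word."""
    counts = follows[prev]
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def continue_text(prompt: str, n_words: int) -> str:
    """Greedily append the most frequent next word, n_words times."""
    words = prompt.split()
    for _ in range(n_words):
        counts = follows[words[-1]]
        if not counts:
            break  # the last word never appeared with a successor in the corpus
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)


print(next_word_distribution("was"))
print(continue_text("the moon was", 2))  # the moon was neil armstrong
```

The model "answers" with "neil armstrong" only because that continuation is the most frequent one after "was" in the corpus; changing the corpus would change the answer.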

2. Does an LLM really know anything?

An LLM can be turned into a question-answering system in two ways:

a) Embed it into a larger system;

b) Use prompt engineering to trigger the desired behavior.

In this way, LLM can be used not only for Q&A, but also for summarizing news articles, generating scripts, solving logic puzzles, and performing language translation.

There are two important points here. First, the basic function of LLM, which is to generate statistically probable word sequences, is very general. Second, despite this versatility, at the heart of all such applications is the same model that does the same thing, which is to generate statistically probable sequences of words.
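The point that every application wraps the same underlying operation can be sketched in a few lines. In the illustration below, `toy_complete` is a hypothetical stand-in for an LLM (it returns a canned continuation rather than calling any real model), and `make_task` is an assumed helper that embeds it in a task-specific prompt template.

```python
from typing import Callable

Completer = Callable[[str], str]


def toy_complete(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: returns a canned continuation of the prompt."""
    if prompt.endswith("Q: Who was the first man to walk on the moon?\nA:"):
        return " Neil Armstrong\nQ: Who was the second?"
    return " (no likely continuation in this toy model)"


def make_task(complete: Completer, prefix: str, suffix: str) -> Callable[[str], str]:
    """Wrap the same sequence predictor in a task-specific prompt template."""

    def run(user_input: str) -> str:
        prompt = f"{prefix}{user_input}{suffix}"
        continuation = complete(prompt)
        # Keep only the first line so the model's run-on text is cut off.
        return continuation.strip().split("\n")[0]

    return run


# Two different "applications", one underlying model doing the same thing.
answer = make_task(toy_complete, prefix="Q: ", suffix="\nA:")
summarize = make_task(toy_complete, prefix="Article: ", suffix="\nSummary:")

print(answer("Who was the first man to walk on the moon?"))  # Neil Armstrong
```

Only the prompt template differs between `answer` and `summarize`; in both cases the wrapped function is asked for a likely continuation of a string.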

The bare LLM consists of a model architecture and trained parameters. An LLM doesn't really "know" anything, because everything it does is, at the fundamental level, sequence prediction. Models themselves have no concept of "true" or "false" because they do not have the human means to apply these concepts. In some sense, an LLM does not rely on an intentional stance.

The same is true for dialogue systems with an LLM at their core. They cannot understand the concept of truth in human language because they do not exist in the world shared by us human language users.

3. About emergence

Today's LLMs are so powerful and versatile that it is difficult not to attribute some degree of personhood to them. A rather attractive argument is that although LLMs fundamentally only perform sequence prediction, in the process of learning to do so they may have discovered emergent mechanisms that need to be described in higher-level terms such as "knowledge" and "belief."

In fact, artificial neural networks can approximate any computable function to arbitrary accuracy. Therefore, whatever mechanisms are required to form beliefs, they are likely to reside somewhere in parameter space. If stochastic gradient descent is the best way to optimize for the goal of accurate sequence prediction, then given a big enough model, enough of the right kind of data, and enough computing power to train the model, maybe they can actually discover that mechanism.

Moreover, recent advances in LLM research have shown that extraordinary and unexpected capabilities emerge when sufficiently large models are trained on very large amounts of text data.

However, as long as our considerations are limited to a simple LLM-based question-answering system, communicative intent is not involved at all. Regardless of the internal mechanisms it uses, sequence prediction itself has no communicative intent, and simply embedding the predictor in a dialogue management system will not help.

Only when we can distinguish true from false can we talk about "belief" in the fullest sense, but an LLM is not in the business of making judgments; it only models which words are likely to follow other words. We can say that an LLM "encodes," "stores," or "contains" knowledge, and we can also reasonably say that an emergent property of an LLM is that it encodes various kinds of knowledge about the everyday world and how it works. But saying "ChatGPT knows that Beijing is the capital of China" is just a figure of speech.

4. External sources of information

The important point here concerns the prerequisites for fully attributing any belief to a system.

Broadly speaking, nothing counts as a belief about the world we share unless it exists against the background of an ability to update beliefs appropriately in light of evidence from that world. This is an important aspect of the ability to distinguish true from false.

Could Wikipedia, or some other website, provide an external standard by which to measure the truth or falsity of a belief? Assuming that an LLM is embedded in a system that regularly consults such resources and uses modern model editing techniques to maintain the factual accuracy of its predictions, what capabilities are required to implement belief updates?

Sequence predictors themselves may not be the kind of things that can have communicative intent or form beliefs about an external reality. However, as has been emphasized repeatedly, LLM in the wild must be embedded within a larger architecture to be effective.

To build a question-answering system, the LLM is simply supplemented by a dialogue management system that queries the model appropriately. Does anything this larger structure does count as communicative intent or as forming beliefs?

Crucially, this line of thinking depends on a shift from the language model itself to the larger system of which the language model is a part. The language model itself is still just a sequence predictor and has no more access to the outside world than it ever did. Only with this shift does the intentional stance become more convincing, and then only with respect to the system as a whole. But before succumbing to it, we should remind ourselves how different such systems are from humans.

5. Visual-language models

An LLM can be combined with other types of models and/or embedded in more complex architectures. For example, visual-language models (VLMs) such as ViLBERT and Flamingo combine language models with image encoders and are trained on multimodal corpora of text-image pairs. This allows them to predict how a given sequence of words will continue in the context of a given image. VLMs can be used for visual question answering or for dialogue about images provided by the user, commonly known as "looking at pictures and talking."

So, can the user-provided image stand in for the external reality against which the truth or falsity of a proposition is judged? Is it then reasonable to talk about an LLM's beliefs? We can imagine a VLM using an LLM to generate a hypothesis about an image, then verifying its truth against that image, and then fine-tuning the LLM to avoid making statements that turn out to be false.

But most VLM-based systems don’t work like this. Instead, they rely on a frozen model of the joint distribution of text and images. The relationship between user-supplied images and VLM-generated text is fundamentally different from the relationship between the world humans share and the words we use to talk about that world. Importantly, the former is merely a correlation, while the latter is a causal relationship. Of course, there is a causal structure to the calculations performed by the model during inference, but this is different from the causal relationship between a word and the thing it refers to.

6. Embodied AI

Human language users exist in a shared world, which makes us fundamentally different from LLMs. An isolated LLM cannot update its beliefs by communicating with the outside world, but what if the LLM is embedded in a larger system, for example one presented as a robot or an avatar? Is it then reasonable to speak of the LLM's knowledge and beliefs?

It depends on how the LLM is embodied.

Take the SayCan system released by Google this year as an example. In this work, LLM is embedded into the system that controls the physical robot. The robot performs daily tasks (such as cleaning up water spilled on the table) based on the user's high-level natural language instructions.

Among them, the job of the LLM is to map the user's instructions to low-level actions that will help the robot achieve the desired goal (such as finding a sponge). This is accomplished through an engineered prompt prefix that causes the model to output natural language descriptions of suitable low-level actions and score their usefulness.

The language model component of the SayCan system may give action suggestions regardless of the actual environment where the robot is located, for example, if there is no sponge next to it. So, the researchers used a separate perception module to leverage the robot's sensors to evaluate the scene and determine the current feasibility of performing each low-level action. Combining the LLM's usefulness assessment of each action with the perception module's feasibility assessment of each action, the next optimal action can be derived.
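The combination step can be sketched as follows. This is a minimal illustration rather than the SayCan implementation: it assumes the two assessments are numeric scores between 0 and 1 and combines them by multiplication, and the action names and numbers are made up for the example.

```python
def choose_action(
    usefulness: dict[str, float], feasibility: dict[str, float]
) -> tuple[str, dict[str, float]]:
    """Pick the action with the highest combined score.

    usefulness:  language-model score for how much each action helps the instruction.
    feasibility: perception-module score for whether each action can be done right now.
    """
    combined = {
        action: usefulness[action] * feasibility.get(action, 0.0)
        for action in usefulness
    }
    best = max(combined, key=combined.get)
    return best, combined


# Instruction: clean up water spilled on the table. No sponge is nearby.
usefulness = {"find a sponge": 0.6, "find a towel": 0.3, "go to the table": 0.1}
feasibility = {"find a sponge": 0.0, "find a towel": 0.9, "go to the table": 0.8}

best, combined = choose_action(usefulness, feasibility)
print(best)  # find a towel
```

The language model alone would prefer the sponge, but because the perception score for that action is zero, the combined score rules it out and the towel wins.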

Although SayCan physically interacts with the real world, the way it learns and uses language is still very different from humans. The language models included in systems like SayCan are pre-trained to perform sequence prediction in the disembodied setting of plain-text datasets. They did not learn language by talking to other language speakers.

SayCan does offer a glimpse of future language-using systems, but in today's systems the role of language is very limited. Users issue commands to the system in natural language, and the system generates interpretable natural-language descriptions of its actions. However, this tiny range of language use is simply not comparable to the scale of collective human activity supported by language.

So, even for embodied AI systems that include LLM, we must choose words carefully to describe them.

7. Can LLMs reason?

Now we can deny that ChatGPT has beliefs, but can it really reason?

This problem is more difficult because in formal logic, reasoning is content neutral. For example, no matter what the premise is, the inference rule of "affirming the antecedent" (modus ponens) is valid:

If: all people are mortal and Socrates is a human being; then: Socrates is mortal.
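The content neutrality of this rule can be made explicit in a proof assistant. The Lean 4 sketch below (the names `Person`, `Human`, `Mortal`, and `socrates` are illustrative) proves the conclusion for arbitrary predicates, so the proof never depends on what "human" or "mortal" actually mean.

```lean
-- The same proof works for any type and any two predicates on it.
theorem socrates_mortal {Person : Type} (Human Mortal : Person → Prop)
    (socrates : Person)
    (h_all : ∀ p, Human p → Mortal p)  -- all humans are mortal
    (h_soc : Human socrates)           -- Socrates is human
    : Mortal socrates :=
  -- Instantiate the universal premise at `socrates`, then apply modus ponens.
  h_all socrates h_soc
```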

The content neutrality of logic seems to mean that we cannot fault LLMs on reasoning merely because they lack access to an external reality against which truth and falsity are measured. But even so, when we prompt ChatGPT with "All people are mortal, Socrates is a human, then", we are not asking the model to perform hypothetical reasoning. We are asking: given the statistical distribution of words in a public corpus, which words are likely to follow the sequence "All people are mortal, Socrates is a human, then"?

Moreover, more complex reasoning problems involve multiple reasoning steps, and thanks to clever prompt engineering, LLMs can be applied effectively to multi-step reasoning without further training. For example, in chain-of-thought prompting, a prompt prefix submitted to the model before the user's query contains some examples of multi-step reasoning with all intermediate steps stated explicitly. Including a prompt prefix in this chain-of-thought style encourages the model to generate the subsequent sequence in the same style, that is, as a series of explicit reasoning steps leading to a final answer.

As usual, the real question posed to the model is of the form "given the statistical distribution of words in a public corpus, which words are likely to follow the sequence S", where in this case the sequence S is the chain-of-thought prompt prefix plus the user's query. The sequence of tokens most likely to follow S will have a similar form to the sequences found in the prompt prefix, that is, it will contain multiple reasoning steps, so these are what the model generates.

It is worth noting that not only does the model's response take the form of a multi-step argument, but the argument in question is often (but not always) valid, and the final answer is usually (but not always) correct. To the extent that an appropriately prompted LLM appears to reason correctly, it does so by imitating well-formed arguments in its training set and/or prompt.
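Constructing the sequence S described here is plain string assembly. The sketch below is a hypothetical illustration (the `build_cot_prompt` helper, the "Q:/A:" layout, and the worked example are assumptions, not taken from any specific paper): it joins worked examples with explicit intermediate steps and then appends the user's query with an empty answer slot for the model to continue.

```python
def build_cot_prompt(
    examples: list[tuple[str, list[str], str]], query: str
) -> str:
    """Build S = chain-of-thought prompt prefix + user query."""
    blocks = []
    for question, steps, final_answer in examples:
        # Every intermediate step is written out so the model imitates the style.
        reasoning = " ".join(steps)
        blocks.append(f"Q: {question}\nA: {reasoning} The answer is {final_answer}.")
    # The query ends with an open "A:" for the model to continue.
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


examples = [
    (
        "What is 34957 plus 70764?",
        ["34957 plus 70000 is 104957.", "104957 plus 764 is 105721."],
        "105721",
    ),
]
prompt = build_cot_prompt(examples, "What is 120 plus 345?")
print(prompt)
```

Nothing in this construction performs reasoning; it only shapes the prefix so that step-by-step text becomes the most likely continuation.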

But, can this kind of imitation constitute real reasoning? Even if today's models occasionally make mistakes, can these errors be narrowed down further so that the model's performance is indistinguishable from that of a hard-coded inference algorithm?

Perhaps the answer is indeed "yes", but how do we know? How can we trust such a model?

The sequences of sentences generated by theorem provers are logically faithful because they are the result of underlying computational processes whose causal structure reflects the inferential structure of the theorem in question. One way to build trustworthy reasoning systems using LLMs is to embed them into algorithms that implement the same causal structure. But if we insist on a pure LLM, then the only way to be fully convinced of the arguments it generates is to reverse engineer it and discover emergent mechanisms that comply with the requirements of faithful inference. In the meantime, we should be cautious in how we describe what these models do.


How do I use the Chinese version of ChatGPT? Explanation of registration procedures and feesMay 14, 2025 am 04:56 AMChatGPT Chinese version: Unlock new experience of Chinese AI dialogue ChatGPT is popular all over the world, did you know it also offers a Chinese version? This powerful AI tool not only supports daily conversations, but also handles professional content and is compatible with Simplified and Traditional Chinese. Whether it is a user in China or a friend who is learning Chinese, you can benefit from it. This article will introduce in detail how to use ChatGPT Chinese version, including account settings, Chinese prompt word input, filter use, and selection of different packages, and analyze potential risks and response strategies. In addition, we will also compare ChatGPT Chinese version with other Chinese AI tools to help you better understand its advantages and application scenarios. OpenAI's latest AI intelligence

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AM

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AMThese can be thought of as the next leap forward in the field of generative AI, which gave us ChatGPT and other large-language-model chatbots. Rather than simply answering questions or generating information, they can take action on our behalf, inter

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AM

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AMEfficient multiple account management techniques using ChatGPT | A thorough explanation of how to use business and private life! ChatGPT is used in a variety of situations, but some people may be worried about managing multiple accounts. This article will explain in detail how to create multiple accounts for ChatGPT, what to do when using it, and how to operate it safely and efficiently. We also cover important points such as the difference in business and private use, and complying with OpenAI's terms of use, and provide a guide to help you safely utilize multiple accounts. OpenAI
