Kavita Bala, Dean of Cornell School of Computing: What Is the "Metaverse"? God's Eye May Be Born Through AI

This article is reproduced from Lei Feng.com. To reprint it, please apply for authorization on the official Lei Feng.com website.

In the past few years, my research has focused mainly on visual appearance and visual understanding, at scales ranging from the micron to the whole world. Before I begin, let me show you an interesting example: a film clip in which the protagonist sees the world through a visual interface.

As he walks through the real world, a stream of text appears on his visual interface. He is a car enthusiast, so the interface shows him a wealth of information about the cars around him:

From a single photo, the interface can tell him everything about a car. Advancing this technology requires research in computer vision and visual understanding.

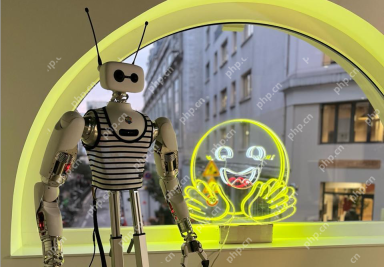

As he keeps walking and gets closer to the models in the scene, you discover that they are not real people, even though they look very realistic. Achieving this requires research on realistic appearance.

He then walks up to a shop window. This time his visual interface shows him information about every product on display and even simulates how a product would look when worn, so he can experience it without touching it.

Achieving the effect in this video requires a technique called inverse graphics, which digitizes all the attributes of a product so that we can interact with it.

I show these examples to illustrate the range of technologies we are developing. You have probably heard a lot about augmented reality and mixed reality; the technologies I just described are the ones now driving augmented reality forward. Today I will focus on the visual technologies.

In computer graphics, a model that looks so realistic that you cannot tell whether it is real or fake is said to have a photorealistic appearance. Another direction in the field starts from a photo of an object and asks how we can understand all of the object's attributes from it; building on that, we can go on to understand the attributes of the world.

These are the three major contents I want to talk about today:

- Physics-based visual appearance models

- Inverse graphics

- World-scale visual discovery

1 Physics-based visual appearance model

Let’s start with physics-based graphics.

First, I would like to introduce a well-known test: the Cornell box test, which assesses the accuracy of rendering software by comparing a rendered scene with a photograph of the same physical scene. Of the two pictures I am showing you, one is rendered and the other is real: the left is a photograph of the real scene, and the right is the rendering.
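The talk does not say how the rendering and the photograph were scored against each other. As a rough illustration only, a per-pixel error metric such as RMSE or PSNR is one common way to quantify how far a rendering is from a photo. The helper names `rmse` and `psnr` and the toy pixel values below are hypothetical, not part of the original test.

```python
import math
from typing import Sequence


def rmse(rendered: Sequence[float], photo: Sequence[float]) -> float:
    """Root-mean-square per-pixel difference between two flattened images."""
    if len(rendered) != len(photo) or not photo:
        raise ValueError("images must be non-empty and have the same number of pixels")
    squared = sum((r - p) ** 2 for r, p in zip(rendered, photo))
    return math.sqrt(squared / len(photo))


def psnr(rendered: Sequence[float], photo: Sequence[float], peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite when the images match exactly."""
    error = rmse(rendered, photo)
    return math.inf if error == 0 else 20.0 * math.log10(peak / error)


# Hypothetical 2x2 grayscale images with values in [0, 1], flattened row by row.
photo = [0.80, 0.75, 0.20, 0.10]
rendered = [0.78, 0.76, 0.22, 0.10]
print(f"RMSE = {rmse(rendered, photo):.4f}, PSNR = {psnr(rendered, photo):.2f} dB")
```

A lower RMSE (higher PSNR) means the rendering is numerically closer to the photo, though a perceptual test like the one described relies on human judgment rather than a single number.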

People worked for years to produce renderings that pass this test, that is, images that cannot be told apart from the photograph. But the real world is far more complex than a Cornell box. It contains many kinds of materials, such as the fabrics, skin, leaves, and food shown in this picture. People constantly interact with the world and judge whether what they see is real. When we want to reproduce the realistic look of the model shown at the lower left, representing these complex materials is a major challenge, and it is a problem I have studied for many years.

So I am going to talk about how to capture the appearance of fabric and cloth accurately. Let's start with a question. Look at these two pictures. As a human, you immediately recognize that the left is velvet and the right is a shiny, silk-like material. Why can you tell immediately? What makes velvet look like velvet, and what makes silk look like silk rather than velvet?

The answer is: structure.

The two fabrics look different because they are structured differently; structure is the essence of their visual difference. If we capture the structure, we capture their visual essence.

So in our first project, we looked at micro-CT scans of these materials.

In the micro-CT scan of velvet, we can see that velvet is a furry material.

The structure of silk is completely different. Silk is very tightly woven, with the warp and weft forming a specific pattern. This tight structure is what gives silk its shine.

This shows that once we capture a material's microstructure, we have essentially captured its appearance model. Even when a material is very complex, its appearance still follows from its structure.

Once we have the structure, we can add information about its optical properties, such as color. This information is enough to give us a complete model that lets us recreate the material's realistic appearance.

As shown in the picture, by capturing the structural characteristics of the two fabrics, we successfully reproduced the appearance of velvet and silk.

We have done a lot of work on putting these models into practice and thinking about their real-world applications. We believe this tool will make digital prototyping easier for industrial designers, textile designers, and others, giving them the ability to simulate the appearance of real woven fabrics.

On an industrial loom, real yarn is loaded onto the bobbins, and once a weave pattern is specified, the loom produces a fabric like the one shown on the right below. What we want to build is, in effect, a modern visual Turing test: a fully digital pipeline that combines CT scans and photos to achieve the same result as the industrial loom.

This virtual yet realistic visual effect allows designers to make important decisions without actually manufacturing the fabric.

We created a low-dimensional model with 22 parameters that represents the material structure more intuitively. With this tool, designers gain much greater control.

And these 22 parameters will lead to the second topic I am going to talk about, inverse graphics.

2 Inverse Graphics

The second problem we faced is this: once we have these models, how do we fit them to real objects? This is also an important topic in computer graphics research.

Let’s start with the relationship between light and the surface of an object.

When light hits a metal surface, it is reflected. For other materials, such as skin, food, and fabric, light enters the surface and interacts with the material beneath it before exiting. This is called subsurface scattering.

As the picture above shows, we judge whether sushi is appetizing by the gloss and freshness of its surface. So to simulate the appearance of such an object, we need to understand what happens when light hits its surface.

Caption: End-to-end pipeline

Ideally, we would have a learned representation such that, given a photo, we could identify the material properties and parameters of the objects in it. We could also recover three parameters that govern scattering: how far light travels in the medium, how much it spreads, and the albedo of the material, that is, how much of the light is scattered rather than absorbed.
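The talk does not name a specific parameterization for these three quantities. A common choice in rendering, assumed here, maps them to an extinction coefficient `sigma_t` (mean free path `1 / sigma_t`), a single-scattering albedo, and a Henyey-Greenstein anisotropy `g`. The sketch below shows how each parameter enters a simulation; the class and function names and the numeric values are hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ScatteringParams:
    sigma_t: float  # extinction coefficient (1 / length): how far light travels before interacting
    albedo: float   # single-scattering albedo in [0, 1]: fraction scattered rather than absorbed
    g: float        # Henyey-Greenstein anisotropy in (-1, 1): how forward-peaked the spread is


def sample_free_path(params: ScatteringParams, rng: random.Random) -> float:
    """Distance to the next scattering or absorption event; exponential with mean 1 / sigma_t."""
    return -math.log(1.0 - rng.random()) / params.sigma_t


def hg_phase(cos_theta: float, g: float) -> float:
    """Henyey-Greenstein phase function, normalized to integrate to 1 over the sphere."""
    denom = (1.0 + g * g - 2.0 * g * cos_theta) ** 1.5
    return (1.0 - g * g) / (4.0 * math.pi * denom)


medium = ScatteringParams(sigma_t=2.0, albedo=0.9, g=0.8)  # illustrative values only
rng = random.Random(0)
paths = [sample_free_path(medium, rng) for _ in range(100_000)]
print(sum(paths) / len(paths))  # close to 1 / sigma_t = 0.5
```

A larger `sigma_t` keeps light closer to where it entered, `albedo` controls how much survives each event, and `g` near 1 makes light continue mostly forward.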

Since we now have very good physically based renderers that can simulate the entire physical process of light interacting with an object's surface, I think we already have the ability to build this kind of pipeline.

If we combine the physically based renderer with the learned representation into this end-to-end pipeline, compare the output image with the input image, and minimize the loss, we can recover the material properties (the material π in the middle of the picture above).
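The loop of "render, compare with the photo, minimize the loss" can be sketched with a deliberately tiny stand-in. This is not the pipeline from the talk: it assumes a one-parameter Lambertian "renderer" with known geometry and replaces the learned representation with plain gradient descent, just to show how a gradient of the image loss recovers a material parameter. All names are hypothetical.

```python
import math
from typing import List


def render(albedo: float, shading: List[float]) -> List[float]:
    """Toy 'renderer': each Lambertian pixel is albedo times its clamped cosine term."""
    return [albedo * s for s in shading]


def recover_albedo(photo: List[float], shading: List[float],
                   init: float = 0.5, lr: float = 0.1, steps: int = 500) -> float:
    """Fit the albedo by gradient descent on the mean squared image loss."""
    albedo = init
    n = len(photo)
    for _ in range(steps):
        predicted = render(albedo, shading)
        # Chain rule: dLoss/dalbedo = mean(2 * (predicted - photo) * dpredicted/dalbedo),
        # and dpredicted/dalbedo is just the shading term s.
        grad = sum(2.0 * (p - y) * s for p, y, s in zip(predicted, photo, shading)) / n
        albedo -= lr * grad
    return albedo


# Known geometry: the cosine between surface normal and light for five pixels.
shading = [max(0.0, math.cos(angle)) for angle in (0.0, 0.3, 0.6, 0.9, 1.2)]
photo = render(0.7, shading)  # synthetic "photo" with a hidden albedo of 0.7
print(recover_albedo(photo, shading))  # converges to about 0.7
```

Real inverse rendering optimizes many more parameters through a full physically based renderer, but the structure is the same: the loss only improves if the renderer's output can be differentiated with respect to the unknowns.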

To do this effectively, we need to combine learning and physics, invert the physical rendering process, and solve for the parameters that produced the image.

However, recovering shape and material is very difficult. The process above requires the rendering engine R to be differentiable, and many recent studies address this issue.

To recover the appearance of a product as in the movie scene, we need a differentiable rendering pipeline: we must be able to differentiate the loss with respect to the properties we want to recover. Here is an example of recovering material and geometry: using the chain rule, together with samples taken along the edges of the surface, we can obtain the derivatives we need.
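Why edges need special samples can be seen in a one-dimensional toy, built here under simplifying assumptions (a 1D pixel with constant radiance on each side of a single edge). A point-sampled pixel estimate is piecewise constant in the edge position `t`, so its derivative is zero almost everywhere even though the true pixel value changes. The edge term, the radiance jump across the discontinuity, supplies the missing derivative. The function names are hypothetical; real differentiable renderers handle silhouette edges of 3D geometry in screen space.

```python
def pixel_value(t: float, a: float, b: float) -> float:
    """Exact pixel integral over [0, 1]: radiance a left of an edge at t, radiance b to its right."""
    return a * t + b * (1.0 - t)


def point_sampled_value(t: float, a: float, b: float, samples: list) -> float:
    """Monte Carlo estimate of the same pixel from fixed sample positions in [0, 1)."""
    return sum(a if u < t else b for u in samples) / len(samples)


def edge_gradient(a: float, b: float) -> float:
    """d(pixel)/dt from the discontinuity itself: the radiance jump across the edge.

    In this 1D toy the edge moves at unit speed with t, so no extra Jacobian factor appears.
    """
    return a - b


samples = [(i + 0.5) / 64 for i in range(64)]  # stratified positions, fixed across evaluations
t, a, b, h = 0.4, 1.0, 0.25, 1e-6
fd_point = (point_sampled_value(t + h, a, b, samples) - point_sampled_value(t, a, b, samples)) / h
print(fd_point)             # 0.0: no sample lies in [t, t + h), so the estimate never changes
print(edge_gradient(a, b))  # 0.75, matching d/dt of a * t + b * (1 - t)
```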

This gives us the following process for recovering an object's appearance, as shown below. First, we take a series of pictures of the object with a mobile phone. We then initialize from these pictures, optimize material and shape, and refine them further through differentiable rendering. Finally, the object can be rendered as a realistic simulation, which can be used in augmented reality, virtual reality, and other applications.
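The "optimize material and shape together from several photos" step can be sketched in miniature. The assumptions here are loud: a single flat Lambertian patch whose only shape parameter is a tilt angle, photographed under several known light directions, with albedo and tilt fitted jointly by gradient descent. This is an illustrative stand-in, not the pipeline from the talk, and all names and values are hypothetical.

```python
import math
from typing import List, Tuple


def render_patch(albedo: float, tilt: float, light_angles: List[float]) -> List[float]:
    """Toy Lambertian patch photographed under several known lights (angles in radians)."""
    return [albedo * max(0.0, math.cos(tilt - phi)) for phi in light_angles]


def fit_patch(photos: List[float], light_angles: List[float], albedo: float = 0.5,
              tilt: float = 0.0, lr: float = 0.5, steps: int = 3000) -> Tuple[float, float]:
    """Jointly optimize material (albedo) and shape (tilt) by gradient descent on the image loss."""
    n = len(photos)
    for _ in range(steps):
        g_albedo = g_tilt = 0.0
        for phi, observed in zip(light_angles, photos):
            c = math.cos(tilt - phi)
            if c <= 0.0:
                continue  # pixel in shadow: clamped to 0, so it contributes no gradient
            residual = albedo * c - observed
            g_albedo += 2.0 * residual * c / n
            g_tilt += 2.0 * residual * (-albedo * math.sin(tilt - phi)) / n
        albedo -= lr * g_albedo
        tilt -= lr * g_tilt
    return albedo, tilt


lights = [-0.6, -0.2, 0.2, 0.6, 1.0]
photos = render_patch(0.8, 0.3, lights)  # synthetic photos with hidden albedo 0.8 and tilt 0.3
print(fit_patch(photos, lights))         # approaches (0.8, 0.3)
```

Several photos under different lighting are what make the problem well posed: from a single photo, a darker material and a surface turned away from the light can produce the same pixel value.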

Subsurface scattering is a very important phenomenon in visual simulation. The picture below shows a work by several artists called Cubes: 2.5 cm cubes made from 98 kinds of food. Each food has a different and complex surface, which sparked our interest.

Because food surfaces are so complex, subsurface scattering must be taken into account when recovering their material properties. The details will appear in our upcoming paper: we developed a fully differentiable rendering pipeline and used it to recover material properties centered on subsurface scattering. In the end, we recovered the different materials and shapes of two fruits and successfully reproduced the appearance of the kiwi and dragon fruit cubes.

Illustration: Process of restoring kiwi and dragon fruit cubes
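To see what "light enters the surface and re-emerges" means numerically, here is a forward-only Monte Carlo random walk. It is a toy under stated assumptions (a semi-infinite medium, normal incidence, no refraction at the boundary, isotropic scattering) and is not differentiable, unlike the pipeline described above. The function name and parameters are hypothetical.

```python
import math
import random


def diffuse_reflectance(albedo: float, n_paths: int = 20_000,
                        max_events: int = 1_000, seed: int = 0) -> float:
    """Monte Carlo estimate of light re-emerging from a semi-infinite scattering medium.

    Simplifying assumptions: light enters at normal incidence, the boundary does not
    refract or reflect, scattering is isotropic, and distances are measured in mean free
    paths (1 / sigma_t), so sigma_t does not affect the result for a semi-infinite medium.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        depth, mu, weight = 0.0, 1.0, 1.0  # mu = direction cosine; +1 points into the medium
        for _ in range(max_events):
            depth += mu * -math.log(1.0 - rng.random())  # exponential free flight
            if depth < 0.0:           # crossed the surface: this light re-emerges
                total += weight
                break
            weight *= albedo          # the absorbed fraction is lost at each event
            if weight < 1e-6:         # negligible energy left; stop tracing (small, deliberate bias)
                break
            mu = 2.0 * rng.random() - 1.0  # isotropic scattering: cos(theta) uniform in [-1, 1]
    return total / n_paths


for a in (0.3, 0.6, 0.9):
    print(a, round(diffuse_reflectance(a), 3))
```

Materials with high albedo, such as many foods, send a large share of light back out after many internal bounces, which is why they cannot be modeled as simple surface reflectors.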

In this research we combined learning and physics, and we drew three key lessons:

- Understand visual phenomena;

- Before recovering an object's appearance, first predict how it will look;

- User control.

3 World-scale visual discovery

Remember the scene in the movie where the protagonist walks down the street, looks at the products in a shop window, and his visual interface tells him everything about the objects he sees?

This is fine-grained object recognition, a large research field within computer vision. It has already been applied in many industries, such as product identification and real estate.

Caption: Precise information provided by fine-grained object recognition

For example, in this picture, fine-grained object recognition can tell that this person is carrying an x. Here x does not just mean a handbag (most people can tell that much) but a specific brand of handbag, a level of precise knowledge beyond most people.

Essentially, we can provide expert-level information through visual recognition, or even expert-level information in more than one field, and I think the research in this area is very exciting.
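The talk does not describe the recognizer's internals here. One common approach, assumed for this sketch, embeds each image as a vector with a trained network and identifies a product by finding the most similar catalog embedding. The catalog names and vectors below are made up, and `cosine` and `identify` are hypothetical helpers.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def identify(query: Sequence[float], catalog: Dict[str, Sequence[float]],
             k: int = 1) -> List[Tuple[str, float]]:
    """Return the k catalog entries whose embeddings are most similar to the query."""
    scored = sorted(((cosine(query, emb), name) for name, emb in catalog.items()), reverse=True)
    return [(name, score) for score, name in scored[:k]]


# Hypothetical catalog: in practice these vectors would come from a trained image encoder.
catalog = {
    "handbag_brand_A_model_1": [0.9, 0.1, 0.0],
    "handbag_brand_B_model_7": [0.7, 0.6, 0.1],
    "campfire_stove_by_artist_C": [0.0, 0.2, 0.95],
}
query = [0.68, 0.62, 0.12]  # embedding of the photo being identified (made up)
print(identify(query, catalog, k=2))
```

Because the answer is a catalog entry rather than a broad class label, this kind of retrieval can return a specific brand and model instead of just "handbag".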

This picture shows a campfire stove. Some people may not be able to tell what this object is for just by looking at it, but fine-grained object recognition can not only tell us that it is a campfire stove but also provide the name of the piece, where it can be purchased, and the artist who designed it.

Illustration: IKEA APP

We have launched this approach in IKEA's augmented reality app, integrating visual recognition with virtual rendering. With that, our earlier ideas about visual interfaces began to become reality.

Note: The interface of Meta’s shopping AI GrokNet

The research shown in the figure is part of Meta's shopping AI, GrokNet. GrokNet's slogan is to make every image shoppable; the goal of my research team is to make every image understandable.

What I have described so far is relatively basic research. What we are doing now is collecting visual information on an unprecedented scale, including photos, videos, and even satellite imagery. The number of satellites has grown significantly over the years: there are now about 1,500, and together they upload 100 terabytes of data every day. If we can understand satellite imagery, we can understand where the world is heading and what is happening in it, which is a very exciting research direction.
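To put the quoted figures in perspective, here is a back-of-the-envelope calculation. It assumes decimal units (1 TB = 10^12 bytes) and that the 100 TB is spread evenly across the roughly 1,500 satellites and across the day; neither assumption comes from the talk.

```latex
\begin{aligned}
\frac{100\ \text{TB/day}}{1500\ \text{satellites}} &\approx 0.067\ \text{TB} \approx 67\ \text{GB per satellite per day},\\
\frac{100 \times 10^{12}\ \text{bytes}}{86\,400\ \text{s/day}} &\approx 1.16 \times 10^{9}\ \text{bytes/s} \approx 1.16\ \text{GB/s sustained}.
\end{aligned}
```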

Caption: Can we understand pictures from a world scale?

If we can understand pictures at world scale, we can use them to answer questions like these: How do we live? What do we wear? What do we eat? How does our behavior change over time? How has the Earth changed over time?

So we began working on these questions with anthropologists and sociologists, who were fascinated by them but lacked a powerful tool for studying them. One anthropologist we worked with was very interested in how clothing changes around the world, and we found that this question had many facets.

Why do people in different regions on the earth dress differently? We think there are several reasons:

- Weather is a very important factor. In summer we dress differently from people in California because the weather here is cooler;

- Occasions such as parties or sporting events call for specific clothing;

- Cultural differences make clothing vary across the world;

- Fashion trends are also an influencing factor.

So we began studying this problem by analyzing a set of about 8 million images of people from around the world. We developed a simple recognition algorithm that identifies what people are wearing in terms of 12 attributes.

And what did we discover from this research?

Our analysis revealed certain patterns. For example, people in the upper right corner tend to wear green, while people in the lower left corner tend to wear red.

The big-data analysis showed that some findings matched our expectations. For example, weather does affect clothing: people wear thick clothes in winter and light clothes in summer, which makes sense. But the data also showed some strange phenomena. As the figure below shows, in Chicago over the past few years there were several points in time when wearing green peaked.

These time points are all in March every year. After investigation, it turns out that these time points are St. Patrick’s Day in Chicago:

This is a very important local festival, and people in Chicago will choose to wear green on this day. If you are not a local, you may not know about this cultural event.
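The talk does not say how these peaks were detected. One simple approach, shown here as an assumption rather than the team's method, computes, for each city and time bin, the fraction of images showing an attribute and flags bins that sit well above the usual level using a z-score rule. The counts below are made up for illustration.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def attribute_fraction(counts: List[Tuple[int, int]]) -> List[float]:
    """Per time bin, the fraction of images showing an attribute (e.g. green clothing)."""
    return [hits / total if total else 0.0 for hits, total in counts]


def find_peaks(fractions: List[float], z: float = 2.0) -> List[int]:
    """Indices of bins whose fraction exceeds mean + z * standard deviation."""
    mu, sd = mean(fractions), pstdev(fractions)
    if sd == 0:
        return []
    return [i for i, f in enumerate(fractions) if f > mu + z * sd]


# Made-up monthly counts for one city over two years: (images with green, all images).
counts = [(50, 1000)] * 24
counts[2] = counts[14] = (200, 1000)  # index 2 and 14 are March if index 0 is January
print(find_peaks(attribute_fraction(counts)))  # [2, 14]
```

Normalizing by the total number of images per bin matters: without it, a month with simply more photos would look like a spike in green clothing.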

Note: Cultural events celebrated around the world, for which people wear clothing of particular colors

By identifying changes in clothing in big data, we can detect local cultural and political events and thereby understand regional cultures around the world. This is how we extract meaning from pictures at world scale.

Original video link: https://www.youtube.com/watch?v=kaQSc4iFaxc
