Diffusion + object detection = controllable image generation! The Chinese team proposed GLIGEN to perfectly control the spatial position of objects

With the open-sourcing of Stable Diffusion, generating images from natural language has become increasingly popular. Many problems with AIGC have also been exposed: the AI cannot draw hands, cannot understand action relationships, struggles to control the position of objects, and so on.

The main reason is that the "input interface" consists only of natural language, which cannot give fine-grained control over the image.

Recently, researchers from the University of Wisconsin-Madison, Columbia University and Microsoft proposed a new method, GLIGEN, which uses grounding inputs as conditions to extend the functionality of existing pre-trained text-to-image diffusion models.

Paper link: https://arxiv.org/pdf/2301.07093.pdf

Project home page: https://gligen.github.io/

Demo link: https://huggingface.co/spaces/gligen/demo

To retain the large amount of conceptual knowledge in the pre-trained model, the researchers chose not to fine-tune it. Instead, a gating mechanism injects the different grounding conditions into new trainable layers, enabling control over open-world image generation.

Currently GLIGEN supports four inputs.

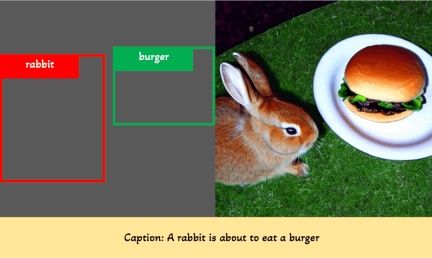

(Upper left) Text entity + box (Upper right) Image entity + box

(Bottom left) Image style + text + box (Bottom right) Text entity + keypoints

The experimental results also show that GLIGEN's zero-shot performance on COCO and LVIS is significantly better than current supervised layout-to-image baselines.

Controllable image generation

Before diffusion models, generative adversarial networks (GANs) were long the leaders in image generation, and their latent spaces and conditional inputs have been thoroughly studied in terms of "controllable manipulation" and "generation".

Text-conditioned autoregressive and diffusion models show remarkable image quality and concept coverage, thanks to their more stable learning objectives and large-scale training on web image-text paired data. They quickly broke into the mainstream and became tools that assist art, design and creation.

However, existing large-scale text-to-image generation models cannot be conditioned on input modalities other than text. They lack the ability to localize concepts precisely or to use reference images to control generation, which limits how much information can be expressed.

For example, it is difficult to describe the precise location of an object using text, whereas bounding boxes or keypoints can do so easily.

Some existing tools, such as inpainting and layout2img generation, can take modalities other than text as input, but these inputs are rarely combined for controllable text2img generation.

In addition, previous generative models are usually trained independently on task-specific datasets, whereas in image recognition the long-standing paradigm is to build task-specific models starting from a foundation model pre-trained on "large-scale image data" or "image-text pairs".

Diffusion models have been trained on billions of image-text pairs. A natural question is: can we build on existing pre-trained diffusion models and give them new conditional input modalities?

Because pre-trained models hold a large amount of conceptual knowledge, it may be possible to achieve better performance on other generation tasks while gaining more controllability than existing text-to-image generation models.

GLIGEN

Based on these goals and ideas, GLIGEN keeps the text caption as input but also enables other input modalities, such as bounding boxes for grounding concepts, reference images for grounding, and keypoints for grounding parts.

The key problem here is to retain a large amount of original conceptual knowledge in the pre-trained model while learning to inject new grounding information.

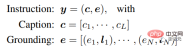

To prevent knowledge forgetting, the researchers propose freezing the original model weights and adding new trainable gated Transformer layers to absorb the new grounding inputs. The following uses bounding boxes as an example.

Instruction input

Each grounded text entity is paired with a bounding box, given by the coordinates of its upper-left and lower-right corners.

Note that existing layout2img work usually requires a concept dictionary and can only handle closed-set entities (such as the COCO categories) at evaluation time. The researchers found that encoding the entities with the same text encoder that encodes the image caption allows the localization information learned from the training set to generalize to other concepts.
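As a rough illustration of the input described above, a grounded entity can be held as a phrase plus the two corners of its box. This is a minimal sketch rather than GLIGEN's actual code: the `GroundedEntity` class, the `normalize_box` helper and the rescaling of pixel coordinates to [0, 1] are assumptions introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroundedEntity:
    """Hypothetical container: a text phrase tied to a box given by
    its upper-left (x_min, y_min) and lower-right (x_max, y_max) corners."""
    phrase: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def normalize_box(entity: GroundedEntity, width: int, height: int) -> GroundedEntity:
    """Rescale pixel coordinates to [0, 1] (an assumption made for this sketch)."""
    if not (0 <= entity.x_min < entity.x_max <= width):
        raise ValueError("need 0 <= x_min < x_max <= width")
    if not (0 <= entity.y_min < entity.y_max <= height):
        raise ValueError("need 0 <= y_min < y_max <= height")
    return GroundedEntity(
        entity.phrase,
        entity.x_min / width,
        entity.y_min / height,
        entity.x_max / width,
        entity.y_max / height,
    )


# The caption stays free-form text; each entity adds a phrase and a location.
caption = "a blue jay standing on a brown wooden table"
entities = [
    GroundedEntity("blue jay", 128, 64, 384, 320),
    GroundedEntity("brown wooden table", 0, 288, 512, 512),
]
normalized = [normalize_box(e, width=512, height=512) for e in entities]
```

Because the phrase is free text rather than an index into a fixed concept dictionary, nothing in this representation restricts the entity to a closed set of categories.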

Training data

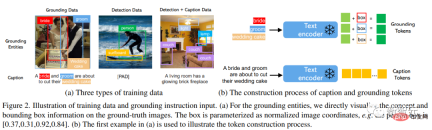

The training data for grounded image generation requires both text c and grounding entities e as conditions. In practice, the data requirements can be relaxed by considering more flexible inputs.

There are three main types of data:

1. Grounding data

Each image is associated with a caption that describes the entire image; noun entities are extracted from the caption and labeled with bounding boxes.

Since the noun entities are taken directly from natural-language captions, they can cover a richer vocabulary, which benefits grounded generation with an open-world vocabulary.

2. Detection data

The noun entities are predefined closed-set categories (for example, the 80 object categories in COCO). The null caption token from classifier-free guidance is used as the caption.

The amount of detection data (on the order of millions) is larger than the grounding data (on the order of thousands), so it greatly increases the overall training data.

3. Detection and caption data

The noun entities are the same as in detection data, while each image is separately described by a text caption. The noun entities may therefore not fully match the entities in the caption.

For example, the caption may give only a high-level description of a living room without mentioning the objects in the scene, while the detection annotations provide finer object-level details.
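The three data types differ mainly in where the caption and the entity names come from. The sketch below shows one way to map each type to a (caption, entities) condition. The function name `build_condition`, the type labels and the use of an empty string for the null caption are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Entity = Tuple[str, Box]                 # (noun phrase or class name, box)

# Stand-in for the null caption used in classifier-free guidance (assumption).
NULL_CAPTION = ""


def build_condition(
    kind: str, entities: List[Entity], caption: Optional[str] = None
) -> Tuple[str, List[Entity]]:
    """Return the (caption, entities) pair that conditions one training sample."""
    if kind == "grounding":
        # Entities are noun phrases extracted from the caption itself.
        if caption is None:
            raise ValueError("grounding data needs a caption")
        return caption, entities
    if kind == "detection":
        # Closed-set class names with boxes; there is no caption, so use the null one.
        return NULL_CAPTION, entities
    if kind == "detection+caption":
        # Caption and boxes are annotated separately and may not mention the same things.
        if caption is None:
            raise ValueError("detection+caption data needs a caption")
        return caption, entities
    raise ValueError(f"unknown data type: {kind}")


sofa = ("couch", (0.1, 0.5, 0.7, 0.9))
print(build_condition("detection", [sofa]))
print(build_condition("detection+caption", [sofa], "a cozy living room"))
```

In the last call the caption never mentions the couch, which mirrors the living-room example above: the caption carries the scene, and the detection annotation carries the object-level detail.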

Gated attention mechanism

The researchers' goal is to give existing large-scale language-image generative models new spatial grounding capabilities.

Large-scale diffusion models have been pre-trained on web-scale image-text data to acquire the knowledge needed to synthesize realistic images from diverse and complex language instructions. Since pre-training is expensive and the resulting models perform well, it is important to retain this knowledge in the model weights while adding new capabilities. This can be achieved by adding new modules that gradually accommodate the new capabilities.

During the training process, the gating mechanism is used to gradually integrate new grounding information into the pre-trained model. This design allows flexibility in the sampling process during generation to improve quality and controllability.
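The idea of gradually integrating new information can be sketched as a gated residual connection. The article does not spell out the exact form, so this sketch assumes the gate is the tanh of a learnable scalar `gamma` that starts at zero. The `mean_grounding_layer` function is a toy stand-in for the trainable layer, not real attention.

```python
import math
from typing import Callable, List

Vector = List[float]
Layer = Callable[[List[Vector], List[Vector]], List[Vector]]


def gated_residual(
    visual: List[Vector], grounding: List[Vector], new_layer: Layer, gamma: float
) -> List[Vector]:
    """Add the new layer's output to the frozen features, scaled by tanh(gamma).

    With gamma = 0 the gate is 0 and the output equals the original features,
    so training starts from the unmodified pre-trained behaviour.
    """
    gate = math.tanh(gamma)
    update = new_layer(visual, grounding)
    return [
        [v + gate * u for v, u in zip(v_row, u_row)]
        for v_row, u_row in zip(visual, update)
    ]


def mean_grounding_layer(visual: List[Vector], grounding: List[Vector]) -> List[Vector]:
    """Toy layer: every visual token receives the mean grounding vector as its update."""
    dim = len(grounding[0])
    mean = [sum(g[i] for g in grounding) / len(grounding) for i in range(dim)]
    return [list(mean) for _ in visual]


visual = [[1.0, 2.0], [3.0, 4.0]]
grounding = [[0.5, 0.5], [1.5, 2.5]]

unchanged = gated_residual(visual, grounding, mean_grounding_layer, gamma=0.0)
opened = gated_residual(visual, grounding, mean_grounding_layer, gamma=1.0)
```

As `gamma` moves away from zero during training, the gate opens and the grounding signal contributes more, while the original weights are never touched.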

Experiments also showed that using the full model (all layers) in the first half of the sampling steps and only the original layers (without the gated Transformer layers) in the second half produces results that reflect the grounding conditions more accurately and have higher image quality.
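This two-phase sampling schedule amounts to a switch that multiplies the contribution of the new layers. The following is a minimal sketch: the function name `grounding_scale` and the parameter `tau` (the fraction of steps that use the full model) are hypothetical, with `tau = 0.5` matching the "first half" described above.

```python
from typing import List


def grounding_scale(step: int, total_steps: int, tau: float = 0.5) -> float:
    """Return 1.0 while the new grounding layers are active and 0.0 afterwards.

    Steps are counted from 0 at the start of sampling.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= tau <= 1.0:
        raise ValueError("tau must lie in [0, 1]")
    return 1.0 if step < tau * total_steps else 0.0


# Full model for the first half of the steps, original layers only for the rest.
schedule: List[float] = [grounding_scale(t, total_steps=10) for t in range(10)]
print(schedule)
```

A sampler would multiply the output of each new layer by this value before adding it to the frozen features, so a scale of 0.0 makes that step behave like the original pre-trained model.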

Experimental part

In the open-set grounded text-to-image generation task, the model is first trained using only COCO's grounding annotations (COCO2014CD) and then evaluated on whether GLIGEN can generate grounded entities outside the COCO categories.

GLIGEN can handle new concepts such as "blue jay" and "croissant", or new object attributes such as "brown wooden table", none of which appear in the training categories.

The researchers believe this is because GLIGEN's gated self-attention learns to reposition the visual features corresponding to the grounded entities in the caption for the subsequent cross-attention layer, and gains generalization ability from the text space shared by these two layers.

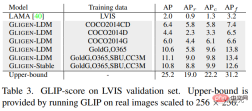

The experiments also quantitatively evaluate the model's zero-shot generation performance on LVIS, which contains 1203 long-tail object categories. GLIP is used to predict bounding boxes from the generated images and compute AP, which is called the GLIP score. The result is compared with state-of-the-art models designed for the layout2img task.

Although GLIGEN is trained only on COCO annotations, it performs much better than the supervised baselines, probably because baselines trained from scratch struggle to learn from limited annotations, while GLIGEN can draw on the large amount of conceptual knowledge in the pre-trained model.
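Detection AP is built on matching predicted boxes to reference boxes by intersection over union (IoU). The sketch below computes only that building block for two boxes in (x_min, y_min, x_max, y_max) form. It is not the GLIP score itself and does not call GLIP; the function name `iou` is chosen here for illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


requested = (0.0, 0.0, 2.0, 2.0)  # box given as the grounding condition
detected = (1.0, 1.0, 3.0, 3.0)   # box a detector might find in the generated image
overlap = iou(requested, detected)
```

A generated object counts as correctly placed when its detected box overlaps the requested box above a chosen IoU threshold, which is why a higher AP indicates better adherence to the layout.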

Overall, this paper:

1. Proposes a new text2img generation method that gives existing text2img diffusion models new grounding controllability;

2. Keeps the pre-trained weights and learns to gradually integrate new localization layers, so that the model achieves open-world grounded text2img generation with bounding box inputs, that is, it handles new localized concepts not observed in training;

3. Shows that the model's zero-shot performance on the layout2img task is significantly better than the previous state of the art, demonstrating that large-scale pre-trained generative models can improve performance on downstream tasks.
