How much potential do fixed-parameter models have? The Chinese University of Hong Kong, Shanghai AI Lab and others propose EVL, an efficient video understanding framework

Visual foundation models have developed remarkably over the past two years. On the one hand, pre-training on large-scale Internet data instills a large number of semantic concepts in these models, which gives them good generalization. On the other hand, making full use of such large datasets requires ever larger models, and these models become inefficient to transfer to downstream tasks. This is especially true for video understanding models, which must process multiple frames.

- Paper link: https://arxiv.org/abs/2208.03550

- Code link: https://github.com/OpenGVLab/efficient-video-recognition

Based on these two observations, researchers from The Chinese University of Hong Kong, Shanghai Artificial Intelligence Laboratory and other institutions proposed EVL, an efficient transfer learning framework for video understanding. By freezing the weights of the backbone foundation model, EVL saves training computation and memory; at the same time, by making use of multi-level, fine-grained intermediate features, it retains as much of the flexibility of conventional end-to-end fine-tuning as possible.

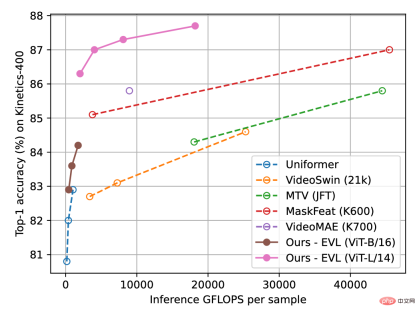

Figure 1 shows the results of EVL on the Kinetics-400 video understanding dataset. The experiments show that the method reduces training overhead while still fully exploiting the potential of visual foundation models for video understanding.

Figure 1: Kinetics-400 recognition accuracy comparison. The horizontal axis is inference computation; the vertical axis is accuracy.

Method

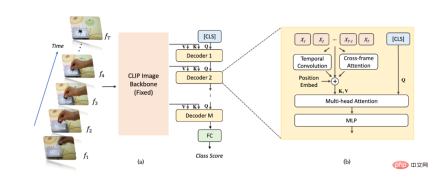

The overall structure of the algorithm is shown in Figure 2(a). For a video sample, we take T frames, feed them into an image recognition network (CLIP, for example), and extract features. Unlike conventional methods, we extract multi-layer, unpooled features from the last few layers of the image recognition network to obtain richer, finer-grained image information, and the weights of the image recognition network stay frozen throughout video training. The multi-layer feature maps are then fed in turn into a Transformer decoder that aggregates video-level information. The [CLS] feature produced by the multi-layer decoder is used to generate the final classification prediction.
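The decoding step can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation (their code is in the repository linked above): a hypothetical stand-in, `frozen_backbone_features`, replaces the frozen CLIP backbone; attention is single-head with no learned Query/Key/Value projections; and each decoder layer is reduced to one residual cross-attention update of the [CLS] query over all T × N unpooled tokens of one backbone layer. In training, only the query and the linear head would be updated, while the backbone features are only read.

```python
import math
import random

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(query, tokens):
    """Single-head cross-attention of one query over a set of tokens.
    Simplification: the tokens serve directly as keys and values."""
    d = len(query)
    weights = softmax([dot(query, t) / math.sqrt(d) for t in tokens])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(d)]

def decode(cls_query, layer_features):
    """Refine the [CLS] query with one decoder step per backbone layer.
    layer_features: list (shallow to deep) of unpooled token sets, each a
    list of T*N vectors covering every patch token of every frame."""
    q = list(cls_query)
    for tokens in layer_features:
        update = cross_attend(q, tokens)
        q = [a + b for a, b in zip(q, update)]  # residual update
    return q

def classify(cls_feature, weight, bias):
    # Linear classification head: one logit per class.
    return [dot(row, cls_feature) + b for row, b in zip(weight, bias)]

def frozen_backbone_features(num_frames, num_tokens, dim, num_layers, seed=0):
    """Hypothetical stand-in for a frozen image backbone such as CLIP:
    returns fixed random features for the last `num_layers` layers.
    Nothing here is ever updated, mirroring the frozen weights."""
    rng = random.Random(seed)
    return [[[rng.gauss(0.0, 1.0) for _ in range(dim)]
             for _ in range(num_frames * num_tokens)]
            for _ in range(num_layers)]

# Toy usage: T=4 frames, N=3 tokens per frame, D=8 channels, 2 layers, 5 classes.
T, N, D, L, C = 4, 3, 8, 2, 5
features = frozen_backbone_features(T, N, D, L)
rng = random.Random(1)
weight = [[rng.gauss(0.0, 0.1) for _ in range(D)] for _ in range(C)]
logits = classify(decode([0.0] * D, features), weight, [0.0] * C)
print(len(logits))  # 5
```

Feeding the layers from shallow to deep mirrors the step in which the multi-layer feature maps enter the decoder in turn; a standard Transformer decoder layer would additionally include learned projections, multiple heads, layer normalization and an MLP.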

As shown in Figure 2(b), because the Transformer decoder aggregates features without regard to their order, we add extra temporal modeling modules to the network to better extract position-dependent, fine-grained temporal information. Specifically, we add three types of position-dependent temporal information: first, temporal position embeddings; second, depthwise convolution along the temporal dimension; and third, attention information between adjacent frames. For the inter-frame attention, we take the Query and Key features of the corresponding layer from the image recognition network and compute the attention map between adjacent frames (unlike in the image recognition network, where the attention map is computed from the Query and Key features of the same frame). The resulting attention map explicitly reflects how objects change position between adjacent frames. A linear projection turns the attention map into a set of vectors describing object displacement, which are added element-wise to the image features.
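The first two temporal modules (temporal position embeddings and depthwise temporal convolution) can be sketched in plain Python. This is an illustration, not the paper's code: features are nested lists of shape [T][N][D], the kernel size of 3 in the example, the ordering of the two modules, and the residual addition of the convolution output are assumptions of this sketch, and in practice all weights would be learned rather than set by hand.

```python
def add_temporal_position(features, pos_emb):
    """Add one position embedding per frame index to every token of that frame.
    features: [T][N][D]; pos_emb: [T][D] (learnable in practice)."""
    return [[[x + p for x, p in zip(tok, pos_emb[t])] for tok in frame]
            for t, frame in enumerate(features)]

def depthwise_temporal_conv(features, kernels):
    """Depthwise 1-D convolution along the time axis.
    kernels: [D][K] with odd K. Each channel uses its own kernel and channels
    are never mixed; zero padding keeps the output length equal to T."""
    T, N, D = len(features), len(features[0]), len(features[0][0])
    half = len(kernels[0]) // 2
    out = [[[0.0] * D for _ in range(N)] for _ in range(T)]
    for t in range(T):
        for n in range(N):
            for d in range(D):
                acc = 0.0
                for k, w in enumerate(kernels[d]):
                    src = t + k - half  # frame index touched by kernel tap k
                    if 0 <= src < T:
                        acc += w * features[src][n][d]
                out[t][n][d] = acc
    return out

def temporal_block(features, pos_emb, kernels):
    # Position embedding first, then a residual depthwise temporal convolution
    # (the ordering and the residual form are assumptions of this sketch).
    x = add_temporal_position(features, pos_emb)
    conv = depthwise_temporal_conv(x, kernels)
    return [[[a + b for a, b in zip(ta, tb)] for ta, tb in zip(fa, fb)]
            for fa, fb in zip(x, conv)]

# Toy usage: T=3 frames, N=1 token, D=2 channels.
feats = [[[1.0, 10.0]], [[2.0, 20.0]], [[3.0, 30.0]]]
# Channel 0: identity kernel; channel 1: copy the value from the previous frame.
kernels = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
print(depthwise_temporal_conv(feats, kernels))
# [[[1.0, 0.0]], [[2.0, 10.0]], [[3.0, 20.0]]]
```

Because each channel has its own kernel, the convolution adds only D × K weights while letting every token see the same spatial position in neighboring frames, which gives the order-agnostic decoder access to frame order.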

Figure 2: EVL architecture. (a) Overall structure; (b) temporal information modeling modules.

Figure 3: Mathematical expression of the inter-frame attention features.
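The inter-frame attention module can be sketched in plain Python from its prose description: Query features of frame t are compared with Key features of frame t-1, the resulting attention map is linearly projected, and the result is added element-wise to the frame features. Details the prose leaves open are assumptions of this sketch: the scaled dot product with a row-wise softmax, a single attention head, using only the previous frame, and leaving the first frame unchanged. `proj` is a hypothetical stand-in for the learned linear projection that maps each attention row (a distribution over the previous frame's tokens) to a feature-sized displacement vector.

```python
import math

def softmax(row):
    # Numerically stable softmax over one row of scores.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def inter_frame_attention_map(q_t, k_prev):
    """Attention map between the Queries of frame t and the Keys of frame t-1.
    q_t, k_prev: [N][dk]. Row i is a distribution over the tokens of the
    previous frame, i.e. where token i's content most likely came from."""
    scale = 1.0 / math.sqrt(len(q_t[0]))
    return [softmax([scale * sum(a * b for a, b in zip(q, k)) for k in k_prev])
            for q in q_t]

def add_motion_features(features, queries, keys, proj):
    """features: [T][N][D] frame features to be enriched.
    queries, keys: [T][N][dk] from the corresponding frozen backbone layer.
    proj: [D][N] linear projection from an attention row to D channels.
    Frame 0 has no previous frame and is left unchanged (an assumption)."""
    out = [[list(tok) for tok in frame] for frame in features]
    for t in range(1, len(features)):
        attn = inter_frame_attention_map(queries[t], keys[t - 1])
        for i, row in enumerate(attn):
            motion = [sum(w * a for w, a in zip(p_row, row)) for p_row in proj]
            out[t][i] = [x + m for x, m in zip(out[t][i], motion)]  # element-wise add
    return out

# Toy example: an object at token 0 in frame 0 moves to token 1 in frame 1.
keys = [[[5.0], [0.0], [0.0]], [[0.0], [5.0], [0.0]]]
queries = keys  # hypothetical: reuse the same vectors as queries
row = inter_frame_attention_map(queries[1], keys[0])[1]
print(max(range(3), key=lambda j: row[j]))  # 0: token 1 matches token 0 of the previous frame
```

Because each attention row is projected as a whole, where its peak falls (token 0 rather than token 1 in the toy example) changes the resulting vector, which is how the added features can carry displacement information.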

Experiment

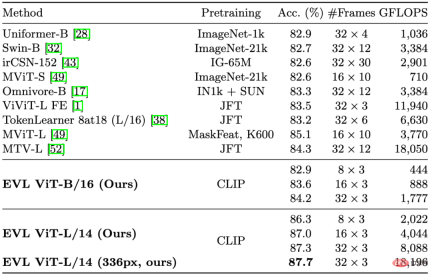

In Figure 1 and Table 1, we compare against several important prior video understanding methods. Although our focus is on reducing training overhead, our method still achieves higher accuracy than existing methods at the same amount of computation.

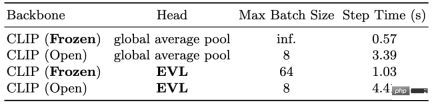

Table 2 shows the reduction in training overhead from freezing the backbone. In terms of memory, on a 16GB V100 GPU, a frozen backbone allows a per-GPU batch size of up to 64, whereas end-to-end training reaches only 8; in terms of time, the frozen backbone reduces training time by a factor of 3 to 4.

Table 3 shows how fine-grained feature maps improve recognition performance. Multi-layer, unpooled features let us retain a considerable degree of flexibility even with the backbone weights frozen. Using unpooled features brings the largest improvement (about 3%), followed by the multi-layer decoder and mid-layer features, each of which brings about a 1% improvement.

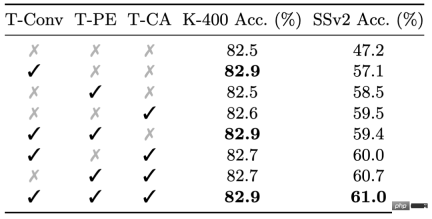

Finally, Table 4 shows the effect of the fine-grained temporal modeling modules. Although fine-grained temporal information has a limited impact on Kinetics-400, it is very important on Something-Something-v2: together, the three modules bring an improvement of about 0.5% on Kinetics-400 and about 14% on Something-Something-v2.

Table 1: Comparison results with existing methods on Kinetics-400

Table 2: Reduction in training overhead from freezing the backbone weights

Table 3: The impact of fine-grained feature maps on accuracy

Table 4: The effect of fine-grained temporal information modeling on different datasets

Summary

This article proposes EVL, a video understanding learning framework that demonstrates for the first time the great potential of a frozen image backbone for video understanding, and makes high-performance video understanding more accessible to research groups with limited computing resources. We also believe that, as visual foundation models improve in quality and scale, our method can serve as a reference for subsequent research on lightweight transfer learning algorithms.
