A brief analysis of the development of human-computer interaction in smart cockpits

At present, cars have changed not only in power source, drive method, and driving experience. The cockpit has also left behind the traditional, dull mechanical and electronic space. Its level of intelligence has soared, and it has become a "third space" in people's lives after home and office. Through technologies such as face and fingerprint recognition, voice/gesture interaction, and multi-screen linkage, today's smart cockpits have greatly improved their environmental perception and their information collection and processing, and have become "intelligent assistants" for human drivers.

One significant sign that the smart cockpit has moved beyond simple electronics into the intelligent-assistant stage is that interaction between humans and the cockpit has shifted from passive to active. Here, "passive" and "active" are defined from the cockpit's point of view. In the past, information exchange was mainly initiated by people; now it can be initiated by both people and machines. The level of human-machine interaction has therefore become an important indicator for grading smart cockpit products.

Human-computer interaction development background

The history of computers and mobile phones shows how interaction between humans and machines has developed: from complex to simple, and from abstract operations to natural interaction. The most important future trend in human-computer interaction is for machines to move from passive response to active interaction. Extending this trend, the ultimate goal of human-machine interaction is to anthropomorphize machines, making interaction between humans and machines as natural and smooth as communication between people. In other words, the history of human-computer interaction is a shift from people adapting to machines toward machines adapting to people.

The development of smart cockpits follows a similar path. With advances in electronic technology and rising expectations from car owners, there are more and more electronic signals and functions inside and outside the car, so interaction has to help owners avoid wasting attention and thereby reduce driver distraction. As a result, in-car interaction has gradually evolved: physical knobs/buttons - digital touch screens - voice control - natural interaction.

Natural interaction is the ideal model for the next generation of human-computer interaction

What is natural interaction?

In short, natural interaction means communicating through movement, eye tracking, speech, and so on. The modalities here closely resemble human perception: they combine several senses and correspond to the five major human senses of vision, hearing, touch, smell, and taste. The corresponding information media include various sensors, such as audio, video, text, infrared, pressure, and radar. A smart car is essentially a robot that carries people. Its two most critical functions are controlling itself and interacting with people; without either one, it cannot work efficiently with people. An intelligent human-computer interaction system is therefore essential.

How to realize natural interaction

More and more sensors are being integrated into the cockpit, and they are improving in form diversity, data richness, and accuracy. On the one hand, this makes the cockpit's demand for computing power leap forward; on the other hand, it provides better perception. This trend makes richer cockpit scenario innovation and better interactive experiences possible.

Visual processing is the key to cockpit human-computer interaction technology, and fusion technology is the real solution. Take speech recognition in noisy conditions: microphones alone are not enough. In that situation, people listen selectively to a speaker not only with their ears but also with their eyes. Likewise, by visually locating the sound source and reading lips, a system can achieve better results than with voice recognition alone. If sensors are a person's five senses, computing power is the brain that drives the interaction. AI algorithms combine vision and speech and, through various cognitive methods, recognize signals such as faces, movements, postures, and voices. This enables more intelligent interaction with the people in the car, including eye tracking, speech recognition, speech recognition linked with lip reading, and driver fatigue detection.
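To make the fusion idea concrete, here is a minimal Python sketch of weighted late fusion. It assumes that separate audio and lip-reading models each output log-probabilities over the same small set of commands. The linear SNR-based weighting and the 0 to 20 dB range are illustrative assumptions, not a method taken from this article. In a quiet cabin the microphone dominates, and as noise rises the visual channel takes over.

```python
import math
from typing import Dict, Tuple


def fuse_av_scores(audio_logprobs: Dict[str, float],
                   visual_logprobs: Dict[str, float],
                   snr_db: float,
                   snr_low: float = 0.0,
                   snr_high: float = 20.0) -> Tuple[str, Dict[str, float]]:
    """Weighted late fusion of per-command log-probabilities from two models.

    The audio weight rises linearly from 0 at `snr_low` to 1 at `snr_high`
    (assumed bounds) and is clamped to [0, 1]; the visual weight is the rest.
    """
    w_audio = min(1.0, max(0.0, (snr_db - snr_low) / (snr_high - snr_low)))
    fused = {
        cmd: w_audio * audio_logprobs[cmd] + (1.0 - w_audio) * visual_logprobs[cmd]
        for cmd in audio_logprobs
    }
    best = max(fused, key=fused.get)
    return best, fused


# Audio slightly prefers "open_window"; lip reading clearly prefers "open_sunroof".
audio = {"open_window": math.log(0.6), "open_sunroof": math.log(0.4)}
visual = {"open_window": math.log(0.2), "open_sunroof": math.log(0.8)}
noisy_choice, _ = fuse_av_scores(audio, visual, snr_db=0.0)   # visual dominates
quiet_choice, _ = fuse_av_scores(audio, visual, snr_db=20.0)  # audio dominates
```

Late fusion keeps the two recognizers independent, so either one can be replaced without retraining the other.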

Cockpit human-machine interaction usually needs to be implemented with edge computing rather than cloud computing, for three reasons: safety, real-time performance, and privacy. Cloud computing depends on the network, and a wireless connection to a moving car cannot be guaranteed to be reliable. Data transmission delay is also uncontrollable, so smooth interaction cannot be guaranteed. To deliver a complete user experience for safety-critical functions, the solution lies in edge computing.

Personal information security is another problem. The cabin is a private space, so privacy matters especially here. Today, personalized voice recognition is mainly implemented in the cloud, and private biometric information such as voiceprints can directly reveal a person's identity. With edge AI on the vehicle side, private biometric information such as images and voice can be converted into semantic information inside the car before anything is uploaded to the cloud, which effectively protects the occupants' personal information.
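Here is a minimal sketch of the edge-side privacy pattern described above. It assumes a hypothetical on-device recognizer passed in as `recognize`. The raw image and audio are consumed locally, and only a small semantic record (an event name and a pseudonymous profile ID) is serialized for upload. The field names are illustrative, not a real vehicle API.

```python
import json
from typing import Callable, Optional


def make_cloud_payload(raw_image: bytes,
                       raw_audio: bytes,
                       recognize: Callable[[bytes, bytes], Optional[str]]) -> str:
    """Run recognition in the car and serialize only the semantic result.

    `recognize` stands in for a hypothetical on-device model that maps raw
    biometrics to an enrolled profile ID, or None for an unknown occupant.
    """
    profile_id = recognize(raw_image, raw_audio)  # raw data stays in local memory
    payload = {
        "event": "occupant_identified" if profile_id else "unknown_occupant",
        "profile_id": profile_id,  # pseudonymous label, not a face or voiceprint
    }
    return json.dumps(payload)


# Usage with a dummy recognizer that always returns the same profile.
payload = make_cloud_payload(b"\x89PNG...", b"RIFF...", lambda img, aud: "driver_1")
```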

In the era of autonomous driving, interactive intelligence must match driving intelligence

In the foreseeable future, human-machine co-driving will be a long-standing phenomenon, and human-machine interaction in the cockpit will become the primary interface through which people stay in control of driving. At present, intelligent driving is evolving unevenly. Human-machine interaction lags behind the progress of autonomous driving, which causes frequent problems and holds back the development of autonomous driving. Co-driving is characterized by keeping the human in the loop, so human-machine interaction functions must keep pace with autonomous driving functions. Failing to do so creates serious safety of the intended functionality (SOTIF) risks, which are associated with the vast majority of fatal autonomous driving incidents. Once the human-machine interface can show the driver how the system itself perceives and understands the driving situation, drivers can better understand the capability boundary of the autonomous driving system, which will greatly help improve acceptance of higher-level autonomous driving functions.

Of course, current smart cockpit interaction is mainly an extension of the Android smartphone ecosystem and relies mainly on the central display. Displays keep getting larger, but this is largely because low-priority functions take over space that belongs to high-priority functions, creating extra visual interference and affecting operating safety. Physical displays will still exist, but I believe that in the future they will be replaced by AR-HUDs that support natural human-computer interaction.

First, if intelligent driving systems reach L4 or above, people will be freed from boring, tiring driving, and the car will become people's "third living space." The layout of the entertainment area and the safety function area (human-machine interaction and automatic control) in the cabin will then change, and the safety area will become the main control area. Autonomous driving is the interaction between the car and its environment, while human-machine interaction is the interaction between people and the car. Integrating the two completes the collaboration of people, car, and environment, forming a complete closed loop of driving.

Second, a conversational interface built on the AR-HUD is safer. When the driver communicates by voice or gestures, it avoids diverting the driver's attention, which improves driving safety. This is simply not possible with a large cockpit screen, whereas the AR-HUD avoids the problem while also displaying autonomous driving perception signals.

Third, natural interaction is implicit, concise, and emotionally engaging. It does not take up valuable physical space in the car, yet it can accompany occupants anytime and anywhere. In the future, therefore, integrating smart driving and the smart cockpit will be the safer path of development, ultimately converging into the car's central system.

Practical principles of human-computer interaction

Touch interaction

The early center console screen displayed only radio information, and most of the console was taken up by physical buttons. These buttons interacted with people essentially through touch.

With the development of intelligent interaction, large central control screens appeared and physical buttons began to disappear. As the central screen grew larger and more important, the physical buttons on the center console were reduced to none. Occupants could then no longer interact with the car through the feel of physical controls. Instead, interaction shifted to vision: people operate mainly by looking at the screen rather than by touch. But relying on vision alone makes interacting with the smart cockpit very inconvenient. While driving in particular, 90% of a driver's visual attention must go to watching the road, so the driver cannot focus on the screen for long to operate the cockpit.

Voice interaction

(1) Principle of voice interaction.

Speech recognition - natural language understanding - speech synthesis (text to speech).
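The three stages can be chained as in the following Python sketch. Every stage here is a hypothetical stub: real ASR and TTS engines operate on audio samples, and real NLU uses trained models. The point is only the data flow from audio to transcript to intent to reply to audio.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    slots: dict = field(default_factory=dict)


def recognize_speech(audio: bytes) -> str:
    """ASR stage (hypothetical stub): pretend the 'audio' is already a transcript."""
    return audio.decode("utf-8")


def understand(text: str) -> Intent:
    """NLU stage: map the transcript to an intent with a toy keyword rule."""
    if "temperature" in text:
        digits = [int(tok) for tok in text.split() if tok.isdigit()]
        return Intent("set_temperature", {"celsius": digits[0]} if digits else {})
    return Intent("unknown")


def respond(intent: Intent) -> str:
    """Dialogue management: choose the reply text for the recognized intent."""
    if intent.name == "set_temperature" and "celsius" in intent.slots:
        return f"Setting the temperature to {intent.slots['celsius']} degrees."
    return "Sorry, I didn't catch that."


def synthesize(reply: str) -> bytes:
    """TTS stage (hypothetical stub): a real engine would return audio samples."""
    return reply.encode("utf-8")


def voice_turn(audio: bytes) -> bytes:
    """One full dialogue turn: ASR -> NLU -> reply -> TTS."""
    return synthesize(respond(understand(recognize_speech(audio))))
```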

(2) Scenarios required for voice interaction.

Voice control serves two main purposes in practice. First, it can reach functions that have no entry point on the touch screen and allows a natural dialogue with the human-machine interface. Second, it minimizes manual operation of the interface, which improves safety.

Typical scenarios: on the commute home, you want to control the vehicle quickly while driving, look up information, and adjust the air conditioning, seats, and so on. On long journeys, you want to look up service areas and gas stations along the route and check your schedule. Beyond that, voice can link everything: music and secondary-screen entertainment can be summoned quickly. The top priority, then, is quick vehicle control.

The first scenario is quick vehicle control. Basic functions include adjusting the ambient lighting, volume, air conditioning temperature, windows, and rearview mirrors. The intent is to let the driver control the vehicle faster, reducing distraction and improving operating safety. Voice interaction is also an important entry point to the whole system, because the system must understand the driver's spoken instructions to provide intelligent navigation. Beyond passively accepting tasks, it can offer additional services such as destination information and itinerary planning.
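Quick vehicle control comes down to mapping an utterance to an intent plus a parameter (a "slot"). The sketch below uses a few hand-written regular expressions. The command phrasings and intent names are hypothetical, and a production system would use a trained NLU model instead.

```python
import re
from typing import Optional, Tuple

# Hypothetical command grammar: (pattern, intent name). Group 1 captures the slot value.
COMMANDS = [
    (re.compile(r"(?:set|adjust) (?:the )?(?:ac|air conditioning|temperature) to (\d+)"),
     "set_ac_temperature"),
    (re.compile(r"(?:set|turn) (?:the )?volume (?:to )?(\d+)"), "set_volume"),
    (re.compile(r"open (?:the )?(driver|passenger) window"), "open_window"),
    (re.compile(r"set (?:the )?ambient light(?:ing)? to (\w+)"), "set_ambient_color"),
]


def parse_command(utterance: str) -> Optional[Tuple[str, str]]:
    """Return (intent, slot value) for the first matching pattern, else None."""
    text = utterance.lower().strip()
    for pattern, intent in COMMANDS:
        match = pattern.search(text)
        if match:
            return intent, match.group(1)
    return None  # hand off to the fallback dialogue (ask the user to rephrase)
```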

Next is monitoring of the vehicle and the driver. While driving, the driver can ask about vehicle status such as tire pressure, tank temperature, coolant, and engine oil at any time. Real-time queries help drivers deal with issues in advance, and the system should also alert the driver in real time when a value reaches a warning threshold. Beyond monitoring the vehicle, the system must also monitor the driver. Combining biometric monitoring with voice monitoring can track the driver's emotions, remind the driver to stay alert at the right moment to avoid accidents, and watch for signs of fatigue after long periods of driving.

Finally, in multimedia entertainment, playing music and radio are the most frequent operations and needs while driving. Beyond simple functions such as play, pause, and switching songs, personalized functions such as favorites, account login, play history, play order, and live interaction remain to be developed.
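Alerting the driver when a value reaches its warning threshold can be sketched as a range check against per-signal limits. The signal names and limit values below are illustrative assumptions, not manufacturer specifications.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Limit:
    low: float
    high: float
    unit: str


# Illustrative limits only; real thresholds come from the vehicle manufacturer.
LIMITS = {
    "tire_pressure_front_left": Limit(2.1, 2.9, "bar"),
    "coolant_temp": Limit(70.0, 105.0, "°C"),
    "engine_oil_level": Limit(0.3, 1.0, "fraction of max"),
}


def check_status(readings: Dict[str, float]) -> List[str]:
    """Return a warning string for every known signal outside its allowed range."""
    warnings = []
    for name, value in readings.items():
        limit = LIMITS.get(name)
        if limit is None:
            continue  # no configured limit: nothing to check
        if value < limit.low:
            warnings.append(f"{name} low: {value} {limit.unit}")
        elif value > limit.high:
            warnings.append(f"{name} high: {value} {limit.unit}")
    return warnings
```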

Accommodating Errors

Voice dialogue must have fault-tolerance mechanisms built in, and basic fault tolerance is handled scenario by scenario. First, when the system does not understand the user, it asks the user to say it again. Second, when the system understands the request but cannot handle it, it tells the user so. Third, when the recognized content may be wrong, the system asks the user to confirm.
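The three fault-tolerance cases map naturally onto a small decision function. In this Python sketch the confidence threshold (0.6) and the reply wording are assumptions. The ordering reflects one reasonable choice: an unsupported request is reported as such even when confidence is also low.

```python
from enum import Enum
from typing import Optional, Set

CONFIRM_THRESHOLD = 0.6  # assumed value; tune per recognizer


class Outcome(Enum):
    NOT_UNDERSTOOD = 1  # no intent could be extracted
    UNSUPPORTED = 2     # intent understood, but no function can handle it
    LOW_CONFIDENCE = 3  # recognized, but the result may be wrong
    OK = 4


def classify(intent: Optional[str], confidence: float, supported: Set[str]) -> Outcome:
    if intent is None:
        return Outcome.NOT_UNDERSTOOD
    if intent not in supported:
        return Outcome.UNSUPPORTED
    if confidence < CONFIRM_THRESHOLD:
        return Outcome.LOW_CONFIDENCE
    return Outcome.OK


def reply(intent: Optional[str], confidence: float, supported: Set[str]) -> str:
    """Pick the spoken response for each fault-tolerance case."""
    outcome = classify(intent, confidence, supported)
    if outcome is Outcome.NOT_UNDERSTOOD:
        return "Sorry, I didn't catch that. Could you say it again?"
    if outcome is Outcome.UNSUPPORTED:
        return "I understood, but I can't do that yet."
    if outcome is Outcome.LOW_CONFIDENCE:
        return f"Did you mean '{intent}'?"
    return f"OK: {intent}"
```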

Face recognition

(1) Principle of face recognition.

Facial recognition in the cockpit generally involves three steps: face detection, facial feature extraction, and pattern recognition. As more online information becomes tied to biometrics, facial data is registered on many platforms, and the car is a focal point of the Internet of Everything. As more mobile usage scenarios move into the car, account login and identity authentication need to happen in the car.
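After detection and feature extraction, recognition often reduces to comparing a feature vector ("embedding") of the live face with enrolled vectors. This sketch assumes embeddings are already produced by some upstream model and uses cosine similarity with an assumed threshold of 0.8. Real systems tune the threshold on their own data and use much longer vectors.

```python
import math
from typing import Dict, List, Optional


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe: List[float],
             gallery: Dict[str, List[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the enrolled ID most similar to `probe`, or None if below threshold."""
    best_id, best_score = None, -1.0
    for person_id, enrolled in gallery.items():
        score = cosine(probe, enrolled)
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id if best_score >= threshold else None
```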

(2) Face recognition usage scenarios.

Before driving, when the owner gets into the car, face recognition verifies the owner's identity and logs in to the application account. While driving, its main uses are detecting fatigue (eyes closed), phone use, eyes off the road, and yawning.
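For the eyes-closed fatigue case, one widely used measure is PERCLOS, the proportion of time the eyes are mostly closed. The sketch below assumes a hypothetical upstream model that outputs an eye-openness value per frame (0 = shut, 1 = fully open). Treating openness below 0.2 as "closed" follows the common P80 convention; the window length and the 15% alarm ratio are illustrative assumptions.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS: share of recent frames with eyes at least ~80% closed."""

    def __init__(self, window_frames: int, closed_below: float = 0.2,
                 alarm_ratio: float = 0.15) -> None:
        self.window = deque(maxlen=window_frames)  # True = eyes closed in that frame
        self.closed_below = closed_below
        self.alarm_ratio = alarm_ratio

    def update(self, openness: float) -> bool:
        """Add one frame; return True if the fatigue alarm should fire."""
        self.window.append(openness < self.closed_below)
        if len(self.window) < self.window.maxlen:
            return False  # not enough history yet
        perclos = sum(self.window) / len(self.window)
        return perclos > self.alarm_ratio


# Ten alert frames, then two frames with the eyes nearly shut.
monitor = PerclosMonitor(window_frames=10)
alerts = [monitor.update(x) for x in [0.9] * 10 + [0.05, 0.05]]
```

In this run the alarm fires only on the last frame, when 2 of the 10 frames in the window (20%) show closed eyes.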

A single interaction mode can make things harder for the driver. For example, voice alone is prone to misrecognition, and with touch alone the driver cannot meet the 3-second principle. Only by integrating multiple interaction methods such as voice, gestures, and vision can the intelligent system communicate with the driver more accurately, conveniently, and safely across scenarios.
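One concrete form of this integration is resolving a vague spoken command with vision. In the sketch below, an explicit window name in the utterance wins, and a deictic "that" is resolved from a gaze or pointing angle supplied by a hypothetical camera module. Anything else returns None so the system can ask rather than guess. The angle sectors are illustrative assumptions.

```python
from typing import Optional

# Hypothetical gaze/pointing sectors in degrees (0 = straight ahead,
# positive = toward the passenger side). Values are illustrative only.
TARGET_SECTORS = {
    "driver_window": (-120.0, -30.0),
    "passenger_window": (30.0, 120.0),
}


def resolve_target(utterance: str, gaze_deg: Optional[float]) -> Optional[str]:
    """Fuse voice and vision: explicit words win; 'that' is resolved by gaze."""
    text = utterance.lower()
    if "passenger window" in text:
        return "passenger_window"
    if "driver window" in text:
        return "driver_window"
    if "that" in text and gaze_deg is not None:
        for target, (low, high) in TARGET_SECTORS.items():
            if low <= gaze_deg < high:
                return target
    return None  # ambiguous: ask the driver instead of guessing
```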

Challenges and future of human-computer interaction

Challenges of human-computer interaction

Ideal natural interaction starts from the user's experience and creates a safe, smooth, and predictable interactive experience. But we must start from reality, and many challenges remain.

At present, misrecognition in natural interaction is still serious, and reliability and accuracy under all operating conditions and in all weather are far from sufficient. In gesture recognition, for example, the recognition rate of vision-based methods is still low, so better algorithms are needed to improve both accuracy and speed. An unintentional gesture may be mistaken for a command, and that is only one of countless possible misunderstandings. When the vehicle is moving, shadows, vibration, and occlusion are all major technical issues. To reduce misrecognition, a combination of technical means is needed, such as multi-sensor fusion verification and voice confirmation, matched to the operating scenario.

Second, the smoothness of natural interaction is still a difficulty that must be overcome, requiring more advanced sensors, more computing power, and more efficient computation. At the same time, natural language processing and intent understanding are still in their infancy and require in-depth research on algorithms.
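The multi-sensor fusion verification idea can be sketched as a gate in front of the gesture classifier: a gesture fires only if the camera reports the same confident label for several consecutive frames and a second, independent sensor agrees. The hold length, confidence threshold, and the second sensor (for example, radar) are assumptions for illustration.

```python
from collections import deque
from typing import Optional


class GestureGate:
    """Accept a gesture only when it is stable over time and confirmed by a second sensor."""

    def __init__(self, hold_frames: int = 5, min_conf: float = 0.8) -> None:
        self.hold_frames = hold_frames
        self.min_conf = min_conf
        self.history = deque(maxlen=hold_frames)

    def update(self, label: Optional[str], conf: float,
               second_sensor_agrees: bool) -> Optional[str]:
        """Feed one camera frame; return the gesture label when it is accepted."""
        # Low-confidence frames count as "no gesture" and break the streak.
        self.history.append(label if conf >= self.min_conf else None)
        if len(self.history) < self.hold_frames:
            return None
        first = self.history[0]
        stable = first is not None and all(item == first for item in self.history)
        if stable and second_sensor_agrees:
            self.history.clear()  # avoid firing again on the very next frame
            return first
        return None


gate = GestureGate(hold_frames=3)
stable = [gate.update("swipe_left", 0.9, True) for _ in range(3)]
flicker_gate = GestureGate(hold_frames=3)
flicker = [flicker_gate.update(lbl, 0.9, True) for lbl in ["swipe_left", None, "swipe_left"]]
```

A steady swipe is accepted on the third frame, while a momentary dropout in the middle suppresses the command.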

In the future, cockpit human-computer interaction will move toward the virtual world and emotional connection

One reason consumers are willing to pay for intelligent functions beyond basic mobility is the interaction and experience they provide. As mentioned above, future smart cockpits will be people-centered and will evolve into the third space in people's lives.

This kind of human-machine interaction is by no means simple call and response; it is a multi-channel, multi-level, multimodal communication experience. From the occupants' perspective, future smart cockpit interaction systems will use intelligent voice as the primary channel, and touch, gestures, body movements, facial expressions, and so on as auxiliary channels, freeing occupants' hands and eyes and reducing the risks of manual operation while driving.

As sensors in the cockpit multiply, the interaction service target will inevitably shift from the driver alone to all occupants. The smart cockpit builds a virtual space, and natural human-machine interaction will bring new immersive extended reality entertainment experiences. Powerful computing hardware, combined with capable interactive equipment in the cockpit, can build an in-car metaverse and offer various immersive games. The smart cockpit may be a good carrier for the metaverse.

Natural human-machine interaction also brings emotional connection. The cockpit becomes a companion, and a more intelligent one: it learns the owner's behavior, habits, and preferences, senses the cabin environment, and combines this with the vehicle's current location to proactively offer information and functions when needed. With the development of artificial intelligence, we may see emotional connections with machines gradually become part of our personal lives within our lifetime. Ensuring that technology is used for good may then be another major issue we must face. Either way, technology will develop in this direction.

Summary of human-computer interaction in intelligent cockpit

In today's fierce competition in the automobile industry, AI cockpit systems have become key to functional differentiation among automakers. Because the cockpit's human-machine interaction system is closely tied to people's communication behavior, language, culture, and so on, it needs to be highly localized. Human-machine interaction in intelligent vehicles is an important breakthrough point for the brand upgrade of Chinese intelligent vehicle companies, and an opportunity for China's intelligent vehicle technology to lead global technology trends.

The integration of these interaction methods will provide a more comprehensive immersive experience, continue to drive the maturity of new interaction methods and technologies, and is expected to turn today's experience-enhancing features into must-have features of future smart cockpits. Smart cockpit interaction technology is expected to cover a wide range of travel needs, from basic safety needs to deeper psychological needs for belonging and self-realization.

The above is the detailed content of A brief analysis of the development of human-computer interaction in smart cockpits. For more information, please follow other related articles on the PHP Chinese website!

Windows 11 上的智能应用控制:如何打开或关闭它Jun 06, 2023 pm 11:10 PM

Windows 11 上的智能应用控制:如何打开或关闭它Jun 06, 2023 pm 11:10 PM智能应用控制是Windows11中非常有用的工具,可帮助保护你的电脑免受可能损害数据的未经授权的应用(如勒索软件或间谍软件)的侵害。本文将解释什么是智能应用控制、它是如何工作的,以及如何在Windows11中打开或关闭它。什么是Windows11中的智能应用控制?智能应用控制(SAC)是Windows1122H2更新中引入的一项新安全功能。它与MicrosoftDefender或第三方防病毒软件一起运行,以阻止可能不必要的应用,这些应用可能会减慢设备速度、显示意外广告或执行其他意外操作。智能应用

一文聊聊SLAM技术在自动驾驶的应用Apr 09, 2023 pm 01:11 PM

一文聊聊SLAM技术在自动驾驶的应用Apr 09, 2023 pm 01:11 PM定位在自动驾驶中占据着不可替代的地位,而且未来有着可期的发展。目前自动驾驶中的定位都是依赖RTK配合高精地图,这给自动驾驶的落地增加了不少成本与难度。试想一下人类开车,并非需要知道自己的全局高精定位及周围的详细环境,有一条全局导航路径并配合车辆在该路径上的位置,也就足够了,而这里牵涉到的,便是SLAM领域的关键技术。什么是SLAMSLAM (Simultaneous Localization and Mapping),也称为CML (Concurrent Mapping and Localiza

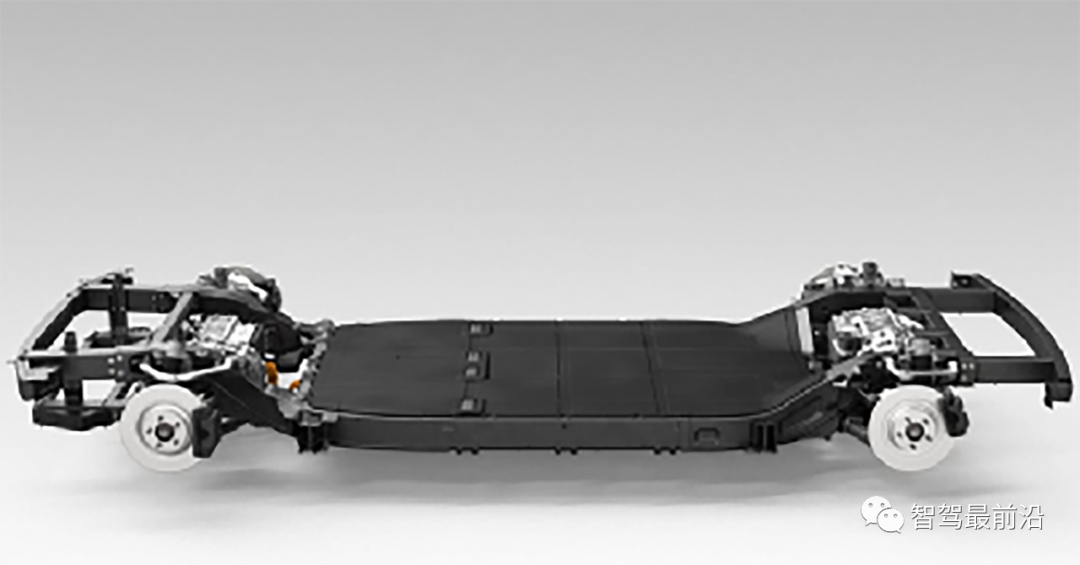

一文读懂智能汽车滑板底盘May 24, 2023 pm 12:01 PM

一文读懂智能汽车滑板底盘May 24, 2023 pm 12:01 PM01什么是滑板底盘所谓滑板式底盘,即将电池、电动传动系统、悬架、刹车等部件提前整合在底盘上,实现车身和底盘的分离,设计解耦。基于这类平台,车企可以大幅降低前期研发和测试成本,同时快速响应市场需求打造不同的车型。尤其是无人驾驶时代,车内的布局不再是以驾驶为中心,而是会注重空间属性,有了滑板式底盘,可以为上部车舱的开发提供更多的可能。如上图,当然我们看滑板底盘,不要上来就被「噢,就是非承载车身啊」的第一印象框住。当年没有电动车,所以没有几百公斤的电池包,没有能取消转向柱的线传转向系统,没有线传制动系

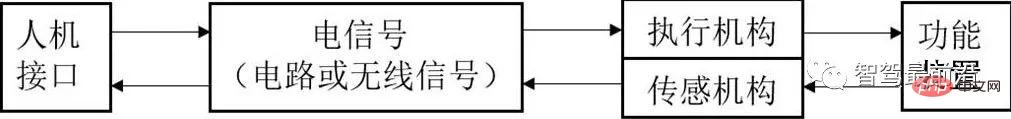

智能网联汽车线控底盘技术深度解析May 02, 2023 am 11:28 AM

智能网联汽车线控底盘技术深度解析May 02, 2023 am 11:28 AM01线控技术认知线控技术(XbyWire),是将驾驶员的操作动作经过传感器转变成电信号来实现传递控制,替代传统机械系统或者液压系统,并由电信号直接控制执行机构以实现控制目的,基本原理如图1所示。该技术源于美国国家航空航天局(NationalAeronauticsandSpaceAdministration,NASA)1972年推出的线控飞行技术(FlybyWire)的飞机。其中,“X”就像数学方程中的未知数,代表汽车中传统上由机械或液压控制的各个部件及相关的操作。图1线控技术的基本原理

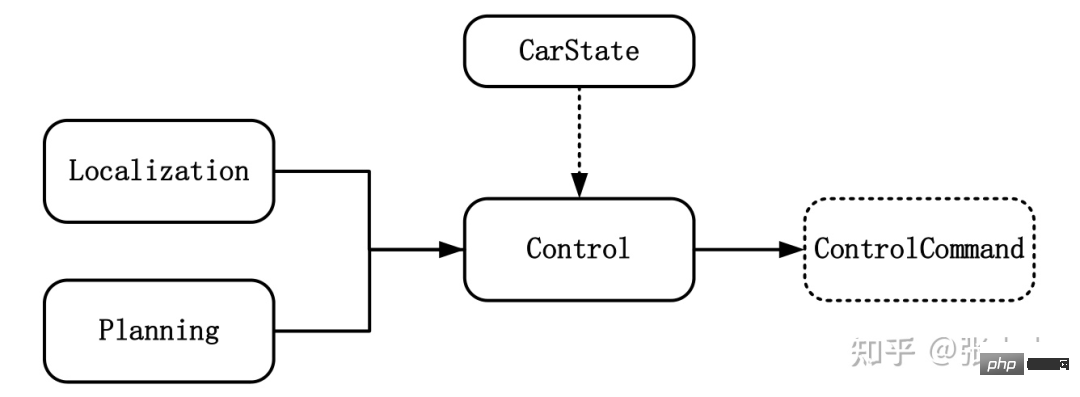

智能汽车规划控制常用控制方法详解Apr 11, 2023 pm 11:16 PM

智能汽车规划控制常用控制方法详解Apr 11, 2023 pm 11:16 PM控制是驱使车辆前行的策略。控制的目标是使用可行的控制量,最大限度地降低与目标轨迹的偏差、最大限度地提供乘客的舒适度等。如上图所示,与控制模块输入相关联的模块有规划模块、定位模块和车辆信息等。其中定位模块提供车辆的位置信息,规划模块提供目标轨迹信息,车辆信息则包括档位、速度、加速度等。控制输出量则为转向、加速和制动量。控制模块主要分为横向控制和纵向控制,根据耦合形式的不同可以分为独立和一体化两种方法。1 控制方法1.1 解耦控制所谓解耦控制,就是将横向和纵向控制方法独立分开进行控制。1.2 耦合控

一文读懂智能汽车驾驶员监控系统Apr 11, 2023 pm 08:07 PM

一文读懂智能汽车驾驶员监控系统Apr 11, 2023 pm 08:07 PM驾驶员监控系统,缩写DMS,是英文Driver Monitor System的缩写,即驾驶员监控系统。主要是实现对驾驶员的身份识别、驾驶员疲劳驾驶以及危险行为的检测功能。福特DMS系统01 法规加持,DMS进入发展快车道在现阶段开始量产的L2-L3级自动驾驶中,其实都只有在特定条件下才可以实行,很多状况下需要驾驶员能及时接管车辆进行处置。因此,在驾驶员太信任自动驾驶而放弃或减弱对驾驶过程的掌控时可能会导致某些事故的发生。而DMS-驾驶员监控系统的引入可以有效减轻这一问题的出现。麦格纳DMS系统,

李飞飞两位高徒联合指导:能看懂「多模态提示」的机器人,zero-shot性能提升2.9倍Apr 12, 2023 pm 08:37 PM

李飞飞两位高徒联合指导:能看懂「多模态提示」的机器人,zero-shot性能提升2.9倍Apr 12, 2023 pm 08:37 PM人工智能领域的下一个发展机会,有可能是给AI模型装上一个「身体」,与真实世界进行互动来学习。相比现有的自然语言处理、计算机视觉等在特定环境下执行的任务来说,开放领域的机器人技术显然更难。比如prompt-based学习可以让单个语言模型执行任意的自然语言处理任务,比如写代码、做文摘、问答,只需要修改prompt即可。但机器人技术中的任务规范种类更多,比如模仿单样本演示、遵照语言指示或者实现某一视觉目标,这些通常都被视为不同的任务,由专门训练后的模型来处理。最近来自英伟达、斯坦福大学、玛卡莱斯特学

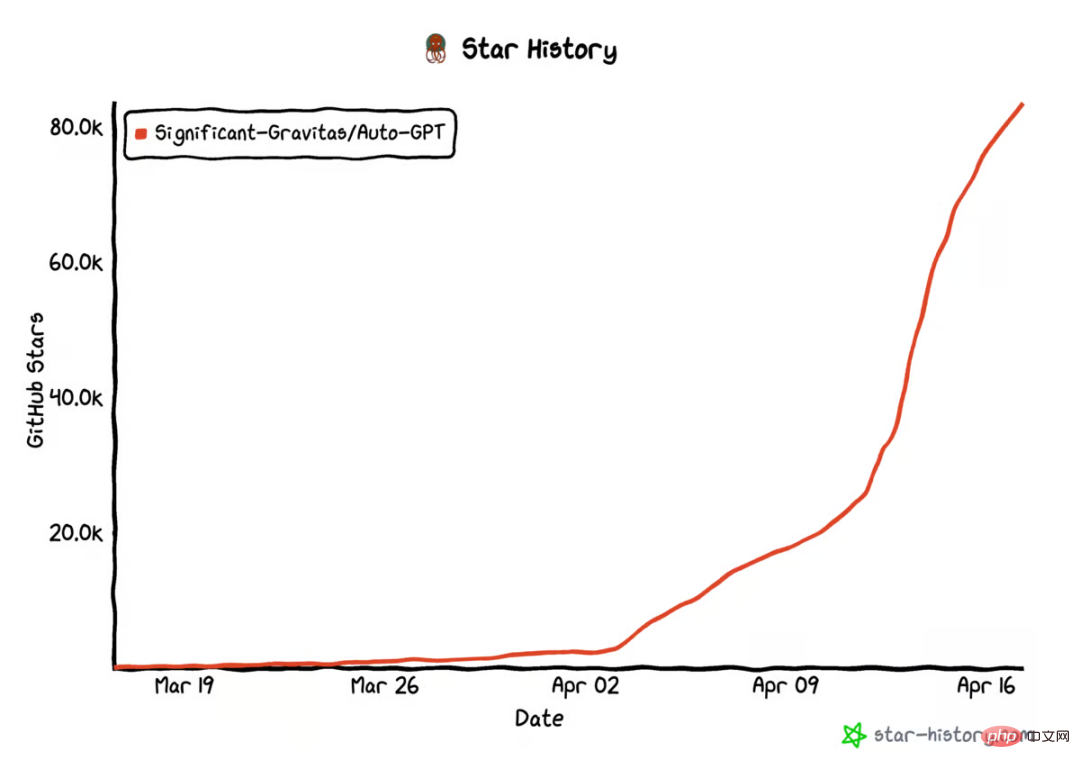

AutoGPT star量破10万,这是首篇系统介绍自主智能体的文章Apr 28, 2023 pm 04:10 PM

AutoGPT star量破10万,这是首篇系统介绍自主智能体的文章Apr 28, 2023 pm 04:10 PM在GitHub上,AutoGPT的star量已经破10万。这是一种新型人机交互方式:你不用告诉AI先做什么,再做什么,而是给它制定一个目标就好,哪怕像「创造世界上最好的冰淇淋」这样简单。类似的项目还有BabyAGI等等。这股自主智能体浪潮意味着什么?它们是怎么运行的?它们在未来会是什么样子?现阶段如何尝试这项新技术?在这篇文章中,OctaneAI首席执行官、联合创始人MattSchlicht进行了详细介绍。人工智能可以用来完成非常具体的任务,比如推荐内容、撰写文案、回答问题,甚至生成与现实生活无

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Notepad++7.3.1

Easy-to-use and free code editor

Atom editor mac version download

The most popular open source editor

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.