An article discussing the three core elements of autonomous driving

- Forwarded by 王林

- 2023-04-12 20:19:06

1. Sensors: Different roles and functions, complementary strengths

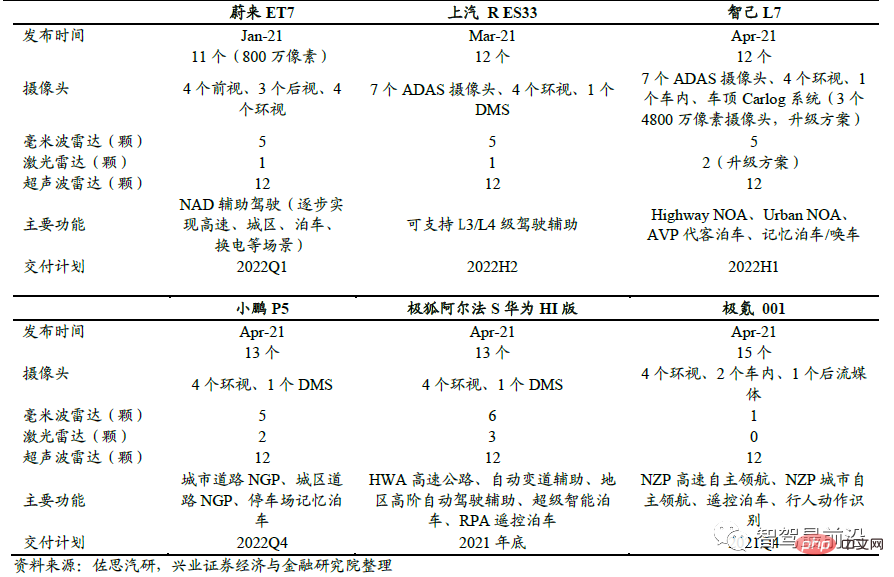

Autonomous vehicles are typically equipped with a variety of sensors, including cameras, millimeter-wave radar, and lidar. Each sensor has a different role and function, and their strengths complement one another; together, they act as the eyes of the autonomous vehicle. New models launched since 2021 carry a large number of sensors, pre-installing redundant hardware so that more autonomous driving functions can be enabled later through over-the-air (OTA) updates.

Sensor configuration and core functions of newly released domestic models from January to May 2021

Role of the camera: Cameras are mainly used to detect lane lines, traffic signs, traffic lights, vehicles, and pedestrians. They capture comprehensive information at a low price, but their performance is affected by rain, snow, and lighting conditions.

A modern camera consists of a lens, a lens module, a filter, a CMOS/CCD image sensor, an image signal processor (ISP), and a data transmission interface. Light passes through the optical lens and filter and is focused onto the sensor, where the CMOS or CCD integrated circuit converts the optical signal into an electrical signal. The ISP then converts this signal into a standard digital image in RAW, RGB, or YUV format, which is sent to the computer through the data transmission interface. Cameras provide a wealth of information, but they depend on natural light, and the dynamic range of current vision sensors is not particularly wide: when light is insufficient or changes abruptly, the image may be temporarily lost, and performance is severely limited by rain and contamination (such as dirt on the lens). The industry usually relies on computer vision algorithms to overcome these shortcomings of cameras.
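
The ISP step can be illustrated with one of its simplest operations: converting RGB pixels into YUV (one luma channel plus two color-difference channels). The following is a minimal Python sketch, assuming the ITU-R BT.601 luma weights and the analog YUV scaling factors 0.492 and 0.877; a production ISP also demosaics the RAW data, balances white, reduces noise, and typically outputs quantized 8-bit YCbCr rather than this floating-point form.

```python
# Sketch: RGB -> YUV conversion with BT.601 luma weights (analog YUV form).
# Assumption: this stands in for one ISP output stage; real ISPs also
# demosaic, white-balance, denoise, and usually quantize to 8-bit YCbCr.

KR, KG, KB = 0.299, 0.587, 0.114  # ITU-R BT.601 luma coefficients


def rgb_to_yuv(r: float, g: float, b: float) -> tuple:
    """Convert one RGB pixel (0-255 per channel) to (Y, U, V)."""
    y = KR * r + KG * g + KB * b  # luma: perceived brightness
    u = 0.492 * (b - y)           # blue-difference chroma
    v = 0.877 * (r - y)           # red-difference chroma
    return y, u, v


if __name__ == "__main__":
    samples = {"white": (255, 255, 255), "red": (255, 0, 0), "gray": (128, 128, 128)}
    for name, rgb in samples.items():
        y, u, v = rgb_to_yuv(*rgb)
        print(f"{name:5s}  Y={y:6.1f}  U={u:6.1f}  V={v:6.1f}")
```

Neutral colors (white, gray) map to zero chroma, which is why YUV lets downstream vision algorithms work on brightness alone when color is not needed.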

Automotive cameras are a high-growth market. The number of cameras per vehicle keeps increasing as autonomous driving functions are upgraded: for example, forward view generally requires 1-3 cameras and surround view 4-8 cameras. The global automotive camera market is expected to reach 176.26 billion yuan by 2025, of which the Chinese market will account for 23.72 billion yuan.

Global and Chinese automotive camera market size from 2015 to 2025 (100 million yuan)

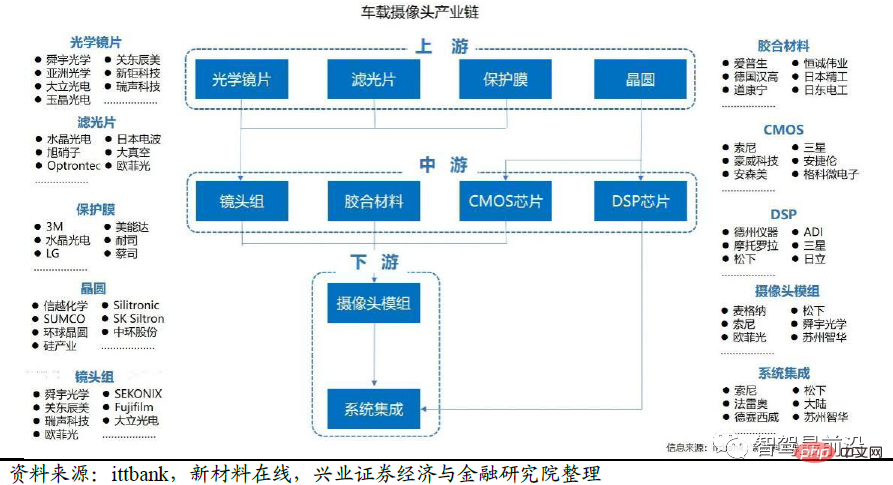

The automotive camera industry chain includes upstream suppliers of lens sets, adhesive materials, image sensors, and ISP chips; midstream module suppliers and system integrators; and downstream consumer electronics companies, autonomous driving Tier 1 suppliers, and others. By value, the CMOS image sensor accounts for 50% of the total cost, followed by module packaging at 25% and optical lenses at 14%.

Camera Industry Chain

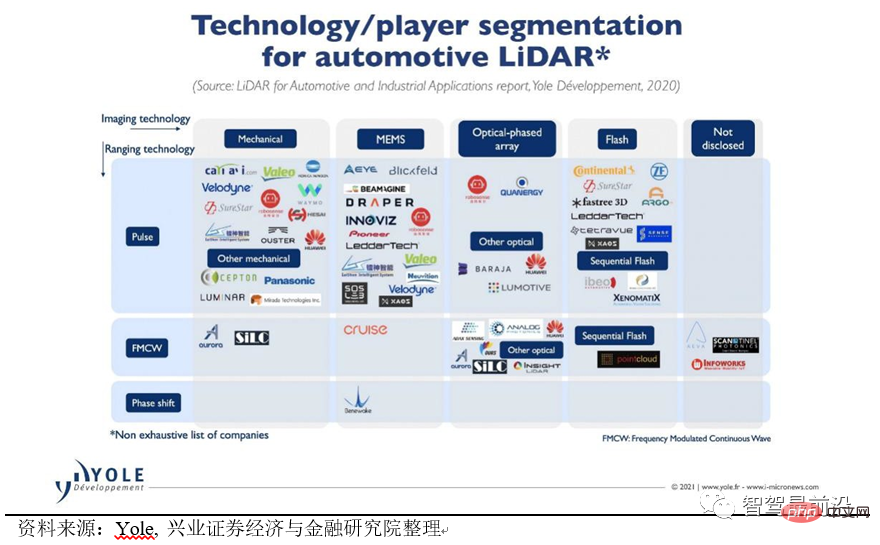

Role of lidar: Lidar is mainly used to detect the distance and speed of surrounding objects. At the transmitting end, a semiconductor laser generates a high-energy laser beam. The beam is reflected back by surrounding targets, and the returning light is captured by the receiving end, which calculates the target's distance and speed. Lidar offers higher detection accuracy than millimeter-wave radar and cameras, and its detection range is long, often exceeding 200 meters. By scanning principle, lidar can be divided into mechanical, rotating-mirror, MEMS, and solid-state types; by ranging principle, it can be divided into time-of-flight (ToF) and frequency-modulated continuous-wave (FMCW). The industry is still exploring lidar applications, there is no clear direction yet, and it is unclear which technical route will become mainstream.
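
The ToF ranging principle reduces to d = c·t/2: the pulse travels to the target and back, so the distance is half the round-trip path. The following is a minimal Python sketch; the helper names are illustrative, and because ToF does not measure speed directly, the speed estimate here assumes two successive range measurements taken a known interval apart. For a target at 200 m, the round trip takes only about 1.33 µs.

```python
# Sketch of time-of-flight (ToF) ranging: distance = c * t / 2.
# Speed is approximated from two successive range measurements, since a
# ToF pulse by itself yields only distance.

SPEED_OF_LIGHT_MPS = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance_m(round_trip_s: float) -> float:
    """Target distance from the pulse's round-trip time (out and back)."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Round-trip time a pulse needs for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_MPS


def radial_speed_mps(range1_m: float, range2_m: float, dt_s: float) -> float:
    """Finite-difference radial speed; negative means the target is approaching."""
    return (range2_m - range1_m) / dt_s


if __name__ == "__main__":
    t = round_trip_s(200.0)
    print(f"200 m target -> round trip {t * 1e6:.3f} us")
    print(f"recovered distance: {tof_distance_m(t):.3f} m")
    print(f"speed from two frames: {radial_speed_mps(50.0, 49.0, 0.1):.1f} m/s")
```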

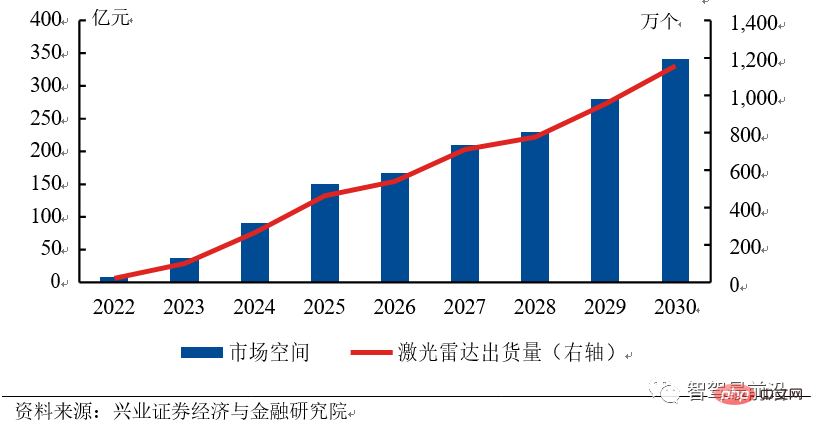

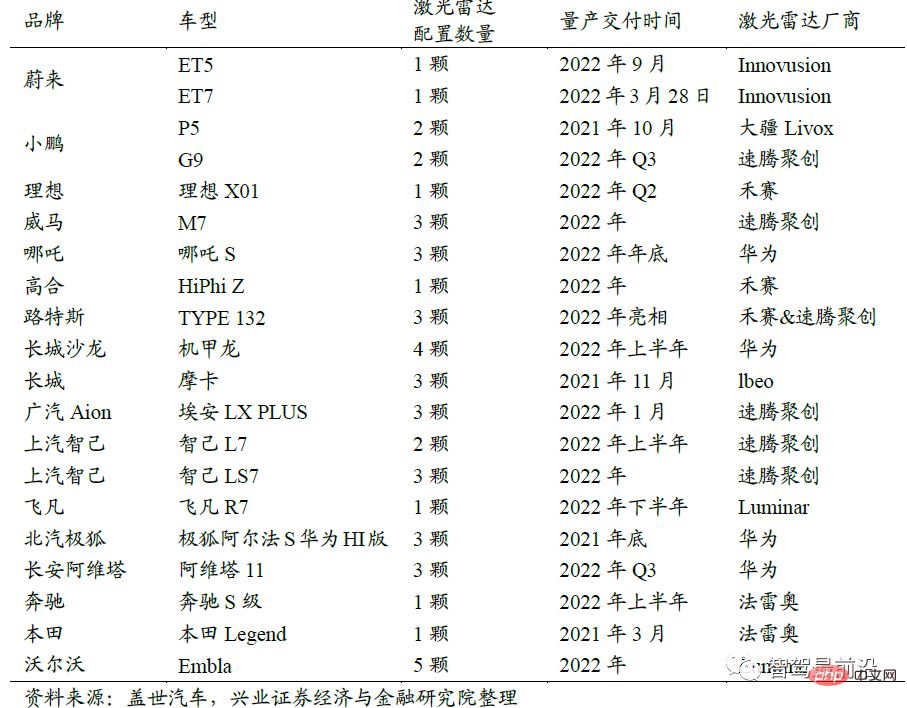

The lidar market is vast, and Chinese companies will lead their US counterparts. We predict that by 2025 the Chinese lidar market will approach 15 billion yuan and the global market 30 billion yuan; by 2030, the Chinese market will approach 35 billion yuan and the global market 65 billion yuan. The market's annual growth rate reaches 48.3%. Tesla, the largest autonomous driving company in the United States, uses a pure-vision solution, and other US automakers have no concrete plans to put lidar on their cars. China has therefore become the largest potential market for automotive lidar. Many domestic automakers are launching lidar-equipped models in 2022, and automotive lidar shipments are expected to reach 200,000 units that year. Chinese companies have a better chance of winning because they are closer to the market, work closely with Chinese OEMs, and can win orders more easily; this lets them reduce costs faster, forming a virtuous cycle. China's vast market will help Chinese lidar companies close the technology gap with foreign competitors.

China LiDAR Market Outlook from 2022 to 2030

List of lidar models
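
The market projections can be checked with the compound annual growth rate formula CAGR = (end/start)^(1/years) - 1. The following is a minimal Python sketch using the approximate ("close to") 2025 and 2030 figures from the text: they imply roughly 18.5% per year for China and 16.7% for the global market, so the 48.3% rate quoted above must refer to a different period, which the text does not specify.

```python
# Compound annual growth rate (CAGR) implied by two market-size projections.


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Projections from the text, in billions of yuan (approximate values).
    china = cagr(15.0, 35.0, 2030 - 2025)
    world = cagr(30.0, 65.0, 2030 - 2025)
    print(f"China 2025->2030: {china:.1%} per year")
    print(f"Global 2025->2030: {world:.1%} per year")
```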

Each technical route currently has its own advantages and disadvantages. Our judgment is that in the future FMCW will coexist with ToF, 1550 nm laser emitters will outperform 905 nm emitters, and the market may skip the semi-solid-state stage and jump directly to all-solid-state lidar.

FMCW will coexist with ToF: ToF technology is relatively mature and offers fast response and high detection accuracy, but it cannot measure speed directly. FMCW can measure speed directly through the Doppler effect, and it offers high sensitivity (more than 10 times that of ToF), strong resistance to interference, long detection range, and low power consumption. In the future, high-end products may use FMCW while low-end products use ToF.
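
The Doppler advantage can be made concrete. In FMCW, the beat frequency between the transmitted and received chirps mixes a range term and a Doppler term, and a triangular (up/down) chirp lets the two be separated. The following is a minimal Python sketch under stated assumptions: a linear chirp, the hypothetical parameters in the example (1550 nm wavelength, 1 GHz sweep over 10 µs), and the sign convention in the comments. Real FMCW lidars also need coherent detection and FFT-based peak finding, which are omitted here.

```python
# Sketch of FMCW ranging with a triangular (up/down) chirp.
# Assumptions: linear chirp, beat = |f_tx - f_rx|, and an approaching target
# raises the received frequency, so up-beat = f_range - f_doppler and
# down-beat = f_range + f_doppler (valid while f_range > f_doppler).

SPEED_OF_LIGHT_MPS = 299_792_458.0


def range_from_beat_m(f_range_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range from the range-induced beat frequency: R = c * f / (2 * slope)."""
    slope_hz_per_s = bandwidth_hz / chirp_s
    return SPEED_OF_LIGHT_MPS * f_range_hz / (2.0 * slope_hz_per_s)


def velocity_from_doppler_mps(f_doppler_hz: float, wavelength_m: float) -> float:
    """Radial velocity from the Doppler shift: v = f_d * wavelength / 2."""
    return f_doppler_hz * wavelength_m / 2.0


def solve_triangular_chirp(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Return (range_m, velocity_mps); positive velocity means approaching."""
    f_range = (f_up_hz + f_down_hz) / 2.0    # Doppler terms cancel
    f_doppler = (f_down_hz - f_up_hz) / 2.0  # range terms cancel
    return (range_from_beat_m(f_range, bandwidth_hz, chirp_s),
            velocity_from_doppler_mps(f_doppler, wavelength_m))


if __name__ == "__main__":
    # Hypothetical parameters: 1550 nm laser, 1 GHz sweep over 10 us.
    wavelength, bandwidth, chirp = 1550e-9, 1e9, 10e-6
    true_range, true_speed = 150.0, 10.0  # metres, m/s (approaching)
    slope = bandwidth / chirp
    f_r = 2.0 * true_range * slope / SPEED_OF_LIGHT_MPS
    f_d = 2.0 * true_speed / wavelength
    r, v = solve_triangular_chirp(f_r - f_d, f_r + f_d, bandwidth, chirp, wavelength)
    print(f"range = {r:.2f} m, velocity = {v:.2f} m/s")
```

Because speed falls out of a single up/down chirp pair, FMCW does not need to difference ranges across frames the way ToF does.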

1550 nm will outperform 905 nm: 905 nm is near-infrared light that passes through the front of the eye and is easily absorbed by the retina, where it can cause damage, so 905 nm systems must operate at low power. 1550 nm light is also infrared, not visible, but it is largely absorbed by the cornea and lens before reaching the retina. At the same power it therefore causes less damage to the human eye, and it can achieve a longer detection range. The drawback is that it requires InGaAs-based components and cannot use silicon-based detectors.
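
The detector constraint follows from photon energy: a semiconductor can only absorb photons whose energy E = hc/λ exceeds its bandgap. The following is a minimal Python sketch using approximate room-temperature bandgaps (about 1.12 eV for silicon and about 0.75 eV for InGaAs lattice-matched to InP); it ignores the weak absorption near the band edge, so the computed cutoffs are approximations.

```python
# Why silicon detectors work at 905 nm but not at 1550 nm:
# a photon can create an electron-hole pair only if its energy exceeds the
# semiconductor's bandgap. Photon energy E = h*c / wavelength.

HC_EV_NM = 1239.84   # Planck constant * speed of light, in eV*nm
BANDGAP_EV = {
    "Si": 1.12,      # silicon, room temperature
    "InGaAs": 0.75,  # In0.53Ga0.47As lattice-matched to InP (approximate)
}


def photon_energy_ev(wavelength_nm: float) -> float:
    return HC_EV_NM / wavelength_nm


def cutoff_wavelength_nm(material: str) -> float:
    """Longest wavelength the material can absorb (band-edge approximation)."""
    return HC_EV_NM / BANDGAP_EV[material]


def can_detect(material: str, wavelength_nm: float) -> bool:
    return photon_energy_ev(wavelength_nm) > BANDGAP_EV[material]


if __name__ == "__main__":
    for wl in (905, 1550):
        print(f"{wl} nm: {photon_energy_ev(wl):.2f} eV, "
              f"Si={can_detect('Si', wl)}, InGaAs={can_detect('InGaAs', wl)}")
    print(f"Si cutoff ~ {cutoff_wavelength_nm('Si'):.0f} nm")
```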

Skip semi-solid state and go directly to all-solid state: Existing semi-solid-state solutions, including rotating-mirror, prism, and MEMS designs, all retain some moving mechanical parts, which have a short service life in the vehicle environment and make automotive certification difficult. The VCSEL + SPAD solution for solid-state lidar uses chip-level technology and a simple structure, and it can pass automotive qualification easily; it has become the most mainstream technical approach for all-solid-state lidar. The lidar on the back of the iPhone 12 Pro uses a VCSEL + SPAD design.

Lidar technical routes and representative companies

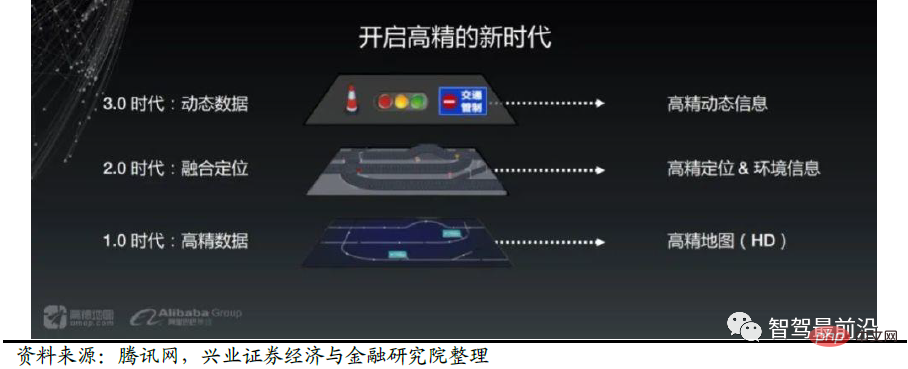

High-precision maps may be disrupted. The battle over technical routes continues in the field of high-precision maps. Tesla has proposed a high-precision map that requires no advance surveying: it uses artificial intelligence to construct a three-dimensional representation of the environment from camera data. Following a crowdsourcing approach, each vehicle contributes road information, which is aggregated in the cloud. We therefore need to stay alert to the possibility that technological innovation will disrupt high-precision maps.

Some practitioners believe that high-precision maps are indispensable for intelligent driving. In terms of field of view, high-precision maps are not subject to occlusion, range limits, or visual defects, and they still work in special weather conditions. In terms of error, high-precision maps can eliminate some sensor errors and, under some road conditions, effectively supplement and correct the existing sensor system. In addition, high-precision maps can be used to build a driving-experience database: mining multi-dimensional spatio-temporal data identifies dangerous areas and provides drivers with new driving-experience datasets.

Lidar + vision technology and a survey-vehicle + crowdsourcing model are the mainstream solutions for future high-precision maps.

High-precision maps must balance two metrics: accuracy and update speed. Low collection accuracy or infrequent updates cannot meet the needs of autonomous driving. To solve this problem, map companies have adopted new methods such as the crowdsourcing model: each autonomous vehicle serves as a map-collection device that supplies high-precision dynamic information, which is aggregated and then distributed to other vehicles. Under this model, leading map companies can produce more accurate and more up-to-date maps because a large number of vehicle models participate in crowdsourcing, so the strong keep getting stronger.

Amap fusion solution
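
The crowdsourcing model can be sketched as a cloud-side aggregation step. The following minimal Python sketch shows one hypothetical design, not any map vendor's actual pipeline: vehicles report where they observed a map feature, and the cloud publishes the feature only after a minimum number of distinct vehicles (three here, an arbitrary choice) have confirmed it, using the median position to suppress outliers.

```python
# Sketch of cloud-side aggregation for a crowdsourced high-precision map.
# Hypothetical design: each vehicle reports where it observed a map feature
# (e.g. a sign); the cloud publishes a feature only once enough distinct
# vehicles have confirmed it, using the median to suppress outlier reports.

from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Observation:
    vehicle_id: str
    feature_id: str  # hypothetical stable ID for a sign, lane marking, etc.
    x_m: float       # position in a local map frame, metres
    y_m: float


def aggregate(observations, min_vehicles=3):
    """Return {feature_id: (x_m, y_m)} for features confirmed by enough vehicles."""
    by_feature = defaultdict(list)
    for obs in observations:
        by_feature[obs.feature_id].append(obs)

    published = {}
    for feature_id, reports in by_feature.items():
        if len({r.vehicle_id for r in reports}) < min_vehicles:
            continue  # not enough independent confirmations yet
        published[feature_id] = (
            median([r.x_m for r in reports]),
            median([r.y_m for r in reports]),
        )
    return published


if __name__ == "__main__":
    reports = [
        Observation("car-1", "sign-42", 10.0, 5.0),
        Observation("car-2", "sign-42", 10.2, 5.1),
        Observation("car-3", "sign-42", 50.0, 5.0),  # bad position fix: outlier
        Observation("car-1", "cone-7", 3.0, 1.0),    # seen by one car only
    ]
    print(aggregate(reports))
```

The median is used instead of the mean so that a single vehicle with a bad position fix cannot drag the published location; the more vehicles that participate, the faster features reach the confirmation threshold, which is the scale advantage described above.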

2. Computing platform: Chip requirements keep rising, and semiconductor technology is the moat

The computing platform is also called the autonomous driving domain controller. As the penetration of L3-and-above autonomous driving increases, so does the demand for computing power. Although L3 regulations and algorithms are not yet in place, automakers have adopted redundant computing power to leave room for subsequent software iterations.

The computing platform will have two development characteristics in the future: heterogeneity and distributed elasticity.

Heterogeneity: For high-end autonomous vehicles, the computing platform must support many types and quantities of sensors while delivering high safety and high performance. No single existing chip can meet all the interface and computing power requirements, so a heterogeneous chip hardware solution is needed. Heterogeneity can take the form of a single board integrating chips of multiple architectures, such as the Audi zFAS, which integrates an MCU (microcontroller), an FPGA (field-programmable gate array), and a CPU (central processing unit); it can also take the form of a powerful single chip (SoC, system-on-chip) that integrates units of multiple architectures, such as NVIDIA Xavier, which integrates two heterogeneous units, a GPU (graphics processing unit) and a CPU.

Distributed elasticity: Today's automotive electronic architecture is evolving from many single-function chips toward integrated domain controllers. High-end autonomous driving requires an on-board intelligent computing platform with system redundancy and smooth scalability. On the one hand, given the heterogeneous architecture and the need for redundancy, multiple boards are used to decouple the system and provide backup; on the other hand, multi-board distributed expansion meets the computing power and interface requirements of high-end autonomous driving. The whole system works together to implement autonomous driving functions under the unified management and adaptation of a single autonomous driving operating system, which adapts to different chips by changing hardware drivers, communication services, and so on. As the level of autonomy rises, the system's demand for computing power and interfaces keeps growing. Besides raising the computing power of a single chip, hardware components can be replicated and stacked, allowing flexible adjustment and smooth expansion that increase overall system computing power, add interfaces, and improve functionality.
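
Scaling by stacking boards can be reduced to a sizing rule: install enough boards to satisfy whichever requirement (compute or interfaces) binds first, then add spares for redundancy. The following is a minimal Python sketch; the `Board` fields, the per-board figures, and the one-spare default are hypothetical illustrations, not the specifications of any real domain controller.

```python
# Sketch of "distributed elastic" sizing: how many identical compute boards
# a platform needs to meet both compute and interface requirements, plus
# spare boards for redundancy. All numbers below are hypothetical.

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    tops: float        # AI compute per board, TOPS
    camera_ports: int  # camera interfaces per board


def boards_needed(required_tops: float, required_cameras: int,
                  board: Board, spare_boards: int = 1) -> int:
    """Boards to install: the binding constraint (compute or I/O) plus spares."""
    by_compute = math.ceil(required_tops / board.tops)
    by_interfaces = math.ceil(required_cameras / board.camera_ports)
    return max(by_compute, by_interfaces) + spare_boards


if __name__ == "__main__":
    board = Board(tops=250.0, camera_ports=8)
    print(boards_needed(600.0, 12, board))  # compute-limited case
    print(boards_needed(200.0, 30, board))  # interface-limited case
```

Taking the maximum of the two constraints reflects the point above that scaling out must satisfy both computing power and interface requirements, not just raw compute.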

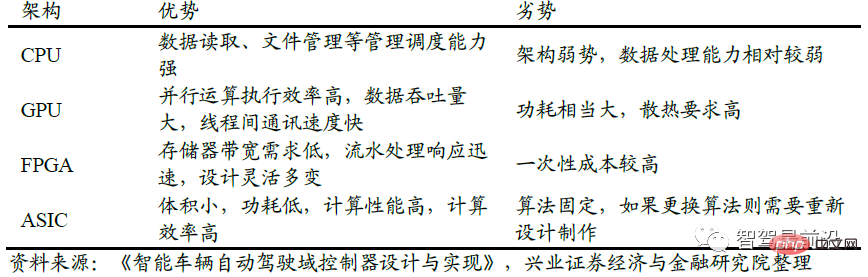

The heterogeneous distributed hardware architecture mainly consists of three parts: AI unit, computing unit and control unit.

AI unit: Uses an AI chip with a parallel computing architecture, with a multi-core CPU configuring the AI chip and other necessary processors. AI chips are currently used mainly to fuse and process multi-sensor data efficiently and to output key information to the execution layer. The AI unit is the most demanding part of the heterogeneous architecture and must overcome bottlenecks in cost, power consumption, and performance to meet industrialization requirements. AI chips can be GPUs, FPGAs, ASICs (application-specific integrated circuits), and so on.

Comparison of different types of chips

Computing unit: The computing unit consists of multiple CPUs with high single-core clock frequencies and strong computing power, and it meets the corresponding functional safety requirements. It runs a hypervisor and a Linux kernel to manage software resources and schedule tasks, and it executes most of the core autonomous driving algorithms, fusing multi-dimensional data to achieve path planning and decision-making control.

Control unit: Based mainly on a traditional vehicle controller (MCU). The control unit runs the basic software of the Classic AUTOSAR platform, and the MCU connects to ECUs through communication interfaces to achieve lateral and longitudinal control of vehicle dynamics, meeting ASIL-D functional safety requirements.

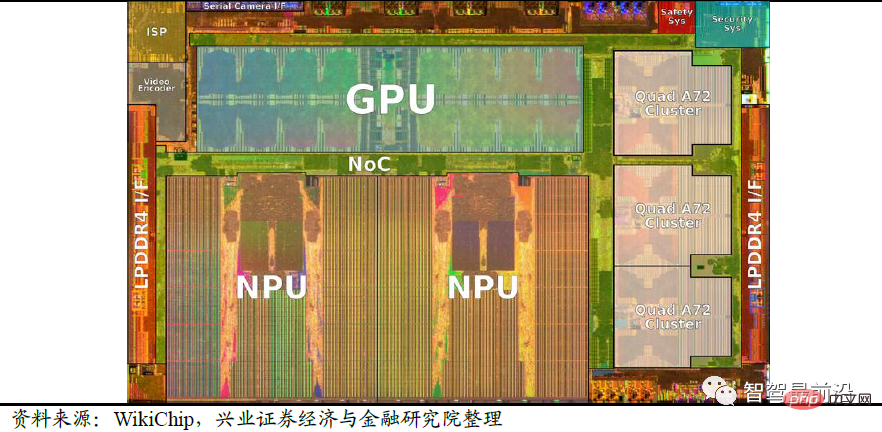

Take the Tesla FSD chip as an example. It uses a CPU + GPU + ASIC architecture and contains three quad-core Cortex-A72 clusters (12 CPU cores in total) running at 2.2 GHz, a Mali G71 MP12 GPU running at 1 GHz, two neural processing units (NPUs), and various other hardware accelerators. The three types of processors have a clear division of labor: the Cortex-A72 CPU cores handle general-purpose computing, the Mali GPU handles lightweight post-processing, and the NPUs perform neural network computations. The GPU delivers 600 GFLOPS, and the NPUs deliver 73.73 TOPS.

Tesla FSD chip architecture

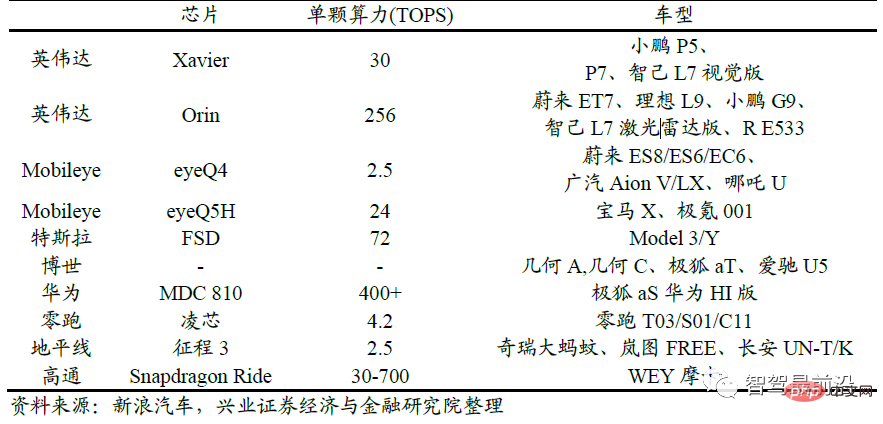

The core technology of the autonomous driving domain controller is the chip, followed by the software and operating system. The short-term moat is customers and delivery capabilities.

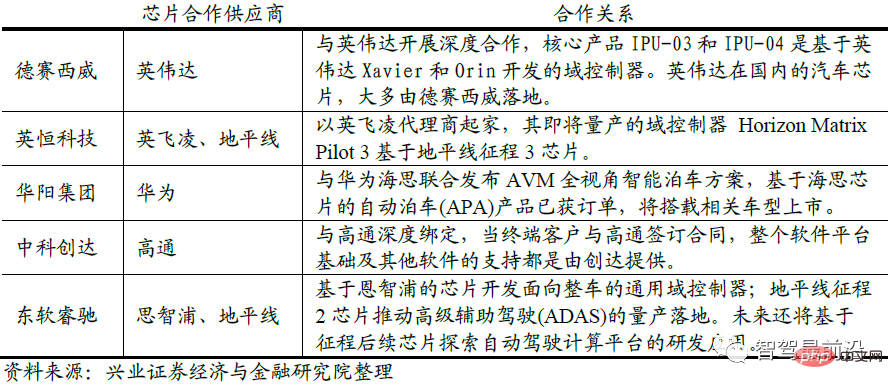

The chip determines the computing power of the autonomous driving computing platform. Chips are difficult to design and manufacture and can easily become a chokepoint. The high-end market is dominated by international semiconductor giants such as NVIDIA, Mobileye, Texas Instruments, and NXP; in the L2-and-below market, domestic companies represented by Horizon are gradually winning customer recognition. Chinese domain controller manufacturers generally partner deeply with one chip maker, buying its chips and delivering products to automakers using their own hardware manufacturing and software integration capabilities; these partnerships are usually exclusive. In terms of chip partnerships, Desay SV, tied to NVIDIA, and ThunderSoft, tied to Qualcomm, have the most obvious advantages. Among other domestic autonomous driving domain controller companies, Huayang Group has partnered with Huawei HiSilicon, and Neusoft Reach has established partnerships with NXP and Horizon.

The cooperative relationship between domestic domain controller companies and chip companies

A domain controller's competitiveness is determined by its upstream chip partner, and downstream OEMs often buy complete solutions from chip companies. For example, the high-end models of NIO, Li Auto, and XPeng use NVIDIA Orin chips and NVIDIA autonomous driving software; Zeekr and BMW buy solutions from the chip company Mobileye; and Changan and Great Wall buy Horizon's L2 solution. The partnerships between chip and domain controller companies deserve continued attention.

Cooperation between chip company products and car companies

3. Data and algorithms: Data drives algorithm iteration, and algorithm quality is the core competitiveness of autonomous driving companies

User data is extremely important for improving autonomous driving systems. During autonomous driving, rare scenarios that seldom occur are called corner cases. If the perception system encounters a corner case it cannot handle, serious safety risks result. For example, a few years ago Tesla's Autopilot failed to recognize a large white truck crossing the road and crashed directly into its side, killing the car's owner; in April 2022, an XPeng car with autonomous driving engaged crashed into a vehicle that had rolled over in the middle of the road.

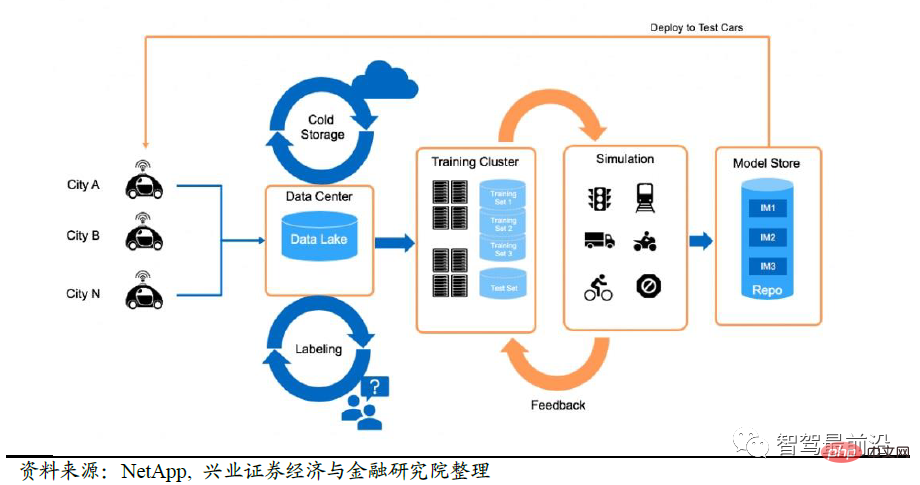

The only solution to such problems is for automakers to take the lead in collecting real-world data while simulating many similar scenarios on the autonomous driving computing platform, so that the system learns to handle them better next time. A typical example is Tesla's Shadow Mode, which identifies potential corner cases by comparing the system's decisions with the human driver's behavior. These scenes are then annotated and added to the training set.
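
The shadow-mode comparison can be sketched as a filter over logged frames: the model runs passively alongside the driver, and any frame where its decision diverges from the human's is flagged as a candidate corner case. The following is a minimal Python sketch; the `Frame` fields, the 5-degree steering tolerance, and the brake check are hypothetical, since Tesla's actual trigger logic is not public.

```python
# Sketch of a shadow-mode comparison: the model runs passively alongside
# the human driver, and frames where the two disagree are flagged as
# candidate corner cases for upload and labeling.
# Field names and thresholds are hypothetical.

from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    timestamp_s: float
    human_steering_deg: float
    model_steering_deg: float
    human_brake: bool
    model_brake: bool


def flag_disagreements(frames, steering_tol_deg=5.0):
    """Return frames where the shadow model's decision diverges from the driver's."""
    flagged = []
    for f in frames:
        steering_gap = abs(f.human_steering_deg - f.model_steering_deg)
        if steering_gap > steering_tol_deg or f.human_brake != f.model_brake:
            flagged.append(f)
    return flagged


if __name__ == "__main__":
    log = [
        Frame(0.0, 2.0, 1.5, False, False),   # agreement
        Frame(1.0, 15.0, 2.0, False, False),  # driver swerved, model did not
        Frame(2.0, 0.0, 0.0, True, False),    # driver braked, model did not
    ]
    for f in flag_disagreements(log):
        print(f"candidate corner case at t={f.timestamp_s:.1f}s")
```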

Accordingly, automakers need to establish a data processing pipeline so that the real-world data they collect can be used for model iteration, and the iterated model can be deployed on mass-produced vehicles. At the same time, to let the machine learn corner cases at scale, each corner case obtained is simulated extensively to derive many more corner cases for the system to learn from. NVIDIA DRIVE Sim, a simulation platform NVIDIA built on its Omniverse (metaverse) technology, is one such simulation system. Companies that lead in data thereby build a data moat.

The common data processing process is:

1) Detect whether the autonomous vehicle has encountered a corner case, and if so, upload it

2) Label the uploaded data

3) Use simulation software to generate additional training data

4) Iteratively update the neural network model with the data

5) Deploy the model to real vehicles via OTA

Data processing process
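
The five steps can be wired together as one iteration of a loop. The following is a minimal Python sketch under heavy simplification: every function (`detect_and_upload`, `label`, `simulate_variants`, `retrain`, `deploy_ota`) is a hypothetical stand-in, the "simulation" merely perturbs a scenario's parameters at random, and "training" only counts samples and bumps a version number.

```python
# Sketch of the five-step data closed loop.
# Every function is a hypothetical stand-in: real systems use fleet
# telemetry, labeling tools, a simulator, and a training cluster.

import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Scenario:
    name: str
    obstacle_speed_mps: float
    light_level: float            # 0.0 = dark, 1.0 = bright daylight
    label: Optional[str] = None


@dataclass(frozen=True)
class Model:
    version: int
    training_set_size: int


def detect_and_upload(fleet_log: List[Scenario],
                      is_corner_case: Callable[[Scenario], bool]) -> List[Scenario]:
    """Step 1: keep only scenarios flagged as corner cases."""
    return [s for s in fleet_log if is_corner_case(s)]


def label(cases: List[Scenario], labeler: Callable[[Scenario], str]) -> List[Scenario]:
    """Step 2: attach a ground-truth label to each uploaded case."""
    for case in cases:
        case.label = labeler(case)
    return cases


def simulate_variants(case: Scenario, n: int, rng: random.Random) -> List[Scenario]:
    """Step 3: derive n perturbed copies of a labeled case (toy 'simulation')."""
    return [
        Scenario(
            name=f"{case.name}-sim{i}",
            obstacle_speed_mps=max(0.0, case.obstacle_speed_mps + rng.uniform(-2.0, 2.0)),
            light_level=min(1.0, max(0.0, case.light_level + rng.uniform(-0.2, 0.2))),
            label=case.label,
        )
        for i in range(n)
    ]


def retrain(model: Model, data: List[Scenario]) -> Model:
    """Step 4: placeholder for training; only tracks version and data volume."""
    return Model(model.version + 1, model.training_set_size + len(data))


def deploy_ota(fleet: Dict[str, int], model: Model) -> None:
    """Step 5: record that every vehicle now runs the new model version."""
    for vin in fleet:
        fleet[vin] = model.version


def run_iteration(model, fleet, fleet_log, is_corner_case, labeler, n_variants, seed=0):
    """One pass through steps 1-5; returns the newly deployed model."""
    rng = random.Random(seed)
    cases = label(detect_and_upload(fleet_log, is_corner_case), labeler)
    training_data = list(cases)
    for case in cases:
        training_data.extend(simulate_variants(case, n_variants, rng))
    new_model = retrain(model, training_data)
    deploy_ota(fleet, new_model)
    return new_model


if __name__ == "__main__":
    log = [
        Scenario("clear-highway", 25.0, 0.9),
        Scenario("overturned-car-at-dusk", 0.0, 0.2),
        Scenario("city-traffic", 8.0, 0.8),
    ]
    fleet = {"VIN001": 0, "VIN002": 0}
    model = run_iteration(
        Model(version=0, training_set_size=0), fleet, log,
        is_corner_case=lambda s: s.light_level < 0.3,  # toy trigger
        labeler=lambda s: "static_obstacle",
        n_variants=4,
    )
    print(model, fleet)
```

The point of the structure is that each real corner case is multiplied by simulation before training, so one rare event yields many training samples.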

The data closed loop relies on data centers with enormous computing power. According to NVIDIA's presentation at CES 2022, companies developing L2 assisted driving systems need only 1,000-2,000 GPUs, whereas companies developing complete L4 autonomous driving systems need 25,000 GPUs to build a data center.

1. Tesla currently has three major computing centers with a total of 11,544 GPUs: the auto-labeling center has 1,752 A100 GPUs, and the two training centers have 4,032 and 5,760 A100 GPUs respectively. Its self-developed DOJO supercomputer, unveiled at AI Day 2021, contains 3,000 D1 chips and delivers up to 1.1 EFLOPS.

2. SenseTime's Shanghai supercomputing center project, currently under construction, plans to deploy 20,000 A100 GPUs; once completed, its peak computing power will reach 3.65 EFLOPS (BF16/CFP8).
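
The Tesla figures can be checked with simple arithmetic. In the following minimal Python sketch, the three clusters add up to 11,544 GPUs, and 3,000 D1 chips at roughly 362 TFLOPS each give about 1.09 EFLOPS, consistent with the "up to 1.1 EFLOPS" quoted. The 362 TFLOPS per-chip peak (BF16/CFP8) is an assumption taken from Tesla's AI Day 2021 presentation, not from the text above.

```python
# Arithmetic behind Tesla's data-center figures.
# Assumption (not in the article): a D1 chip peaks at about 362 TFLOPS
# (BF16/CFP8), the figure Tesla gave at AI Day 2021.

tesla_a100_gpus = {
    "auto-labeling": 1_752,
    "training cluster 1": 4_032,
    "training cluster 2": 5_760,
}
total_gpus = sum(tesla_a100_gpus.values())

D1_CHIPS = 3_000
D1_PEAK_TFLOPS = 362.0
dojo_peak_eflops = D1_CHIPS * D1_PEAK_TFLOPS / 1e6  # 1 EFLOPS = 1e6 TFLOPS

if __name__ == "__main__":
    print(f"Tesla A100 GPUs: {total_gpus:,}")
    print(f"DOJO peak: {dojo_peak_eflops:.3f} EFLOPS")
```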
