Yann LeCun questions Google Research: Target propagation has been around for a long time, so where is the innovation?

Recently, Turing Award winner Yann LeCun publicly questioned a Google study.

Some time ago, Google AI proposed LocoProp, a general layer-wise loss construction framework for multilayer neural networks, in its paper "LocoProp: Enhancing BackProp via Local Loss Optimization". The framework achieves performance close to that of second-order methods while using only first-order optimizers.

More specifically, the framework reimagines a neural network as a modular composition of layers, where each layer uses its own weight regularizer, target output, and loss function, with the aim of achieving both performance and efficiency.

Google experimentally verified the effectiveness of its approach on benchmark models and datasets, narrowing the gap between first- and second-order optimizers. In addition, the Google researchers stated that their local loss construction is the first to use a squared loss as the local loss.

Source: @Google AI

Some commenters called Google's research great and interesting. Others took a different view, including Turing Award winner Yann LeCun.

He pointed out that there are many versions of what is now called target propagation (target prop), some dating back to 1986. So what sets Google's LocoProp apart from them?

Photo source: @Yann LeCun

Haohan Wang, who is about to become an assistant professor at UIUC, agreed with LeCun's question. He said it is sometimes surprising that authors believe such a simple idea is the first of its kind. Perhaps they did do something different, but the publicity team could not wait to come out and claim everything...

Photo source: @HaohanWang

However, some people were unimpressed by LeCun's remarks, suggesting that he raised the question for competitive reasons or was even "starting a war." LeCun responded that his question had nothing to do with competition. As examples, he cited former members of his lab such as Marc'Aurelio Ranzato, Karol Gregor, and Koray Kavukcuoglu, who have all used some version of target propagation and who now all work at Google DeepMind.

Photo source: @Gabriel Jimenez, @Yann LeCun

Some people even teased Yann LeCun: "When you can't beat Jürgen Schmidhuber, become him."

Is Yann LeCun right? Let's first look at what this Google study is about and whether it offers any notable innovation.

Google LocoProp: Enhanced backpropagation with local loss optimization

This research was completed by three researchers from Google: Ehsan Amid, Rohan Anil, and Manfred K. Warmuth.

Paper address: https://proceedings.mlr.press/v151/amid22a/amid22a.pdf

The paper argues that two key factors drive the success of deep neural networks (DNNs): model design and training data. Few researchers, however, discuss the optimization methods used to update the model parameters. Training a DNN involves minimizing a loss function, which measures the discrepancy between the ground-truth values and the model's predictions, and using backpropagation to update the parameters.

The simplest weight update method is stochastic gradient descent (SGD): at each step, the weights move in the direction of the negative gradient. There are also more advanced optimization methods, such as momentum optimizers and AdaGrad. These optimizers are often called first-order methods because they typically use only first-order derivative information to modify the update direction.
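As a rough illustration of the first-order updates just described, the sketch below implements plain SGD and a heavy-ball momentum step in pure Python. The function names, the toy loss `f(w) = sum(w_i^2)`, and the values of `lr` and `beta` are illustrative assumptions, not settings taken from the paper.

```python
def sgd_step(weights, grads, lr=0.1):
    """Plain SGD: move each weight against its gradient."""
    return [w - lr * g for w, g in zip(weights, grads)]


def momentum_step(weights, grads, velocity, lr=0.1, beta=0.9):
    """Heavy-ball momentum: step along a running accumulation of gradients."""
    new_velocity = [beta * v + g for v, g in zip(velocity, grads)]
    new_weights = [w - lr * v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


def toy_loss(weights):
    """Hypothetical toy loss f(w) = sum(w_i^2), used only for illustration."""
    return sum(w * w for w in weights)


def toy_grad(weights):
    """Gradient of the toy loss, which is 2 * w."""
    return [2.0 * w for w in weights]


w_init = [1.0, -2.0]

# Run 20 plain SGD steps on the toy loss.
w_sgd = list(w_init)
for _ in range(20):
    w_sgd = sgd_step(w_sgd, toy_grad(w_sgd))

# With zero initial velocity, the first momentum step equals an SGD step.
w_mom, vel = momentum_step(w_init, toy_grad(w_init), [0.0, 0.0])
```

Both rules use only the gradient itself; they differ in how past gradients influence the current step.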

There are also more advanced optimization methods, such as Shampoo and K-FAC, which have been shown to improve convergence and reduce the number of iterations. These methods capture how the gradient itself changes. Using this additional information, higher-order optimizers can find more efficient update directions for the model being trained by taking into account correlations between different groups of parameters. The disadvantage is that computing higher-order update directions is more expensive than computing first-order updates.
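To make the benefit of curvature information concrete, here is a minimal sketch comparing one gradient step with one Newton-style step on an ill-conditioned quadratic. This is not Shampoo or K-FAC: it assumes a toy loss with a known diagonal curvature, and all names and values are hypothetical.

```python
def grad(w, h):
    """Gradient of the toy loss f(w) = 0.5 * (h[0]*w[0]**2 + h[1]*w[1]**2)."""
    return [h[0] * w[0], h[1] * w[1]]


def gradient_step(w, h, lr):
    """First-order step: the same learning rate for every coordinate."""
    g = grad(w, h)
    return [w[0] - lr * g[0], w[1] - lr * g[1]]


def newton_step(w, h):
    """Second-order step: rescale each gradient coordinate by 1 / curvature."""
    g = grad(w, h)
    return [w[0] - g[0] / h[0], w[1] - g[1] / h[1]]


curvature = [100.0, 1.0]  # assumed curvatures: one steep and one flat direction
w_start = [1.0, 1.0]

# lr must stay below 2 / 100 for the steep direction to remain stable,
# so the flat direction barely moves in a single first-order step.
w_gd = gradient_step(w_start, curvature, lr=0.01)

# The curvature-aware step reaches the minimum of this quadratic at once.
w_newton = newton_step(w_start, curvature)
```

In a real network the curvature is neither diagonal nor cheap to obtain, which is where the extra memory and compute cost of higher-order methods comes from.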

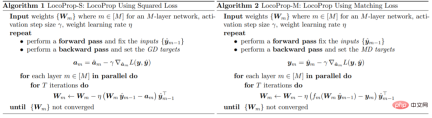

In the paper, Google introduces LocoProp, a framework for training DNN models that views a neural network as a modular composition of layers. Generally speaking, each layer of a neural network applies a linear transformation to its input, followed by a nonlinear activation function. In this study, each layer of the network is assigned its own weight regularizer, output target, and loss function. The loss function of each layer is designed to match that layer's activation function. With this formulation, training minimizes the local losses for a given mini-batch, iterating over the layers in parallel.

Google uses first-order optimizers for these parameter updates, thus avoiding the computational cost required by higher-order optimizers.

The results show that LocoProp outperforms first-order methods on deep autoencoder benchmarks and performs comparably to higher-order optimizers such as Shampoo and K-FAC, without their high memory and computational requirements.

LocoProp: Enhanced Backpropagation through Local Loss Optimization

Neural networks are typically viewed as composite functions that transform the input of each layer into an output representation. LocoProp adopts this perspective when decomposing the network into layers. In particular, instead of updating a layer's weights to minimize a loss function on the network output, LocoProp applies a predefined local loss function specific to each layer. For a given layer, the loss function is chosen to match the activation function; for example, a tanh loss would be chosen for a layer with tanh activation. Additionally, a regularization term ensures that the updated weights do not deviate too far from their current values.

Similar to backpropagation, LocoProp applies a forward pass to compute activations. In the backward pass, LocoProp sets targets for neurons in each layer. Finally, LocoProp decomposes model training into independent problems across layers, where multiple local updates can be applied to the weights of each layer in parallel.
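The idea of a per-layer target plus a weight regularizer can be sketched for a single linear layer as follows. This is a simplified illustration and not the paper's implementation. It assumes a squared local loss on the layer's pre-activations, a target obtained by moving the current pre-activation one gradient step (scale `gamma`), and a quadratic penalty `reg` on the distance from the current weights. The matched losses described above, such as a tanh loss for a tanh layer, are not modeled here. All function and variable names are hypothetical.

```python
def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def local_target(pre_act, grad_pre_act, gamma=1.0):
    """Assumed target: current pre-activation moved one gradient step."""
    return [a - gamma * g for a, g in zip(pre_act, grad_pre_act)]


def local_loss(W, W_old, x, target, reg=1.0):
    """0.5 * ||W x - target||^2 + 0.5 * reg * ||W - W_old||^2."""
    residual = [a - t for a, t in zip(matvec(W, x), target)]
    drift = sum((w - w0) ** 2
                for row, row0 in zip(W, W_old) for w, w0 in zip(row, row0))
    return 0.5 * sum(r * r for r in residual) + 0.5 * reg * drift


def local_grad(W, W_old, x, target, reg=1.0):
    """Gradient of local_loss with respect to W."""
    residual = [a - t for a, t in zip(matvec(W, x), target)]
    return [[r * xj + reg * (w - w0) for xj, w, w0 in zip(x, row, row0)]
            for r, row, row0 in zip(residual, W, W_old)]


def local_updates(W_old, x, target, lr=0.1, steps=5, reg=1.0):
    """Apply several first-order steps to this layer's local problem."""
    W = [row[:] for row in W_old]
    for _ in range(steps):
        G = local_grad(W, W_old, x, target, reg)
        W = [[w - lr * g for w, g in zip(row, g_row)]
             for row, g_row in zip(W, G)]
    return W


# Toy layer with 2 outputs and 3 inputs. grad_pre_act stands in for the
# gradient a backward pass would deliver to this layer's pre-activations.
W0 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
x = [1.0, 2.0, -1.0]
grad_pre_act = [0.4, -0.6]

target = local_target(matvec(W0, x), grad_pre_act)
first_step_grad = local_grad(W0, W0, x, target)
backprop_grad = [[g * xj for xj in x] for g in grad_pre_act]
W_new = local_updates(W0, x, target)
```

Under these assumptions, the gradient of the local problem at the current weights (`first_step_grad`) coincides with the ordinary backpropagation gradient for the layer (`backprop_grad`), and the further local steps refine the weights without needing another backward pass. Because each layer's problem depends only on its own input and target, such updates could in principle run for all layers in parallel.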

Google conducted experiments on deep autoencoder models, a common benchmark for evaluating optimization algorithms. The researchers extensively tuned several commonly used first-order optimizers (SGD, SGD with momentum, AdaGrad, RMSProp, and Adam) as well as the higher-order optimizers Shampoo and K-FAC, and compared the results with LocoProp. The results show that LocoProp performs significantly better than the first-order optimizers and is comparable to the higher-order optimizers, while being significantly faster when running on a single GPU.
