Applicable across the entire periodic table, AI instantly predicts material structure and properties

The properties of a material are determined by the arrangement of its atoms. However, existing methods for obtaining such arrangements are either too expensive or ineffective for many elements.

Now, researchers in UC San Diego's Department of Nanoengineering have developed an artificial intelligence algorithm that can predict the structure and dynamic properties of any material, whether existing or new, almost instantly. The algorithm, known as M3GNet, was used to develop the matterverse.ai database, which contains more than 31 million yet-to-be-synthesized materials whose properties are predicted by machine learning algorithms. Matterverse.ai facilitates the discovery of new technological materials with exceptional properties.

The research, titled "A universal graph deep learning interatomic potential for the periodic table", was published in Nature Computational Science on November 28, 2022.

Paper link: https://www.nature.com/articles/s43588-022-00349-3

For large-scale materials research, efficient, linearly scaling interatomic potentials (IAPs) are needed to describe the potential energy surface (PES) in terms of many-body interactions between atoms. However, most IAPs today are tailored to a narrow chemical space: usually a single element, or at most four or five elements.

Recently, machine learning of the PES has emerged as a particularly promising approach to IAP development. However, no study had yet demonstrated an IAP that is universally applicable across the periodic table and across all types of crystals.

Over the past decade, the emergence of efficient and reliable electronic structure codes and high-throughput automation frameworks has led to large federated databases of computational materials data. Structural relaxations have generated a large amount of PES data, i.e., intermediate structures and their corresponding energies, forces, and stresses, but these data have received little attention.

"Similar to proteins, we need to understand the structure of a material to predict its properties," said Shyue Ping Ong, the study's lead author. "What we need is AlphaFold for materials."

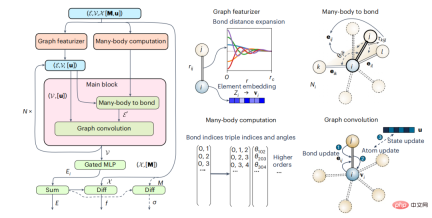

AlphaFold is an artificial intelligence algorithm developed by Google DeepMind to predict protein structures. To build the materials equivalent, Ong and his team combined graph neural networks with many-body interactions to create a deep learning architecture that works universally, with high accuracy, across all elements of the periodic table.

Mathematical graphs are natural representations of crystals and molecules, with nodes and edges representing atoms and the bonds between them, respectively. Traditional materials graph neural network models have proven very effective for general materials property prediction, but they lack physical constraints and are therefore unsuitable for use as IAPs. The researchers developed a materials graph architecture that explicitly incorporates many-body interactions. The model design draws inspiration from traditional IAPs, and this work focuses on incorporating three-body interactions, resulting in the M3GNet architecture.
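To make the graph picture concrete, here is a minimal Python sketch of how a set of atoms can be turned into a graph with bonds inside a cutoff radius, and how three-body terms (the bond angle θ_jik around a central atom i) can be enumerated from it. It is an illustration under simplifying assumptions, not the M3GNet implementation: it treats an isolated cluster (a crystal graph must also include periodic images), uses a single cutoff, and the function names and the water-like geometry are hypothetical.

```python
import math
from itertools import combinations


def build_graph(positions, cutoff):
    """Return directed bonds (i, j, r_ij) for every atom pair closer than `cutoff`.

    Simplifying assumption: an isolated cluster, so periodic images are ignored.
    """
    bonds = []
    for i, pi in enumerate(positions):
        for j, pj in enumerate(positions):
            if i != j:
                r = math.dist(pi, pj)
                if r < cutoff:
                    bonds.append((i, j, r))
    return bonds


def three_body_triplets(positions, bonds):
    """Return (i, j, k, theta_jik in degrees) for each pair of neighbors j, k of a central atom i."""
    neighbors = {}
    for i, j, _ in bonds:
        neighbors.setdefault(i, []).append(j)
    triplets = []
    for i, nbrs in neighbors.items():
        for j, k in combinations(nbrs, 2):
            v1 = [a - b for a, b in zip(positions[j], positions[i])]
            v2 = [a - b for a, b in zip(positions[k], positions[i])]
            cos_t = sum(a * b for a, b in zip(v1, v2)) / (math.hypot(*v1) * math.hypot(*v2))
            # Clamp to [-1, 1] to guard against rounding before acos
            theta = math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))
            triplets.append((i, j, k, theta))
    return triplets


# Hypothetical water-like cluster: O at the origin, two H atoms 0.9572 Å away at 104.52°
angle = math.radians(104.52)
water = [
    (0.0, 0.0, 0.0),
    (0.9572, 0.0, 0.0),
    (0.9572 * math.cos(angle), 0.9572 * math.sin(angle), 0.0),
]
bonds = build_graph(water, cutoff=1.2)
triplets = three_body_triplets(water, bonds)
print(len(bonds), "directed bonds")  # O-H in both directions; H-H (~1.51 Å) is outside the cutoff
print(triplets)
```

In a graph potential, distances and angles like these would feed learned update functions rather than being used directly.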

Figure 1: Schematic diagram of multi-body graph potential and main calculation blocks. (Source: Paper)

Benchmarking on IAP datasets

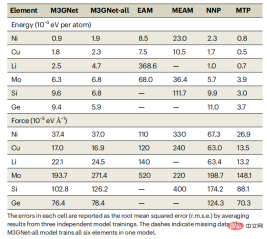

As an initial benchmark, the researchers chose diverse DFT datasets of elemental energies and forces, previously generated by Ong and colleagues, for face-centered cubic (fcc) nickel, fcc copper, body-centered cubic (bcc) lithium, bcc molybdenum, diamond silicon, and diamond germanium.

Table 1: Error comparison between the M3GNet model and existing models (EAM, MEAM, NNP and MTP) on single-element datasets. (Source: paper)

As can be seen from Table 1, the M3GNet IAP significantly outperforms the classical many-body potentials, and its performance is comparable to that of ML-IAPs based on local environment descriptors. It should be noted that although these ML-IAPs can achieve slightly smaller energy and force errors than the M3GNet IAP, they are much less flexible in handling multi-element chemistry, since adding elements to an ML-IAP often causes a combinatorial explosion in the number of regression coefficients and in the corresponding data requirements. In contrast, the M3GNet architecture represents the elemental information of each atom (node) as a learnable embedding vector. Such a framework is easily extended to multicomponent chemistry.
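The scaling argument can be illustrated with simple counting. The sketch below uses a deliberately simplified, hypothetical model: it assumes a descriptor-based ML-IAP needs a separate set of coefficients for every unordered element pair and element triplet, and compares that with a per-element embedding table of an assumed width `dim`, as in an embedding-based GNN. Real models differ in detail; only the growth rates matter here.

```python
from math import comb


def pair_types(n_elements):
    """Unordered element pairs, including like pairs (A-A, A-B, ...)."""
    return comb(n_elements + 1, 2)


def triplet_types(n_elements):
    """Unordered element triplets (multisets of size 3)."""
    return comb(n_elements + 2, 3)


def embedding_params(n_elements, dim):
    """Parameters in a learnable per-element embedding table: linear in n_elements."""
    return n_elements * dim


for n in (1, 5, 90):
    print(f"{n:>3} elements: {pair_types(n):>6} pair types, "
          f"{triplet_types(n):>7} triplet types, "
          f"{embedding_params(n, dim=64):>5} embedding parameters (dim=64)")
```

Pair and triplet counts grow quadratically and cubically with the number of elements, while the embedding table grows only linearly.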

Like other GNNs, the M3GNet framework can capture long-range interactions without increasing the cutoff radius used to construct bonds. At the same time, unlike previous GNN models, the M3GNet architecture keeps the energy, forces, and stress continuous as the number of bonds changes, which is a key requirement for an IAP.
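Why continuity matters can be seen with a smooth cutoff function. The sketch below uses a polynomial envelope that goes to zero, together with its first and second derivatives, at the cutoff radius `rc`. This particular form is an assumption chosen for illustration (it is a common choice in ML potentials), not necessarily M3GNet's exact function, and `pair_energy` is a hypothetical toy term. Multiplying each bond's contribution by such an envelope means a bond crossing the cutoff fades in or out smoothly, so energies and forces do not jump when the number of bonds changes.

```python
def smooth_cutoff(r, rc):
    """Polynomial envelope f = 1 - 6x^5 + 15x^4 - 10x^3 with x = r / rc.

    f(0) = 1, and f, f', f'' all vanish at r = rc, so contributions switch off smoothly.
    """
    if r >= rc:
        return 0.0
    x = r / rc
    return 1.0 - 6.0 * x**5 + 15.0 * x**4 - 10.0 * x**3


def pair_energy(r, rc, eps=1.0):
    """Hypothetical bond contribution: a constant attraction damped by the envelope."""
    return -eps * smooth_cutoff(r, rc)


def numerical_force(r, rc, h=1e-6):
    """Central finite difference F = -dE/dr."""
    return -(pair_energy(r + h, rc) - pair_energy(r - h, rc)) / (2 * h)


rc = 5.0
for r in (4.9, 4.99, 4.999, 5.0, 5.001):
    print(f"r = {r:.3f}  E = {pair_energy(r, rc):+.3e}  F = {numerical_force(r, rc):+.3e}")
```

Both E and F approach zero as r approaches `rc`, so nothing changes abruptly when the bond leaves the graph.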

Universal IAP for the periodic table

To develop an IAP for the entire periodic table, the team used one of the world's largest open databases of DFT crystal structure relaxations, the Materials Project.

Figure 2: Distribution of MPF.2021.2.8 data set. (Source: paper)

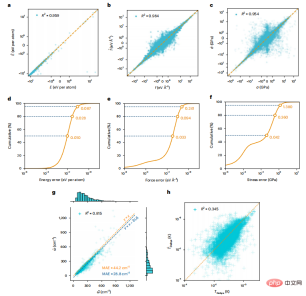

In principle, an IAP can be trained on energies only, or on a combination of energies and forces. In practice, an M3GNet IAP trained only on energies (M3GNet-E) cannot achieve reasonable accuracy in predicting forces or stresses, with mean absolute errors (MAEs) even larger than the mean absolute deviation of the data. The M3GNet models trained on energies and forces (M3GNet-EF) and on energies, forces, and stresses (M3GNet-EFS) achieved similar energy and force MAEs, but the stress MAE of M3GNet-EFS was about half that of the M3GNet-EF model.
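The difference between the E, EF and EFS variants comes down to which terms enter the training loss. Here is a minimal sketch of a weighted multi-target loss; the mean-squared-error form, the weight values and the dictionary layout are assumptions for illustration, not the paper's exact settings. Setting a weight to zero reproduces an energy-only or energy-plus-force model.

```python
def mse(pred, target):
    """Mean squared error over two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def combined_loss(pred, target, w_energy=1.0, w_force=1.0, w_stress=0.1):
    """Weighted sum of per-property losses (hypothetical default weights).

    `pred` and `target` map "energy" (per atom), "forces" (flattened components)
    and "stress" (flattened components) to lists of floats.
    """
    return (w_energy * mse(pred["energy"], target["energy"])
            + w_force * mse(pred["forces"], target["forces"])
            + w_stress * mse(pred["stress"], target["stress"]))


target = {"energy": [1.1, 2.0], "forces": [0.1, -0.1, 0.0], "stress": [0.5]}
pred = {"energy": [1.0, 2.0], "forces": [0.0, 0.0, 0.0], "stress": [0.0]}

loss_e = combined_loss(pred, target, w_force=0.0, w_stress=0.0)  # "E" model: blind to force and stress errors
loss_ef = combined_loss(pred, target, w_stress=0.0)              # "EF" model
loss_efs = combined_loss(pred, target)                           # "EFS" model
print(loss_e, loss_ef, loss_efs)
```

Because the energy-only loss assigns no penalty to force or stress errors, nothing in training directly pushes those quantities toward the DFT values.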

For applications involving lattice changes, such as structural relaxation or NpT molecular dynamics simulations, accurate stress prediction is necessary. The findings indicate that including all three properties (energy, forces, and stresses) in model training is crucial for obtaining a practical IAP. The final M3GNet-EFS IAP (hereafter referred to as the M3GNet model) achieved test MAEs of 0.035 eV per atom, 0.072 eV Å−1 and 0.41 GPa for energies, forces, and stresses, respectively.

Figure 3: Model predictions on the test dataset compared to DFT calculations.

On the test data, the model predictions and the DFT ground truth agree well, as shown by the high linearity and R² value of the linear fit between DFT values and model predictions. The cumulative distribution of model errors shows that 50% of the data have energy, force, and stress errors below 0.01 eV per atom, 0.033 eV Å−1 and 0.042 GPa, respectively. The Debye temperature calculated by M3GNet is less accurate, which can be attributed to M3GNet's relatively poor prediction of the shear modulus; the bulk modulus predictions, however, are reasonable.
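The error statistics quoted here (the median error read off the cumulative distribution, and the R² of a linear fit between DFT and model values) are straightforward to compute. The sketch below uses only the Python standard library; the sample numbers are made up for illustration and are not data from the paper.

```python
import statistics


def fit_r_squared(x, y):
    """R^2 of an ordinary least-squares line y = a*x + b (equal to the squared Pearson correlation)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def fraction_below(errors, threshold):
    """Fraction of samples whose absolute error is below `threshold` (one point on the CDF)."""
    return sum(1 for e in errors if abs(e) < threshold) / len(errors)


# Illustrative (not real) per-atom energies in eV
dft = [-3.2, -4.1, -5.0, -6.3, -7.4]
model = [-3.18, -4.15, -4.97, -6.35, -7.39]
errors = [abs(m - d) for m, d in zip(model, dft)]

print("R^2:", fit_r_squared(dft, model))
print("median |error|:", statistics.median(errors))  # the 50% point of the cumulative error distribution
print("fraction below 0.04 eV:", fraction_below(errors, 0.04))
```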

The M3GNet IAP was then applied to simulate a materials discovery workflow in which the final DFT structure is not known a priori. M3GNet relaxations were performed on the initial structures of a test dataset of 3,140 materials. Energy calculations on the M3GNet-relaxed structures yielded an MAE of 0.035 eV per atom, and 80% of the materials had errors below 0.028 eV per atom. The error distribution obtained with M3GNet-relaxed structures is close to that obtained with the known final DFT structures, indicating that M3GNet can help obtain the correct structures accurately. In general, M3GNet relaxations converge quickly.
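The relaxation step itself is an optimization loop: move atoms along the predicted forces until the largest force falls below a tolerance. The toy sketch below relaxes a Lennard-Jones dimer with capped steepest descent. The Lennard-Jones potential stands in for an ML potential such as M3GNet, and the step size, step cap and `fmax` tolerance are assumed values; practical workflows use more robust optimizers (for example FIRE or BFGS) and also relax the cell using the predicted stress.

```python
def lj_force(r, eps=1.0, sigma=1.0):
    """Radial force F = -dE/dr for a Lennard-Jones pair; positive means repulsive."""
    s6 = (sigma / r) ** 6
    return 24.0 * eps * (2.0 * s6 * s6 - s6) / r


def relax_dimer(r0, step=0.01, max_step=0.1, fmax=1e-6, max_iter=1000):
    """Capped steepest descent on the separation r. Returns (r, iterations, converged)."""
    r = r0
    for it in range(max_iter):
        f = lj_force(r)
        if abs(f) < fmax:
            return r, it, True
        # Move along the force, but never by more than max_step in one iteration
        dr = max(-max_step, min(max_step, step * f))
        r += dr
    return r, max_iter, False


r, iterations, converged = relax_dimer(1.0)
print(r, iterations, converged)  # r should approach the analytic minimum 2**(1/6)
```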

Figure 4: Relaxed crystal structure using M3GNet. (Source: paper)

New material discovery

M3GNet can quickly and accurately relax any crystal structure and predict its energy, making it ideal for large-scale materials discovery. The researchers generated 31,664,858 candidate structures as starting points, used the M3GNet IAP to relax them, and calculated the signed energy distance to the Materials Project convex hull (Ehull-m); 1,849,096 materials had Ehull-m below 0.01 eV per atom.
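Ehull-m is a distance to a convex hull of formation energies. For a binary A-B system the idea fits in a few lines: build the lower convex hull of (composition, formation energy per atom) points for known phases, then measure how far a candidate lies above it (positive) or below it (negative, meaning it would be predicted more stable than the known phases). The phases and energies below are hypothetical; multicomponent systems such as those in the Materials Project need a higher-dimensional hull, which libraries like pymatgen's `PhaseDiagram` provide.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def lower_hull(points):
    """Lower convex hull of (x, energy) points via Andrew's monotone chain."""
    hull = []
    for p in sorted(set(points)):
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
            hull.pop()
        hull.append(p)
    return hull


def hull_energy(x, hull):
    """Linearly interpolate the hull energy at composition x (0 <= x <= 1)."""
    for (x0, e0), (x1, e1) in zip(hull, hull[1:]):
        if x0 <= x <= x1:
            return e0 + (x - x0) / (x1 - x0) * (e1 - e0)
    raise ValueError("composition outside the hull range")


def signed_e_hull(x, energy, known_phases):
    """Signed distance to the hull: > 0 above (metastable/unstable), < 0 below (new ground state)."""
    return energy - hull_energy(x, lower_hull(known_phases))


# Hypothetical A-B system: x is the fraction of B, energies are formation energies in eV/atom
known = [(0.0, 0.0), (1.0, 0.0), (0.5, -0.4)]
print(signed_e_hull(0.25, -0.15, known))  # above the hull
print(signed_e_hull(0.25, -0.25, known))  # below the hull
```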

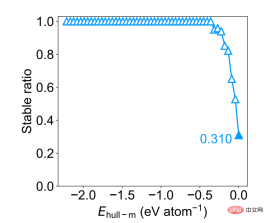

As a further evaluation of M3GNet's performance in materials discovery, the researchers calculated the discovery rate of DFT-stable materials (Ehull-dft ≤ 0) using 1,000 structures uniformly sampled from the approximately 1.8 million materials with Ehull-m below 0.001 eV per atom. The discovery rate remains close to 1.0 up to an Ehull-m threshold of approximately 0.5 eV per atom, and remains at a reasonably high value of 0.31 at the most stringent threshold of 0.001 eV per atom.

Figure 5: DFT stability ratio as a function of Ehull−m threshold for a uniform sample of 1000 structures. (Source: paper)

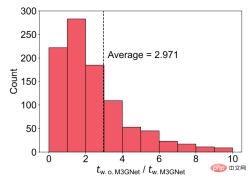

For this set of materials, the researchers also compared the DFT relaxation time with and without M3GNet pre-relaxation. The results show that without M3GNet pre-relaxation, DFT relaxation takes approximately three times as long as with it.

Figure 6: DFT acceleration using M3GNet pre-relaxation. (Source: paper)

Of the 31 million materials in matterverse.ai today, more than 1 million are predicted to be potentially stable. Ong and his team intend to significantly expand not only the number of materials but also the number of properties that ML can predict, including high-value properties with small datasets, using the multi-fidelity approach they previously developed.

In addition to structural relaxation, the M3GNet IAP has broad applications in dynamic simulations of materials and in property prediction.
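One example of such a dynamic property is ion diffusivity, which is typically extracted from molecular dynamics trajectories through the Einstein relation, MSD(t) ≈ 2dDt in d dimensions, where MSD is the mean squared displacement of the mobile ions. The sketch below assumes unwrapped coordinates stored as `traj[frame][atom] = (x, y, z)` and fits the slope through the origin by least squares; real analyses typically fit only the linear regime, average over time origins, and convert D to an ionic conductivity with the Nernst-Einstein relation. The trajectory data are hypothetical.

```python
def mean_squared_displacement(traj):
    """MSD of each frame relative to frame 0, averaged over atoms.

    Assumes `traj[frame][atom]` holds unwrapped (x, y, z) coordinates.
    """
    origin = traj[0]
    msd = []
    for frame in traj:
        total = sum(
            sum((a - b) ** 2 for a, b in zip(pos, pos0))
            for pos, pos0 in zip(frame, origin)
        )
        msd.append(total / len(frame))
    return msd


def diffusion_coefficient(times, msd, dim=3):
    """Einstein relation: D = slope / (2 * dim), with the slope fitted through the origin."""
    slope = sum(t * m for t, m in zip(times, msd)) / sum(t * t for t in times)
    return slope / (2 * dim)


# Tiny hypothetical trajectory: 2 atoms, 2 frames
traj = [
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0)],
]
print(mean_squared_displacement(traj))

# Synthetic MSD data that grow linearly as 6 * D * t with D = 0.5
times = [0.0, 1.0, 2.0, 3.0]
print(diffusion_coefficient(times, [6 * 0.5 * t for t in times]))
```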

"For example, we are often interested in the rate of diffusion of lithium ions in the electrode or electrolyte of a lithium-ion battery. The faster the diffusion, the faster the battery can be charged or discharged," Ong said. "We have demonstrated that M3GNet IAP can be used to predict the lithium conductivity of materials with high accuracy. We firmly believe that the M3GNet architecture is a transformative tool that can greatly expand our ability to explore new materials chemistry and structure."

To promote the use of M3GNet, the team has released the framework as open-source Python code on GitHub. There are also plans to integrate the M3GNet IAP as a tool into commercial materials simulation packages.

The above is the detailed content of Common to the entire periodic table of elements, AI instantly predicts material structure and properties. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

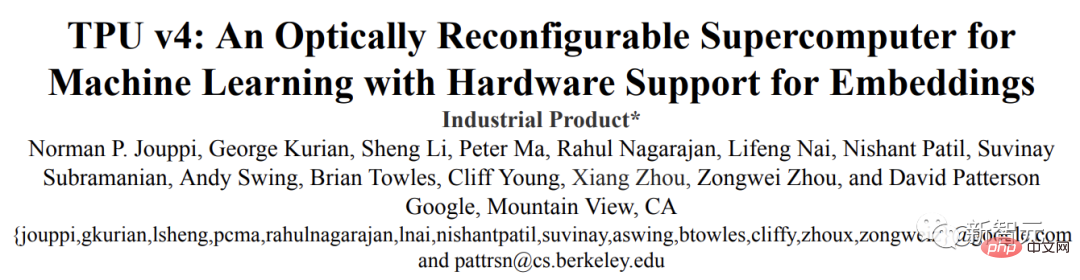

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

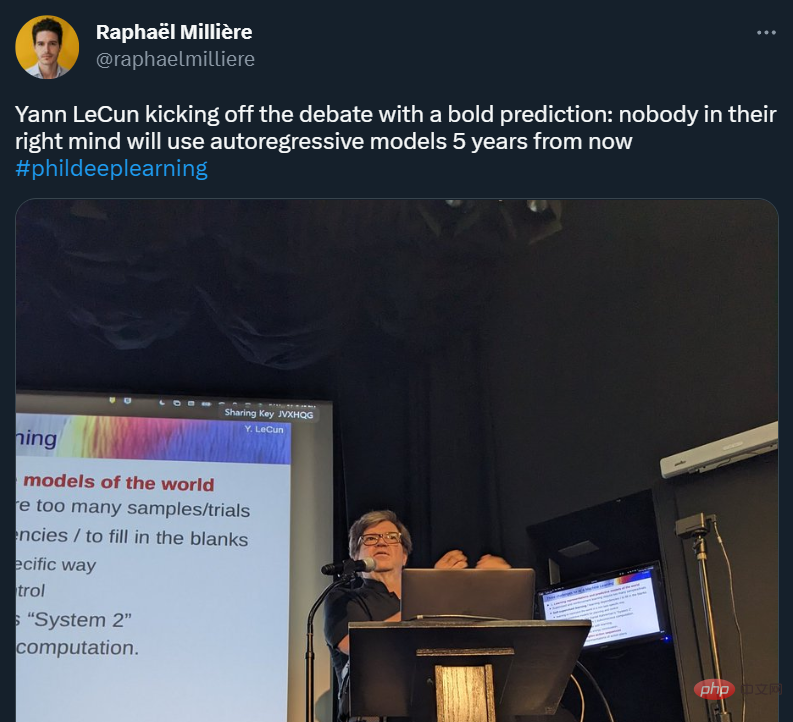

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.