A single card can run AI painting models. Tutorials that even novices can understand are here. Free NPU computing power is available with 1 million cards.

I believe everyone is familiar with the recent popularity of AI drawing.

From an AI-generated work that beat many human artists to win a digital art championship, to today's boom of platforms at home and abroad such as DALL·E, Imagen, and NovelAI, AI drawing has flourished.

Perhaps you have visited one of these websites and asked the AI to paint the scenery in your mind, or uploaded a flattering photo of yourself, only to burst out laughing at the rough-looking guy it finally produced.

So, while enjoying the charm of AI drawing, have you ever wondered (no, surely you have) what mystery lies behind it?

△"Space Opera" (Théâtre D'opéra Spatial), the work that won the digital art category at the Colorado State Fair in the United States

Everything starts with a model called DDPM...

What is DDPM?

DDPM, short for Denoising Diffusion Probabilistic Model, can be considered the progenitor of today's diffusion models.

Unlike earlier generative models such as GANs, VAEs, and flow models, a diffusion model generates an image gradually: it starts from a pure noise image and refines it step by step.

△Comparison of existing image generation models

Some friends may ask, what is a pure noise image?

It's simple: when an old TV has no signal, the "snow" that fills the screen, accompanied by a crackling hiss, is a pure noise picture.

In the generation phase, DDPM removes these "snowflakes" bit by bit until a clear image emerges. We call this stage "denoising".

△Pure noise picture: Snowflake screen of old TV

From this description, you can tell that denoising is actually quite a complicated process.

There is no fixed rule for denoising. You might work at it for a long time and still end up in tears in front of some weird picture.

Moreover, different types of pictures follow different denoising rules. As for how to let a machine learn these rules, someone came up with a clever idea:

Since the denoising rules are difficult to learn, why don’t I first turn a picture into a pure noise image by adding noise, and then do the whole process in reverse?

This establishes the entire training-inference process of the diffusion model: first, noise is gradually added in the forward process, converting the image into a pure noise image that approximates a Gaussian distribution;

then, in the reverse process, the noise is gradually removed to generate an image;

finally, the model is optimized with the goal of increasing the similarity between the original image and the generated image, until it performs as desired.

△DDPM’s training-inference process

How is everyone following so far? If this all feels easy, get ready: I'm about to unleash the ultimate move (in-depth theory).

1.1.1 Forward process

The forward process is also called the diffusion process, and as a whole it is a Markov chain. Starting from a data sample x_0 ~ q(x), Gaussian noise is added at each of T steps. The transition from step t-1 (x_{t-1}) to step t (x_t) can be written as a Gaussian distribution:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right)$$

With an appropriate schedule, the original data x_0 gradually loses its distinguishing features as t increases. As the number of noising steps grows toward infinity, the final data x_T becomes a featureless picture of completely random noise, which is exactly the "snowflake screen" we started with.

The amount of change at each step is controlled by the hyperparameter βt. Given the starting image, the whole forward noising process is therefore known and controllable: we know exactly which distribution the data follows at every step.

The problem is that computing the data x_t at some step t this way means starting from x_0 and applying every step in sequence, which is tedious. Fortunately, thanks to properties of the Gaussian distribution (a sum of independent Gaussians is again Gaussian), we can obtain x_t directly from x_0 in one step:

$$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t)\mathbf{I}\right), \quad \text{i.e.} \quad x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon,\ \ \epsilon \sim \mathcal{N}(0, \mathbf{I})$$
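To make the Markov chain concrete, here is a minimal NumPy sketch of the step-by-step forward process. It assumes the linear βt schedule used in the DDPM paper (βt rising from 1e-4 to 0.02 over T = 1000 steps); the function names are illustrative, not part of any library.

```python
import numpy as np

def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Linearly increasing noise variances beta_1..beta_T (assumed DDPM-style schedule)."""
    return np.linspace(beta_start, beta_end, T)

def forward_step(x_prev: np.ndarray, beta_t: float, rng: np.random.Generator) -> np.ndarray:
    """Sample x_t ~ N(sqrt(1 - beta_t) * x_{t-1}, beta_t * I)."""
    noise = rng.standard_normal(x_prev.shape)
    return np.sqrt(1.0 - beta_t) * x_prev + np.sqrt(beta_t) * noise

def noise_iteratively(x0: np.ndarray, betas: np.ndarray, steps: int, seed: int = 0) -> np.ndarray:
    """Apply the first `steps` forward transitions one at a time, starting from x0."""
    rng = np.random.default_rng(seed)
    x = x0
    for t in range(steps):
        x = forward_step(x, betas[t], rng)
    return x

if __name__ == "__main__":
    betas = linear_beta_schedule()
    x0 = np.ones(50_000)  # a toy "image" in which every pixel equals 1
    xT = noise_iteratively(x0, betas, steps=len(betas))
    # After all T steps the mean is close to 0 and the variance close to 1: pure noise.
    print(f"mean={xT.mean():.3f}, var={xT.var():.3f}")
```

Each step shrinks the signal slightly and mixes in a little fresh noise, so after enough steps nothing of x0 survives.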

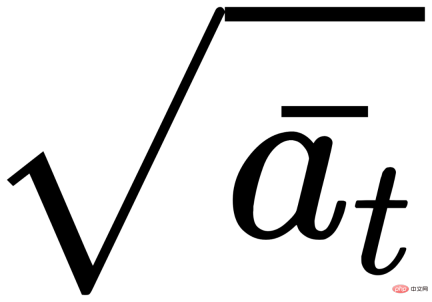

Note that here

$$\alpha_t = 1-\beta_t, \qquad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$$

are combination coefficients, which are essentially expressions of the hyperparameters βt.
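The one-step shortcut can be checked numerically. The sketch below (again assuming the DDPM-style linear schedule; names are illustrative) computes αt and ᾱt, samples x_t in one jump via x_t = sqrt(ᾱt)·x_0 + sqrt(1 − ᾱt)·ε, and compares its statistics with the step-by-step chain.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed linear schedule; betas[i] is beta_{i+1}
alphas = 1.0 - betas                 # alpha_t = 1 - beta_t
alphas_cumprod = np.cumprod(alphas)  # alpha_bar_t = alpha_1 * ... * alpha_t

def q_sample(x0: np.ndarray, t: int, rng: np.random.Generator) -> np.ndarray:
    """Jump straight from x0 to x_t (t is 1-based) using the closed form."""
    a_bar = alphas_cumprod[t - 1]
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * eps

def q_sample_iterative(x0: np.ndarray, t: int, rng: np.random.Generator) -> np.ndarray:
    """Reference: apply the t single-step transitions one after another."""
    x = x0
    for i in range(t):
        x = np.sqrt(alphas[i]) * x + np.sqrt(betas[i]) * rng.standard_normal(x.shape)
    return x

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x0 = np.full(50_000, 0.8)
    for t in (10, 250, 1000):
        one_shot = q_sample(x0, t, rng)
        stepwise = q_sample_iterative(x0, t, rng)
        # Both should have mean ~ sqrt(alpha_bar_t) * 0.8 and variance ~ 1 - alpha_bar_t
        print(f"t={t}: mean {one_shot.mean():.3f} vs {stepwise.mean():.3f}, "
              f"var {one_shot.var():.3f} vs {stepwise.var():.3f}")
```

The two samplers produce different random draws but the same distribution, which is why training code can jump to any step t directly.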

1.1.2 Reverse process

Like the forward process, the reverse process is also a Markov chain, but with different parameters, and those parameters are exactly what we need the machine to learn.

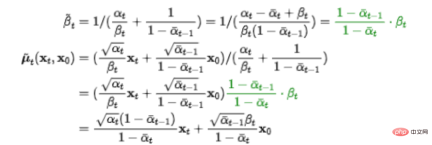

Before looking at how the machine learns, let's first consider: given a particular original sample x_0, what is the exact process for going back from step t (x_t) to step t-1 (x_{t-1})?

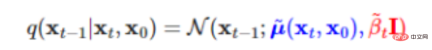

The answer is that it can still be expressed as a Gaussian distribution:

$$q(x_{t-1} \mid x_t, x_0) = \mathcal{N}\left(x_{t-1};\ \tilde{\mu}_t(x_t, x_0),\ \tilde{\beta}_t \mathbf{I}\right)$$

Note that x_0 must be conditioned on here, which means the image produced by the reverse process remains tied to the original data. If you input a picture of a cat, the model should generate a cat; if you input a picture of a dog, the generated image should likewise be related to a dog. If x_0 were removed, then whatever type of images were used for training, diffusion would end up generating the same images: "cats and dogs would be indistinguishable".

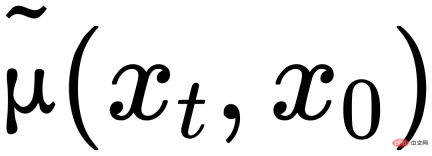

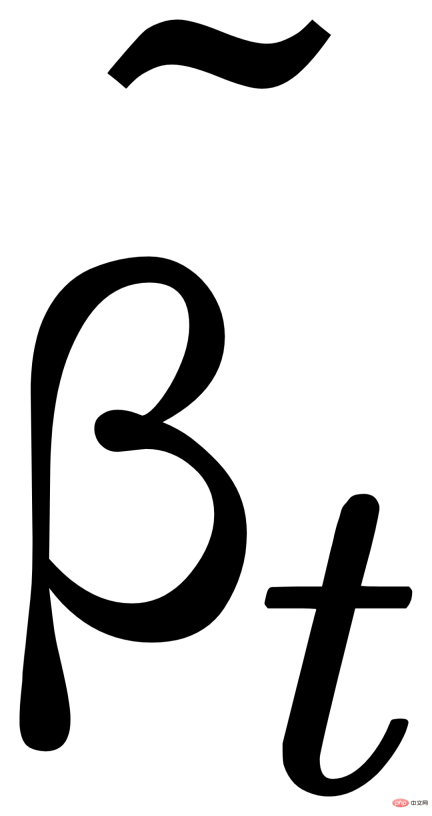

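After some derivation, we find that the parameters of this reverse step, the mean μ̃t and the variance β̃t, can still be expressed in terms of x_0, x_t, and the parameters βt, αt, and ᾱt:

$$\tilde{\mu}_t(x_t, x_0) = \frac{\sqrt{\bar{\alpha}_{t-1}}\,\beta_t}{1-\bar{\alpha}_t}\,x_0 + \frac{\sqrt{\alpha_t}\,(1-\bar{\alpha}_{t-1})}{1-\bar{\alpha}_t}\,x_t, \qquad \tilde{\beta}_t = \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\,\beta_t$$

Isn't it amazing~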
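Those coefficients are cheap to precompute for every t. A minimal sketch, assuming the same DDPM-style linear schedule and the convention ᾱ_0 = 1 (array index i corresponds to step t = i + 1; names are illustrative):

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)                          # assumed linear schedule
alphas = 1.0 - betas                                         # alpha_t
alphas_cumprod = np.cumprod(alphas)                          # alpha_bar_t
alphas_cumprod_prev = np.append(1.0, alphas_cumprod[:-1])    # alpha_bar_{t-1}, with alpha_bar_0 = 1

# Variance of q(x_{t-1} | x_t, x_0): beta_tilde_t = (1 - alpha_bar_{t-1}) / (1 - alpha_bar_t) * beta_t
posterior_variance = betas * (1.0 - alphas_cumprod_prev) / (1.0 - alphas_cumprod)
# Mean of q(x_{t-1} | x_t, x_0) is coef_x0 * x_0 + coef_xt * x_t
coef_x0 = np.sqrt(alphas_cumprod_prev) * betas / (1.0 - alphas_cumprod)
coef_xt = np.sqrt(alphas) * (1.0 - alphas_cumprod_prev) / (1.0 - alphas_cumprod)

def posterior_mean(x0: np.ndarray, xt: np.ndarray, t: int) -> np.ndarray:
    """mu_tilde_t(x_t, x_0) for a 1-based step t."""
    return coef_x0[t - 1] * x0 + coef_xt[t - 1] * xt

print(posterior_variance[0])  # 0.0: at t = 1 the true reverse step is deterministic
```

At t = 1 the x_t coefficient vanishes and the x_0 coefficient is 1, so the last reverse step lands exactly on x_0; at every step β̃t stays below βt.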

Of course, the machine does not know this in advance. What it can do is approximate the true reverse process with an estimated distribution, written pθ(x_{t-1}|x_t).
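As a sketch of how pθ is used at generation time, the loop below runs the reverse chain from pure noise down to x_0. It assumes the noise-prediction parameterization from the DDPM paper, where a network εθ(x_t, t) estimates the added noise and the mean is (x_t − βt / sqrt(1 − ᾱt) · εθ) / sqrt(αt), and it assumes the variance β̃t. `eps_model` is a hypothetical stand-in for the trained network; to test the loop we plug in an "oracle" that knows x_0, in which case the chain should end exactly at x_0.

```python
from typing import Callable
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)  # assumed linear schedule
alphas = 1.0 - betas
alphas_cumprod = np.cumprod(alphas)
alphas_cumprod_prev = np.append(1.0, alphas_cumprod[:-1])
posterior_variance = betas * (1.0 - alphas_cumprod_prev) / (1.0 - alphas_cumprod)

def p_sample_loop(eps_model: Callable[[np.ndarray, int], np.ndarray],
                  shape: tuple, seed: int = 0) -> np.ndarray:
    """Generate a sample by running the reverse chain from x_T ~ N(0, I) to x_0."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)  # start from the "snowflake screen"
    for t in range(T, 0, -1):       # t = T, T-1, ..., 1
        i = t - 1
        eps = eps_model(x, t)       # predicted noise contained in x_t
        mean = (x - betas[i] / np.sqrt(1.0 - alphas_cumprod[i]) * eps) / np.sqrt(alphas[i])
        if t > 1:
            x = mean + np.sqrt(posterior_variance[i]) * rng.standard_normal(shape)
        else:
            x = mean                # no noise is added at the final step
    return x

def make_oracle(x0: np.ndarray) -> Callable[[np.ndarray, int], np.ndarray]:
    """Hypothetical 'perfect' noise predictor that cheats by knowing x0 (testing only)."""
    def eps_model(xt: np.ndarray, t: int) -> np.ndarray:
        a_bar = alphas_cumprod[t - 1]
        return (xt - np.sqrt(a_bar) * x0) / np.sqrt(1.0 - a_bar)
    return eps_model

if __name__ == "__main__":
    target = np.linspace(-1.0, 1.0, 16).reshape(4, 4)
    out = p_sample_loop(make_oracle(target), target.shape)
    print(np.allclose(out, target))  # the oracle recovers the target image
```

A real model only approximates the oracle, which is exactly the gap the training objective tries to close.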

1.1.3 Optimization Goal

We mentioned at the beginning that the model is optimized by increasing the similarity between the original data and the data finally generated by the reverse process. In machine learning, this similarity is usually measured with cross entropy.

Academically, cross entropy is defined as a measure of the difference between two probability distributions. In other words, the smaller the cross entropy, the closer the images generated by the model are to the original images. In most cases, however, cross entropy is difficult or impossible to compute directly, so we usually achieve the same effect by optimizing a simpler expression.
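A tiny numeric illustration of the definition for discrete distributions, H(p, q) = −Σ p·log q: it is smallest when the model distribution q equals the true distribution p (where it reduces to the entropy of p) and grows as q drifts away. The probabilities below are made-up toy values.

```python
import numpy as np

def cross_entropy(p, q) -> float:
    """H(p, q) = -sum_i p_i * log(q_i), in nats; p is the true distribution, q the model's."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(-np.sum(p * np.log(q)))

p = np.array([0.7, 0.2, 0.1])          # "real" data distribution (toy values)
close_q = np.array([0.6, 0.25, 0.15])  # a model close to p
far_q = np.array([0.1, 0.2, 0.7])      # a model far from p

print(cross_entropy(p, p))        # about 0.802: the entropy of p, the minimum possible value
print(cross_entropy(p, close_q))  # slightly larger
print(cross_entropy(p, far_q))    # much larger
```

For images, p and q live over an astronomically large space, which is why the exact value cannot be computed and a bound is optimized instead.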

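The diffusion model borrows the optimization idea of the VAE: instead of the cross entropy itself, it optimizes the variational lower bound (VLB, also known as ELBO). Maximizing the VLB is the same as minimizing its negative, L_VLB below, which is an upper bound on the cross entropy. After many steps of decomposition, we finally get:

$$L_{\mathrm{VLB}} = L_T + L_{T-1} + \cdots + L_0$$

$$L_T = D_{\mathrm{KL}}\big(q(x_T \mid x_0)\,\|\,p(x_T)\big), \quad L_{t-1} = D_{\mathrm{KL}}\big(q(x_{t-1} \mid x_t, x_0)\,\|\,p_\theta(x_{t-1} \mid x_t)\big)\ \ (2 \le t \le T), \quad L_0 = -\log p_\theta(x_0 \mid x_1)$$

Many readers will get a headache at the sight of such a complicated formula. Don't panic: all you need to focus on here is the middle term L_{t-1}. It measures the difference (KL divergence) between the estimated distribution pθ(x_{t-1}|x_t) and the true distribution q(x_{t-1}|x_t, x_0) of the step from x_t to x_{t-1}. The smaller the gap, the better the final image generated by the model.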
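Since both distributions in L_{t-1} are Gaussian, the KL divergence has a closed form and needs no sampling. A minimal sketch for diagonal Gaussians using the standard formula (the function name is illustrative):

```python
import numpy as np

def gaussian_kl(mean_q, var_q, mean_p, var_p) -> float:
    """KL( N(mean_q, var_q) || N(mean_p, var_p) ) for diagonal Gaussians, summed over dimensions."""
    mean_q, var_q = np.asarray(mean_q, float), np.asarray(var_q, float)
    mean_p, var_p = np.asarray(mean_p, float), np.asarray(var_p, float)
    kl = 0.5 * (np.log(var_p / var_q) + (var_q + (mean_q - mean_p) ** 2) / var_p - 1.0)
    return float(np.sum(kl))

print(gaussian_kl(0.0, 1.0, 0.0, 1.0))  # 0.0: identical distributions
print(gaussian_kl(0.0, 1.0, 1.0, 1.0))  # 0.5: same variance, means one unit apart
```

In L_{t-1}, the first distribution is the true posterior q(x_{t-1}|x_t, x_0) and the second is the model's pθ(x_{t-1}|x_t). If the model uses the same fixed variance, the KL reduces to a scaled squared distance between the two means, so training amounts to pulling the predicted mean toward μ̃t.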

1.1.4 Show me the code

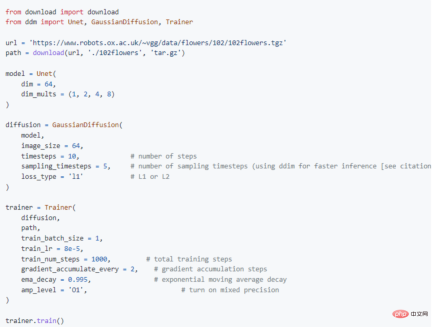

After understanding the principles behind DDPM, let us see how the DDPM model is implemented...

Just kidding. By this point, you surely don't want to wade through hundreds or thousands of lines of code.

Fortunately, MindSpore provides a ready-made DDPM model that supports both training and inference. It is simple to use and runs on a single card. Readers who want to try it out only need to run

```shell
pip install denoising-diffusion-mindspore
```

Then, refer to the following code to configure parameters:

A brief explanation of the important parameters:

GaussianDiffusion

- image_size: Image size

- timesteps: Number of noise steps

- sampling_timesteps: number of sampling steps; to speed up inference, it should be smaller than the number of noising steps (timesteps)

Trainer

- folder_or_dataset: the data source (the path shown in the figure); either the path (str) of a downloaded dataset, or an already-processed VisionBaseDataset, GeneratorDataset, or MindDataset

- train_batch_size: batch size

- train_lr: learning rate

- train_num_steps: number of training steps
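Why can sampling_timesteps be smaller than timesteps? A common approach (used, for example, by DDIM-style samplers) is to visit only an evenly spaced subset of the noise steps during inference. We have not checked how denoising-diffusion-mindspore picks that subset, so the helper below is a hypothetical illustration of the idea, not the library's code; the values 1000 and 250 are illustrative.

```python
import numpy as np

def strided_timesteps(timesteps: int, sampling_timesteps: int) -> list:
    """Hypothetical helper: evenly spaced steps from timesteps-1 down to 0, in visiting order."""
    if not 0 < sampling_timesteps <= timesteps:
        raise ValueError("sampling_timesteps must be in (0, timesteps]")
    # Spacing is at least 1, so rounding never produces duplicate steps.
    steps = np.linspace(timesteps - 1, 0, sampling_timesteps).round().astype(int)
    return steps.tolist()

schedule = strided_timesteps(1000, 250)
print(len(schedule), schedule[0], schedule[-1])  # 250 999 0
```

Visiting 250 of 1000 steps cuts the number of network calls at inference time by a factor of four, at some cost in quality.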

MindDiffusion: the "advanced version" of DDPM

DDPM is just the beginning of the story of Diffusion. At present, countless researchers have been attracted by the magnificent world behind it and have devoted themselves to it.

While continually improving the model, they have also gradually extended diffusion to a variety of fields.

These include image optimization, inpainting, and 3D vision in computer vision; text-to-speech in natural language processing; and molecular conformation generation and material design in AI for Science.

Eric Zelikman, a PhD student in the Department of Computer Science at Stanford University, creatively combined DALL·E 2 with ChatGPT, another recently popular conversational model, to create a heartwarming picture-book story.

△A story about a little robot named "Robbie", created with DALL·E 2 and ChatGPT

But the application best known to the public is surely text-to-image: enter a few keywords or a short description, and the model generates a matching picture for you.

For example, if you enter "city night scene, cyberpunk, Greg Rutkowski", the result is a brightly colored work with a futuristic sci-fi style.

For another example, if you enter "Monet, Woman with a Parasol, moon, dream", the result is a very hazy portrait of a woman with a wood-toned palette. Doesn't it remind you of Monet's "Water Lilies"?

Want a realistic landscape photo as a screensaver? No problem!

△Country Field Screensaver

Want something more anime-style? That's OK too!

△"Made in Abyss", realistic style, landscape painting

All the pictures above were made with Wukong-Huahua on the MindDiffusion platform. Wukong-Huahua is a large Chinese text-to-image model based on diffusion, jointly developed by Huawei's Noah's Ark Lab, the ChinaSoft Distributed Parallel Laboratory, and the Ascend Computing Product Department.

The model is trained on the Wukong dataset and implemented with the MindSpore and Ascend software and hardware stack.

If you are eager to give it a try, don't worry. To give everyone a better experience and more room for their own development, we plan to make the models in MindDiffusion support both training and inference as well. They are expected to be released next year, so stay tuned.

We welcome everyone to brainstorm and generate various unique styles of works~

(According to colleagues who went digging for inside information, some people have already started trying "Zhang Fei doing embroidery", "Liu Huaqiang cutting melons", and "Ancient Greek gods vs. Godzilla". Ummmm, what can I say? I'm suddenly looking forward to the results (ಡωಡ))

One More Thing

One last thing: now that diffusion is booming, some people have asked why it has become so popular, even starting to steal the limelight from GANs.

Diffusion has outstanding advantages but also obvious drawbacks; many of its areas remain unexplored, and its future is still uncertain.

Why are there so many people working tirelessly on it?

Perhaps, Professor Ma Yi’s words can provide us with an answer.

But the effectiveness of the diffusion process and its rapid replacement of GAN also fully illustrate a simple truth:

A few lines of simple, correct mathematical derivation can be far more effective than a decade of large-scale tuning of hyperparameters and network architectures.

Perhaps this is the charm of the Diffusion model.


The above is the detailed content of A single card can run AI painting models. Tutorials that even novices can understand are here. Free NPU computing power is available with 1 million cards.. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

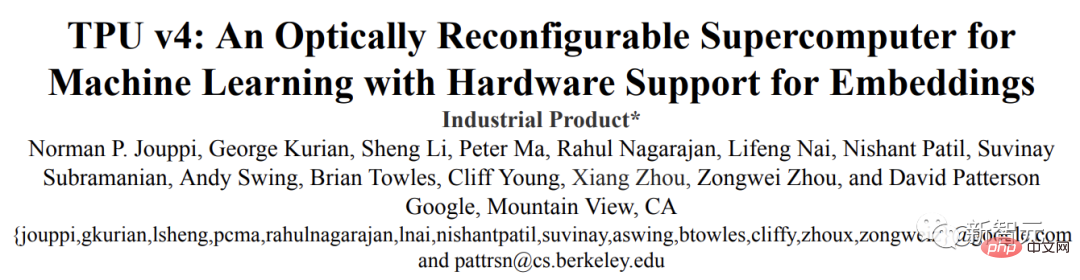

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

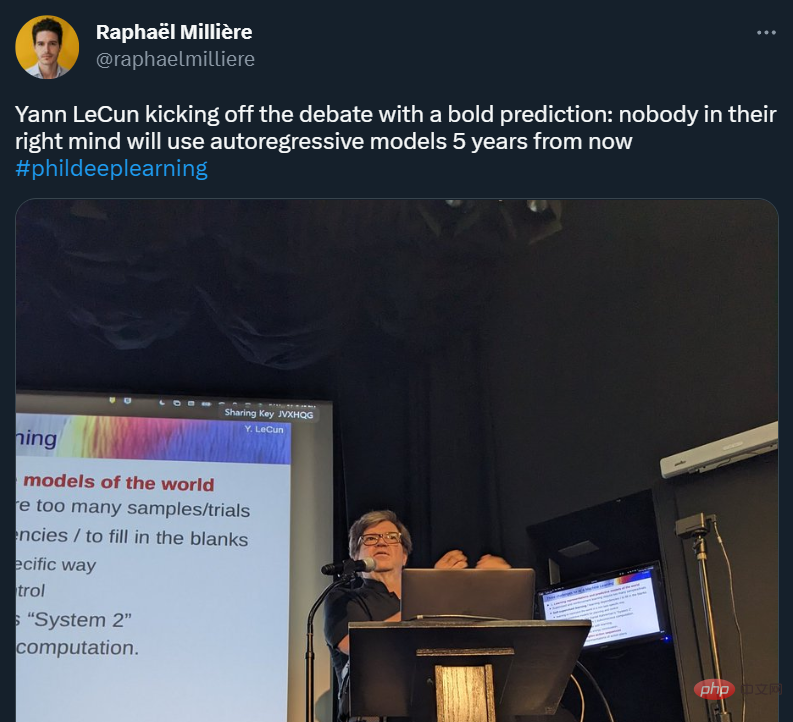

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.