Deep Learning GPU Selection Guide: Which graphics card is worthy of my alchemy furnace?

As we all know, for deep learning and neural network tasks it is better to use a GPU than a CPU: when it comes to neural networks, even a relatively low-end GPU will outperform a CPU.

Deep learning is a computationally demanding field, and to some extent the choice of GPU fundamentally determines your deep learning experience.

But here comes the problem: choosing the right GPU is itself a real headache.

How do you avoid the pitfalls and make a cost-effective choice?

Tim Dettmers, a well-known hardware blogger who received PhD offers from Stanford, UCL, CMU, NYU, and UW and is currently pursuing a PhD at the University of Washington, has written a 10,000-word article on what kind of GPU deep learning needs. Drawing on his own experience, he concludes with GPU recommendations for the DL field.

Tim Dettmers's research focuses on representation learning and hardware optimization for deep learning. The website he created is also well known in the deep learning and computer hardware communities.

All the GPUs Tim Dettmers recommends in this article are from NVIDIA. He evidently believes AMD doesn't even merit a mention when it comes to machine learning.

The link to the original article is given below.

Original link: https://timdettmers.com/2023/01/16/which-gpu-for-deep-learning/#GPU_Deep_Learning_Performance_per_Dollar

Advantages and Disadvantages of RTX 40 and 30 Series

Compared with the Turing-architecture RTX 20 series, the newer Ampere-architecture RTX 30 series has additional advantages, such as sparse network training and inference. Other features, such as the new data types, should be seen mainly as ease-of-use features: they provide the same performance boost as Turing, but without requiring any extra programming.

The Ada RTX 40 series brings even more advances, such as the Tensor Memory Accelerator (TMA) and 8-bit floating point (FP8) operations. The RTX 40 series has power and temperature issues similar to those of the RTX 30. The RTX 40's melting power connector problem can easily be avoided by connecting the power cable correctly.

Sparse network training

Ampere supports automatic sparse matrix multiplication of fine-grained structured sparsity at dense speed. How does this work? Take a weight matrix and slice it into pieces of 4 elements. Now imagine that 2 of these 4 elements are zero. Figure 1 shows what this looks like.

Figure 1: Structures supported by the sparse matrix multiplication function in Ampere architecture GPU
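For readers who want to see the 2:4 pattern in code, here is a minimal NumPy sketch that prunes a weight matrix so that each group of 4 consecutive elements in a row keeps only its 2 largest-magnitude values. Selecting by magnitude and the name `prune_2_4` are our own assumptions for illustration; this is not NVIDIA's pruning tooling.

```python
import numpy as np

def prune_2_4(w: np.ndarray) -> np.ndarray:
    """Zero the 2 smallest-magnitude entries in every group of 4 along each row.

    Assumes w is 2-D and its number of columns is a multiple of 4.
    """
    rows, cols = w.shape
    if cols % 4 != 0:
        raise ValueError("number of columns must be a multiple of 4")
    groups = w.reshape(rows, cols // 4, 4)
    # Positions of the 2 smallest |values| inside each group of 4
    drop = np.argsort(np.abs(groups), axis=-1)[..., :2]
    mask = np.ones(groups.shape, dtype=bool)
    np.put_along_axis(mask, drop, False, axis=-1)
    return (groups * mask).reshape(rows, cols)

rng = np.random.default_rng(0)
w = rng.standard_normal((4, 8)).astype(np.float32)
w_sparse = prune_2_4(w)
# Count the non-zeros per group of 4: each entry should be 2
print((w_sparse.reshape(4, -1, 4) != 0).sum(axis=-1))
```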

When you multiply this sparse weight matrix with some dense input, Ampere's sparse matrix Tensor Core feature automatically compresses the sparse matrix into a dense representation half the size, as shown in Figure 2.

After compression, the dense compressed matrix tiles are fed into the Tensor Core, which computes a matrix multiplication of twice the usual size. This effectively yields a 2x speedup, because the bandwidth required for the matrix multiplication from shared memory is halved.

Figure 2: Sparse matrices are compressed into dense representation before matrix multiplication.
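The compression step can be emulated in NumPy as a rough sketch: each row keeps half as many values, plus a small index per value (2 bits would suffice; `uint8` is used here) recording its position inside its group of 4. The function names and storage layout are hypothetical and do not reproduce the actual Tensor Core metadata format; the point is only that the half-size representation yields the same product as the dense matrix.

```python
import numpy as np

def compress_2_4(w):
    """Pack a 2:4-sparse matrix into half-width values plus in-group positions.

    Assumes every group of 4 along a row has at most 2 non-zeros.
    """
    rows, cols = w.shape
    groups = w.reshape(rows, cols // 4, 4)
    # A stable sort on "is zero" puts the non-zeros first, in their original order
    idx = np.argsort(groups == 0, axis=-1, kind="stable")[..., :2]
    values = np.take_along_axis(groups, idx, axis=-1)
    return values.reshape(rows, cols // 2), idx.reshape(rows, cols // 2).astype(np.uint8)

def sparse_matmul(values, idx, x):
    """Compute W @ x from the compressed form of W."""
    rows, half = values.shape
    # Column in the original W = 4 * group number + position inside the group
    group = np.arange(half) // 2
    cols = 4 * group[None, :] + idx
    # Gather only the rows of x that the kept weights need, then reduce
    return np.einsum("rk,rkn->rn", values, x[cols])

rng = np.random.default_rng(0)
w = rng.standard_normal((4, 8))
w[:, 1::2] = 0  # zero every other column: a valid 2:4 pattern
x = rng.standard_normal((8, 3))
values, idx = compress_2_4(w)
print(values.shape)                                       # (4, 4): half the width of w
print(np.allclose(sparse_matmul(values, idx, x), w @ x))  # True
```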

I work on sparse network training in my research, and I also wrote a blog post about sparse training. One criticism of my work was: "You reduce the FLOPS required by the network, but don't produce a speedup because GPUs can't do fast sparse matrix multiplications".

With the sparse matrix multiplication capability added to Tensor Cores, my algorithm and other sparse training algorithms can now actually deliver up to a 2x speedup during training.

The sparse training algorithm I developed has three stages: (1) determine the importance of each layer; (2) remove the least important weights; (3) grow new weights in proportion to the importance of each layer.
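The three stages can be sketched in NumPy as follows. This is a simplified illustration, not the author's published code: measuring layer importance by the mean gradient magnitude of the active weights, pruning by smallest weight magnitude, and regrowing where gradients are largest are all our assumptions, and `prune_and_regrow` is a hypothetical name.

```python
import numpy as np

def prune_and_regrow(weights, grads, masks, prune_rate=0.2):
    """One illustrative prune/regrow step over a list of layers (modified in place).

    weights, grads: lists of float arrays; masks: matching lists of bool arrays.
    Assumptions: importance = mean |grad| over active weights; prune smallest |w|;
    regrow where |grad| is largest.
    """
    # (1) Importance of each layer, normalized to sum to 1
    importance = np.array([np.abs(g[m]).mean() for g, m in zip(grads, masks)])
    share = importance / importance.sum()

    # (2) Remove the prune_rate fraction of active weights with the smallest magnitude
    removed = 0
    for w, m in zip(weights, masks):
        active = np.flatnonzero(m)
        k = int(prune_rate * active.size)
        drop = active[np.argsort(np.abs(w.flat[active]))[:k]]
        m.flat[drop] = False
        w.flat[drop] = 0.0
        removed += k

    # (3) Regrow the removed budget, split across layers in proportion to importance
    for g, m, s in zip(grads, masks, share):
        inactive = np.flatnonzero(~m)
        n_grow = min(int(round(s * removed)), inactive.size)
        grow = inactive[np.argsort(-np.abs(g.flat[inactive]))[:n_grow]]
        m.flat[grow] = True  # regrown weights start at zero
    return weights, masks

rng = np.random.default_rng(0)
shapes = [(16, 16), (16, 8)]
masks = [rng.random(s) < 0.5 for s in shapes]
weights = [rng.standard_normal(s) * m for s, m in zip(shapes, masks)]
grads = [rng.standard_normal(s) for s in shapes]
before = sum(int(m.sum()) for m in masks)
prune_and_regrow(weights, grads, masks)
after = sum(int(m.sum()) for m in masks)
print(before, after)  # roughly equal; rounding can shift the total slightly
```

Keeping the total number of active weights roughly constant is what keeps the network's FLOPS budget fixed while weights migrate toward the more important layers.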

While this feature is still experimental and training sparse networks is not yet common, having it on your GPU means you are ready for the future of sparse training.

Low Precision Computation

In my previous work, I showed that new data types can improve the stability of low-precision backpropagation.

Figure 4: Low-precision deep learning 8-bit data types. Deep learning training benefits from highly specialized data types.

The ordinary FP16 data type only supports numbers in the range [-65,504, 65,504]. If your gradient slips past this range, it overflows and your gradients explode into NaN values.
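A quick NumPy check illustrates this overflow: a value beyond the FP16 maximum becomes infinity when cast, and arithmetic on infinities then yields NaN. The gradient values below are made up for illustration.

```python
import numpy as np

# Largest finite FP16 value (converted to a Python float so it prints exactly)
print(float(np.finfo(np.float16).max))  # 65504.0

grads = np.array([1.0e4, 6.0e4, 7.0e4], dtype=np.float32)
with np.errstate(over="ignore", invalid="ignore"):
    g16 = grads.astype(np.float16)  # 7e4 exceeds the FP16 range and becomes inf
    diff = g16 - g16                # inf - inf is undefined, so it becomes NaN
print(np.isinf(g16).tolist())   # [False, False, True]
print(np.isnan(diff).tolist())  # [False, False, True]
```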

To prevent this in FP16 training, we usually perform loss scaling: before backpropagation, the loss is multiplied by a small number so that the gradients stay inside the FP16 range and do not explode.
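One way to pick that factor automatically is sketched below: start at 1, halve the scale whenever any scaled gradient overflows FP16, and divide the scale back out in FP32. This is a simplified, hypothetical take on dynamic loss scaling; real mixed-precision training also raises the scale again after successful steps, which this sketch omits. Because scaling the loss scales every gradient by the same factor, the sketch scales simulated FP32 gradients directly instead of running backpropagation.

```python
import numpy as np

def fp16_with_loss_scaling(grads, scale=1.0, min_scale=2.0 ** -24):
    """Halve the scale until the scaled gradients fit in FP16, then unscale in FP32."""
    while scale >= min_scale:
        with np.errstate(over="ignore"):
            g16 = (grads * scale).astype(np.float16)  # what FP16 backprop would produce
        if np.all(np.isfinite(g16)):
            # Unscale in FP32 before the optimizer step
            return scale, g16.astype(np.float32) / np.float32(scale)
        scale /= 2  # overflow detected: retry with a smaller scale
    raise FloatingPointError("gradients do not fit in FP16 even at the minimum scale")

grads = np.array([1e-3, 2.0, 1.5e5], dtype=np.float32)
scale, recovered = fp16_with_loss_scaling(grads)
print(scale)                                     # 0.25
print(np.allclose(recovered, grads, rtol=1e-3))  # True
```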

The Brain Float 16 format (BF16) uses more bits for the exponent, so its range of representable numbers is the same as FP32's. BF16 has less precision, that is, fewer significant digits, but gradient precision is not that important for learning.

So with BF16 you no longer need any loss scaling, and you don't have to worry about gradients blowing up quickly. We should therefore see more stable training with the BF16 format, at the cost of a slight loss of precision.

What does this mean for you? With BF16 precision, training is likely to be more stable than with FP16 precision while providing the same speedup. With TF32 precision, you get stability close to FP32 with speedups close to FP16.
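The range and precision trade-off can be made concrete by emulating BF16 and TF32 in NumPy. Both keep FP32's 8 exponent bits; BF16 keeps 7 mantissa bits and TF32 keeps 10, the same as FP16. The helper `keep_mantissa_bits` is hypothetical and truncates instead of rounding, so results may differ from real hardware in the last bit.

```python
import numpy as np

def keep_mantissa_bits(x, bits):
    """Emulate a narrower float by truncating FP32's 23 mantissa bits to `bits`.

    bits=7 approximates BF16 and bits=10 approximates TF32; the 8 exponent bits
    (and therefore the FP32 range) are untouched. Truncation rounds toward zero.
    """
    u = np.asarray(x, dtype=np.float32).view(np.uint32)
    mask = np.uint32((0xFFFFFFFF << (23 - bits)) & 0xFFFFFFFF)
    return (u & mask).view(np.float32)

x = np.array([3.0e38, 1.0e5, 1.0 + 2.0 ** -9], dtype=np.float32)
bf16 = keep_mantissa_bits(x, 7)
tf32 = keep_mantissa_bits(x, 10)
print(np.isfinite(bf16).all())           # True: 3e38 survives, though it would overflow FP16
print(float(bf16[2]), float(tf32[2]))    # 1.0 1.001953125: only TF32 keeps the 2**-9 step
```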

The good news is that to use these data types, you only need to replace FP32 with TF32 and FP16 with BF16; no code changes are required.

In general, though, these new data types can be considered lazy data types, because with some extra programming effort (proper loss scaling, initialization, normalization, using Apex) you could have obtained all of their benefits with the old data types.

Thus, these data types do not provide speedups; rather, they make low-precision training easier to use.

Fan Design and GPU Temperature

While the new fan design of the RTX 30 series does a very good job of cooling the GPU, non-Founders Edition GPUs with different fan designs may run into additional problems.

If your GPU gets hotter than 80°C, it will throttle itself, reducing its compute speed and power. The solution to this problem is to use PCIe extenders to create space between the GPUs.

Spreading the GPUs out with PCIe extenders is very effective for cooling; other PhD students at the University of Washington and I have used this setup with great success. It doesn't look pretty, but it keeps your GPUs cool!

The system shown below has been running for 4 years without any problems. This setup also helps if you don't have enough space to fit all the GPUs in the PCIe slots.

Figure 5: A 4-graphics card system with PCIE expansion ports looks like a mess, but the heat dissipation efficiency is very high.
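To notice throttling before it costs you speed, you can poll GPU temperatures. The sketch below uses `nvidia-smi`'s `--query-gpu` CSV interface (`nvidia-smi --help-query-gpu` lists the available fields) and flags GPUs above 80°C. The helper names are hypothetical, and the sample output string is made up so the parser can be tried on a machine without a GPU.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,temperature.gpu",
         "--format=csv,noheader,nounits"]

def hot_gpus(csv_text, limit_c=80):
    """Return the indices of GPUs whose temperature is above limit_c (°C)."""
    hot = []
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        if int(temp) > limit_c:
            hot.append(int(index))
    return hot

def read_gpu_temps():
    """Run nvidia-smi (requires an NVIDIA driver) and return its CSV output."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout

# Hypothetical sample output for a 4-GPU machine
sample = "0, 71\n1, 83\n2, 79\n3, 86\n"
print(hot_gpus(sample))  # [1, 3]
```

On a real machine, `hot_gpus(read_gpu_temps())` would report which cards are running hot enough to throttle.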

Solve the power limit problem gracefully

You can set a power limit on your GPU. For example, you can programmatically set an RTX 3090's power limit to 300W instead of its standard 350W. In a 4-GPU system, this saves 200W, which may be just enough to make a 4x RTX 3090 system feasible with a 1600W PSU.

This also helps keep the GPUs cool. Setting a power limit therefore addresses both main problems of a 4x RTX 3080 or 4x RTX 3090 setup at once: cooling and power. For a 4x setup you still need GPUs with effective cooling fans, but the power issue is solved.
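Setting the limit is done with `nvidia-smi -i <index> -pl <watts>`; this needs root privileges, and the allowed range depends on the card. The sketch below only builds and prints the commands by default; pass `dry_run=False` to execute them. The 350W and 300W figures are the RTX 3090 numbers from the text, and the helper names are hypothetical.

```python
import subprocess

def power_limit_commands(num_gpus, watts):
    """Build one `nvidia-smi -pl` command per GPU (applying them needs root)."""
    return [["nvidia-smi", "-i", str(i), "-pl", str(watts)] for i in range(num_gpus)]

def run_commands(commands, dry_run=True):
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)

stock_w, limited_w, gpus = 350, 300, 4  # RTX 3090 figures from the text
print(gpus * (stock_w - limited_w))     # 200 W saved across the system
run_commands(power_limit_commands(gpus, limited_w))
```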

Figure 6: Reducing the power limit has a slight cooling effect. Lowering the power limit of the RTX 2080 Ti by 50-60W results in slightly lower temperatures and quieter fan operation

You may ask, "Won't this slow down the GPU?" Yes, it will, but the question is by how much.

I benchmarked the 4x RTX 2080 Ti system shown in Figure 5 under different power limits, timing 500 mini-batches of BERT Large inference (excluding the softmax layer). I chose BERT Large inference because it puts the most stress on the GPU.

Figure 7: Measured speed drop at a given power limit on RTX 2080 Ti

We can see that setting a power limit does not seriously affect performance. Lowering the power limit by 50W reduces performance by only 7%.

RTX 4090 connector fire problem

There is a misconception that the RTX 4090 power cable catches fire because it is bent too much. In fact, bending was the cause for only 0.1% of users; the main problem is that the cable was not plugged in correctly.

Therefore, it is completely safe to use the RTX 4090 if you follow the installation instructions below.

1. If you are using an old cable or an old GPU, make sure the contacts are free of debris/dust.

2. Plug the power connector into the socket until you hear a click. This is the most important part.

3. Test the fit by wiggling the cable from left to right. The cable should not move.

4. Visually check the contact with the socket: there should be no gap between the cable and the socket.

8-bit floating point support in H100 and RTX 40

Support for 8-bit floating point (FP8) is a huge advantage of the RTX 40 series and H100 GPUs.

With 8-bit inputs, you can load the data for matrix multiplication twice as fast and store twice as many matrix elements in the cache. The caches of the Ada and Hopper architectures are very large, and with FP8 Tensor Cores the RTX 4090 reaches 0.66 PFLOPS of compute.

This is more than the entire computing power of the world's fastest supercomputer in 2007. Four RTX 4090s with FP8 compute are comparable to the world's fastest supercomputer in 2010.

As can be seen, the best 8-bit baseline fails to deliver good zero-shot performance. The method I developed, LLM.int8(), can perform Int8 matrix multiplication with the same results as the 16-bit baseline.
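The text only names LLM.int8(), so here is a heavily simplified NumPy sketch of its two ingredients as described in the LLM.int8() paper: vector-wise absmax quantization to Int8, and a mixed-precision decomposition that keeps large-magnitude "outlier" feature dimensions (threshold 6.0) in higher precision. The function names are hypothetical, and this is not the bitsandbytes implementation.

```python
import numpy as np

def absmax_int8(a, axis):
    """Symmetric absmax quantization to int8 along `axis`; returns (q, scale)."""
    scale = np.abs(a).max(axis=axis, keepdims=True, initial=0.0) / 127.0
    scale = np.where(scale == 0, 1.0, scale)  # avoid division by zero
    q = np.clip(np.round(a / scale), -127, 127).astype(np.int8)
    return q, scale

def int8_matmul_with_outliers(x, w, threshold=6.0):
    """Approximate x @ w: outlier feature columns of x stay in float, the rest use int8."""
    outlier = np.abs(x).max(axis=0) >= threshold  # feature dims with large magnitudes
    # Higher-precision part: outlier columns of x and the matching rows of w
    out = x[:, outlier] @ w[outlier, :]
    # Int8 part: row-wise scales for x, column-wise scales for w
    xq, sx = absmax_int8(x[:, ~outlier], axis=1)
    wq, sw = absmax_int8(w[~outlier, :], axis=0)
    acc = xq.astype(np.int32) @ wq.astype(np.int32)  # int32 accumulation
    return out + acc * sx * sw  # dequantize with the outer product of the scales

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 64)).astype(np.float32)
x[:, 3] *= 20.0  # inject one large-magnitude feature dimension
w = rng.standard_normal((64, 16)).astype(np.float32) * 0.1
ref = x @ w
approx = int8_matmul_with_outliers(x, w)
print(np.abs(approx - ref).max() / np.abs(ref).max())  # small relative error
```

Pulling the outlier column out matters because otherwise its magnitude would set the Int8 scale for every row of `x` and wash out the precision of all the other features.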

But Int8 is already supported by the RTX 30/A100/Ampere generation of GPUs, so why is FP8 another big upgrade in the RTX 40? The FP8 data type is much more stable than Int8 and is easy to use in functions such as layer norm or other non-linear functions, which is difficult to do with integer data types.

This will make its use in training and inference very simple and straightforward. I think this will make FP8 training and inference relatively commonplace in a few months.
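To see why FP8 behaves like a float rather than an integer, the sketch below decodes all 256 bit patterns of the E4M3 variant of FP8 (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits), following the FP8 formats proposed by NVIDIA, Arm, and Intel. The decoder is our own illustration; the other variant, E5M2, trades a mantissa bit for more exponent range and is not shown.

```python
import numpy as np

def decode_e4m3(byte):
    """Decode one FP8 E4M3 byte (1 sign, 4 exponent, 3 mantissa bits, bias 7)."""
    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> 3) & 0xF
    man = byte & 0x7
    if exp == 0xF and man == 0x7:
        return float("nan")                   # E4M3 has NaN but no infinities
    if exp == 0:
        return sign * 2.0 ** -6 * (man / 8)   # subnormal numbers
    return sign * 2.0 ** (exp - 7) * (1 + man / 8)

values = np.array([decode_e4m3(b) for b in range(256)])
finite = values[np.isfinite(values)]
positive = finite[finite > 0]
print(finite.max(), positive.min())  # 448.0 0.001953125
# For comparison, Int8 covers the integers -128..127 with a uniform step of 1,
# while E4M3's step size grows with the magnitude of the value.
```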

Below you can see a relevant main result from this paper on Float vs Integer data types. Bit for bit, the FP4 data type retains more information than the Int4 data type, which improves the average LLM zero-shot accuracy across the 4 tasks.

GPU Deep Learning Performance Ranking

Let's take a look at the raw performance ranking of GPUs and see which one comes out on top.

We can see a huge gap between the 8-bit performance of the H100 GPU and older cards optimized for 16-bit performance.

The figure above shows the raw relative performance of the GPUs. For example, for 8-bit inference, the RTX 4090 delivers about 0.33 times the performance of the H100 SXM.

In other words, the H100 SXM is about three times faster than the RTX 4090 at 8-bit inference.

For this data, he did not model 8-bit compute for older GPUs, because 8-bit inference and training are much more efficient on Ada/Hopper GPUs, and the Tensor Memory Accelerator (TMA) saves a lot of registers, which is particularly helpful for 8-bit matrix multiplication.

Ada/Hopper also have FP8 support, which makes 8-bit training in particular more efficient. On Hopper/Ada, 8-bit training performance is likely to be 3-4 times that of 16-bit training.

On older GPUs, Int8 inference performance is close to 16-bit inference performance.

How much computing power can you buy per dollar?

Then comes the problem: the GPUs perform great, but I can't afford them...

For those on a tight budget, the chart below is his performance-per-dollar ranking, based on each GPU's price and performance statistics; it reflects how cost-effective each GPU is.

Choosing a GPU that can handle your deep learning tasks within your budget can be broken down into the following steps:

- First determine how much GPU memory you need (at least 12GB for image generation, at least 24GB for working with Transformers);

- Decide between 8-bit and 16-bit: use 16-bit if you can, since 8-bit can still be difficult to handle in complex coding tasks;

- Using the metrics in the chart above, find the GPU with the highest relative performance per dollar.
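The three steps amount to a filter followed by a sort, as in this minimal sketch. The card names, memory sizes, performance scores, and prices are placeholders, NOT real benchmark or price data; in practice you would read the relative performance for your chosen precision (8-bit or 16-bit) off the chart above and use current prices.

```python
from dataclasses import dataclass

@dataclass
class Gpu:
    name: str
    memory_gb: int
    rel_perf: float   # relative performance at your chosen precision
    price_usd: float

def pick_gpus(gpus, min_memory_gb):
    """Step 1: filter by memory. Step 3: rank by relative performance per dollar."""
    fits = [g for g in gpus if g.memory_gb >= min_memory_gb]
    return sorted(fits, key=lambda g: g.rel_perf / g.price_usd, reverse=True)

# Placeholder entries for illustration only
candidates = [
    Gpu("card-A", 12, 0.50, 800),
    Gpu("card-B", 24, 0.80, 1600),
    Gpu("card-C", 24, 1.00, 1800),
]
for g in pick_gpus(candidates, min_memory_gb=24):  # e.g. Transformer work
    print(g.name, round(g.rel_perf / g.price_usd * 1000, 3))
```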

We can see that the RTX 4070 Ti is the most cost-effective for 8-bit and 16-bit inference, while the RTX 3080 is the most cost-effective for 16-bit training.

Although these GPUs are the most cost-effective, their memory is a weakness: 10GB and 12GB of memory may not meet every need.

Still, they may be ideal GPUs for newcomers to deep learning.

Some of these GPUs are great for Kaggle competitions. To do well in Kaggle competitions, how you work matters more than model size, so many smaller GPUs are well suited.

Kaggle is known as the world's largest gathering place for data scientists; it attracts experts and is also very friendly to newcomers.

For academic research and server use, the best GPU seems to be the A6000 Ada.

The H100 SXM is also very cost-effective, with large memory and strong performance.

Speaking from personal experience, if I were building a small cluster for a corporate or academic lab, I would recommend 66-80% A6000 GPUs and 20-33% H100 SXM GPUs.

Comprehensive Recommendation

Having said all that, we finally come to the recommendations.

Tim Dettmers created a "GPU purchase flowchart". If you have enough budget, you can go for a higher-end configuration; if not, refer to the cost-effective choices.

The first thing to emphasize here is: no matter which GPU you choose, first make sure that its memory can meet your needs. To do this, you have to ask yourself a few questions:

What do I want to do with the GPU? Compete in Kaggle, learn deep learning, do CV/NLP research, or work on small hobby projects?

If you have enough budget, you can check out the benchmarks above and choose the best GPU for you.

You can also estimate how much GPU memory you need by running your problem on vast.ai or Lambda Cloud for a while, to see whether a given GPU meets your needs.
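Before renting anything, a back-of-envelope estimate can narrow the choice. The sketch below uses a common rule of thumb (our assumption, not from the original article): mixed-precision training with Adam needs about 16 bytes per parameter for weights, gradients, FP32 master weights, and the two Adam moments, and inference needs about 2 bytes per parameter in 16-bit or 1 byte in 8-bit. Activations and framework overhead come on top, which is exactly what a short cloud run will reveal.

```python
def training_memory_gb(num_params, bytes_per_param=16):
    """Rough lower bound for mixed-precision training with Adam.

    16 bytes/param = FP16 weights (2) + FP16 grads (2) + FP32 master weights (4)
    + Adam first and second moments (4 + 4). Activations are not included.
    """
    return num_params * bytes_per_param / 1e9

def inference_memory_gb(num_params, bytes_per_param=2):
    """Weights only: 2 bytes/param in 16-bit, 1 byte/param in 8-bit."""
    return num_params * bytes_per_param / 1e9

print(training_memory_gb(350e6))    # 5.6 GB for a 350M-parameter model, before activations
print(inference_memory_gb(7e9, 1))  # 7.0 GB for 7B parameters in 8-bit
```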

If you only need a GPU occasionally (a few hours every few days) and don't need to download and process large datasets, vast.ai or Lambda Cloud will also work well.

However, if you use the GPU every day for a month at high intensity (12 hours a day), a cloud GPU is usually not a good choice.
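The reasoning behind this rule of thumb is a simple break-even calculation, sketched below. The prices are placeholders, not quotes, and the model deliberately ignores resale value, the rest of the machine, and any electricity cost beyond the optional `power_cost_per_day` parameter.

```python
def breakeven_days(gpu_price, cloud_rate_per_hour, hours_per_day, power_cost_per_day=0.0):
    """Days of use after which buying a GPU becomes cheaper than renting one."""
    daily_savings = cloud_rate_per_hour * hours_per_day - power_cost_per_day
    if daily_savings <= 0:
        return float("inf")  # at this usage level, renting never costs more
    return gpu_price / daily_savings

# Placeholder numbers only: a $1,600 card vs. a $0.50/hour cloud instance
print(breakeven_days(1600, 0.50, hours_per_day=12))  # ~267 days of heavy use
print(breakeven_days(1600, 0.50, hours_per_day=1))   # 3200.0 days of occasional use
```

With heavy daily use the purchase pays for itself an order of magnitude sooner, which is why the cloud mainly suits occasional workloads.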

The above is the detailed content of Deep Learning GPU Selection Guide: Which graphics card is worthy of my alchemy furnace?. For more information, please follow other related articles on the PHP Chinese website!

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM人工智能Artificial Intelligence(AI)、机器学习Machine Learning(ML)和深度学习Deep Learning(DL)通常可以互换使用。但是,它们并不完全相同。人工智能是最广泛的概念,它赋予机器模仿人类行为的能力。机器学习是将人工智能应用到系统或机器中,帮助其自我学习和不断改进。最后,深度学习使用复杂的算法和深度神经网络来重复训练特定的模型或模式。让我们看看每个术语的演变和历程,以更好地理解人工智能、机器学习和深度学习实际指的是什么。人工智能自过去 70 多

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM众所周知,在处理深度学习和神经网络任务时,最好使用GPU而不是CPU来处理,因为在神经网络方面,即使是一个比较低端的GPU,性能也会胜过CPU。深度学习是一个对计算有着大量需求的领域,从一定程度上来说,GPU的选择将从根本上决定深度学习的体验。但问题来了,如何选购合适的GPU也是件头疼烧脑的事。怎么避免踩雷,如何做出性价比高的选择?曾经拿到过斯坦福、UCL、CMU、NYU、UW 博士 offer、目前在华盛顿大学读博的知名评测博主Tim Dettmers就针对深度学习领域需要怎样的GPU,结合自

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM一. 背景介绍在字节跳动,基于深度学习的应用遍地开花,工程师关注模型效果的同时也需要关注线上服务一致性和性能,早期这通常需要算法专家和工程专家分工合作并紧密配合来完成,这种模式存在比较高的 diff 排查验证等成本。随着 PyTorch/TensorFlow 框架的流行,深度学习模型训练和在线推理完成了统一,开发者仅需要关注具体算法逻辑,调用框架的 Python API 完成训练验证过程即可,之后模型可以很方便的序列化导出,并由统一的高性能 C++ 引擎完成推理工作。提升了开发者训练到部署的体验

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM深度学习 (DL) 已成为计算机科学中最具影响力的领域之一,直接影响着当今人类生活和社会。与历史上所有其他技术创新一样,深度学习也被用于一些违法的行为。Deepfakes 就是这样一种深度学习应用,在过去的几年里已经进行了数百项研究,发明和优化各种使用 AI 的 Deepfake 检测,本文主要就是讨论如何对 Deepfake 进行检测。为了应对Deepfake,已经开发出了深度学习方法以及机器学习(非深度学习)方法来检测 。深度学习模型需要考虑大量参数,因此需要大量数据来训练此类模型。这正是

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM导读深度学习已在面向自然语言处理等领域的实际业务场景中广泛落地,对它的推理性能优化成为了部署环节中重要的一环。推理性能的提升:一方面,可以充分发挥部署硬件的能力,降低用户响应时间,同时节省成本;另一方面,可以在保持响应时间不变的前提下,使用结构更为复杂的深度学习模型,进而提升业务精度指标。本文针对地址标准化服务中的深度学习模型开展了推理性能优化工作。通过高性能算子、量化、编译优化等优化手段,在精度指标不降低的前提下,AI模型的模型端到端推理速度最高可获得了4.11倍的提升。1. 模型推理性能优化

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PM

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PMPart 01 概述 在实时音视频通信场景,麦克风采集用户语音的同时会采集大量环境噪声,传统降噪算法仅对平稳噪声(如电扇风声、白噪声、电路底噪等)有一定效果,对非平稳的瞬态噪声(如餐厅嘈杂噪声、地铁环境噪声、家庭厨房噪声等)降噪效果较差,严重影响用户的通话体验。针对泛家庭、办公等复杂场景中的上百种非平稳噪声问题,融合通信系统部生态赋能团队自主研发基于GRU模型的AI音频降噪技术,并通过算法和工程优化,将降噪模型尺寸从2.4MB压缩至82KB,运行内存降低约65%;计算复杂度从约186Mflop

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM今天的主角,是一对AI界相爱相杀的老冤家:Yann LeCun和Gary Marcus在正式讲述这一次的「新仇」之前,我们先来回顾一下,两位大神的「旧恨」。LeCun与Marcus之争Facebook首席人工智能科学家和纽约大学教授,2018年图灵奖(Turing Award)得主杨立昆(Yann LeCun)在NOEMA杂志发表文章,回应此前Gary Marcus对AI与深度学习的评论。此前,Marcus在杂志Nautilus中发文,称深度学习已经「无法前进」Marcus此人,属于是看热闹的不

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM

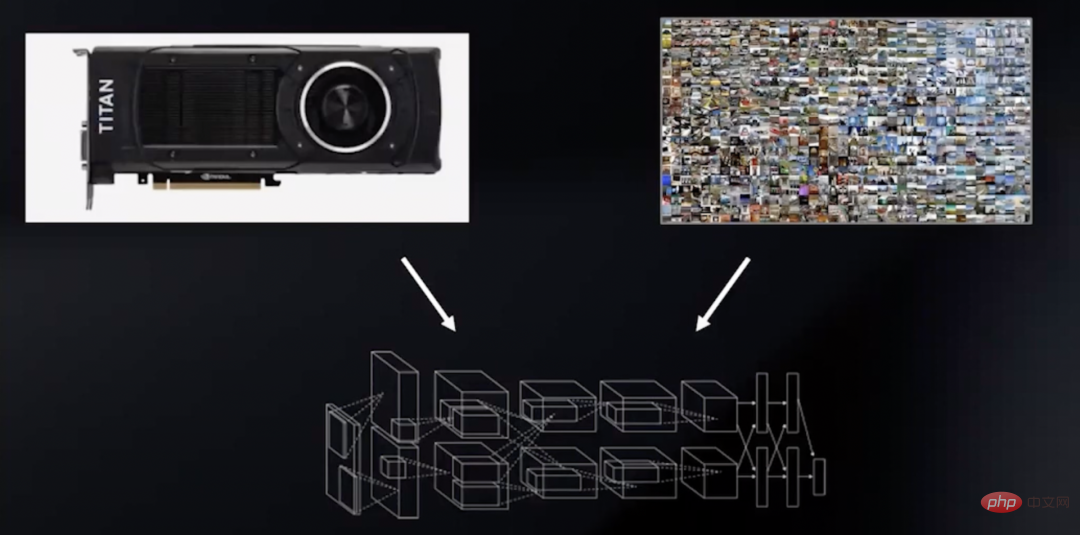

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM过去十年是深度学习的“黄金十年”,它彻底改变了人类的工作和娱乐方式,并且广泛应用到医疗、教育、产品设计等各行各业,而这一切离不开计算硬件的进步,特别是GPU的革新。 深度学习技术的成功实现取决于三大要素:第一是算法。20世纪80年代甚至更早就提出了大多数深度学习算法如深度神经网络、卷积神经网络、反向传播算法和随机梯度下降等。 第二是数据集。训练神经网络的数据集必须足够大,才能使神经网络的性能优于其他技术。直至21世纪初,诸如Pascal和ImageNet等大数据集才得以现世。 第三是硬件。只有
