This article shares Datacake's experience applying AI algorithms to big data governance. It is divided into five parts. The first clarifies the relationship between big data and AI: big data not only serves AI but can also use AI to optimize its own services, so the two support and depend on each other. The second introduces the use of an AI model to comprehensively evaluate the health of big data tasks, providing a quantitative basis for subsequent data governance. The third introduces the use of an AI model to intelligently recommend Spark task runtime parameters, with the goal of improving cloud resource utilization. The fourth introduces model-based recommendation of the execution engine in SQL query scenarios. The fifth looks ahead to applications of AI across the entire big data life cycle.

1. Big Data and AI

The common view is that cloud computing collects and stores massive amounts of data to form big data, and that mining and learning from big data then produces AI models. This view tacitly assumes that big data serves AI, but it overlooks the fact that AI algorithms can also feed back into big data. The relationship between them is two-way: they support and depend on each other.

The life cycle of big data can be divided into six stages. Each stage faces its own problems, and appropriate use of AI algorithms can help solve them.

Data collection: This stage is concerned with the quality, frequency, and security of collection, for example whether the collected data is complete, whether collection is too fast or too slow, and whether the data has been desensitized or encrypted. AI can help here, for example by evaluating whether log collection is reasonable by comparison with similar applications, or by using anomaly detection algorithms to detect sudden increases or decreases in data volume.

Data transmission: This stage is concerned with the availability, integrity, and security of data. AI algorithms can be used for fault diagnosis and intrusion detection.

Data storage: This stage is concerned with whether the storage structure is reasonable, whether resource usage is low enough, and whether the data is secure enough. AI algorithms can also be used here for evaluation and optimization.

Data processing: This is the stage where optimization has the most visible impact and payoff. The core problem is improving data processing efficiency while reducing resource consumption, and AI can help from several angles.

Data exchange: Enterprises cooperate with each other more and more, which raises data security issues. Algorithms can be applied here as well; for example, federated learning, which is currently popular, can help share data better and more securely.

Data destruction: Data cannot be kept forever without deletion, so it is necessary to consider when to delete it and whether doing so carries risk. Building on business rules, AI algorithms can help determine the timing and the associated impact of deleting data.
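As an illustration of the anomaly detection mentioned for the data collection stage, the following is a minimal sketch that flags sudden jumps or drops in daily data volume. It assumes a simple trailing-window z-score rule; the function name, window length, and threshold are hypothetical choices, not the method used by the platform described here.

```python
from statistics import mean, stdev


def volume_anomalies(daily_counts, window=7, threshold=3.0):
    """Return the indices of days whose volume deviates from the mean of the
    preceding `window` days by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if daily_counts[i] != mu:
                flagged.append(i)
            continue
        if abs(daily_counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up daily record counts with one spike (index 7) and one drop (index 15).
counts = [100, 102, 98, 101, 99, 103, 100, 250,
          101, 99, 100, 102, 98, 101, 100, 20]
print(volume_anomalies(counts))  # [7, 15]
```

A trailing window keeps the rule cheap, but a spike inflates the window's spread for the following days, so a robust statistic such as the median may be preferable in practice.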

Overall, data life cycle management has three major goals: high efficiency, low cost, and security. In the past, experts formulated rules and strategies based on experience, which had obvious drawbacks such as high cost and low efficiency. Appropriate use of AI algorithms can avoid these drawbacks and feed back into the construction of basic big data services. At Qingzi Technology, several application scenarios have already been implemented. The first is the assessment of big data task health.

2. Big data task health assessment

Thousands of tasks run on the big data platform every day. However, many tasks stop at producing correct numbers; no attention is paid to their running time or resource consumption, so many tasks are inefficient and waste resources.

Even when data developers start to pay attention to task health, it is hard to assess accurately whether a task is healthy. There are many task-related indicators, such as failure rate, running time, and resource consumption, and tasks naturally differ in complexity and in the volume of data they process, so simply using the absolute value of a single indicator as the evaluation criterion is clearly unreasonable.

Without a quantified measure of task health, it is hard to determine which tasks are unhealthy and need governance, let alone where the problem lies and where to start. Even after governance, it is unclear how effective it was, and one indicator may improve while others deteriorate.

Requirements: Faced with these problems, we urgently need a quantitative indicator that accurately reflects the overall health of a task. Formulating rules manually is inefficient and incomplete, so we turned to machine learning models. The goal is for the model to give each task a quantitative score and its position in the global distribution, and to identify the task's main problems and possible solutions.

To meet this requirement, the functional module displays key information for all tasks under an owner's name on the management interface, such as score, task cost, CPU utilization, and memory utilization. Task health is then clear at a glance, which makes it easier for the task owner to carry out governance later.

For the scoring function itself, we treat the problem as classification. Intuitively, task scoring looks like a regression problem whose output is any real number between 0 and 100. However, regression would require a sufficient number of scored samples, and manual scoring is expensive and unreliable.

We therefore convert the problem into a classification problem; the class probability given by the classification model can then be mapped to a real-valued score. We divide tasks into two classes, good tasks (1) and bad tasks (0), labeled by big data engineers. A good task is usually one that takes less time and consumes fewer resources than others of the same volume and complexity.

The model training process is:

The first step is sample preparation. Our samples come from historical task run data. The sample features include running time, resources used, whether execution failed, and so on. The sample labels are assigned by big data engineers, who mark each task as good or bad based on rules or experience. The model can then be trained. We tried LR, GBDT, XGBoost, and other models in turn; both theory and practice showed that XGBoost gave better classification results. The model outputs the probability that a task is a "good task"; the higher the probability, the higher the final mapped task score.

After training, 19 features were selected from the nearly 50 original features. These 19 features are basically enough to determine whether a task is a good task. For example, tasks that have failed many times and have low resource utilization mostly do not score high, which is basically consistent with human intuition.

After the model scores the tasks, those scoring between 0 and 30 are unhealthy and urgently need governance; those between 30 and 60 have acceptable health; and those above 60 are relatively healthy and only need to maintain the status quo. With this quantitative indicator, task owners can be guided to actively govern their tasks, achieving the goal of reducing costs and increasing efficiency.
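The mapping from class probability to score and health band can be sketched as follows. The linear mapping (score = 100 × probability) is an assumption made for illustration, since the exact mapping is not stated; the function names are hypothetical, and the band boundaries follow the 30 and 60 thresholds given above.

```python
def health_score(p_good):
    """Map the classifier's probability of 'good task' onto a 0-100 score.

    Assumption: a plain linear mapping; the real mapping may differ.
    """
    if not 0.0 <= p_good <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return round(100 * p_good, 1)


def health_band(score):
    """Bucket a score using the 30 / 60 thresholds from the text."""
    if score < 30:
        return "unhealthy, needs urgent governance"
    if score <= 60:
        return "acceptable"
    return "healthy, maintain the status quo"


# Hypothetical model outputs for three tasks.
for p in (0.12, 0.5, 0.93):
    score = health_score(p)
    print(score, health_band(score))
```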

The application of the model has brought us the following benefits:

① First, the task owner can tell from the score and ranking whether a task needs governance;

② Quantitative indicators provide a basis for subsequent task management;

③ The benefits and improvements achieved after task management is completed can also be quantified through scores.

3. Spark task intelligent parameter adjustment

The second application scenario is intelligent parameter tuning for Spark tasks. A Gartner survey revealed that 70% of the cloud resources consumed by cloud users are needlessly wasted. When applying for cloud resources, many people request more than they need in order to make sure their tasks succeed, which causes unnecessary waste. Many others use the default configuration when creating tasks, even though it is not the optimal one. Careful configuration can give very good results, ensuring both efficiency and successful execution while saving a lot of resources. However, configuring task parameters places high demands on users: besides understanding what each configuration item means, they must also consider how configuration items interact. Even with expert experience it is hard to reach the optimum, and rule-based strategies are hard to adjust dynamically.

This leads to the requirement: we want a model to intelligently recommend the optimal parameter configuration for a task, improving the task's cloud resource utilization while keeping its original running time unchanged.

For the parameter tuning function module, our design covers two cases. In the first, for tasks that are already online and have been running for some time, the model must recommend the most appropriate configuration parameters based on the task's historical runs. In the second, for tasks that have not yet gone online, the model must provide a reasonable configuration by analyzing the task itself.

The next step is to train the model. First, determine the output target of the model. There are more than 300 configurable items, and it is impossible for them all to be given by the model. After testing and research, we selected three parameters that have the greatest impact on task running performance, namely the number of cores of the executor, the total amount of memory, and the number of instances. Each configuration item has its default value and adjustable range. In fact, a parameter space is given, and the model only needs to find the optimal solution in this space.
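The three-parameter space described above can be written down explicitly. The sketch below is only illustrative: the dictionary keys and helper names are hypothetical, and the bounds are made-up values because the real adjustable ranges are not given. The Spark properties named in the comments are the standard executor settings for cores, memory, and instance count.

```python
# Hypothetical search space for the three tuned parameters.
# The bounds are illustrative; the article does not state the real ranges.
PARAM_SPACE = {
    "executor_cores": range(1, 9),        # maps to spark.executor.cores
    "executor_memory_gb": range(2, 33),   # maps to spark.executor.memory
    "executor_instances": range(1, 51),   # maps to spark.executor.instances
}


def space_size(space=PARAM_SPACE):
    """Number of distinct configurations in the discrete space."""
    size = 1
    for allowed in space.values():
        size *= len(allowed)
    return size


def clamp_to_space(config, space=PARAM_SPACE):
    """Pull each recommended value back inside its allowed range."""
    return {
        name: min(max(config[name], allowed[0]), allowed[-1])
        for name, allowed in space.items()
    }


def to_spark_conf(config):
    """Render a configuration as Spark property strings."""
    return {
        "spark.executor.cores": str(config["executor_cores"]),
        "spark.executor.memory": f"{config['executor_memory_gb']}g",
        "spark.executor.instances": str(config["executor_instances"]),
    }


raw = {"executor_cores": 16, "executor_memory_gb": 1, "executor_instances": 10}
print(space_size())                        # 12400
print(to_spark_conf(clamp_to_space(raw)))
```

Restricting the model to a small, bounded space like this is what makes both rule learning and search-based tuning tractable compared with the full set of more than 300 configurable items.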

In the training phase, there are two options. The first option is to learn empirical rules. In the early stage we used rules to recommend parameters, and the results after going online were good, so we let the model learn this set of rules first in order to go online quickly. The training samples are more than 70,000 task configurations previously computed by the rules. The sample features are the task's historical run data (such as the amount of data processed, resource usage, and running time) and some statistics (such as average and maximum consumption over the past seven days).

For the base model, we chose a multiple regression model with multiple dependent variables. Common regression models are single-output: they have many independent variables but only one dependent variable. Here we want to output three parameters, so we use a multiple regression model with multiple dependent variables, which is essentially a linear regression (LR) model.

The figure for this model shows its theoretical basis. On the left-hand side is the multi-output label, that is, the three configuration items. β holds the coefficients of each feature, and Σ is the error. Training works the same way as for single-output regression: the least squares method estimates β by minimizing the sum of squares of the elements of Σ.
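In matrix form, the multi-output least-squares fit described here can be written as below. The symbols β and Σ follow the text; the label matrix Y, the feature matrix X, the sample count n, the feature count p, and the assumption that XᵀX is invertible are introduced here for illustration.

```latex
Y = X\beta + \Sigma, \qquad
Y \in \mathbb{R}^{n \times 3},\;
X \in \mathbb{R}^{n \times p},\;
\beta \in \mathbb{R}^{p \times 3}

\hat{\beta}
  = \arg\min_{\beta} \lVert Y - X\beta \rVert_F^2
  = (X^{\top} X)^{-1} X^{\top} Y
```

The squared Frobenius norm is exactly the sum of squares of every element of Σ, so each of the three columns of β is the ordinary least-squares solution for one configuration item.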

The advantage of option one is that it learns the rules and experience quickly at relatively low cost. The disadvantage is its optimization ceiling: at best it matches the rules, and it is hard for it to exceed them.

The second option is Bayesian optimization. Its idea is similar to reinforcement learning: it searches the parameter space for the optimal configuration. The Bayesian framework is used because each attempt can build on the previous ones, so the next attempt has some prior experience and can find a better position quickly. Training takes place in the parameter space: a configuration is sampled at random and then run for verification; after the run, indicators such as utilization and cost are checked to judge whether the configuration is optimal; these steps are repeated until tuning is complete. Once the model is trained, there is also a shortcut at inference time: if a new task is sufficiently similar to a historical task, there is no need to compute a configuration again, and the previous optimal configuration can be used directly.
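The reuse step for similar tasks can be sketched as a nearest-neighbor lookup. How similarity is actually measured is not stated, so cosine similarity over task feature vectors, the 0.95 cutoff, and all names below are assumptions made for illustration; this covers only the lookup, not the Bayesian optimization itself.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def reuse_config(new_features, history, min_similarity=0.95):
    """Return the best known configuration of the most similar historical
    task, or None when nothing is similar enough and tuning must run again.

    `history` is a list of (feature_vector, best_config) pairs.
    """
    best_score, best_config = 0.0, None
    for features, config in history:
        score = cosine_similarity(new_features, features)
        if score > best_score:
            best_score, best_config = score, config
    return best_config if best_score >= min_similarity else None


# Hypothetical features: (input GB, stage count, runtime in minutes).
history = [
    ([100.0, 8.0, 30.0], {"executor_cores": 4}),
    ([5.0, 1.0, 300.0], {"executor_cores": 2}),
]
print(reuse_config([98.0, 8.5, 29.0], history))  # {'executor_cores': 4}
print(reuse_config([1.0, 50.0, 1.0], history))   # None
```

In practice the features would need to be scaled to comparable ranges before comparison, otherwise the largest-valued feature dominates the similarity.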

Experiments and practice with these two options show that they have achieved some results. For existing tasks, after applying the configuration parameters recommended by the model, more than 80% of tasks improve resource utilization by about 15%, and for some tasks utilization even doubles. However, both options have shortcomings: the regression model that learns rules has a low optimization ceiling, while the Bayesian optimization model that searches for a global optimum needs many trial runs, which makes it too costly.

The future exploration directions are as follows:

Semantic analysis: Spark semantics are rich, including different code structures and operator functions, and they are closely related to task parameter configuration and resource consumption. At present we only use a task's historical runs and ignore the Spark semantics themselves, which wastes information. The next step is to go down to the code level, analyze the operator functions contained in a Spark task, and tune at a finer granularity accordingly.

Classification tuning: Spark has many application scenarios, such as pure analysis, development, and processing. The tuning space and goals differ between scenarios, so tuning needs to be done per category.

Engineering optimization: One difficulty encountered in practice is that samples are few and testing is expensive, so relevant parties need to work together to optimize the engineering or the process.

4. Intelligent selection of SQL task execution engine

The third application scenario is intelligent selection of the execution engine for SQL query tasks.

Background:

(1) The SQL query platform is the big data product that most users interact with most and whose experience they notice most. Data analysts, R&D engineers, and product managers all write a lot of SQL every day to get the data they want;

(2) Many people do not pay attention to the underlying execution engine when running SQL tasks. Presto, for example, computes purely in memory. Its advantage is faster execution in simple query scenarios; its disadvantage is that a query fails outright if there is not enough memory. Spark, by contrast, is better suited to complex scenarios with large amounts of data: even if an out-of-memory (OOM) condition occurs, it can use disk storage to avoid task failure. Different engines therefore suit different task scenarios.

(3) SQL query effectiveness must take both execution time and resource consumption into account. It is wrong to pursue query speed excessively without regard to resource consumption, and equally wrong to hurt query efficiency in order to save resources.

(4) The industry has three main traditional approaches to engine selection: RBO, CBO, and HBO. RBO is a rule-based optimizer; its rules are hard to formulate and are updated infrequently. CBO is a cost-based optimizer; pursuing cost optimization too aggressively may cause task execution to fail. HBO is an optimizer based on historical task runs; it is limited to historical data.

In the functional module design, after the user writes an SQL statement and submits it for execution, the model automatically determines which engine to use and shows a pop-up prompt; the user ultimately decides whether to execute with the recommended engine.

The overall model solution is to recommend an execution engine based on the SQL statement itself. The SQL shows which tables and functions are used, and this information directly determines the complexity of the SQL, which in turn affects the choice of execution engine. The training samples come from historically run SQL statements, and the labels are assigned based on how those statements actually executed. For example, tasks that took a long time to execute and involved a large amount of data are labeled as suitable for Spark, and the rest as suitable for Presto. Feature extraction uses NLP techniques, namely N-grams combined with TF-IDF. The general principle is to extract phrases and measure how often they appear in statements, so that key phrases can be identified. The resulting feature vectors are very large, so we first use a linear model to select 3,000 features and then train an XGBoost model as the final prediction model.
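To make the N-gram plus TF-IDF step concrete, here is a minimal standard-library sketch. It assumes a simple identifier tokenizer, unigrams and bigrams only, and the plain unsmoothed formula tf × log(N / df); the production feature pipeline is not described at this level, so the function names and every one of these choices are illustrative.

```python
import math
import re
from collections import Counter


def sql_ngrams(sql, n_max=2):
    """Lower-case the SQL, keep identifier-like tokens, and emit all
    n-grams of length 1..n_max as space-joined strings."""
    tokens = re.findall(r"[a-z_][a-z0-9_]*", sql.lower())
    grams = []
    for n in range(1, n_max + 1):
        grams += [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return grams


def tfidf(corpus, n_max=2):
    """Return one {ngram: weight} dict per SQL statement in `corpus`."""
    docs = [Counter(sql_ngrams(sql, n_max)) for sql in corpus]
    n_docs = len(docs)
    # Document frequency: in how many statements each n-gram appears.
    doc_freq = Counter(gram for doc in docs for gram in doc)
    vectors = []
    for doc in docs:
        total = sum(doc.values())
        vectors.append({
            gram: (count / total) * math.log(n_docs / doc_freq[gram])
            for gram, count in doc.items()
        })
    return vectors


queries = [
    "SELECT id FROM users",
    "SELECT a.id, count(*) FROM orders a JOIN users b ON a.uid = b.id GROUP BY a.id",
]
simple, complex_query = tfidf(queries)
print(simple["select"])                 # 0.0, appears in every statement
print(complex_query["group by"] > 0)    # True, distinctive for the second query
```

N-grams that occur in every statement, such as `select`, get zero weight, while phrases like `group by` or `join users` that distinguish heavier queries receive positive weight, which is the kind of signal a downstream classifier can use.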

After training, the model's prediction accuracy is fairly high, roughly above 90%.

The final online application process of the model is: after the user submits SQL, the model recommends an execution engine. If it is different from the engine originally selected by the user, the language conversion module will be called to complete the conversion of the SQL statement. If the execution fails after switching the engine, we will have a failover mechanism to switch back to the user's original engine for execution to ensure successful task execution.
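The submit, convert, and fail-over flow just described can be sketched as follows. The callables `recommend`, `translate`, and `execute` are hypothetical stand-ins for the platform's model, SQL conversion module, and engine clients; none of their names or signatures come from the source.

```python
def run_with_failover(sql, user_engine, recommend, translate, execute):
    """Run `sql` on the recommended engine, falling back to the user's choice.

    recommend(sql) -> engine name
    translate(sql, source_engine, target_engine) -> converted SQL
    execute(engine, sql) -> result, raising an exception on failure
    """
    engine = recommend(sql)
    if engine != user_engine:
        try:
            converted = translate(sql, user_engine, engine)
            return execute(engine, converted)
        except Exception:
            # Sketch only: a real system would catch narrower error types
            # and log the failure before switching back.
            pass
    # Same engine recommended, or the switch failed: run the original SQL
    # on the engine the user selected.
    return execute(user_engine, sql)
```

Falling back to the untouched original statement, rather than converting the converted SQL back again, keeps the failure path as close as possible to what the user would have run without the recommendation.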

The benefit of this practice is that the model automatically selects the most suitable execution engine and completes the subsequent statement conversion, so the user does not need to learn anything extra.

In addition, the engine recommended by the model can basically maintain the original execution efficiency while reducing the failure rate, so the overall user experience improves.

Finally, the overall resource cost consumption is reduced due to the reduction in the use of unnecessary high-cost engines and the reduction in task execution failure rate.

In Parts 2 to 4, we shared three applications of AI algorithms on the big data platform. One characteristic they share is that the algorithms used are not particularly complex, yet the effect is very noticeable. This encourages us to proactively look for pain points and room for optimization in the operation of the big data platform. Once an application scenario is identified, we can try different machine learning methods to solve the problem, so that AI algorithms feed back into big data.

5. Prospects for the application of AI algorithms in big data governance

Finally, we look ahead to the application scenarios of AI algorithms in big data governance.

The three application scenarios introduced above are more concentrated in the data processing stage. In fact, echoing the relationship between AI and big data discussed in Chapter 1, AI can play a relatively good role throughout the entire data life cycle.

For example, in the data collection stage, AI can judge whether logs are reasonable; during transmission, it can perform intrusion detection; during processing, it can further reduce costs and increase efficiency; during exchange, it can help ensure data security; and at destruction, it can judge the timing and the related impact of destruction. There are many application scenarios for AI on big data platforms, and this is only an introduction. We believe the mutually supportive relationship between AI and big data will become more prominent in the future: AI-assisted big data platforms can collect and process data better, and better data quality can in turn help train better AI models, forming a virtuous cycle.

6. Question and Answer Session

Q1: What kind of rule engine is used? Is it open source?

A1: The parameter tuning rules mentioned here were formulated early on by our big data colleagues based on manual tuning experience, for example how many cores or how much memory to recommend for a task when its execution time exceeds a certain number of minutes or the amount of data it processes exceeds a certain size. This set of rules was accumulated over a long period and performed well after going online, so we used it to train our parameter recommendation model.

Q2: Is the dependent variable only the adjustment of parameters? Have you considered the impact of the performance instability of the big data platform on the calculation results?

A2: When recommending parameters, we do not only pursue low cost; otherwise the recommended resources would be too low and the task would fail. The dependent variables are indeed only the parameter adjustments, but we add extra restrictions to guard against instability. First, for the model features, we use averages over a period of time rather than the value on an isolated day. Second, we compare the parameters recommended by the model with the actual configured values; if the difference is too large, we apply a slow-up and slow-down strategy to avoid task failure caused by too large a one-time adjustment.

Q3: Are regression models and Bayesian models used at the same time?

A3: No. As mentioned, we tried two options for parameter recommendation: first the regression model that learns rules, and later the Bayesian optimization framework. They are not used at the same time. The advantage of the former is that it can quickly make use of historical experience; the latter can build on it to find a better or even optimal configuration. The two are sequential, progressive attempts rather than being used at the same time.

Q4: Is semantic analysis being introduced in order to add more features?

A4: Yes. As mentioned just now, when doing Spark parameter adjustment, the only information we use is its historical execution status, but we have not yet paid attention to the Spark task itself. Spark itself actually contains a lot of information, including various operators, stages, etc. If you don't analyze its semantics, a lot of information will be lost. So our next plan is to analyze the semantics of Spark tasks and expand more features to assist parameter calculation.

Q5: Can parameter recommendations be unreasonable and cause task anomalies or even failures? If so, how do you reduce task errors and fluctuations in such a scenario?

A5: If we relied entirely on the model, it might pursue the highest possible improvement in resource utilization. In that case the recommended parameters could be quite aggressive, for example memory suddenly shrinking from 30 GB to 5 GB. Therefore, in addition to the model recommendation, we add extra constraints, such as a limit on how many gigabytes a parameter may change in one adjustment. This is the slow-up and slow-down strategy.
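The slow-up and slow-down constraint amounts to capping the change applied in any single adjustment. A minimal sketch follows, assuming a fixed per-adjustment cap; the function name and the 8 GB cap are hypothetical, as the real limits are not stated.

```python
def limit_step(current, recommended, max_step):
    """Move from `current` toward `recommended`, changing the value by at
    most `max_step` in one adjustment."""
    delta = recommended - current
    if abs(delta) <= max_step:
        return recommended
    return current + max_step if delta > 0 else current - max_step


# The model recommends shrinking executor memory from 30 GB to 5 GB;
# with a hypothetical cap of 8 GB per adjustment, only part of it is applied.
print(limit_step(30, 5, 8))  # 22
```

Applied run after run with the same target, the memory in this example would step down through 22, 14, and 6 GB before reaching 5 GB, so each intermediate setting can be observed before shrinking further.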

Q6: There are some papers on parameter tuning at SIGMOD 2022. Did you refer to them?

A6: Intelligent task parameter tuning is still a fairly popular research direction, and teams in different fields have adopted different methods and models. Before we started, we surveyed many industry methods, including the SIGMOD 2022 papers you mentioned. After comparison and practice, we ended up trying the two options we shared. We will continue to follow the latest developments in this direction and try more methods to improve the recommendation quality.

That’s it for today’s sharing, thank you all.

The above is the detailed content of Application of AI algorithms in big data governance. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

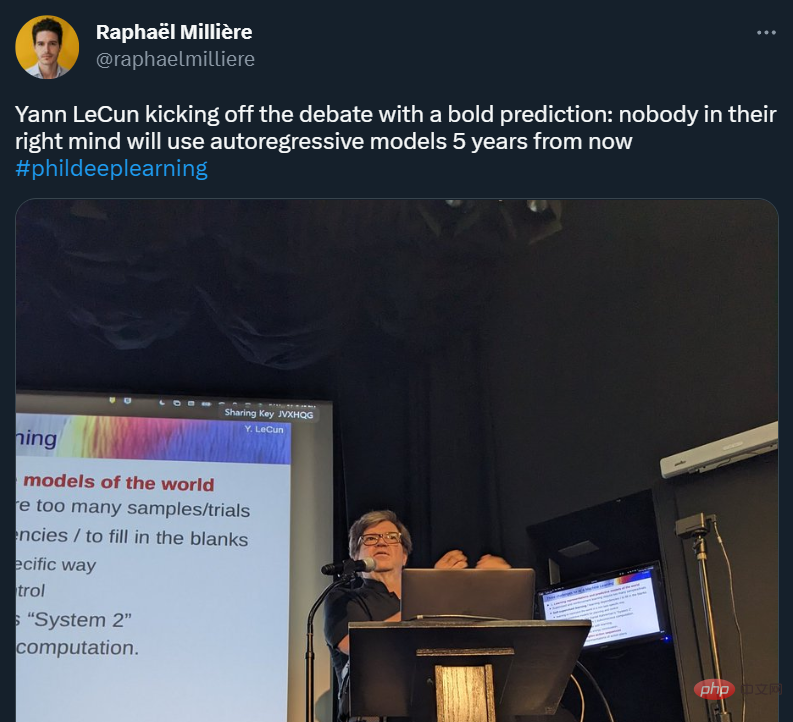

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.