Erase blemishes and wrinkles with one click: in-depth interpretation of DAMO Academy's high-definition portrait skin beauty model ABPN

With the vigorous development of the digital cultural industry, artificial intelligence has come into wide use in image editing and beautification. Among these applications, portrait skin beautification is one of the most widely used and most in demand. Traditional beauty algorithms use filter-based image editing to automate skin smoothing and blemish removal, and they are widely deployed in social networking, live streaming, and similar scenarios.

However, in the professional photography industry, where the bar is high, the requirements on image resolution and quality mean that human retouchers are still the main workforce for portrait retouching. They handle a series of tasks including skin smoothing, blemish removal, and whitening. A professional retoucher typically needs 1-2 minutes on average to beautify the skin in one high-definition portrait. In fields that demand higher precision, such as advertising, film, and television, the processing time is longer.

Compared with skin smoothing in interactive entertainment scenarios, advertising-grade and studio-grade skin beautification places higher demands on the algorithm. First, there are many types of blemishes, including acne, acne marks, freckles, and uneven skin tone, and the algorithm needs to handle each of them adaptively. Second, while removing blemishes, the algorithm must preserve the texture and feel of the skin as much as possible to achieve high-precision retouching. Last but not least, as photographic equipment keeps improving, the image resolution commonly used in professional photography has reached 4K or even 8K, which places stringent requirements on the algorithm's processing efficiency.

Therefore, aiming at professional-grade intelligent skin beautification, we developed ABPN, an ultra-fine local retouching algorithm for high-definition images. It has achieved good results in skin beautification and has also been applied to clothing wrinkle removal.

- Paper: https://openaccess.thecvf.com/content/CVPR2022/papers/Lei_ABPN_Adaptive_Blend_Pyramid_Network_for_Real-Time_Local_Retouching_of_CVPR_2022_paper.pdf

- Model & code: https://www.modelscope.cn/models/damo/cv_unet_skin-retouching/summary

3.1 Traditional beauty algorithm

The core of the traditional beauty algorithm is to smooth the pixels in the skin area and reduce the visibility of blemishes, thereby making the skin look smoother. Generally speaking, existing beauty algorithms can be divided into three steps: 1) image filtering, 2) image fusion, and 3) sharpening. The overall process is as follows:

The original image comes from Unsplash [31].

In terms of results, the traditional beauty algorithm has two major problems: 1) Blemishes are processed non-adaptively, so different types of blemishes are not handled well. 2) Smoothing destroys skin texture and fine detail. These problems are particularly noticeable in high-definition images.
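The three steps described above (filtering, fusion, sharpening) can be sketched roughly as follows. This is a minimal illustration, not the pipeline of any particular product: the box filter, the unsharp-mask sharpening, the function names, and the `strength` and `sharpen` parameters are all assumptions chosen for brevity, and the image is grayscale.

```python
import numpy as np

def box_blur(img, radius):
    """Mean filter with edge padding; a stand-in for the smoothing filter."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    h, w = img.shape
    # Sum the k*k shifted copies of the image, then normalise.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def traditional_beautify(img, skin_mask, radius=2, strength=0.8, sharpen=0.3):
    """img, skin_mask: 2-D float arrays with values in [0, 1]."""
    smooth = box_blur(img, radius)                       # 1) image filtering
    alpha = strength * skin_mask
    fused = alpha * smooth + (1.0 - alpha) * img         # 2) image fusion
    detail = fused - box_blur(fused, 1)
    return np.clip(fused + sharpen * detail, 0.0, 1.0)   # 3) sharpening

# A flat patch of "skin" with one dark blemish in the centre.
patch = np.full((9, 9), 0.8)
patch[4, 4] = 0.2
result = traditional_beautify(patch, np.ones_like(patch))
```

The same smoothing is applied to every pixel inside the mask regardless of what kind of blemish it covers, and genuine skin texture is attenuated along with the blemish, which is exactly the pair of problems noted above.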

3.2 Existing deep learning algorithm

To achieve adaptive retouching of different skin areas and different blemishes, data-driven deep learning algorithms appear to be a better solution. Considering task relevance, we discuss and compare the applicability of four existing families of methods to skin beautification: Image-to-Image Translation, Photo Retouching, Image Inpainting, and High-resolution Image Editing.

- 3.2.1 Image-to-Image Translation

The image-to-image translation task was first formulated by pix2pix [1], which casts a large number of computer vision tasks as pixel-to-pixel prediction and proposes a general framework based on conditional generative adversarial networks to solve them. Building on pix2pix [1], various methods have been proposed for image translation, including methods using paired images [2, 3, 4, 5] and methods using unpaired images [6, 7, 8, 9]. Some work focuses on specific image translation tasks (such as semantic image synthesis [2, 3, 5] and style transfer [9, 10, 11, 12]) and has achieved impressive results. However, most of these methods focus on the global transformation from image to image and pay little attention to local regions, which limits their performance on skin beautification.

- 3.2.2 Photo Retouching

Benefiting from the development of deep convolutional neural networks, learning-based methods [13, 14, 15, 16] have shown excellent results in image retouching in recent years. However, like most image translation methods, existing retouching algorithms mainly manipulate global properties of the image, such as color, lighting, and exposure. Little attention is paid to retouching local areas, yet skin retouching is precisely a local photo retouching task: it must retouch the target area while keeping the background unchanged.

- 3.2.3 Image Inpainting

- 3.2.4 High-resolution Image Editing

Local retouching framework based on adaptive blending pyramid

The essence of skin beautification is image editing. Unlike most other image translation tasks, this editing is local. Similar tasks include clothing wrinkle removal and product retouching. These local image retouching tasks have much in common, and we summarize three main difficulties: 1) Accurate localization of the target area. 2) Local generation (retouching) with global consistency and detail fidelity. 3) Ultra-high-resolution image processing. To this end, we propose a local retouching framework based on the adaptive blend pyramid (ABPN: Adaptive Blend Pyramid Network for Real-Time Local Retouching of Ultra High-Resolution Photo, CVPR 2022 [27]) to achieve refined local retouching of ultra-high-resolution images. Its implementation details are introduced below.

4.1 Overall network structure

As shown in the figure above, the network consists of two main parts: the context-aware local retouching layer (LRL) and the adaptive blend pyramid layer (BPL). LRL retouches the downsampled low-resolution image locally and generates a low-resolution result, taking both global context and local texture into account. BPL then progressively upscales the low-resolution result from LRL to high resolution. For this we designed an adaptive blending module (ABM) and its reverse module (R-ABM). Using the intermediate blend layers $B_i$, we achieve adaptive conversion between the original image and the result image as well as upward expansion across resolutions, which gives strong scalability and detail fidelity. We conducted extensive experiments on two datasets, facial retouching and clothing retouching, and the results show that our method significantly outperforms existing methods in both effectiveness and efficiency. Notably, our model achieves real-time inference on 4K ultra-high-resolution images on a single P100 GPU. Below, we introduce LRL, BPL, and the training loss in turn.

4.2 Context-aware Local Retouching Layer

In LRL, we want to address two of the challenges mentioned above: accurate localization of the target area and local generation with global consistency. As shown in Figure 3, LRL consists of a shared encoder, a mask prediction branch (MPB), and a local retouching branch (LRB).

In general, we use a multi-task structure in which explicit prediction of the target area guides local retouching. The shared encoder lets joint training of the two branches optimize the features, improving the retouching branch's grasp of global semantics and its local perception of the target. Most image translation methods use a conventional encoder-decoder structure to perform local editing directly, without decoupling target localization from generation, which limits generation quality (network capacity is finite). In contrast, a multi-branch structure is more conducive to decoupling the tasks and letting them benefit each other. In the local retouching branch (LRB), we designed LAM (Figure 4), which uses a spatial attention mechanism and a feature attention mechanism together to fuse features fully and capture the semantics and texture of the target area. The ablation experiment (Figure 6) demonstrates the effectiveness of each module.
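To make the idea of combining spatial attention with feature attention more concrete, here is a parameter-free toy in NumPy. It is not the paper's LAM: reading "feature attention" as channel-wise attention is an assumption, the function names are hypothetical, and a real module would compute both gates with learned layers rather than plain pooling followed by a sigmoid.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(feat):
    """feat: (C, H, W). One gate per channel, from global average pooling."""
    pooled = feat.mean(axis=(1, 2))           # shape (C,)
    return sigmoid(pooled)[:, None, None]     # shape (C, 1, 1)

def spatial_attention(feat):
    """One gate per pixel, from the mean over channels."""
    pooled = feat.mean(axis=0)                # shape (H, W)
    return sigmoid(pooled)[None, :, :]        # shape (1, H, W)

def attend(feat):
    """Re-weight features by both gates at once via broadcasting."""
    return feat * channel_attention(feat) * spatial_attention(feat)

features = np.random.default_rng(0).normal(size=(8, 16, 16))
attended = attend(features)
```

The channel gate decides which feature maps matter, while the spatial gate decides where in the image they matter; applying both lets a branch emphasise the target region without discarding global context.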

4.3 Adaptive Blend Pyramid Layer

LRL performs local retouching at low resolution. How can the retouching result be extended to high resolution while improving its detail fidelity? This is the problem we address in this part.

- 4.3.1 Adaptive Blend Module

In image editing, a blend layer is often mixed with the image (the base layer) in different modes to carry out various editing tasks, such as contrast enhancement, darkening, and lightening. Usually, given an image $I$ and a blend layer $B$, we can blend the two layers to obtain the editing result $R$, as follows:

$$R = f(I, B)$$

where $f$ is a fixed pixel-wise mapping function, usually determined by the blend mode. Because its conversion capability is limited, a specific blend mode with a fixed function $f$ is difficult to apply directly to a variety of editing tasks. To better adapt to the data distribution and to the conversion modes of different tasks, we drew on the Soft Light mode commonly used in image editing and designed an adaptive blending module (ABM); its formula (formula 3) is given in the paper [27].

In that formula, $\odot$ denotes the Hadamard product, the coefficients are learnable parameters shared by all ABM modules in the network and by the R-ABM module below, and $\mathbf{1}$ denotes a constant matrix whose entries are all 1.
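As a rough illustration of the blend mode that ABM starts from, the sketch below implements a fixed Soft Light blend. The specific variant used here (the Pegtop form, $R = (\mathbf{1} - 2B) \odot I \odot I + 2B \odot I$) is an assumption of this example, and it has no learnable parameters, so it should not be read as the paper's ABM formula.

```python
import numpy as np

def soft_light_blend(image, blend):
    """Fixed soft-light blend (Pegtop form), applied element-wise.

    image, blend: float arrays of the same shape with values in [0, 1].
    """
    return (1.0 - 2.0 * blend) * image * image + 2.0 * blend * image

image = np.array([[0.2, 0.5],
                  [0.7, 0.9]])

unchanged = soft_light_blend(image, np.full_like(image, 0.5))   # neutral layer
lighter = soft_light_blend(image, np.full_like(image, 0.75))    # B > 0.5
darker = soft_light_blend(image, np.full_like(image, 0.25))     # B < 0.5
```

A blend value of 0.5 leaves a pixel untouched, values above 0.5 lighten it, and values below 0.5 darken it, so a blend layer that is 0.5 almost everywhere naturally encodes a local edit.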

- 4.3.2 Reverse Adaptive Blend Module

To obtain the blend layer $B$ from an image and its retouched result, we solve formula 3 for $B$ and construct a reverse adaptive blending module (R-ABM); its explicit form is given in the paper [27].

In general, with the blend layer as an intermediary, the ABM and R-ABM modules realize adaptive conversion between the image I and the result R. Compared with scaling up the low-resolution result directly with convolutional upsampling and similar operations (as in Pix2PixHD), using the blend layer for this purpose has two advantages: 1) In a local retouching task, the blend layer mainly records the local transformation between the two images, so it contains less irrelevant information and is easier for a lightweight network to optimize. 2) The blend layer acts directly on the original image to produce the final result, which makes full use of the information in the image itself and thereby achieves high detail fidelity.
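The two advantages above can be seen in a small NumPy sketch of the "retouch at low resolution, apply at high resolution" idea. Everything here is a simplifying assumption: the fixed Pegtop soft-light formula stands in for ABM, its algebraic inverse stands in for R-ABM, nearest-neighbour upsampling replaces the learned refinement of the blend layer, and all function names are hypothetical.

```python
import numpy as np

EPS = 1e-6

def soft_light_blend(image, blend):
    """Stand-in for ABM: R = (1 - 2B) * I * I + 2 * B * I."""
    return (1.0 - 2.0 * blend) * image * image + 2.0 * blend * image

def solve_blend_layer(image, result):
    """Stand-in for R-ABM: solve the formula above for B."""
    denom = 2.0 * image * (1.0 - image)
    return (result - image * image) / np.maximum(denom, EPS)

def downsample(img, factor):
    """Average pooling; height and width must be divisible by factor."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample(img, factor):
    """Nearest-neighbour upsampling."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)

def retouch_high_res(image_hr, retouch_low_res, factor=4):
    image_lr = downsample(image_hr, factor)
    result_lr = retouch_low_res(image_lr)               # low-resolution edit
    blend_lr = solve_blend_layer(image_lr, result_lr)   # recover blend layer
    blend_hr = upsample(blend_lr, factor)               # expand blend layer
    return np.clip(soft_light_blend(image_hr, blend_hr), 0.0, 1.0)

def brighten_corner(image_lr):
    """Toy local edit: brighten only the top-left 2x2 low-resolution pixels."""
    out = image_lr.copy()
    out[:2, :2] = np.clip(out[:2, :2] + 0.1, 0.0, 1.0)
    return out

image_hr = np.random.default_rng(0).uniform(0.2, 0.8, size=(16, 16))
output_hr = retouch_high_res(image_hr, brighten_corner)
```

Outside the edited corner the recovered blend layer is 0.5, so the high-resolution pixels pass through unchanged, and inside the corner the edit is applied on top of the original high-resolution pixels rather than on an upsampled copy of the low-resolution result.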

In fact, there are many alternative functions and strategies for the adaptive blending module. The paper discusses the design motivation and compares the alternatives in detail, so we do not elaborate here. Figure 7 shows the ablation comparison between our method and other blending methods.

- 4.3.3 Refining Module

4.4 Loss function

5.1 Comparison with SOTA method

5.2 Ablation experiment

5.3 Running speed and memory consumption

Effect display


Original image from unsplash [31]

The original image comes from the face dataset FFHQ [32].

Compared with the traditional beauty algorithm, the proposed local retouching framework fully preserves skin texture and fine detail while removing skin blemishes, achieving fine, intelligent skin optimization. Furthermore, we extended this method to clothing wrinkle removal and also achieved good results, as follows:

##

The above is the detailed content of Erase blemishes and wrinkles with one click: in-depth interpretation of DAMO Academy's high-definition portrait skin beauty model ABPN. For more information, please follow other related articles on the PHP Chinese website!

An AI Space Company Is BornMay 12, 2025 am 11:07 AM

An AI Space Company Is BornMay 12, 2025 am 11:07 AMThis article showcases how AI is revolutionizing the space industry, using Tomorrow.io as a prime example. Unlike established space companies like SpaceX, which weren't built with AI at their core, Tomorrow.io is an AI-native company. Let's explore

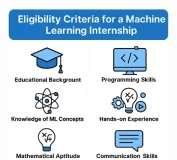

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

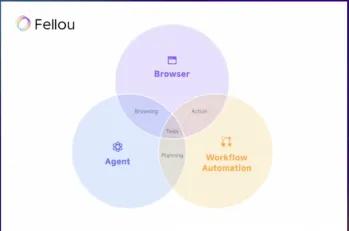

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft