
GAN-based smooth video generation with impressive results: no texture sticking, reduced jitter

In recent years, research on image generation with Generative Adversarial Networks (GANs) has made significant progress. Beyond generating high-resolution, realistic images, it has enabled many innovative applications, such as personalized image editing and image animation. However, using GANs to generate videos remains a challenging problem.

Beyond modeling individual frames, video generation also requires learning complex temporal relationships. Recently, researchers from the Chinese University of Hong Kong, Shanghai Artificial Intelligence Laboratory, Ant Technology Research Institute, and the University of California, Los Angeles proposed a new video generation method, described in the paper "Towards Smooth Video Composition". The paper models and improves temporal relationships at three spans (short-range, medium-range, and long-range) and achieves significant improvements over previous work on multiple datasets. The work provides a simple and effective new baseline for GAN-based video generation.

- Paper address: https://arxiv.org/pdf/2212.07413.pdf

- Project code link: https://github.com/genforce/StyleSV

Model architecture

A GAN-based image generator can be written as I = G(z), where z is a random latent variable, G is the generator network, and I is the generated image. This framework extends naturally to video generation: I_i = G(z_i), i = 1, ..., N, where N random variables z_i are sampled at once and each z_i generates one frame I_i. Stacking the generated frames along the time dimension yields the video.
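To make the formula concrete, here is a minimal sketch in Python with NumPy. The generator `G` below is a hypothetical toy (a fixed random linear map followed by tanh), not a real GAN; the point is only the sampling pattern: draw N independent latents, generate one frame per latent, and stack the frames along a new time axis.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy "generator": a fixed random linear map followed by tanh,
# turning one latent vector into one tiny H x W grayscale frame.
LATENT_DIM, H, W = 16, 8, 8
WEIGHTS = rng.standard_normal((LATENT_DIM, H * W))


def G(z):
    """Map one latent vector z (shape [LATENT_DIM]) to one frame (shape [H, W])."""
    return np.tanh(z @ WEIGHTS).reshape(H, W)


def naive_video(num_frames):
    """Sample z_1..z_N independently, generate I_i = G(z_i), stack over time."""
    zs = rng.standard_normal((num_frames, LATENT_DIM))
    frames = [G(z) for z in zs]
    return np.stack(frames, axis=0)  # shape [N, H, W]


video = naive_video(5)
print(video.shape)  # (5, 8, 8)
```

In this sketch nothing ties frame i to frame i+1, because every z_i is drawn independently; the decoupled formulation that follows is one way to share information across frames.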

MoCoGAN, StyleGAN-V, and other works build on this with a decoupled representation: I_i = G(u, v_i), i = 1, ..., N, where u is a random variable controlling the content and v_i is a random variable controlling the motion. Under this representation, all frames share the same content while each frame has its own motion. Decoupling content from motion makes it easier to generate videos whose content and style stay consistent while the motion varies realistically. The new work adopts the StyleGAN-V design as its baseline.
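A matching sketch of the decoupled form I_i = G(u, v_i), again with a hypothetical toy generator: the content code `u` is sampled once per video and reused for every frame, while a motion code `v_i` is sampled per frame. The dimensions and the 0.3 motion weight are arbitrary assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy generator taking a content code u and a motion code v.
CONTENT_DIM, MOTION_DIM, H, W = 12, 4, 8, 8
W_CONTENT = rng.standard_normal((CONTENT_DIM, H * W))
W_MOTION = rng.standard_normal((MOTION_DIM, H * W))


def G(u, v):
    """Generate one frame from a shared content code u and a per-frame motion code v."""
    return np.tanh(u @ W_CONTENT + 0.3 * (v @ W_MOTION)).reshape(H, W)


def decoupled_video(num_frames):
    u = rng.standard_normal(CONTENT_DIM)                # sampled once, shared by all frames
    vs = rng.standard_normal((num_frames, MOTION_DIM))  # sampled once per frame
    frames = np.stack([G(u, v) for v in vs], axis=0)    # shape [N, H, W]
    return frames, u, vs


video, u, vs = decoupled_video(5)
```

Here the v_i are still independent, so motion is random from one frame to the next; making the motion codes evolve smoothly over time is the issue taken up in the section on infinite-length generation.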

The difficulty in video generation: how to model temporal relationships effectively and sensibly?

The new work examines temporal relationships at three spans (short-range, medium-range, and long-range) and models and improves each one in detail:

1. Short-range (~5 frames) temporal relationships

Consider first a video with only a few frames. Such short clips usually contain very similar content and show only subtle motion, so realistically generating these subtle frame-to-frame movements is crucial. However, videos generated by StyleGAN-V suffer from severe texture sticking.

Texture sticking (also called texture adhesion) occurs when part of the generated content depends on absolute pixel coordinates, so that it appears "stuck" to a fixed region of the frame instead of moving with the scene. In image generation, StyleGAN3 alleviates texture sticking through careful signal processing, an enlarged padding range, and other measures. This work verifies that the same techniques remain effective for video generation.

To visualize this, the authors track the pixels at the same location in every frame of a video. In StyleGAN-V videos, some content stays "stuck" at fixed coordinates for long stretches of time instead of moving, which produces brush-stroke-like streaks in the visualization. In videos generated by the new work, all pixels move naturally.
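The pixel-tracking visualization can be approximated with a simple diagnostic. The sketch below is hypothetical, not the paper's code: `temporal_slice` takes one fixed row from every frame and stacks those rows over time, and `stuck_fraction` reports how many pixel locations barely change across frames. The tolerance `tol` is an assumed value; real generated videos would need a threshold chosen for their value range.

```python
import numpy as np


def temporal_slice(video, row):
    """Stack one fixed row of pixels from every frame: [T, H, W] -> [T, W].
    Moving content appears as slanted streaks; content stuck to fixed
    coordinates appears as vertical streaks (constant down a column)."""
    return video[:, row, :]


def stuck_fraction(video, tol=1e-3):
    """Fraction of pixel locations whose value barely changes over time."""
    per_pixel_std = video.std(axis=0)  # [H, W]
    return float((per_pixel_std < tol).mean())


rng = np.random.default_rng(0)
base = rng.random((8, 16))                                       # one H x W frame
static = np.stack([base] * 8)                                    # nothing moves
moving = np.stack([np.roll(base, t, axis=1) for t in range(8)])  # shifts 1 px per frame
print(stuck_fraction(static), stuck_fraction(moving))
```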

However, the researchers found that adopting the StyleGAN3 backbone degrades per-frame image quality. To mitigate this, they introduced image-level pre-training. During pre-training, the network only has to care about the quality of individual frames and does not need to model temporal relationships, which makes it easier to learn the image distribution.
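One way to picture the two training stages is as two ways of sampling training data from the same videos. The sampler below is a hypothetical sketch, not the authors' training code: in the `"image"` stage it returns single frames, so only per-frame quality is supervised; in the `"video"` stage it returns clips of `clip_len` consecutive frames for temporal training.

```python
import numpy as np


def sample_training_batch(videos, stage, batch_size, clip_len, rng):
    """Hypothetical two-stage sampler over videos of shape [num_videos, T, H, W].

    stage == "image": single frames [B, H, W] (image-level pre-training,
                      no temporal modeling required).
    stage == "video": clips [B, clip_len, H, W] for temporal training.
    """
    num_videos, T = videos.shape[:2]
    vid_idx = rng.integers(0, num_videos, size=batch_size)
    if stage == "image":
        t = rng.integers(0, T, size=batch_size)
        return videos[vid_idx, t]
    if stage == "video":
        start = rng.integers(0, T - clip_len + 1, size=batch_size)
        return np.stack([videos[v, s:s + clip_len] for v, s in zip(vid_idx, start)])
    raise ValueError(f"unknown stage: {stage}")


rng = np.random.default_rng(0)
videos = rng.random((4, 10, 8, 8))  # stand-in dataset: 4 videos, 10 frames each
frames = sample_training_batch(videos, "image", 3, 5, rng)
clips = sample_training_batch(videos, "video", 3, 5, rng)
```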

2. Medium-range (~5 seconds) temporal relationships

As a generated video grows to more frames, it can depict more specific actions, so it becomes important that the motion is realistic. For example, a first-person driving video should show the ground and street scenery gradually receding, and approaching cars should follow natural driving trajectories.

In adversarial training, the discriminator is crucial for giving the generator sufficient supervision. For video generation, this means that if the generator is to produce realistic motion, the discriminator must model temporal relationships across multiple frames and catch unrealistic motion. In previous work, however, the discriminator performed temporal modeling only through a simple concatenation: y = cat(y_i), where y_i is the feature of a single frame and y is the temporally fused feature.
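For reference, concatenation-based fusion amounts to stacking per-frame features along the channel axis. The sketch assumes per-frame feature maps of shape [N, C, H, W]; the function name is hypothetical.

```python
import numpy as np


def concat_fusion(frame_features):
    """y = cat(y_1, ..., y_N): fuse per-frame discriminator features by
    stacking them along the channel axis.
    frame_features: [N, C, H, W] (one C-channel feature map per frame)
    returns:        [N * C, H, W]"""
    return np.concatenate(list(frame_features), axis=0)


rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 3, 2, 2))  # 4 frames, 3 channels each
fused = concat_fusion(feats)               # 12 channels
```

In this scheme, any temporal reasoning has to happen in the layers after the fusion point; before it, each frame is processed on its own.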

For the discriminator, the new work proposes explicit temporal modeling by inserting a Temporal Shift Module (TSM) into every layer of the discriminator. TSM originates in action recognition and exchanges information across time with a simple shift operation: a portion of each frame's feature channels is shifted one step forward in time, another portion one step backward, and the remaining channels are left unchanged.
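The shift operation can be sketched in a few lines of NumPy for a feature tensor of shape [T, C, H, W]. Shifting 1/8 of the channels in each direction follows the default of the original TSM work; the split used in this paper's discriminator is not stated in the article, so `fold_div` is an assumption. Boundary frames receive zeros where no neighbour exists.

```python
import numpy as np


def temporal_shift(x, fold_div=8):
    """TSM-style shift on features x of shape [T, C, H, W].
    1/fold_div of the channels take their values from the next frame,
    another 1/fold_div from the previous frame; the rest are unchanged.
    Missing neighbours at the clip boundaries are zero-filled."""
    C = x.shape[1]
    fold = C // fold_div
    out = np.zeros_like(x)
    out[:-1, :fold] = x[1:, :fold]                  # frame t receives frame t+1
    out[1:, fold:2 * fold] = x[:-1, fold:2 * fold]  # frame t receives frame t-1
    out[:, 2 * fold:] = x[:, 2 * fold:]             # untouched channels
    return out


x = np.arange(4 * 16, dtype=float).reshape(4, 16, 1, 1)  # T=4 frames, C=16 channels
y = temporal_shift(x)
```

The shift adds no parameters and almost no computation, yet afterwards each frame's features contain information from its neighbours, so the following convolution sees more than one time step.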

Experiments show that introducing TSM substantially reduces both FVD16 and FVD128 on all three datasets.
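FVD (Fréchet Video Distance) and FID (Fréchet Inception Distance) share one formula: fit a Gaussian to features of real samples and another to features of generated samples, then compute the Fréchet distance between the two; lower is better. FVD16 and FVD128 evaluate clips of 16 and 128 frames. The sketch covers only the distance itself; the pretrained feature extractor (I3D for FVD, Inception for FID in the standard implementations) is omitted, and random arrays stand in for its features.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets [num_samples, dim]:
    ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^(1/2))."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((S_a S_b)^(1/2)) is the sum of square roots of the eigenvalues of S_a S_b,
    # which are real and non-negative for PSD inputs (clip tiny numerical noise).
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)


rng = np.random.default_rng(0)
real_feats = rng.standard_normal((500, 8))        # stand-in for real-video features
fake_feats = rng.standard_normal((500, 8)) + 0.5  # stand-in for generated-video features
print(frechet_distance(real_feats, fake_feats))
```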

3. Infinite-length video generation

The improvements above target short- and medium-length video generation. The new work goes further and explores how to generate high-quality videos of arbitrary length, including infinitely long ones. Previous work (StyleGAN-V) can generate infinitely long videos, but they contain very noticeable periodic jitter.

In the paper's example from StyleGAN-V, the zebra crossing at first recedes normally as the vehicle moves forward, then abruptly reverses direction. The authors found that this jitter is caused by discontinuities in the motion embeddings.

Previous work computed the motion embeddings by linear interpolation. Linear interpolation, however, introduces first-order discontinuities: the embedding itself is continuous, but its rate of change jumps abruptly at each interpolation anchor. (The paper illustrates this with the interpolation scheme on the left and a t-SNE visualization of the features on the right.)
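A minimal sketch of the linear case shows where the first-order discontinuity comes from. It assumes anchor motion codes placed at integer times (a hypothetical spacing) and measures the velocity of the interpolated code just before and just after an anchor.

```python
import numpy as np


def linear_motion_embedding(anchors, t):
    """Piecewise-linear interpolation of motion codes.
    anchors: [K, D] codes placed at integer times 0, 1, ..., K-1 (assumed spacing).
    Returns the motion code at continuous time t in [0, K-1]."""
    k = int(np.clip(np.floor(t), 0, len(anchors) - 2))
    alpha = t - k
    return (1 - alpha) * anchors[k] + alpha * anchors[k + 1]


anchors = np.array([[0.0], [1.0], [0.0]])
h = 1e-4
v_before = (linear_motion_embedding(anchors, 1.0) - linear_motion_embedding(anchors, 1.0 - h)) / h
v_after = (linear_motion_embedding(anchors, 1.0 + h) - linear_motion_embedding(anchors, 1.0)) / h
print(v_before, v_after)  # velocity flips from about +1 to about -1 at the anchor
```

The code itself is continuous at t = 1, but its velocity flips sign there. A sudden velocity change in the motion code is consistent with content abruptly changing direction, as in the zebra-crossing artifact.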

This work proposes B-spline-based motion embeddings. Interpolating with B-splines yields motion embeddings that vary smoothly over time (the paper shows the interpolation scheme on the left and a t-SNE visualization of the features on the right).
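For comparison, here is a uniform cubic B-spline over the same kind of anchor codes. This is a standard textbook B-spline used as an assumption; the article does not give the paper's exact spline degree or knot spacing. Each segment blends four neighbouring control codes with cubic basis weights, which makes the curve, its velocity, and its acceleration continuous at every knot.

```python
import numpy as np


def bspline_motion_embedding(anchors, t):
    """Uniform cubic B-spline over control codes.
    anchors: [K, D] control codes (K >= 4); valid t in [0, K-3].
    The curve and its first and second derivatives are continuous in t."""
    k = int(np.clip(np.floor(t), 0, len(anchors) - 4))
    u = t - k
    p0, p1, p2, p3 = anchors[k:k + 4]
    # Uniform cubic B-spline basis weights (they sum to 6 for every u).
    b0 = (1 - u) ** 3
    b1 = 3 * u**3 - 6 * u**2 + 4
    b2 = -3 * u**3 + 3 * u**2 + 3 * u + 1
    b3 = u**3
    return (b0 * p0 + b1 * p1 + b2 * p2 + b3 * p3) / 6.0


rng = np.random.default_rng(0)
anchors = rng.standard_normal((6, 3))  # 6 control codes -> valid t in [0, 3]
h = 1e-4
for knot in (1.0, 2.0):
    v_before = (bspline_motion_embedding(anchors, knot) - bspline_motion_embedding(anchors, knot - h)) / h
    v_after = (bspline_motion_embedding(anchors, knot + h) - bspline_motion_embedding(anchors, knot)) / h
    print(knot, np.abs(v_before - v_after).max())  # small: no velocity jump at the knot
```

One design trade-off: a B-spline approximates its control codes rather than passing through them, so smoothness is gained at the cost of the curve not hitting each anchor exactly.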

Introducing B-spline-based motion embeddings alleviates the jitter.

In StyleGAN-V videos, the street lights and the ground suddenly change their direction of motion; in videos generated by the new work, the direction of motion stays consistent and natural.

In addition, the new work imposes a low-rank constraint on the motion embeddings to further reduce periodically repeating content.
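The article does not say how the low-rank constraint is formulated. As a purely hypothetical illustration, one common way to encourage low rank is to penalize the nuclear norm (the sum of singular values) of the matrix formed by stacking motion codes over time; this may differ from what the paper actually does.

```python
import numpy as np


def nuclear_norm(codes):
    """Sum of singular values of the [T, D] matrix of motion codes over time.
    Used as a penalty, it is a standard convex surrogate for matrix rank."""
    return float(np.linalg.svd(codes, compute_uv=False).sum())


def effective_rank(codes, rel_tol=1e-8):
    """Number of singular values above rel_tol times the largest one."""
    s = np.linalg.svd(codes, compute_uv=False)
    return int((s > rel_tol * s.max()).sum())


rng = np.random.default_rng(0)
full_rank_codes = rng.standard_normal((16, 4))                       # 16 time steps, 4-dim codes
low_rank_codes = np.outer(rng.standard_normal(16), rng.standard_normal(4))
print(effective_rank(full_rank_codes), effective_rank(low_rank_codes))
print(nuclear_norm(full_rank_codes), nuclear_norm(low_rank_codes))
```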

Experiments

The work is evaluated extensively on three datasets (YouTube Driving, SkyTimelapse, Taichi-HD) and compared thoroughly with previous work. The results show clear improvements in both image quality (FID) and video quality (FVD).

[Figure: experimental results on SkyTimelapse]

[Figure: experimental results on Taichi-HD]

## Summary

Summary

The above is the detailed content of Smooth video is generated based on GAN, and the effect is very impressive: no texture adhesion, jitter reduction. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

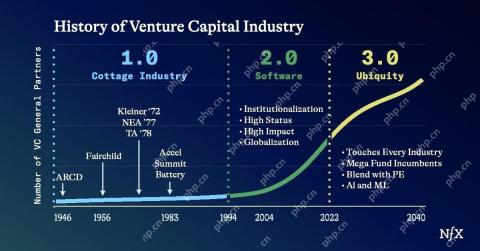

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Zend Studio 13.0.1

Powerful PHP integrated development environment

Atom editor mac version download

The most popular open source editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft