What happens when the customer support company upgrades certain features to ChatGPT?

ChatGPT has become a popular chatbot around the world, and almost every company is considering how to use it to improve business efficiency. Five days after it launched in November last year, it had 1 million users. In the following two months, this artificial intelligence chatbot had more than 200 million users.

This machine learning language program, developed by San Francisco-based artificial intelligence developer OpenAI, can provide human-like text responses. It can summarize long articles or text conversations, offer writing advice, plan marketing campaigns, draw up business plans, and even create or modify computer code, all with a limited investment.

Microsoft, which owns 49% of OpenAI, has invested billions of dollars in the company. Microsoft has also launched a Bing search engine based on GPT-4, the latest version of OpenAI's large language model; ChatGPT is also built on OpenAI's large language models. Not to be outdone, Google recently released its own experimental artificial intelligence chatbot.

Intercom, a customer support software provider whose products are used by 25,000 companies around the world, including Atlassian, Amazon, and Lyft Business, is currently at the forefront of ChatGPT usage. The company's software uses features of the large language model behind ChatGPT (GPT-3.5) to add AI-enabled functionality to its platform.

Fergal Reid, director of machine learning at Intercom, said that adding ChatGPT functionality to the company’s artificial intelligence customer service software has undeniable advantages. Intercom's software is used to help customer service representatives answer customer questions. Intercom also sells a support chatbot called "Resolution Bot" that businesses can embed on their websites to automatically answer end-user questions.

But Reid warned that ChatGPT also has some problems that cannot be ignored. Due to pricing issues, the new customer service software is still in beta for hundreds of Intercom customers.

However, customers who have tested Intercom’s software have praised the updated software, which is based on OpenAI’s GPT-3.5 language model, and claim it will make their jobs easier.

In an interview with industry media, Reid elaborated on the process of customizing ChatGPT software for Intercom’s commercial use, how it provides business value, and the challenges he and his machine learning team have faced and still face.

The following is an excerpt from the interview:

Could you describe Intercom's business? Why did you feel the need to upgrade your existing products?

Reid: Our business is basically customer service. We developed a messenger, so if someone has a customer support or service question, they go to a business's website and type the question into the messenger, just like a WhatsApp chat.

Intercom is one of the leaders in the customer support industry and one of the first companies to launch this business messenger service. So we developed this messenger and then built an entire customer support platform for customer support people (we call them teammates) whose job it was to answer customer support questions repeatedly, day after day.

We found that ChatGPT goes to another level in handling random conversations. When people ask questions, they may ask them in surprising ways. During the course of a conversation, people may bring up things they said in previous turns of the conversation. This is difficult for traditional machine learning systems to handle, but OpenAI's new technology seems to do a better job at this.

We tried ChatGPT and GPT-3. We were like, "This is a big deal. This will unlock more powerful capabilities for our chatbots and teammates."

How long did it take to build and roll out the ChatGPT upgrade?

Reid: We started developing the product in early December last year and demonstrated the first prototype in early January. It was a very fast development cycle. By about mid-January, our product already had 108 customers using it for testing, and then another beta version was released at the end of January. Our product is now in public beta, so hundreds of customers are using it. We still call it a beta, even though people are using it in production every day.

This is a compelling demo prototype that doesn't provide real value yet. So, we still have a lot of work to do to understand how much real value this prototype has.

There is also the question of cost. OpenAI's APIs are very expensive compared with any other API we might use. Every request costs money, even one that just asks for a summary, and while that is much cheaper than having a person do it, it is a problem businesses have to solve. So how do we price it?

That's another reason why we're still in the beta phase. We, like everyone else, are still learning how much this technology will cost. Measured against the computer processing costs, it saves people more time, but how to make the economics work still needs to be explored.

What is the difference between ChatGPT and OpenAI’s GPT-3.5 large language model? Is Intercom’s product developed in collaboration with them?

Reid: I think ChatGPT is more like a front end to the GPT-3.5 model. Anyone building on ChatGPT is building on the same underlying model, which OpenAI calls GPT-3.5. They are basically the same; the difference is in the user interface.

ChatGPT is trained with more guardrails, so if you ask it to do something it doesn't want to do, it will say, "I'm just a large language model, I can't do that." The underlying language models don't have these guardrails. They are not trained to talk to end users on the Internet. Therefore, any developer building a product is using the underlying model, not the ChatGPT interface. However, in terms of depth of understanding and the capabilities of the underlying model, it's basically the same.

The model we are using is text-davinci-003; basically everyone is using it.

Did you have a choice of what to build on? Are there other large language models from third parties that could be used to build new features for service representatives?

Reid: Currently, ChatGPT itself is an application hosted by OpenAI. No one can really build on ChatGPT except OpenAI. Technically, I think ChatGPT is a service that OpenAI provides to the public. When anyone builds "a ChatGPT thing" on their website, to be more precise, they are using the same OpenAI model that powers ChatGPT.

Is ChatGPT used for the same tasks as Intercom's Resolution Bot product?

Reid: The functionality we initially released was aimed at customer support staff, not end users. We develop chatbots for end users and then have machine learning-based productivity features for customer support staff. Our initial release of the Resolution Bot product had features to make customer support staff better, it was not designed for end users.

The reason we do this is that many of OpenAI’s current machine learning models suffer from so-called hallucinations. If you ask them a question and they don't have the correct answer, they will often make one up.

This situation exceeded our expectations. There are features that are obviously valuable, like summarizing, and then there are others, like rephrasing text or making text more user-friendly.

Arguably, their job is not to ensure that the information they provide is true, so they simply make things up. We were initially reluctant to put them in front of end users to answer questions. We were concerned that our customers’ experience would be affected by the chatbot making up incorrect answers. Our early testing does show that letting customers use an immature chatbot powered by ChatGPT is a very bad idea. We will continue to work hard, and we think there will be better solutions in the future.

If this tool just makes up answers, what use is it to customer support people?

Reid: While we are working in this area and certainly have internal R&D prototypes, at this time we have not named any products or tools or committed to releasing them.

We originally released the chatbot just to help customer support people, because they usually know what the correct answer is. The chatbot still lets them provide service faster and more efficiently, because 90% of the time they don't have to type the answer themselves. Then, the 10% of the time when something goes wrong, the support person can fix it directly.

So, it becomes more like an interface. If you use Google Docs or any predictive text that can give suggestions, it's okay that sometimes the suggestions are wrong, but when they give the right suggestions, it will increase user efficiency. That's why we initially released a beta version, which by the end of January this year had been tested by hundreds of customers and we received a lot of positive feedback on the new features. It makes customer support staff more efficient and increases sales.

It helps sales reps rephrase text. It doesn’t just automatically send text to end users; it’s designed to let customer support staff complete tasks faster.

Are there other great features of ChatGPT for account reps?

Reid: Another ChatGPT-based feature that we built is summaries. This large language model is excellent at processing existing text and generating summaries of long articles or text conversations. Likewise, when a customer raises an issue that is too complex for ChatGPT, the conversation has to be handed over to customer support staff, who are often asked to write a summary of the conversation with the end user. Some customer support staff say that writing summaries on top of responding to customers is time-consuming, but they have to do it.

So, this technology is excellent at summarizing text. One of the features we're most proud of is this summary feature, which lets you press a button and get a summary of a conversation, which can then be edited and sent to the client.

These features are designed to allow customers to participate and continuously enhance the capabilities of ChatGPT. Customer support doesn’t need to spend more time reading through the entire conversation and figuring out the main takeaways. Instead, ChatGPT pulls out the relevant snippets, and customer support just needs to confirm.

These models are much better than those we have developed before, but they are still not perfect. They still occasionally overlook details and fail to understand things that a skilled customer support staff would.

How did you modify the ChatGPT software to meet your needs? How was this done?

Reid: OpenAI provides us with an API that we can send text to and get text back from its model. Unlike in the past, the way people actually use this technology is to "tell it" what they want it to do and send it some text, such as "Summarize the following conversation:"

Customer: "Hi, I have a question."

Customer representative: "Hello, how can I help you?"

You send it that text directly, including the instruction for what you want it to do, and it sends back text, which in this case contains the summary. We then process the result and present it to the customer support representative, who can choose whether or not to use it.
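The request Reid describes, an instruction followed by the conversation text, can be sketched roughly as follows. This is a minimal illustration, not Intercom's implementation: the function names `build_summary_prompt` and `draft_summary`, the wording of the instruction, and the `fake_complete` stand-in for the model call are all assumptions made for the example. In a real system the `complete` callable would wrap a request to the model provider's text-completion API.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text); hypothetical shape for a transcript turn


def build_summary_prompt(turns: List[Turn]) -> str:
    """Put a plain-language instruction in front of the transcript."""
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
    return (
        "Summarize the following customer support conversation "
        "in two or three sentences.\n\n"
        f"{transcript}\n\nSummary:"
    )


def draft_summary(turns: List[Turn], complete: Callable[[str], str]) -> str:
    """Send the prompt to a text-completion function and tidy the reply.

    The result is only a draft: a support representative is expected to
    review it and decide whether to use it.
    """
    return complete(build_summary_prompt(turns)).strip()


def fake_complete(prompt: str) -> str:
    """Stand-in for the model call so the sketch runs offline (assumption)."""
    return " The customer has a question and the representative offered to help. "


conversation = [
    ("Customer", "Hi, I have a question."),
    ("Customer representative", "Hello, how can I help you?"),
]

draft = draft_summary(conversation, fake_complete)
print(draft)
```

Keeping the model call behind a plain callable means the prompt-building step can be tested without network access, and the human review step stays outside the function, matching the point that the representative chooses whether to use the draft.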

Customers can use it to summarize emails. An email will usually contain the previous email history underneath, which can be used as context for the email. This capability was not available with earlier programming approaches, but it takes a lot of effort to get it to do what you want. When asking it to do something, you have to be very specific to avoid errors.

Have you used your own IT team or software engineers to customize ChatGPT? How difficult is it?

Reid: Intercom has a strong R&D team, just like any software and services technology company. I lead the machine learning team, so most of the people on the team are experts in machine learning, myself included. So, we have extensive experience training and working with machine learning models.

We have an internal customer success team that we treat as alpha customers. Intercom has 100 customer success reps. So, we deliver prototypes to them very quickly and get their feedback on the model. We are not using them to train the model; we are just using them as alpha testers to help identify issues and determine what went wrong.

We have a lot of work to do. It's easy to make a chatbot for demos, but there's a lot more to make it work in a production environment.

Do you think this chatbot product will eventually be shipped to end users directly, with no middleman, so to speak, in the form of a customer representative?

Reid: We're currently looking into that, and it's not ready yet, but we think this type of technology will be available to customers soon. Google recently released a product, and its artificial intelligence model made some errors at the launch event, which disappointed a lot of people.

So, everyone has to figure out how to deal with this occasional issue, and we're working hard to fix it.

How much time and effort do the upgraded AI-enabled features save customers and their customer service reps? Do they cut customer response time by a third or half?

Reid: Probably not that high. I don’t have an exact number because this technology is so new. While we already have the telemetry, it's probably going to be a few more weeks before we get the data. It's a hard thing to measure.

I would say that something like text summarization can save about 1 to 2 minutes out of a 10 to 15 minute conversation. That's some of the feedback we've gotten, and it's exciting to see. Since the open beta, you can find posts from Intercom customers on Twitter about the time they save.
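As a quick check on that estimate, the figures Reid gives (1 to 2 minutes saved in a 10 to 15 minute conversation) can be turned into a fractional range. The helper name `saving_range` is hypothetical, and the calculation is only back-of-the-envelope arithmetic on the numbers quoted in the interview, not measured data.

```python
def saving_range(saved_min: float, saved_max: float,
                 total_min: float, total_max: float) -> tuple:
    """Return the (lowest, highest) fraction of conversation time saved."""
    low = saved_min / total_max    # smallest saving in the longest conversation
    high = saved_max / total_min   # largest saving in the shortest conversation
    return low, high


low, high = saving_range(1, 2, 10, 15)
print(f"{low:.1%} to {high:.1%}")  # prints "6.7% to 20.0%"
```

Even the upper end of that range sits below the one-third figure raised in the question, which is consistent with Reid's answer that the saving is probably not that high.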

I also think everyone in this field faces the challenge of having to be honest. This technology is so exciting that it's hard to stay level-headed and not overhype it. What we're releasing is an AI bot for demonstration purposes that doesn't provide real value yet, so there's still a lot of work to do to understand how much real value is being delivered here. We'll learn more about this and hope to release it sooner rather than later and see what our customers think.

The customer feedback we received exceeded my expectations. Some features, such as summaries, are clearly valuable. Others include the ability to rephrase text or make it more user-friendly, and the ability to write an abbreviated version of a message and have it expanded. Customer feedback on these features has been good.

You don’t have any hard data to prove that doing this makes customer service reps more efficient?

Reid: Actually, we need a month or two to understand our telemetry and determine whether customers are using it consistently. We need to verify that. I think the killer apps are still being developed in this space.

We had a great demo using ChatGPT and it caught everyone's attention. But there are companies like Intercom that are figuring out how to take it from a toy to something with real business value. Even at Intercom, we'd say, "We've rolled out these features, they're cool and they seem valuable, but they're not game-changing enough." I think that's going to come with the next wave of technology, which is being developed now and has longer development cycles. None of this is as simple as a quick integration. We have to get very deep into the user's problem, all its different aspects, and where the technology fails. Many of our competitors and industry players are solving the same problems and creating even more valuable features.

We had a very fast development cycle, launched the chatbot very quickly, and got great feedback from our customers, which helped us decide where to go next. This is my realistic view of the current situation.

There is a lot of hype about ChatGPT right now. How do you deal with this when you're trying to lower customer expectations for your product?

Reid: Our actual strategy here is to try to be as honest as possible about our expectations. We feel we can differentiate ourselves from the hype wave by being honest.

How do you get customers to choose to use your new ChatGPT bot?

Reid: Intercom has more than 25,000 customers. We say to customers, "We have some beta products. Will you participate?" Some customers have opted in and indicated they would like to use the early software, but some will not. Some customers are risk-averse businesses (such as banks) that do not want to be part of a test project.

When we develop new software, we send them a message inviting them to join the beta. Our project manager started promoting the beta, and that's all we did. We invited hundreds of customers for testing in mid-January. After customers join, we open up these functions for them, and they use the API to process their data.

Then we start looking at the telemetry the next day to see where customers are using it and whether it is working for them. That's how we typically run tests at Intercom. When we contact them, we say, "Can we get your feedback on this? We'd like to get a quote from you." Some customers gave us real quotes within a few weeks, which we later posted on the blog.

We want to stand out from all the hype. Many startups just put up a landing page under the ChatGPT banner. We actually have products built on this technology and publish actual customer quotes on our website, and it's real.

The above is the detailed content of What happens when the customer support company upgrades certain features to ChatGPT?. For more information, please follow other related articles on the PHP Chinese website!

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM

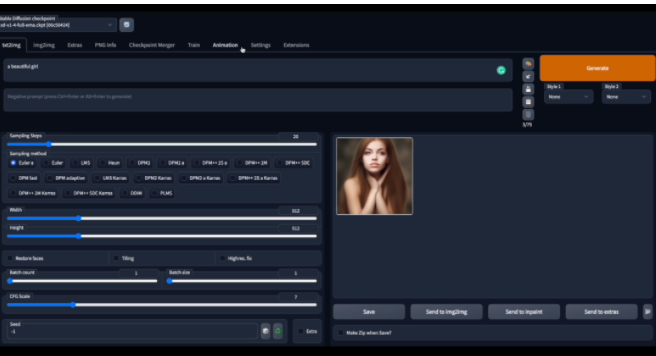

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM自从 ChatGPT、Stable Diffusion 发布以来,各种相关开源项目百花齐放,着实让人应接不暇。今天,着重挑选几个优质的开源项目分享给大家,对我们的日常工作、学习生活,都会有很大的帮助。

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AM

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AMWord文档拆分后的子文档字体格式变了的解决办法:1、在大纲模式拆分文档前,先选中正文内容创建一个新的样式,给样式取一个与众不同的名字;2、选中第二段正文内容,通过选择相似文本的功能将剩余正文内容全部设置为新建样式格式;3、进入大纲模式进行文档拆分,操作完成后打开子文档,正文字体格式就是拆分前新建的样式内容。

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM

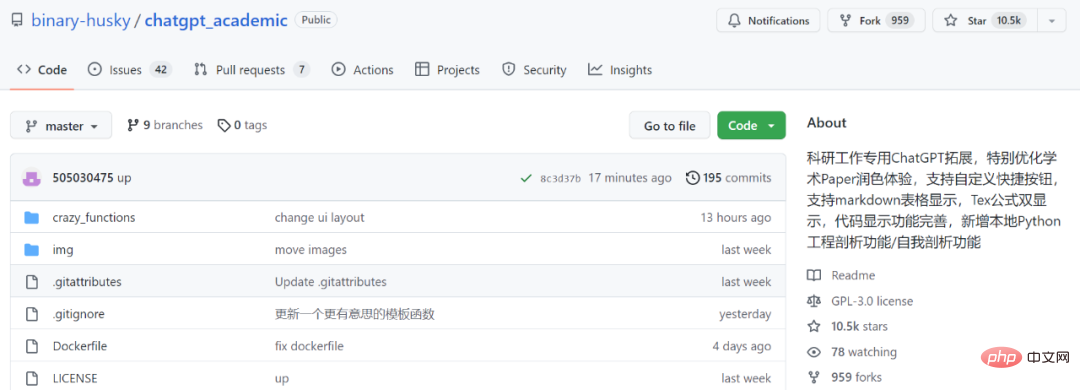

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM用 ChatGPT 辅助写论文这件事,越来越靠谱了。 ChatGPT 发布以来,各个领域的从业者都在探索 ChatGPT 的应用前景,挖掘它的潜力。其中,学术文本的理解与编辑是一种极具挑战性的应用场景,因为学术文本需要较高的专业性、严谨性等,有时还需要处理公式、代码、图谱等特殊的内容格式。现在,一个名为「ChatGPT 学术优化(chatgpt_academic)」的新项目在 GitHub 上爆火,上线几天就在 GitHub 上狂揽上万 Star。项目地址:https://github.com/

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM面对一夜爆火的 ChatGPT ,我最终也没抵得住诱惑,决定体验一下,不过这玩意要注册需要外国手机号以及科学上网,将许多人拦在门外,本篇博客将体验当下爆火的 ChatGPT 以及无需注册和科学上网,拿来即用的 ChatGPT 使用攻略,快来试试吧!

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。 阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。使用 Python 和 C

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AM

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AMChatGPT可以联网后,OpenAI还火速介绍了一款代码生成器,在这个插件的加持下,ChatGPT甚至可以自己生成机器学习模型了。 上周五,OpenAI刚刚宣布了惊爆的消息,ChatGPT可以联网,接入第三方插件了!而除了第三方插件,OpenAI也介绍了一款自家的插件「代码解释器」,并给出了几个特别的用例:解决定量和定性的数学问题;进行数据分析和可视化;快速转换文件格式。此外,Greg Brockman演示了ChatGPT还可以对上传视频文件进行处理。而一位叫Andrew Mayne的畅销作

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM本篇文章给大家带来了关于php的相关知识,其中主要介绍了我是怎么用ChatGPT学习PHP中AOP的实现,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Zend Studio 13.0.1

Powerful PHP integrated development environment

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),