Technology peripherals

Technology peripherals AI

AI Unbelievable! Use Numpy to develop deep learning framework and look into the neural network training process

Unbelievable! Use Numpy to develop deep learning framework and look into the neural network training processHello, everyone.

Today I would like to share with you a very awesome open source project. A deep learning framework was developed using Numpy. The syntax is basically the same as Pytorch.

Today we take a simple convolutional neural network as an example to analyze the core steps involved in the neural network training process, such as forward propagation, back propagation, and parameter optimization. Source code.

The data sets and codes used have been packaged, and there are ways to obtain them at the end of the article.

1. Preparation

First prepare the data and code.

1.1 Build the network

First, download the framework source code, address: https://github.com/duma-repo/PyDyNet

git clone https://github.com/duma-repo/PyDyNet.git

Build the LeNet convolutional neural network, Train a three-classification model.

Just create the code file directly in the PyDyNet directory.

from pydynet import nn class LeNet(nn.Module): def __init__(self): super().__init__() self.conv1 = nn.Conv2d(1, 6, kernel_size=5, padding=2) self.conv2 = nn.Conv2d(6, 16, kernel_size=5) self.avg_pool = nn.AvgPool2d(kernel_size=2, stride=2, padding=0) self.sigmoid = nn.Sigmoid() self.fc1 = nn.Linear(16 * 5 * 5, 120) self.fc2 = nn.Linear(120, 84) self.fc3 = nn.Linear(84, 3) def forward(self, x): x = self.conv1(x) x = self.sigmoid(x) x = self.avg_pool(x) x = self.conv2(x) x = self.sigmoid(x) x = self.avg_pool(x) x = x.reshape(x.shape[0], -1) x = self.fc1(x) x = self.sigmoid(x) x = self.fc2(x) x = self.sigmoid(x) x = self.fc3(x) return x

As you can see, the definition of the network is exactly the same as Pytorch syntax.

In the source code I provided, the summary function is provided to print the network structure.

1.2 Preparing data

The training data uses the Fanshion-MNIST data set, which contains 10 categories of pictures, 6k images in each category.

In order to speed up training, I only extracted the first 3 categories, a total of 1.8w training images, to make a three-classification model.

1.3 Model training

import pydynet from pydynet import nn from pydynet import optim lr, num_epochs = 0.9, 10 optimizer = optim.SGD(net.parameters(), lr=lr) loss = nn.CrossEntropyLoss() for epoch in range(num_epochs): net.train() for i, (X, y) in enumerate(train_iter): optimizer.zero_grad() y_hat = net(X) l = loss(y_hat, y) l.backward() optimizer.step() with pydynet.no_grad(): metric.add(l.numpy() * X.shape[0], accuracy(y_hat, y), X.shape[0])

The training code is also the same as Pytorch.

The key thing to do next is to go deep into the source code of model training to learn the principles of model training.

2. train, no_grad and eval

Before the model starts training, net.train will be called.

def train(self, mode: bool = True): set_grad_enabled(mode) self.set_module_state(mode)

You can see that it will set grad(gradient) to True, and the Tensor created afterwards can have gradients. After Tensor brings the gradient, it will be put into the calculation graph and wait for derivation to calculate the gradient.

The following with no_grad(): code

class no_grad: def __enter__(self) -> None: self.prev = is_grad_enable() set_grad_enabled(False)

will set grad(gradient) to False, so that the Tensor created later will not be placed in the calculation graph, and naturally it will not The gradient needs to be calculated, which can speed up inference.

We often see the usage of net.eval() in Pytorch, and we also take a look at its source code.

def eval(self): return self.train(False)

As you can see, it directly calls train(False) to turn off the gradient, and the effect is similar to no_grad().

So, generally call train to turn on the gradient before training. After training, call eval to close the gradient to facilitate fast inference.

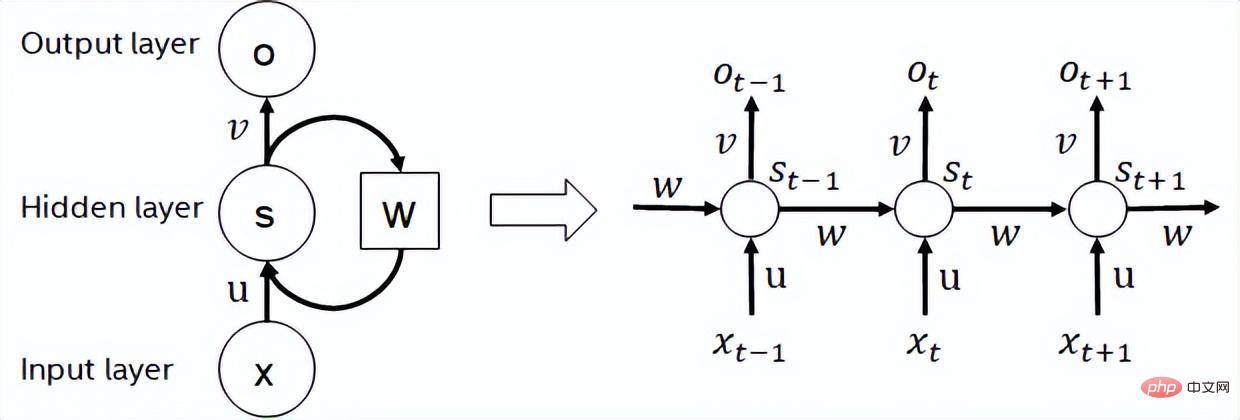

3. Forward propagation

In addition to calculating the category probability, the most important thing in forward propagation is to organize the tensors in the network into a calculation graph according to the order of forward propagation. The purpose It is used to calculate the gradient of each tensor during backpropagation.

In neural networks, tensor is not only used to store data, but also to calculate and store gradients.

Take the first layer convolution operation as an example to see how to generate a calculation graph.

def conv2d(x: tensor.Tensor, kernel: tensor.Tensor, padding: int = 0, stride: int = 1): '''二维卷积函数 ''' N, _, _, _ = x.shape out_channels, _, kernel_size, _ = kernel.shape pad_x = __pad2d(x, padding) col = __im2col2d(pad_x, kernel_size, stride) out_h, out_w = col.shape[-2:] col = col.transpose(0, 4, 5, 1, 2, 3).reshape(N * out_h * out_w, -1) col_filter = kernel.reshape(out_channels, -1).T out = col @ col_filter return out.reshape(N, out_h, out_w, -1).transpose(0, 3, 1, 2)

x is the input image, and there is no need to record the gradient. Kernel is the weight of the convolution kernel and needs to calculate the gradient.

So, the new tensor generated by pad_x = __pad2d(x, padding) also has no gradient, so it does not need to be added to the calculation graph.

The tensor generated by kernel.reshape(out_channels, -1) needs to calculate the gradient and also needs to be added to the calculation graph.

Let’s take a look at the joining process:

def reshape(self, *new_shape): return reshape(self, new_shape) class reshape(UnaryOperator): ''' 张量形状变换算子,在Tensor中进行重载 Parameters ---------- new_shape : tuple 变换后的形状,用法同NumPy ''' def __init__(self, x: Tensor, new_shape: tuple) -> None: self.new_shape = new_shape super().__init__(x) def forward(self, x: Tensor) return x.data.reshape(self.new_shape) def grad_fn(self, x: Tensor, grad: np.ndarray) return grad.reshape(x.shape)

The reshape function will return a reshape class object. The reshape class inherits the UnaryOperator class and is called in the __init__ function. Parent class initialization function.

class UnaryOperator(Tensor): def __init__(self, x: Tensor) -> None: if not isinstance(x, Tensor): x = Tensor(x) self.device = x.device super().__init__( data=self.forward(x), device=x.device, # 这里 requires_grad 为 True requires_grad=is_grad_enable() and x.requires_grad, )

The UnaryOperator class inherits the Tensor class, so the reshape object is also a tensor.

In the __init__ function of UnaryOperator, the initialization function of Tensor is called, and the required_grad parameter passed in is True, which means that the gradient needs to be calculated.

requires_gradThe calculation code is is_grad_enable() and x.requires_grad, is_grad_enable()has been set to True by train, and x is the convolution kernel, and its requires_grad is also True.

class Tensor: def __init__( self, data: Any, dtype=None, device: Union[Device, int, str, None] = None, requires_grad: bool = False, ) -> None: if self.requires_grad: # 不需要求梯度的节点不出现在动态计算图中 Graph.add_node(self)

Finally, in the initialization method of the Tensor class, call Graph.add_node(self) to add the current tensor to the calculation graph.

Similarly, new tensors that are commonly used in the tensor that requires_grad=True will be placed in the calculation graph.

After a convolution operation, 6 nodes will be added to the calculation graph.

4. Backpropagation

After one forward propagation is completed, start from the last node in the calculation graph and perform backpropagation from back to front.

l = loss(y_hat, y) l.backward()

After propagating layer by layer through the forward network, it is finally transmitted to the loss tensor l.

Taking l as the starting point and propagating from front to back, the gradient of each node in the calculation graph can be calculated.

The core code of backward is as follows:

def backward(self, retain_graph: bool = False): for node in Graph.node_list[y_id::-1]: grad = node.grad for last in [l for l in node.last if l.requires_grad]: add_grad = node.grad_fn(last, grad) last.grad += add_grad

Graph.node_list[y_id::-1] sorts the calculation graph in reverse order.

node是前向传播时放入计算图中的每个tensor。

node.last 是生成当前tensor的直接父节点。

调用node.grad_fn计算梯度,并反向传给它的父节点。

grad_fn其实就是Tensor的求导公式,如:

class pow(BinaryOperator): ''' 幂运算算子,在Tensor类中进行重载 See also -------- add : 加法算子 ''' def grad_fn(self, node: Tensor, grad: np.ndarray) if node is self.last[0]: return (self.data * self.last[1].data / node.data) * grad

return后的代码其实就是幂函数求导公式。

假设y=x^2,x的导数为2x。

5. 更新参数

反向传播计算梯度后,便可以调用优化器,更新模型参数。

l.backward() optimizer.step()

本次训练我们用梯度下降SGD算法优化参数,更新过程如下:

def step(self): for i in range(len(self.params)): grad = self.params[i].grad + self.weight_decay * self.params[i].data self.v[i] *= self.momentum self.v[i] += self.lr * grad self.params[i].data -= self.v[i] if self.nesterov: self.params[i].data -= self.lr * grad

self.params是整个网络的权重,初始化SGD时传进去的。

step函数最核心的两行代码,self.v[i] += self.lr * grad 和 self.params[i].data -= self.v[i],用当前参数 - 学习速率 * 梯度更新当前参数。

这是机器学习的基础内容了,我们应该很熟悉了。

一次模型训练的完整过程大致就串完了,大家可以设置打印语句,或者通过DEBUG的方式跟踪每一行代码的执行过程,这样可以更了解模型的训练过程。

The above is the detailed content of Unbelievable! Use Numpy to develop deep learning framework and look into the neural network training process. For more information, please follow other related articles on the PHP Chinese website!

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM

人工智能(AI)、机器学习(ML)和深度学习(DL):有什么区别?Apr 12, 2023 pm 01:25 PM人工智能Artificial Intelligence(AI)、机器学习Machine Learning(ML)和深度学习Deep Learning(DL)通常可以互换使用。但是,它们并不完全相同。人工智能是最广泛的概念,它赋予机器模仿人类行为的能力。机器学习是将人工智能应用到系统或机器中,帮助其自我学习和不断改进。最后,深度学习使用复杂的算法和深度神经网络来重复训练特定的模型或模式。让我们看看每个术语的演变和历程,以更好地理解人工智能、机器学习和深度学习实际指的是什么。人工智能自过去 70 多

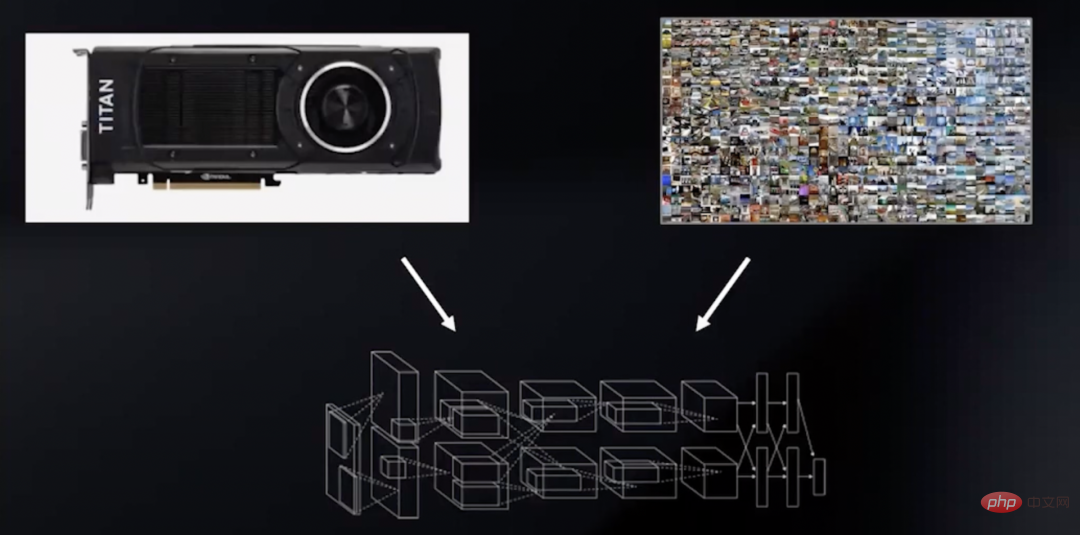

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM

深度学习GPU选购指南:哪款显卡配得上我的炼丹炉?Apr 12, 2023 pm 04:31 PM众所周知,在处理深度学习和神经网络任务时,最好使用GPU而不是CPU来处理,因为在神经网络方面,即使是一个比较低端的GPU,性能也会胜过CPU。深度学习是一个对计算有着大量需求的领域,从一定程度上来说,GPU的选择将从根本上决定深度学习的体验。但问题来了,如何选购合适的GPU也是件头疼烧脑的事。怎么避免踩雷,如何做出性价比高的选择?曾经拿到过斯坦福、UCL、CMU、NYU、UW 博士 offer、目前在华盛顿大学读博的知名评测博主Tim Dettmers就针对深度学习领域需要怎样的GPU,结合自

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM

字节跳动模型大规模部署实战Apr 12, 2023 pm 08:31 PM一. 背景介绍在字节跳动,基于深度学习的应用遍地开花,工程师关注模型效果的同时也需要关注线上服务一致性和性能,早期这通常需要算法专家和工程专家分工合作并紧密配合来完成,这种模式存在比较高的 diff 排查验证等成本。随着 PyTorch/TensorFlow 框架的流行,深度学习模型训练和在线推理完成了统一,开发者仅需要关注具体算法逻辑,调用框架的 Python API 完成训练验证过程即可,之后模型可以很方便的序列化导出,并由统一的高性能 C++ 引擎完成推理工作。提升了开发者训练到部署的体验

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM

基于深度学习的Deepfake检测综述Apr 12, 2023 pm 06:04 PM深度学习 (DL) 已成为计算机科学中最具影响力的领域之一,直接影响着当今人类生活和社会。与历史上所有其他技术创新一样,深度学习也被用于一些违法的行为。Deepfakes 就是这样一种深度学习应用,在过去的几年里已经进行了数百项研究,发明和优化各种使用 AI 的 Deepfake 检测,本文主要就是讨论如何对 Deepfake 进行检测。为了应对Deepfake,已经开发出了深度学习方法以及机器学习(非深度学习)方法来检测 。深度学习模型需要考虑大量参数,因此需要大量数据来训练此类模型。这正是

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PM

聊聊实时通信中的AI降噪技术Apr 12, 2023 pm 01:07 PMPart 01 概述 在实时音视频通信场景,麦克风采集用户语音的同时会采集大量环境噪声,传统降噪算法仅对平稳噪声(如电扇风声、白噪声、电路底噪等)有一定效果,对非平稳的瞬态噪声(如餐厅嘈杂噪声、地铁环境噪声、家庭厨房噪声等)降噪效果较差,严重影响用户的通话体验。针对泛家庭、办公等复杂场景中的上百种非平稳噪声问题,融合通信系统部生态赋能团队自主研发基于GRU模型的AI音频降噪技术,并通过算法和工程优化,将降噪模型尺寸从2.4MB压缩至82KB,运行内存降低约65%;计算复杂度从约186Mflop

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM

地址标准化服务AI深度学习模型推理优化实践Apr 11, 2023 pm 07:28 PM导读深度学习已在面向自然语言处理等领域的实际业务场景中广泛落地,对它的推理性能优化成为了部署环节中重要的一环。推理性能的提升:一方面,可以充分发挥部署硬件的能力,降低用户响应时间,同时节省成本;另一方面,可以在保持响应时间不变的前提下,使用结构更为复杂的深度学习模型,进而提升业务精度指标。本文针对地址标准化服务中的深度学习模型开展了推理性能优化工作。通过高性能算子、量化、编译优化等优化手段,在精度指标不降低的前提下,AI模型的模型端到端推理速度最高可获得了4.11倍的提升。1. 模型推理性能优化

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM

深度学习撞墙?LeCun与Marcus到底谁捅了马蜂窝Apr 09, 2023 am 09:41 AM今天的主角,是一对AI界相爱相杀的老冤家:Yann LeCun和Gary Marcus在正式讲述这一次的「新仇」之前,我们先来回顾一下,两位大神的「旧恨」。LeCun与Marcus之争Facebook首席人工智能科学家和纽约大学教授,2018年图灵奖(Turing Award)得主杨立昆(Yann LeCun)在NOEMA杂志发表文章,回应此前Gary Marcus对AI与深度学习的评论。此前,Marcus在杂志Nautilus中发文,称深度学习已经「无法前进」Marcus此人,属于是看热闹的不

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM

英伟达首席科学家:深度学习硬件的过去、现在和未来Apr 12, 2023 pm 03:07 PM过去十年是深度学习的“黄金十年”,它彻底改变了人类的工作和娱乐方式,并且广泛应用到医疗、教育、产品设计等各行各业,而这一切离不开计算硬件的进步,特别是GPU的革新。 深度学习技术的成功实现取决于三大要素:第一是算法。20世纪80年代甚至更早就提出了大多数深度学习算法如深度神经网络、卷积神经网络、反向传播算法和随机梯度下降等。 第二是数据集。训练神经网络的数据集必须足够大,才能使神经网络的性能优于其他技术。直至21世纪初,诸如Pascal和ImageNet等大数据集才得以现世。 第三是硬件。只有

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.