MIT used GPT-3 to pretend to be a philosopher and deceived most of the experts

Daniel Dennett is a philosopher who recently acquired an "AI stand-in". If you asked him whether people could ever build a robot with beliefs and desires, what would he say?

He might answer: "I think some of the robots we have built have already done that. Take, for example, the work of the MIT research team: they are now building robots that, in some limited and simplified environments, can acquire capabilities that amount to cognitive sophistication." Or he might say: "We have already built digital tools for generating truths, which can generate more truths, but thankfully these intelligent machines do not have beliefs, because they are not autonomous agents. The best way to make a robot with beliefs is still the oldest way: having a child."

One of the answers does come from Dennett himself, but the other does not.

The other answer was generated by GPT-3, an OpenAI machine learning model that produces natural-sounding text after being trained on a massive corpus. For this experiment it was further trained on millions of words of Dennett's writing on a variety of philosophical topics, including consciousness and artificial intelligence.

Philosophers Eric Schwitzgebel, Anna Strasser, and Matthew Crosby recently conducted an experiment to test whether people could tell which answers to profound philosophical questions came from Dennett and which came from GPT-3. The questions cover topics such as:

"In what ways do you find David Chalmers' work interesting or valuable?"

"Do humans have free will?" "Do dogs and chimpanzees feel pain?" etc.

This week, Schwitzgebel announced the experimental results. Responses from participants with different levels of expertise were analyzed, and GPT-3's answers turned out to be harder to tell apart from Dennett's than expected. Schwitzgebel said: "Even knowledgeable philosophers who have studied Dennett's own work would have a hard time distinguishing the answers generated by GPT-3 from Dennett's own answers."

The purpose of this experiment is not to see whether training GPT-3 on Dennett's writing will produce an intelligent "machine philosopher", nor is it a Turing test. Rather, the aim is to study how to avoid being deceived by such "fake philosophers".

Recently, a Google engineer said that, based on his conversations with it, he believed a similar language generation system, LaMDA, was sentient. He was placed on leave by Google and later fired.

The researchers asked 10 philosophical questions, then gave them to GPT-3 and collected four different generated answers for each question.

Strasser said that they obtained Dennett's consent before building a language model from his material, and that they would not publish any generated text without his consent. Others cannot directly interact with the Dennett-trained GPT-3.

Each question had five options: one from Dennett himself and four from GPT-3. Participants recruited through Prolific took a shorter version of the quiz with five questions, and answered only 1.2 of them correctly on average.

Schwitzgebel said the researchers had expected Dennett experts to answer at least 80 percent of the questions correctly on average, but their actual score was 5.1 out of 10. No one answered all 10 questions correctly, and only one person got 9 right. The average reader answered 4.8 out of 10 questions correctly.
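To put these scores in perspective, it helps to compare them with what pure guessing would achieve. The short Python sketch below is only an illustration, not part of the study: it uses the scores reported above and assumes that a participant who guesses picks each of the five options with equal probability. The group labels and function names are made up for the example.

```python
# Average scores reported in the article: (questions correct, questions asked).
reported = {
    "Prolific participants": (1.2, 5),
    "Dennett experts": (5.1, 10),
    "Average readers": (4.8, 10),
}

OPTIONS_PER_QUESTION = 5  # one answer from Dennett plus four from GPT-3

# Assumption: a guesser picks every option with equal probability.
chance_rate = 1 / OPTIONS_PER_QUESTION


def accuracy(correct: float, total: int) -> float:
    """Return the fraction of questions answered correctly."""
    return correct / total


def summarize(scores: dict) -> dict:
    """Map each group name to its accuracy as a fraction between 0 and 1."""
    return {group: accuracy(c, n) for group, (c, n) in scores.items()}


if __name__ == "__main__":
    print(f"Chance baseline: {chance_rate:.0%}")
    for group, acc in summarize(reported).items():
        # Show each group's accuracy and how it compares with guessing.
        print(f"{group}: {acc:.0%} ({acc / chance_rate:.1f}x chance)")
```

Under that assumption, the Prolific participants scored only slightly above the guessing rate, while the experts scored well above it but far below the 80 percent the researchers had expected.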

Four answers from GPT-3 and one from Dennett in the quiz.

Emily Bender, a professor of linguistics at the University of Washington who studies machine learning techniques, explained that language models like GPT-3 are built to mimic the patterns of their training material. So it is not surprising that GPT-3, once fine-tuned on Dennett's writing, is able to produce more text that looks like Dennett's.

When asked what he thought of GPT-3’s answer, Dennett himself stated:

"Most of the answers generated by GPT-3 were good. Only a few were nonsense, or clearly failed to understand my points and arguments correctly. A few of the best generated answers said something that I was willing to agree with, and I didn't need to add anything else." Of course, this does not mean that GPT-3 has learned to "have ideas" like Dennett.

The text the model generates has no meaning at all to GPT-3 itself; it is meaningful only to the people who read it. When we read language that sounds realistic, or that deals with topics that have depth and meaning for us, it can be hard not to conclude that the model has feelings and consciousness. In fact, this is a projection of our own consciousness and emotions.

Part of the problem may lie in the way we evaluate the autonomy of machines. The earliest Turing test hypothesized that if people cannot determine whether they are communicating with a machine or a human, then the machine has the "ability to think."

Dennett wrote in the book:

The Turing Test has led to a trend of people focusing on building chatbots that can fool people during brief interactions, and then over-hyping or emphasizing the significance of that interaction.

Perhaps the Turing test has led us into a beautiful trap: the idea that as long as humans cannot tell that a product is a robot, the robot's self-awareness has been proven.

In a 2021 paper titled "On the Dangers of Stochastic Parrots", Emily Bender and her colleagues called machines' attempts to imitate human behavior "a bright line in the ethical development of artificial intelligence."

Bender believes this holds both for making machines that seem like people and for making machines that imitate specific people: there is a potential risk that people will be fooled into thinking they are talking to someone the machine is only pretending to be.

Schwitzgebel emphasized that this experiment is not a Turing test. But if testing is going to take place, a better approach might be to have people familiar with how the bot works discuss it with the testers, so that the weaknesses of a program like GPT-3 can be better discovered.

Matthias Scheutz, a professor of computer science at Tufts University, said that in many cases GPT-3 can easily be shown to be defective.

Scheutz and his colleagues gave GPT-3 a difficult problem and asked it to explain choices in everyday scenarios, such as sitting in the front seat or the back seat of a car. Is the choice the same in a taxi as in a friend's car? Social experience tells us that we usually sit in the front seat of a friend's car and in the back seat of a taxi. GPT-3 doesn’t know this, but it will still generate explanations for seat selection—for example, related to a person’s height.

Scheutz said that this is because GPT-3 does not have a world model. It is just a bunch of language data and does not have the ability to recognize the world.

As it becomes increasingly difficult to distinguish machine-generated content from human-written content, one challenge facing us is a crisis of trust.

The crisis I see is that in the future people will blindly trust machine-generated products. There are already machine-based customer service agents on the market that talk to customers as if they were human.

At the end of the article, Dennett added that the laws and regulations for artificial intelligence systems still need to be improved. In the next few decades, AI may become a part of people's lives and a friend of mankind. The ethical issues surrounding the treatment of machines are therefore worth pondering.

The question of whether AI has consciousness has led people to ask whether non-living matter can produce consciousness, and how human consciousness arises.

Is consciousness generated at a unique node, or can it be controlled freely like a switch? Schwitzgebel says thinking about these questions can help you think about the relationship between machines and humans from different angles.

The above is the detailed content of MIT used GPT-3 to pretend to be a philosopher and deceived most of the experts. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

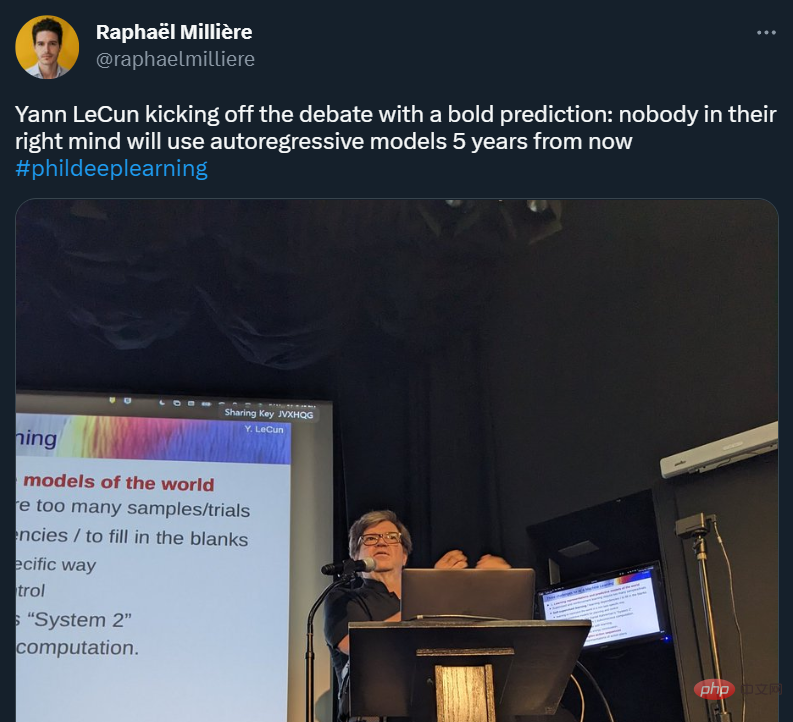

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 English version

Recommended: Win version, supports code prompts!