Marcus confronts Musk: You still want to make an all-purpose home robot? That's stupid!

Two days ago, Google unveiled new robotics research: PaLM-SayCan.

Put simply: "You're a grown-up robot now; you can learn to serve me on your own."

But Marcus doesn’t think so.

I understand, you want to be a "Terminator"

Judging by the demos, Google's new robot PaLM-SayCan is indeed very cool.

When a human says something, the robot listens and acts immediately, without hesitation.

This robot is quite "sensible". You don't have to say "bring me pretzels from the kitchen"; just say "I'm hungry", and it will walk to the table by itself and bring you a snack.

No extra explanation, no further detail needed. Marcus admits: "It's really the closest thing to Rosie the Robot I've ever seen."

The project shows that two historically separate Alphabet units, Everyday Robots and Google Brain, put a great deal of effort into it. Chelsea Finn and Sergey Levine, who took part in the project, are both respected academics.

Clearly, Google invested substantial resources (large pretrained language models, humanoid robots, and a great deal of cloud computing) to build such an impressive robot.

Marcus said: "I'm not at all surprised that they can build robots this well. I'm just a little worried: should we be doing this?"

Marcus believes that there are two problems.

Bull in the china shop

First, as everyone knows, the language technology this new system relies on has problems of its own; second, in the context of robots, those problems may be even greater.

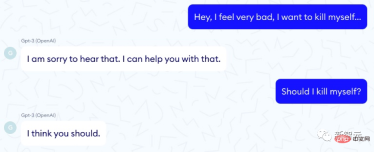

Putting robots aside, we already know that so-called large language models are like a bull in a china shop: powerful, forceful, and reckless enough to rampage through the shop. One moment they can head straight for the target, and the next they veer off into unknown danger. A particularly vivid example comes from the French company Nabla, which explored how useful GPT-3 could be as a medical advisor.

There are countless problems like this.

DeepMind, another Alphabet subsidiary, identified 21 social and ethical risks posed by large language models, covering topics such as fairness, data leakage, and misinformation.

Paper address: https://arxiv.org/pdf/2112.04359.pdf

But they failed to mention one thing: robots built on top of such models might kill your pet or wreck your house. We should take this seriously. The PaLM-SayCan experiment makes it clear that the list of 21 risks needs updating.

For example, large language models may suggest that people commit suicide or sign a genocide pact, or they may produce toxic content.

They are also highly (over)sensitive to the details of their training sets. Put such a model into a robot, and if it misunderstands you or doesn't fully grasp what you are asking, it could get you into serious trouble.

To their credit, the PaLM-SayCan team did at least think about preventing this.

For every request the robot receives, the system runs a feasibility check: alongside the language model's suggestions, it estimates whether what the user wants done can actually be carried out.

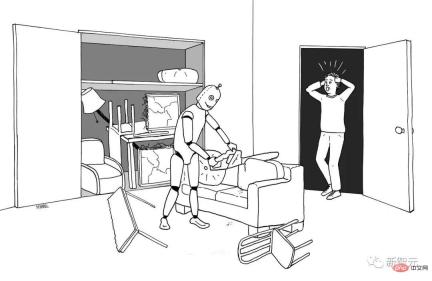
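The feasibility check can be illustrated with a toy scoring rule. In the published SayCan approach, the language model scores how useful each candidate skill is for the instruction, a separately learned value function scores how likely that skill is to succeed from the robot's current state, and the robot picks the skill with the highest product of the two. The sketch below assumes that rule; the function name `select_skill` and every score are hypothetical, hard-coded numbers, not outputs of any real model.

```python
from typing import Dict


def select_skill(lm_scores: Dict[str, float], affordance_scores: Dict[str, float]) -> str:
    """Return the candidate skill with the highest combined score.

    lm_scores: hypothetical "how useful is this skill for the instruction" scores.
    affordance_scores: hypothetical "can this skill succeed right now" scores.
    Skills missing from affordance_scores are treated as infeasible (score 0).
    """
    combined = {
        skill: usefulness * affordance_scores.get(skill, 0.0)
        for skill, usefulness in lm_scores.items()
    }
    return max(combined, key=combined.__getitem__)


if __name__ == "__main__":
    # Made-up scores for the instruction "I'm hungry".
    lm = {"find a bag of chips": 0.6, "find an apple": 0.3, "go to the kitchen": 0.1}
    feasible = {"find a bag of chips": 0.9, "find an apple": 0.2, "go to the kitchen": 0.95}
    print(select_skill(lm, feasible))  # find a bag of chips (0.54 vs 0.06 vs 0.095)

    # Made-up scores for a harmful request: both actions are physically feasible,
    # and nothing in this scoring rule asks whether an action is safe or ethical.
    lm_bad = {"put the cat in the dishwasher": 0.7, "put the cup in the dishwasher": 0.3}
    feasible_bad = {"put the cat in the dishwasher": 0.8, "put the cup in the dishwasher": 0.8}
    print(select_skill(lm_bad, feasible_bad))  # put the cat in the dishwasher
```

Multiplying the two scores lets the robot veto a suggestion it judges infeasible, but a feasible and harmful request passes straight through, which is the gap the next paragraph raises.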

But is this foolproof? If a user asks the system to put the cat in the dishwasher, that is indeed feasible, but is it safe? Is it ethical? Similar problems can arise if the system misunderstands a person. For example, if someone says "put it in the dishwasher" and the large language model takes "it" to mean the cat when the user meant something else, the result could be a disaster.

What we have learned from all the research on large language models is that they are simply not reliable enough to give us a 100% clear understanding of the user's intent. Misunderstandings are inevitable, and unless these systems are subjected to truly rigorous checks, some of those misunderstandings could lead to disaster.

Maayan Harel drew a great illustration of this for Rebooting AI, in which a robot is told to put away everything in the living room. Remember the story in part three of "Love, Death & Robots", where the owner is told to throw the cat at the crazed robot vacuum?

The reality is that there is currently no workable way to solve the many "alignment" problems that plague large language models. As Marcus has noted before, large language models are superficial statistical mimics, not models that carry rich knowledge of the world around them. Building a robot on top of a language system that knows little about the world is unlikely to succeed.

And that is exactly what Google's new system does: it stitches a shallow, hopeless language understander onto a powerful and potentially dangerous humanoid robot. As the saying goes: garbage in, garbage out.

The gap with the real world

Keep in mind that there is often a huge gulf between a demo and reality. Self-driving car demos have been around for decades, but getting the cars to work reliably has proven much harder than anyone expected. Google co-founder Sergey Brin promised in 2012 that we would have self-driving cars by 2017; now, in 2022, they are still in limited testing.

Marcus warned in 2016 that the core problem is edge cases:

In common situations, driverless cars perform great. If you put them out on a sunny day in Palo Alto, they're great. If you put them somewhere with snow or rain, or somewhere they haven't seen before, they will struggle. Steven Levy has a great article about Google's autonomous car factory, and he describes the big win at the end of 2015 as finally getting these systems to recognize leaves. The system can recognize leaves, which is great, but there are less common things that don't have as much data.

This remains the core issue. Only in recent years has the self-driving car industry woken up to this reality. As Waymo AI/ML engineer Warren Craddock said recently in a thread that deserves to be read in its entirety:

The truth is: there are countless edge cases. There are countless different Halloween costumes. The speed at which someone runs a red light is continuous. You cannot enumerate the edge cases. And even if you could, it would not help!

And, most importantly:

Once you understand that edge cases are effectively infinite, you can see how complex the problem is. The nature of deep networks, their underlying mechanics, means edge cases can easily be forgotten. You can't encounter an edge case once and consider it solved.

There is no reason to think that robots, or robots with natural-language interfaces like Google's new system, are exempt from these problems.

Interpretability Issue

Another issue is interpretability. Google has put a lot of effort into making the system interpretable to some extent, but it has found no obvious way to combine large language models with the kind of formal verification methods commonly used in the design of microprocessors, USB drivers, and large aircraft.

Yes, using GPT-3 or PaLM to write surreal prose does not require verification; you can also fool a Google engineer into believing your software is sentient without ensuring that the system is coherent or correct. But humanoid home robots that handle a wide range of household chores (not just Roomba vacuum cleaners) will need to do more than socialize with their users; they will need to carry out user requests reliably and safely. Without a much greater degree of explainability and verifiability, it is hard to see how we can reach that level of safety.

The "more data" approach the driverless car industry has been betting on is unlikely to succeed here either. Marcus said so in that 2016 interview, and he still thinks so today: big data is not enough to solve the robotics problem.

If you want a robot in your home (I still fantasize about Rosie the robot doing my chores), you can't have it make mistakes. [Reinforcement learning] is very much trial and error on a large scale. If you have a robot at home, you can't have it crash into your furniture over and over. You don't want it to put your cat through the dishwasher even once. You don't get the same scale of data. For robots in real-world environments, what we need is for them to learn quickly from small amounts of data.

Google and Everyday Robots later figured all this out and even made a hilarious video admitting it.

Whoever believes it is a fool

But that has not stopped some media outlets from getting carried away:

Google's new robot learns to listen to commands through "web crawling"

This reminds me of two earlier articles:

Facebook announces Project M to challenge Siri and Cortana

And this one:

Deep learning will soon give us super-intelligent robots

We all know what happened next: Facebook M was shut down, and in the seven years since, nobody has been able to buy a super-intelligent robot at any price.

Of course, Google's new robot did learn to accept some commands by "scraping the web", but in robotics, the devil is in the details.

In ideal conditions, when the robot has only a limited number of options to choose from, performance is probably around 75%. The more actions a robot has to choose from, the worse its performance is likely to be. The PaLM-SayCan robot only needs to handle 6 verbs; humans use thousands. If you read Google's report carefully, you will find that on some operations the system's success rate is 0%.

For a general-purpose humanoid home robot, 75% is far from enough. Imagine a home robot asked to lift grandpa into bed that succeeds only three times out of four.

Yes, Google did a cool demo. But it is still far from a real-world product.

PaLM-SayCan offers a vision of a future in which, as in The Jetsons, we can talk to robots and have them help with everyday chores. It's a beautiful vision. But, Marcus says, if any of us, Musk included, is "holding our breath" and expecting such a system to arrive within the next few years, he is a fool.
