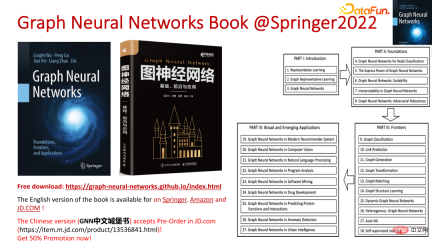

In recent years, graph neural networks (GNNs) have made rapid and remarkable progress. Graph neural networks, also known as graph deep learning, graph representation learning, or geometric deep learning, are the fastest-growing research topic in machine learning, especially deep learning. This talk, titled "Basics, Frontiers and Applications of GNN", gives an overview of the comprehensive book "Basics, Frontiers and Applications of Graph Neural Networks", compiled by Wu Lingfei, Cui Peng, Pei Jian and Zhao Liang.

## 1. Introduction to graph neural networks

1. Why study graphs?

Graphs are a universal language for describing and modeling complex systems. A graph itself is not complicated: it consists mainly of nodes and edges. Nodes can represent any object we want to model, and edges can represent the relationship or similarity between two nodes. What we call graph neural networks or graph machine learning usually takes the structure of the graph, together with the information on its nodes and edges, as the input of an algorithm that outputs the desired result. For example, when we enter a query into a search engine, the engine returns personalized results based on the query, the user, and some contextual information. All of this information can be organized naturally as a graph.
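As a minimal sketch of the idea above, the search-engine example can be stored as an adjacency list with per-node attributes. The `Graph` class and the node names (`user:alice`, `query:gnn book`, `doc:42`) are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


class Graph:
    """Undirected graph: an adjacency list plus a dict of node attributes."""

    def __init__(self):
        self.adj = defaultdict(set)   # node -> set of neighbor nodes
        self.features = {}            # node -> attribute dict

    def add_node(self, node, **attrs):
        self.adj[node]                # touching the key records isolated nodes too
        self.features[node] = attrs

    def add_edge(self, u, v):
        # Create missing endpoints so an edge never points at an unknown node.
        for n in (u, v):
            if n not in self.features:
                self.add_node(n)
        self.adj[u].add(v)
        self.adj[v].add(u)


g = Graph()
g.add_node("user:alice", kind="user")
g.add_node("query:gnn book", kind="query")
g.add_node("doc:42", kind="document")
g.add_edge("user:alice", "query:gnn book")   # the user issued the query
g.add_edge("query:gnn book", "doc:42")       # the query matched the document
```

A graph learning algorithm would consume both `g.adj` (the structure) and `g.features` (the node information).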

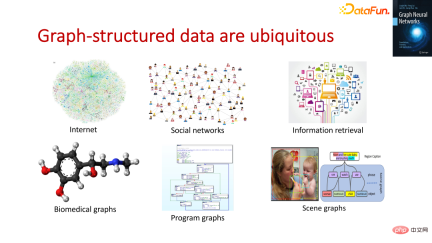

2. Graph structured data is everywhere

Graph-structured data is everywhere, for example in the Internet and in social networks. In the currently very popular field of protein discovery, graphs are used to describe and model existing proteins, and new graphs are generated to help discover new drugs. Graphs can also be used for complex program analysis and for high-level reasoning in computer vision.

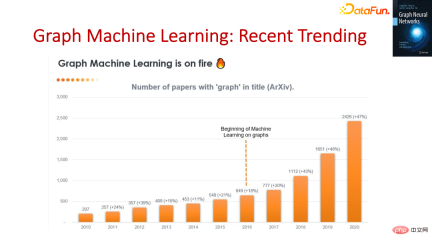

3. Recent trends in graph machine learning

Graph machine learning is not a new topic. The research direction has existed for about 20 years, but it used to be relatively niche. Since 2016, with the appearance of papers on modern graph neural networks, graph machine learning has become a popular research direction. This new generation of methods learns better from the data itself and from the relationships between data points, which yields better data representations and, ultimately, better results on important downstream tasks.

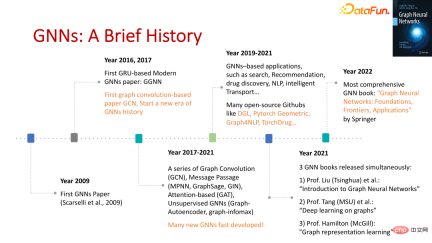

4. A brief history of graph neural networks

The earliest paper on graph neural networks appeared in 2009, before deep learning became popular. Papers on modern graph neural networks appeared in 2016 as improvements on those early models. The subsequent emergence of GCN accelerated the development of the field, and since 2017 a large number of new algorithms have appeared. As the algorithms matured, industry began in 2019 to apply them to practical problems, and many open-source tools were developed to make this more efficient. Since 2021, many books on graph neural networks have been written, including "Basics, Frontiers and Applications of Graph Neural Networks".

The book "Basics, Frontiers and Applications of Graph Neural Networks" systematically introduces the core concepts and techniques of graph neural networks, covers cutting-edge research, and presents applications in different fields. Readers from both academia and industry can benefit from it.

## 2. Foundations of graph neural networks

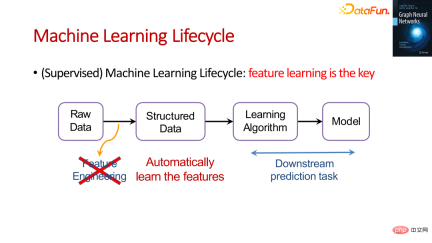

1. The life cycle of machine learning


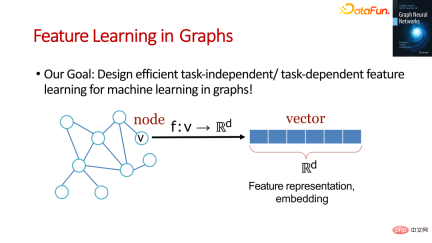

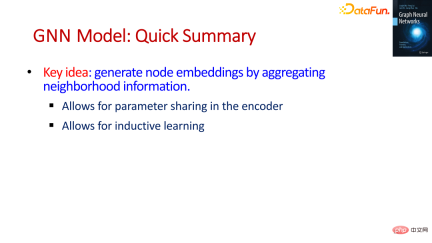

2. Feature learning in the graph

Feature learning in graphs is very similar to deep learning. The goal is to design an effective feature learning method, either task-specific or task-independent, that maps the nodes of the original graph into a high-dimensional space to obtain an embedding representation of each node, which is then used to complete downstream tasks.

3. The basis of graph neural network

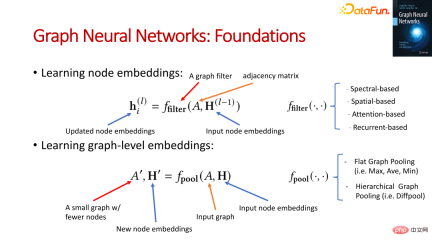

There are two types of representations that need to be learned in graph neural networks:

- Representation of graph nodes

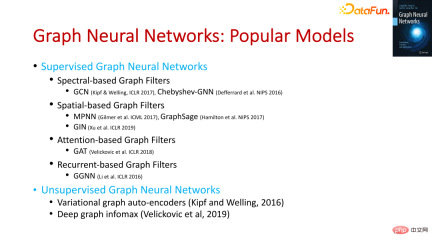

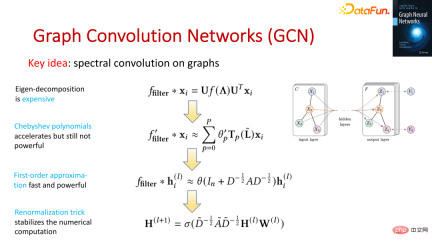

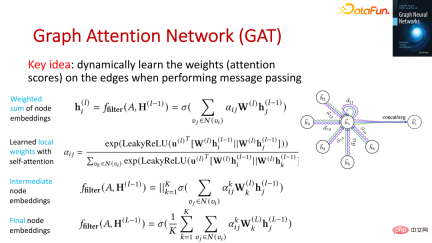

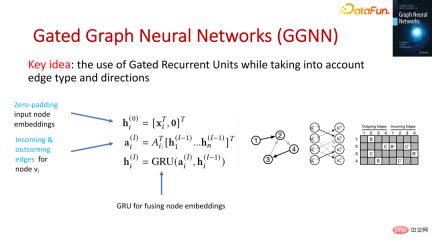

Learning node representations requires a filter operation. It takes the graph's matrix (for example, its adjacency matrix) and the vector representations of the nodes as input, and repeatedly updates the node vectors as learning proceeds. Common filter operations include spectral-based, spatial-based, attention-based, and recurrent-based filters.

- Representation of the graph

Learning a graph-level representation requires a pooling operation. It takes the graph's matrix and the vector representations of the nodes as input, and learns a coarser graph with fewer nodes together with the vector representations of those nodes, until it finally produces a single graph-level vector that represents the entire graph. Common pooling operations include flat graph pooling (such as max, average, and min) and hierarchical graph pooling (such as DiffPool).
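Flat graph pooling is the simplest case to illustrate: it reduces all node vectors to one graph vector in a single step, one feature dimension at a time. The sketch below assumes node vectors are plain Python lists of equal length; the function name `flat_pool` is hypothetical.

```python
def flat_pool(node_vectors, mode="mean"):
    """Collapse a list of equal-length node vectors into one graph-level vector."""
    if not node_vectors:
        raise ValueError("graph has no nodes")
    columns = list(zip(*node_vectors))   # one tuple per feature dimension
    if mode == "max":
        return [max(c) for c in columns]
    if mode == "min":
        return [min(c) for c in columns]
    if mode == "mean":
        return [sum(c) / len(c) for c in columns]
    raise ValueError(f"unknown pooling mode: {mode}")


# Three nodes, each with a 2-dimensional vector.
H = [[1.0, 4.0], [3.0, 0.0], [2.0, 2.0]]
graph_max = flat_pool(H, "max")     # [3.0, 4.0]
graph_min = flat_pool(H, "min")     # [1.0, 0.0]
graph_mean = flat_pool(H, "mean")   # [2.0, 2.0]
```

Hierarchical methods such as DiffPool differ in that they learn how to merge nodes over several stages, which this sketch does not attempt.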

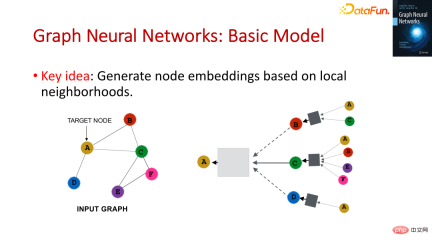

4. Basic model of graph neural network

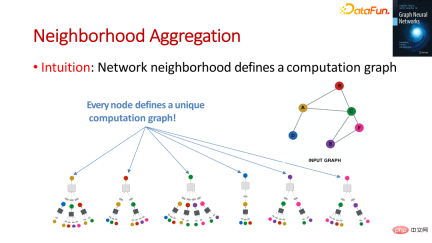

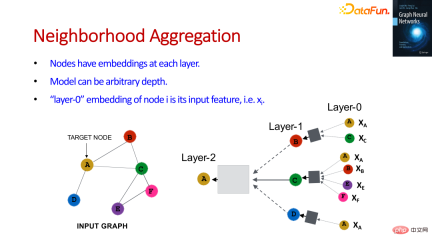

In this way, each node defines its own computation graph.

The computation graph can be organized into layers. The first layer holds the raw input information; information is then passed and aggregated layer by layer to learn the vector representations of all nodes.
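To make the layering concrete, the sketch below unrolls the computation graph of a single target node: at each depth it collects the nodes whose previous-layer vectors are needed. It assumes the graph is a dict from node to neighbor list; `computation_graph` and the toy graph are hypothetical.

```python
def computation_graph(adj, target, num_layers):
    """Return the node sets needed at each depth to embed `target`.

    layers[0] is {target}; layers[d] holds every node whose vector is
    needed d aggregation steps before the final output.
    """
    layers = [{target}]
    for _ in range(num_layers):
        frontier = set()
        for node in layers[-1]:
            frontier.add(node)           # a node reuses its own previous vector
            frontier.update(adj[node])   # and reads its neighbors' previous vectors
        layers.append(frontier)
    return layers


adj = {"A": ["B", "C"], "B": ["A"], "C": ["A", "D"], "D": ["C"]}
layers = computation_graph(adj, "A", num_layers=2)
# layers[1] is A plus its direct neighbors; layers[2] also reaches D through C.
```

The deepest layer corresponds to the raw input features, and the result for the target node is obtained by aggregating back toward `layers[0]`.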

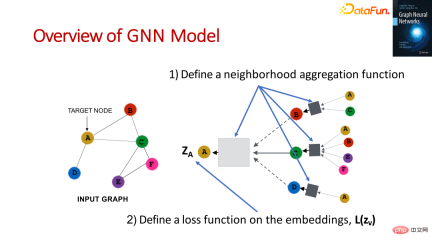

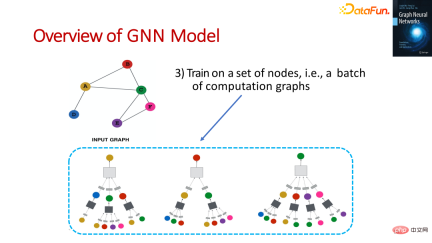

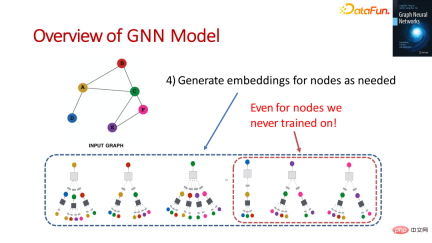

The figure above roughly describes the main steps of training a graph neural network model. There are four main steps:

- Define an aggregation function;

- Define the loss function according to the task;

- Train on a batch of nodes, for example a batch of computation graphs at a time;

- Produce the required vector representation for each node, even for nodes that were never seen during training. (What is learned is the aggregation function, so the vector representation of a new node can be obtained by applying the aggregation function to vector representations that have already been trained.)

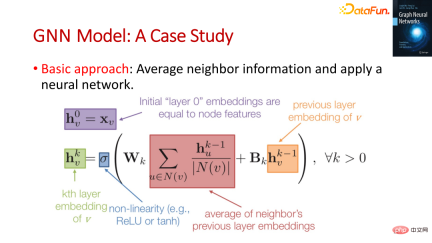

The figure above is an example that uses the average as the aggregation function. The vector representation of node v at layer k depends on the average of the vector representations of its neighbors at the previous layer and on its own vector representation at the previous layer.
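One common way to write this mean-aggregation rule is shown below. The weight matrices $W_k$ and $B_k$, the nonlinearity $\sigma$, the neighbor set $N(v)$ and the input features $x_v$ are notation assumed here for illustration; the text above states the dependence only in words.

$$
h_v^{(0)} = x_v, \qquad
h_v^{(k)} = \sigma\!\left( W_k \cdot \frac{1}{|N(v)|} \sum_{u \in N(v)} h_u^{(k-1)} \;+\; B_k \, h_v^{(k-1)} \right)
$$

The first term is the averaged neighbor information from layer $k-1$ and the second term is the node's own representation from layer $k-1$, matching the two dependencies described above.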

5. Popular models of graph neural networks

GCN is one of the most classic algorithms. It acts directly on the graph and exploits its structural information. As shown in the figure above, GCN has gone through several iterations focused on improving model speed, practicality and stability. The GCN paper was a landmark and laid the foundation for graph neural networks.

The core idea of the message passing neural network (MPNN) is to recast graph convolution as a process of message passing. It defines two functions: an aggregation function and an update function. The algorithm is simple and general, but it is not efficient.
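The two-function structure can be sketched as one round of message passing with pluggable aggregation and update functions. This is an illustrative simplification rather than the published model: the sum aggregation and additive update below are stand-ins for learned functions, and all names are hypothetical.

```python
def message_passing_step(adj, h, aggregate, update):
    """Run one synchronous round of message passing.

    adj: dict node -> list of neighbors
    h: dict node -> vector (list of floats) from the previous round
    aggregate: (list of neighbor vectors, dim) -> one vector
    update: (own vector, aggregated vector) -> new vector
    """
    new_h = {}
    for v, neighbors in adj.items():
        messages = [h[u] for u in neighbors]          # read old vectors only
        new_h[v] = update(h[v], aggregate(messages, len(h[v])))
    return new_h


def sum_aggregate(messages, dim):
    """Element-wise sum; returns zeros for a node without neighbors."""
    total = [0.0] * dim
    for m in messages:
        total = [t + x for t, x in zip(total, m)]
    return total


def add_update(own, aggregated):
    """Stand-in for a learned update: add the aggregate to the node's own vector."""
    return [o + a for o, a in zip(own, aggregated)]


path = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
h0 = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [2.0, 2.0]}
h1 = message_passing_step(path, h0, sum_aggregate, add_update)
```

Swapping `sum_aggregate` for a mean or max, or `add_update` for a small neural network, changes the model without touching the loop, which is the generality the text refers to.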

GraphSAGE is an industrial-grade algorithm. It samples a fixed number of neighbor nodes and uses them to learn the vector representation of a node.
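The sampling step can be sketched as follows. Falling back to sampling with replacement when a node has fewer neighbors than requested is an assumption made here so that every node yields the same number of samples; `sample_neighbors` and the toy graph are hypothetical.

```python
import random


def sample_neighbors(adj, node, num_samples, rng=random):
    """Return exactly `num_samples` neighbors of `node` (empty if it has none)."""
    neighbors = list(adj[node])
    if not neighbors:
        return []
    if len(neighbors) >= num_samples:
        return rng.sample(neighbors, num_samples)                # without replacement
    return [rng.choice(neighbors) for _ in range(num_samples)]   # with replacement


adj = {
    "hub": ["n1", "n2", "n3", "n4", "n5"],
    "leaf": ["hub"],
}
rng = random.Random(0)   # seeded only to make the example repeatable
hub_sample = sample_neighbors(adj, "hub", 3, rng)
leaf_sample = sample_neighbors(adj, "leaf", 3, rng)
```

Because the sample size is fixed, the cost of aggregating for one node no longer depends on its degree, which helps on large graphs with very high-degree nodes.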

The graph attention network (GAT) introduces the idea of attention. Its core idea is to learn edge weights dynamically during message passing.
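A minimal sketch of attention-weighted aggregation: each neighbor gets a score, the scores are normalized with a softmax, and the neighbor vectors are combined using those weights. The plain dot-product score used here is an assumption standing in for a learned scoring function, and all names are hypothetical.

```python
import math


def dot_score(h_i, h_j):
    """Stand-in scoring function; a trained model would learn this instead."""
    return sum(a * b for a, b in zip(h_i, h_j))


def attention_weights(h_target, h_neighbors, score=dot_score):
    """Softmax-normalized weights of the target node's neighbors."""
    raw = [score(h_target, h_u) for h_u in h_neighbors]
    peak = max(raw)                              # subtract max for numerical stability
    exps = [math.exp(r - peak) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]


def attend(h_target, h_neighbors, score=dot_score):
    """Weighted sum of neighbor vectors using the attention weights."""
    alphas = attention_weights(h_target, h_neighbors, score)
    return [sum(a * h_u[i] for a, h_u in zip(alphas, h_neighbors))
            for i in range(len(h_target))]


target = [1.0, 0.0]
neighbors = [[1.0, 0.0], [0.0, 1.0]]
weights = attention_weights(target, neighbors)   # first neighbor scores higher
aggregated = attend(target, neighbors)
```

The weights depend on the current node vectors, so they change as the representations change during training; this is what "dynamic edge weights" means here.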

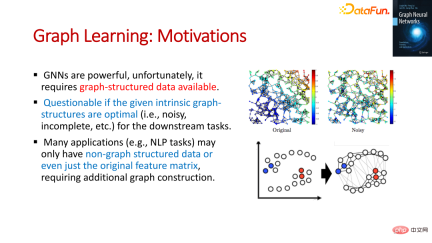

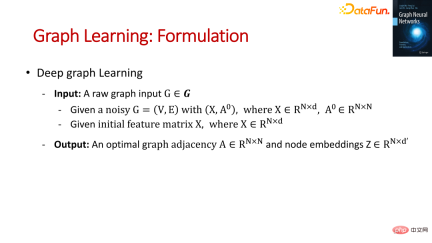

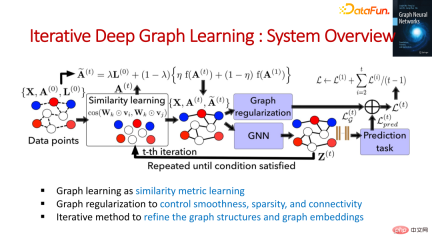

In the book "Basics, Frontiers and Applications of Graph Neural Networks", Chapters 5, 6, 7 and 8 cover, respectively, how to evaluate graph neural networks, the scalability of graph neural networks, the interpretability of graph neural networks, and the adversarial stability of graph neural networks. If you are interested, you can read the corresponding chapters in the book.

## 3. The Frontier of Graph Neural Networks

1. Graph Structure Learning

Graph neural networks require graph-structured data, but it is doubtful whether a given graph structure is optimal. The graph may contain a lot of noise, and many applications have no graph-structured data at all, only raw features. So we need to use the graph neural network to learn the optimal graph structure together with the node representations.
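When only raw features are available, one simple starting point is to connect each node to its most similar nodes. The sketch below builds such a k-nearest-neighbor graph from cosine similarity. It is a fixed heuristic given purely for illustration, not the learning method presented in the book, where the structure is learned jointly with the model; all names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def knn_graph(features, k):
    """Map each node to the k other nodes with the most similar features."""
    graph = {}
    for v, f_v in features.items():
        scored = [(cosine(f_v, f_u), u) for u, f_u in features.items() if u != v]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        graph[v] = [u for _, u in scored[:k]]
    return graph


features = {
    "a": [1.0, 0.0],
    "b": [0.9, 0.1],
    "c": [0.0, 1.0],
    "d": [0.1, 0.9],
}
graph = knn_graph(features, k=1)   # a and b pair up, as do c and d
```

A learned approach would replace the fixed cosine score with a trainable similarity function and refine the graph as training progresses.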

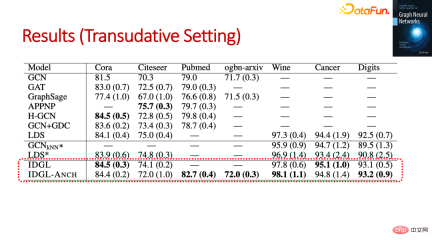

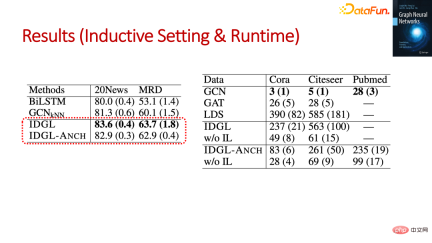

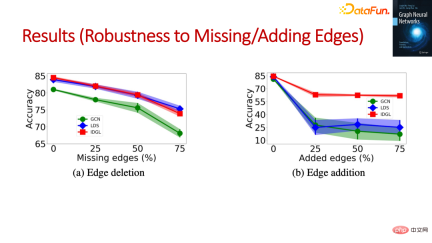

Experimental data show the advantages of this method.

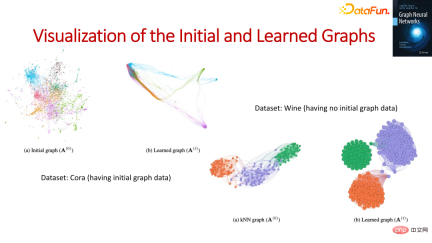

Visualizing the learned graphs shows that they tend to cluster similar objects together, which gives them a degree of interpretability.

2. Other Frontiers

The book "Basics, Frontiers and Applications of Graph Neural Networks" also introduces the following research frontiers, which have important applications in many scenarios:

- Graph classification;

- Link Prediction;

- Graph generation;

- Graph transformation;

- Graph matching;

- Dynamic graph neural network;

- Heterogeneous Graph Neural Network;

- AutoML for Graph Neural Network;

- Self-supervised learning of graph neural networks.

## 4. Applications of graph neural networks

1. Application of graph neural networks in recommendation systems

We can use session information to construct a heterogeneous global graph, learn vector representations of users or items with a graph neural network, and then use these representations for personalized recommendation.
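The graph construction step might look like the sketch below, which assumes each session is a user id plus an ordered list of clicked items. The two edge types (`user-item` and `item-item`), the function name and the sample data are all hypothetical choices for illustration.

```python
from collections import Counter


def build_global_graph(sessions):
    """Merge all sessions into one weighted graph with two edge types.

    sessions: list of (user_id, [item ids in click order])
    Returns a Counter mapping (edge_type, source, target) -> count.
    """
    edges = Counter()
    for user, items in sessions:
        for item in items:
            edges[("user-item", user, item)] += 1    # the user interacted with the item
        for src, dst in zip(items, items[1:]):
            edges[("item-item", src, dst)] += 1      # dst was clicked right after src
    return edges


sessions = [
    ("u1", ["a", "b", "c"]),
    ("u2", ["b", "c"]),
]
edges = build_global_graph(sessions)
```

The edge counts can serve as edge weights, so a transition seen in many sessions, such as `b` followed by `c` here, carries more weight when the graph neural network aggregates information.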

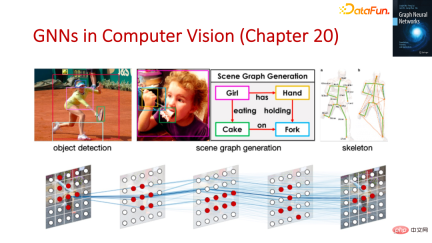

2. Application of graph neural network in computer vision

We can track how objects change over time and use graph neural networks to deepen the understanding of video.

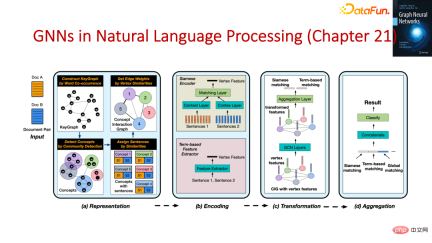

3. Application of graph neural network in natural language processing

We can use graph neural networks to understand high-level information of natural language.

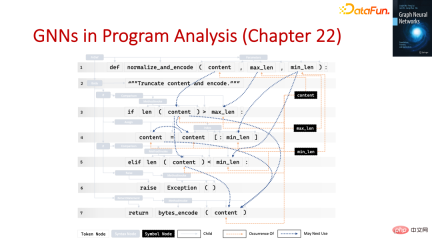

4. Application of graph neural network in program analysis

5. Application of graph neural networks in smart cities

Q1: Is GNN an important method for the next generation of deep learning?

A1: Graph neural networks are a very important branch, and the Transformer is advancing alongside them. Given the flexibility of graph neural networks, the two can be combined to get the best of both.

Q2: Can GNN and causal learning be combined? How?

A2: The key component of causal learning is the causal graph, which combines naturally with GNNs. The difficulty of causal learning is that its data sets are small. We can use the capabilities of GNNs to learn causal graphs better.

Q3: What is the difference and connection between the interpretability of GNN and the interpretability of traditional machine learning?

A3: This is covered in detail in the book "Basics, Frontiers and Applications of Graph Neural Networks".

Q4: How can GNNs be trained and run for inference directly on a graph database, using the capabilities of graph computing?

A4: At present there is no established good practice on a unified graph computing platform. Some startups and research teams are exploring related directions. This will be a very valuable and challenging research direction; for now, a more feasible approach is to study it separately in different fields.

The above is the detailed content of The foundation, frontier and application of GNN. For more information, please follow other related articles on the PHP Chinese website!

GNN的基础、前沿和应用Apr 11, 2023 pm 11:40 PM

GNN的基础、前沿和应用Apr 11, 2023 pm 11:40 PM近年来,图神经网络(GNN)取得了快速、令人难以置信的进展。图神经网络又称为图深度学习、图表征学习(图表示学习)或几何深度学习,是机器学习特别是深度学习领域增长最快的研究课题。本次分享的题目为《GNN的基础、前沿和应用》,主要介绍由吴凌飞、崔鹏、裴健、赵亮几位学者牵头编撰的综合性书籍《图神经网络基础、前沿与应用》中的大致内容。一、图神经网络的介绍1、为什么要研究图?图是一种描述和建模复杂系统的通用语言。图本身并不复杂,它主要由边和结点构成。我们可以用结点表示任何我们想要建模的物体,可以用边表示两

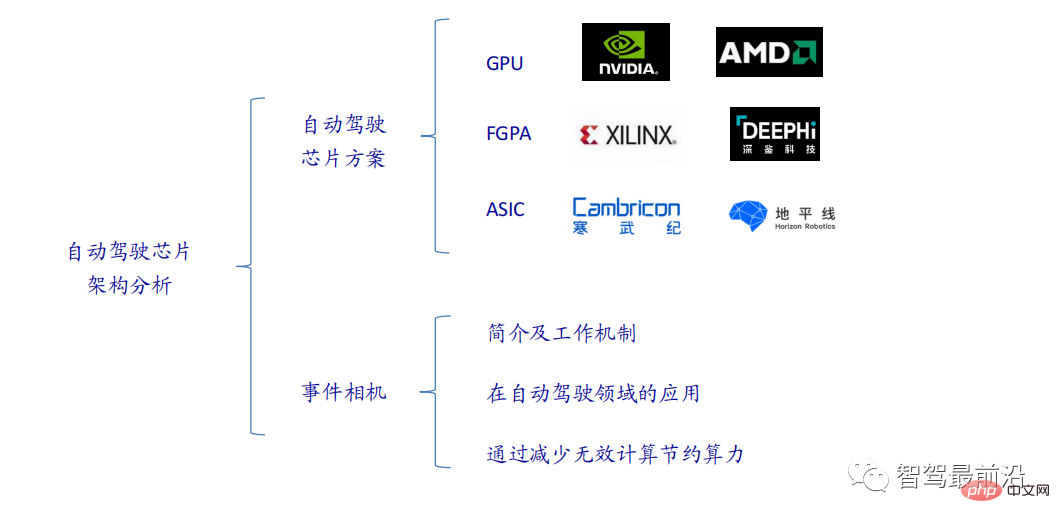

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM当前主流的AI芯片主要分为三类,GPU、FPGA、ASIC。GPU、FPGA均是前期较为成熟的芯片架构,属于通用型芯片。ASIC属于为AI特定场景定制的芯片。行业内已经确认CPU不适用于AI计算,但是在AI应用领域也是必不可少。 GPU方案GPU与CPU的架构对比CPU遵循的是冯·诺依曼架构,其核心是存储程序/数据、串行顺序执行。因此CPU的架构中需要大量的空间去放置存储单元(Cache)和控制单元(Control),相比之下计算单元(ALU)只占据了很小的一部分,所以CPU在进行大规模并行计算

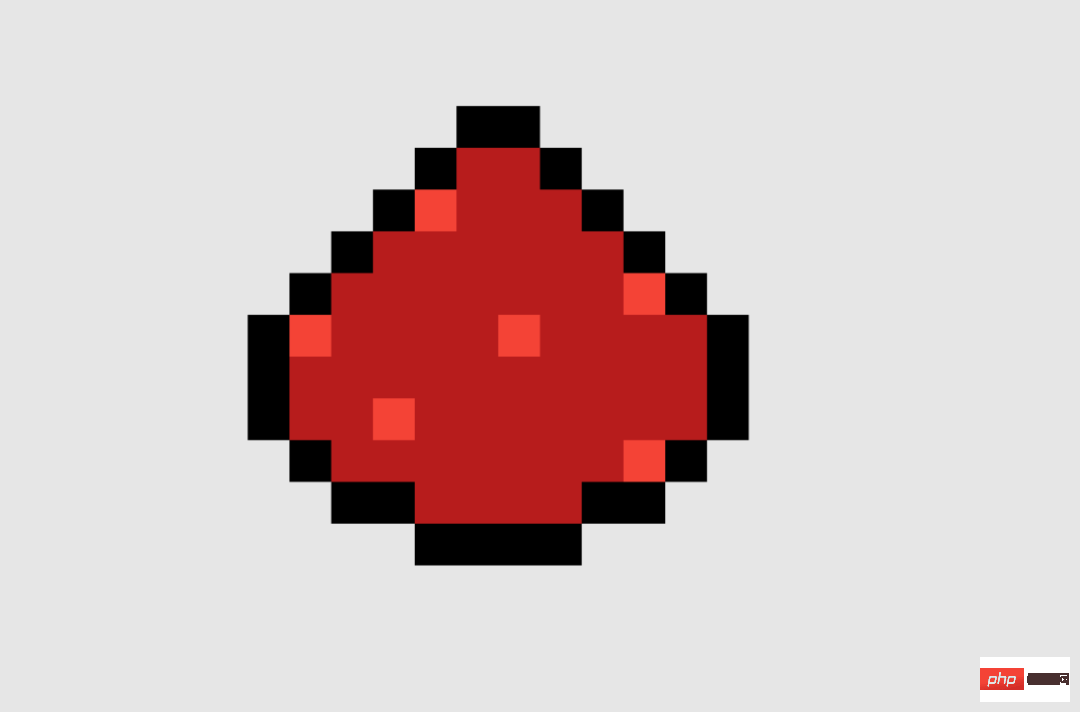

"B站UP主成功打造全球首个基于红石的神经网络在社交媒体引起轰动,得到Yann LeCun的点赞赞赏"May 07, 2023 pm 10:58 PM

"B站UP主成功打造全球首个基于红石的神经网络在社交媒体引起轰动,得到Yann LeCun的点赞赞赏"May 07, 2023 pm 10:58 PM在我的世界(Minecraft)中,红石是一种非常重要的物品。它是游戏中的一种独特材料,开关、红石火把和红石块等能对导线或物体提供类似电流的能量。红石电路可以为你建造用于控制或激活其他机械的结构,其本身既可以被设计为用于响应玩家的手动激活,也可以反复输出信号或者响应非玩家引发的变化,如生物移动、物品掉落、植物生长、日夜更替等等。因此,在我的世界中,红石能够控制的机械类别极其多,小到简单机械如自动门、光开关和频闪电源,大到占地巨大的电梯、自动农场、小游戏平台甚至游戏内建的计算机。近日,B站UP主@

扛住强风的无人机?加州理工用12分钟飞行数据教会无人机御风飞行Apr 09, 2023 pm 11:51 PM

扛住强风的无人机?加州理工用12分钟飞行数据教会无人机御风飞行Apr 09, 2023 pm 11:51 PM当风大到可以把伞吹坏的程度,无人机却稳稳当当,就像这样:御风飞行是空中飞行的一部分,从大的层面来讲,当飞行员驾驶飞机着陆时,风速可能会给他们带来挑战;从小的层面来讲,阵风也会影响无人机的飞行。目前来看,无人机要么在受控条件下飞行,无风;要么由人类使用遥控器操作。无人机被研究者控制在开阔的天空中编队飞行,但这些飞行通常是在理想的条件和环境下进行的。然而,要想让无人机自主执行必要但日常的任务,例如运送包裹,无人机必须能够实时适应风况。为了让无人机在风中飞行时具有更好的机动性,来自加州理工学院的一组工

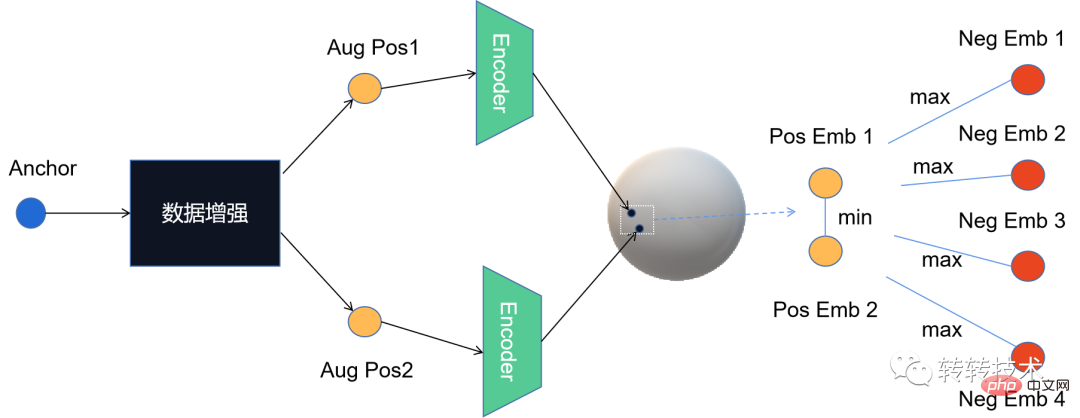

对比学习算法在转转的实践Apr 11, 2023 pm 09:25 PM

对比学习算法在转转的实践Apr 11, 2023 pm 09:25 PM1 什么是对比学习1.1 对比学习的定义1.2 对比学习的原理1.3 经典对比学习算法系列2 对比学习的应用3 对比学习在转转的实践3.1 CL在推荐召回的实践3.2 CL在转转的未来规划1 什么是对比学习1.1 对比学习的定义对比学习(Contrastive Learning, CL)是近年来 AI 领域的热门研究方向,吸引了众多研究学者的关注,其所属的自监督学习方式,更是在 ICLR 2020 被 Bengio 和 LeCun 等大佬点名称为 AI 的未来,后陆续登陆 NIPS, ACL,

Michael Bronstein从代数拓扑学取经,提出了一种新的图神经网络计算结构!Apr 09, 2023 pm 10:11 PM

Michael Bronstein从代数拓扑学取经,提出了一种新的图神经网络计算结构!Apr 09, 2023 pm 10:11 PM本文由Cristian Bodnar 和Fabrizio Frasca 合著,以 C. Bodnar 、F. Frasca 等人发表于2021 ICML《Weisfeiler and Lehman Go Topological: 信息传递简单网络》和2021 NeurIPS 《Weisfeiler and Lehman Go Cellular: CW 网络》论文为参考。本文仅是通过微分几何学和代数拓扑学的视角讨论图神经网络系列的部分内容。从计算机网络到大型强子对撞机中的粒子相互作用,图可以用来模

用AI寻找大屠杀后失散的亲人!谷歌工程师研发人脸识别程序,可识别超70万张二战时期老照片Apr 08, 2023 pm 04:21 PM

用AI寻找大屠杀后失散的亲人!谷歌工程师研发人脸识别程序,可识别超70万张二战时期老照片Apr 08, 2023 pm 04:21 PMAI面部识别领域又开辟新业务了?这次,是鉴别二战时期老照片里的人脸图像。近日,来自谷歌的一名软件工程师Daniel Patt 研发了一项名为N2N(Numbers to Names)的 AI人脸识别技术,它可识别二战前欧洲和大屠杀时期的照片,并将他们与现代的人们联系起来。用AI寻找失散多年的亲人2016年,帕特在参观华沙波兰裔犹太人纪念馆时,萌生了一个想法。这一张张陌生的脸庞,会不会与自己存在血缘的联系?他的祖父母/外祖父母中有三位是来自波兰的大屠杀幸存者,他想帮助祖母找到被纳粹杀害的家人的照

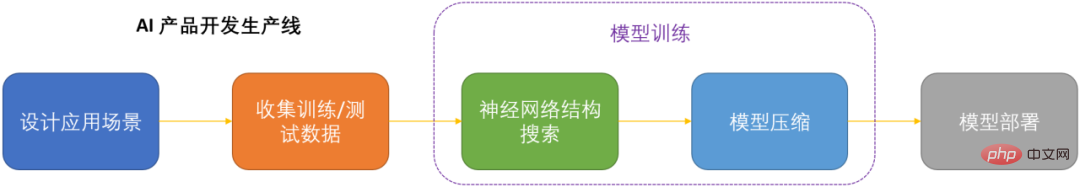

微软提出自动化神经网络训练剪枝框架OTO,一站式获得高性能轻量化模型Apr 04, 2023 pm 12:50 PM

微软提出自动化神经网络训练剪枝框架OTO,一站式获得高性能轻量化模型Apr 04, 2023 pm 12:50 PMOTO 是业内首个自动化、一站式、用户友好且通用的神经网络训练与结构压缩框架。 在人工智能时代,如何部署和维护神经网络是产品化的关键问题考虑到节省运算成本,同时尽可能小地损失模型性能,压缩神经网络成为了 DNN 产品化的关键之一。DNN 压缩通常来说有三种方式,剪枝,知识蒸馏和量化。剪枝旨在识别并去除冗余结构,给 DNN 瘦身的同时尽可能地保持模型性能,是最为通用且有效的压缩方法。三种方法通常来讲可以相辅相成,共同作用来达到最佳的压缩效果。然而现存的剪枝方法大都只针对特定模型,特定任务,且需要很

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Mac version

God-level code editing software (SublimeText3)