Diffusion pre-training costs cut by 6.5 times and fine-tuning hardware costs cut by 7 times! Colossal-AI's complete open-source solution accelerates low-cost adoption of AIGC in industry

How to train and fine-tune AIGC models better, faster, and more cheaply has become the biggest pain point in the commercialization and rapid adoption of AIGC.

Building on its expertise in democratizing large models, Colossal-AI has open-sourced a complete Stable Diffusion pre-training and personalized fine-tuning solution. It speeds up pre-training and cuts its cost by 6.5 times, and it reduces the hardware cost of personalized fine-tuning by 7 times. Fine-tuning can be completed quickly on a personal computer with an RTX 2070/3050, putting AIGC models such as Stable Diffusion within reach.

Open source address:

https://github.com/hpcaitech/ColossalAI

The hot AIGC track and its high costs

AIGC (AI-Generated Content) is one of the hottest topics in AI today. With the emergence of cross-modal text-to-image applications such as Stable Diffusion, Midjourney, NovelAI, and DALL-E, AIGC has broken into the mainstream and attracted widespread attention.

Stable Diffusion generates images

Because AIGC has stimulated a great deal of industry demand, it is regarded as one of the important directions for the next wave of AI. New technological revolutions and killer applications based on AIGC are widely expected in text, audio, images, video, games, the metaverse, and other scenarios. Successful commercialization in these scenarios and a potential multi-trillion-dollar market have made related startups the darlings of investors. For example, Stability AI and Jasper each raised hundreds of millions of dollars within one or two years of being founded and have joined the ranks of unicorns.

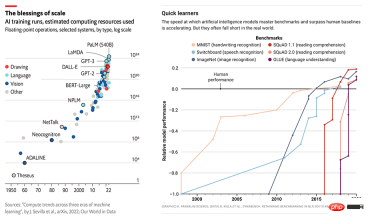

AI model scale and performance are growing simultaneously

However, high hardware requirements and training costs still seriously hinder the rapid development of the AIGC industry. Outstanding AIGC applications are often built on large models such as GPT-3 or Stable Diffusion and fine-tuned for specific downstream tasks. Take the popular Stable Diffusion as an example. Although Stability AI, the company behind it, was founded only recently, it maintains a cluster of more than 4,000 NVIDIA A100 GPUs and has spent more than 50 million US dollars on operating costs. Training the Stable Diffusion v1 model alone requires 150,000 A100 GPU hours.

Diffusion model

The idea of the diffusion model was first proposed in the 2015 paper Deep Unsupervised Learning using Nonequilibrium Thermodynamics, and the 2020 paper Denoising Diffusion Probabilistic Models (DDPM) took it to new heights. Since then, diffusion-based models such as DALL-E 2, Imagen, and Stable Diffusion have far outperformed traditional generative models such as generative adversarial networks (GANs), variational autoencoders (VAEs), and autoregressive (AR) models on generation tasks.

A diffusion model consists of two processes: forward diffusion and reverse generation. The forward diffusion process gradually adds Gaussian noise to an image until it becomes random noise. The reverse generation process is a denoising process: starting from random noise, a U-Net removes noise step by step over many iterations until an image is generated. This denoising process is the part of the diffusion model that is trained.
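The forward process described above has a closed form in DDPM, so a noisy sample at any step can be drawn directly from the clean image. The following is a minimal sketch using only the Python standard library; the linear schedule values, the function names, and the 8-element toy "image" are illustrative assumptions, not code from Colossal-AI or Stable Diffusion.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (the default schedule in the DDPM paper)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) in closed form. Returns (x_t, noise)."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return xt, noise

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)

x0 = [1.0] * 8                                  # toy "image" with 8 pixels
x_early, _ = forward_diffuse(x0, 10, alpha_bars, rng)    # still close to x0
x_late, _ = forward_diffuse(x0, 999, alpha_bars, rng)    # almost pure noise
```

During training, the denoising network is given `x_t` and `t` and is typically asked to predict the returned `noise`; the reverse generation process then applies that prediction step by step.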

Latent Diffusion model

Compared with traditional end-to-end deep learning models, training a diffusion model is considerably more complex. Taking Stable Diffusion as an example, in addition to the diffusion model itself, there is a frozen CLIP text encoder that takes the text prompts and an autoencoder that compresses high-resolution images into latent space, with the loss computed at each time step. This places greater demands on the GPU memory overhead and computing speed of a training solution.
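To give a sense of how much the autoencoder compresses its input, the sketch below computes the latent tensor shape for one image. The downsampling factor of 8 and the 4 latent channels are the values used by Stable Diffusion v1 and are assumptions relative to this article, which does not state them; the helper names are hypothetical.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (C, H, W) of the latent for an image of the given size."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)

def num_elements(shape):
    """Total number of values in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count

pixel_shape = (3, 512, 512)              # RGB image
latent = latent_shape(512, 512)          # (4, 64, 64)
compression = num_elements(pixel_shape) / num_elements(latent)
print(latent, compression)
```

Under these assumptions the U-Net operates on 48 times fewer values per image than it would in pixel space, although the text encoder and autoencoder still add to the memory footprint during training.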

Lower cost - pre-training acceleration and low-resource fine-tuning

Pre-training optimization

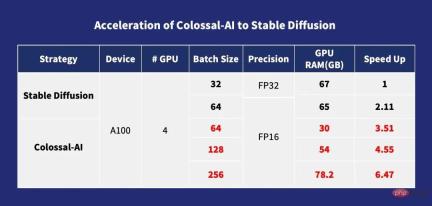

For pre-training, a larger batch size generally means faster training, and diffusion models are no exception. Colossal-AI uses ZeRO, Gemini, and a chunk-based memory management strategy, and optimizes the cross-attention computation with the Flash Attention module. Together these greatly reduce the memory overhead of diffusion model training, allowing users to train diffusion models on consumer-grade graphics cards with 10 GB of memory (such as the RTX 3080), while on dedicated graphics cards like the A100 a single card can directly support training with a batch size of up to 256. Compared with the FP32 DistributedDataParallel (DDP) training of stable-diffusion-v1-1, training is accelerated by 6.5 times. This means that a training cost of millions of dollars can be reduced by 6.5 times, greatly lowering the training cost and entry barrier of the AIGC industry!

Acceleration of Colossal-AI to Stable Diffusion

Personalized fine-tuning optimization

The LAION-5B dataset used to pre-train Stable Diffusion contains 5.85 billion image-text pairs and requires 240 TB of storage. Combined with the complexity of the model, the cost of full pre-training is clearly extremely high: Stability AI, the team behind Stable Diffusion, spent more than $50 million to deploy 4,000 A100 GPUs. A more practical option for most AIGC players is to take open-source pre-trained model weights and fine-tune them for personalized downstream tasks.

However, other existing open-source fine-tuning solutions mainly use DDP for training parallelism, which leads to very high GPU memory usage during training. Even fine-tuning requires at least an RTX 3090 or 4090, the highest-end consumer-grade graphics cards, to get started. At the same time, many open-source training frameworks currently do not provide complete training configurations and scripts, so users have to spend extra time filling in the gaps and debugging.

Unlike other solutions, Colossal-AI is the first to open-source complete training configuration parameters and training scripts together, so users can train up-to-date specialized models for new downstream tasks at any time, with more flexibility and a wider range of applications. And because Colossal-AI introduces GPU memory optimizations and other techniques, the fine-tuning task can be completed quickly on a single consumer-grade graphics card in an ordinary personal computer (such as a GeForce RTX 2070/3050 8GB). Compared with an RTX 3090 or 4090, this saves about 7 times the hardware cost, which greatly lowers the threshold and cost of using AIGC models such as Stable Diffusion. Users are no longer limited to running inference with existing weights and can build personalized, customized services quickly and easily. For tasks that are not speed-sensitive, Colossal-AI's NVMe support can go further and use low-cost disk space to reduce GPU memory consumption.

Memory Reduction of Colossal-AI to Stable Diffusion

The optimization techniques behind the solution

ZeRO + Gemini

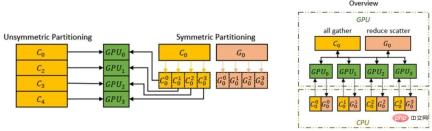

Colossal-AI supports the Zero Redundancy Optimizer (ZeRO) method to eliminate memory redundancy. Compared with classic data parallelism, it greatly improves memory efficiency without sacrificing computational granularity or communication efficiency.

Colossal-AI introduces a chunk mechanism to further improve the performance of ZeRO. A group of parameters that are consecutive in execution order is stored in one chunk (a chunk is a contiguous section of memory), and all chunks are the same size. Organizing memory in chunks makes efficient use of network bandwidth over PCI-e and between GPUs, reduces the number of communication operations, and avoids potential memory fragmentation.
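The memory redundancy that ZeRO removes can be estimated with simple arithmetic. The sketch below follows the accounting in the ZeRO paper for mixed-precision Adam training (2 bytes of FP16 parameters, 2 bytes of FP16 gradients, and 12 bytes of FP32 optimizer state per parameter). The one-billion-parameter model and the 8 GPUs are hypothetical round numbers, and the function is an illustration rather than a Colossal-AI API.

```python
def model_state_bytes_per_gpu(num_params, num_gpus, zero_stage):
    """Per-GPU bytes for parameters, gradients, and Adam states.

    zero_stage 0 is plain data parallelism (everything replicated);
    stage 1 shards optimizer states, stage 2 also shards gradients,
    and stage 3 also shards the parameters themselves.
    """
    params = 2 * num_params        # FP16 parameters
    grads = 2 * num_params         # FP16 gradients
    optim = 12 * num_params        # FP32 master weights, momentum, variance
    if zero_stage >= 1:
        optim /= num_gpus
    if zero_stage >= 2:
        grads /= num_gpus
    if zero_stage >= 3:
        params /= num_gpus
    return params + grads + optim

NUM_PARAMS = 1_000_000_000         # hypothetical model size
NUM_GPUS = 8                       # hypothetical cluster size

for stage in range(4):
    gb = model_state_bytes_per_gpu(NUM_PARAMS, NUM_GPUS, stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.2f} GB of model states per GPU")
```

This estimate covers model states only. Activations, temporary buffers, and fragmentation come on top of it, which is where the other techniques in this section help.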
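The idea of packing consecutive parameters into equal-sized chunks can be sketched in a few lines. This is a simplified illustration of the concept, not Colossal-AI's implementation: the `Chunk` class, the `pack_into_chunks` helper, the parameter names, and the sizes are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    """A fixed-capacity contiguous buffer holding several parameters."""
    capacity: int
    used: int = 0
    params: list = field(default_factory=list)   # (name, offset, size) entries

    def try_add(self, name, size):
        """Place a parameter at the next free offset if it fits."""
        if self.used + size > self.capacity:
            return False
        self.params.append((name, self.used, size))
        self.used += size
        return True

def pack_into_chunks(param_sizes, chunk_size):
    """Pack (name, numel) pairs, given in execution order, into equal-sized chunks."""
    chunks = [Chunk(chunk_size)]
    for name, size in param_sizes:
        if size > chunk_size:
            raise ValueError(f"{name} does not fit in a single chunk")
        if not chunks[-1].try_add(name, size):
            chunks.append(Chunk(chunk_size))     # start a new chunk
            chunks[-1].try_add(name, size)
    return chunks

# Hypothetical parameters listed in the order the model uses them.
params_in_order = [
    ("conv_in.weight", 300), ("conv_in.bias", 20), ("attn.q", 400),
    ("attn.k", 400), ("attn.v", 400), ("out.weight", 100),
]
chunks = pack_into_chunks(params_in_order, chunk_size=1024)
for index, chunk in enumerate(chunks):
    print(index, chunk.used, [name for name, _, _ in chunk.params])
```

In this toy example six tensors end up in two chunks, so moving them between devices takes two large transfers instead of six small ones, and every buffer has the same size, which is what keeps fragmentation down.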

Chunk Mechanism

In addition, Gemini, Colossal-AI's heterogeneous memory manager, supports offloading optimizer states from the GPU to the CPU to save GPU memory. GPU memory and CPU memory (CPU DRAM or NVMe SSD storage) can be used together to break through the memory wall of a single GPU and further expand the scale of trainable models.

Improve the model capacity of hardware through ZeRO Gemini

Flash Attention

LDM (Latent Diffusion Models) achieves multi-modal training by introducing cross-attention layers into the model architecture, which lets the diffusion model support class-conditional, text-to-image, and layout-to-image generation more flexibly. However, the cross-attention layers add computational overhead compared with the CNN layers of the original diffusion model, which greatly increases the training cost.

By introducing Flash Attention, Colossal-AI increased the speed of attention by 104% and reduced the peak GPU memory of end-to-end training by 23%. Flash Attention is an accelerated attention implementation for long sequences. It uses tiling to reduce the number of memory reads and writes between GPU high-bandwidth memory (HBM) and on-chip SRAM. Flash Attention also provides an approximate attention algorithm based on block-sparse attention, which is faster than any existing approximate attention method.
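The key trick behind processing attention in blocks (tiling) is a running softmax: keys and values are visited one block at a time, and earlier partial results are rescaled whenever a larger score appears, so the full score matrix never has to be stored. The pure-Python sketch below illustrates this for a single query vector. It shows the arithmetic only; it is not the fused GPU kernel, and the function names are hypothetical.

```python
import math

def naive_attention(query, keys, values):
    """Reference: softmax(q . k / sqrt(d)) weighted sum over all values at once."""
    scale = math.sqrt(len(query))
    scores = [sum(a * b for a, b in zip(query, k)) / scale for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]

def tiled_attention(query, keys, values, block_size):
    """Same result, visiting keys and values one block at a time."""
    scale = math.sqrt(len(query))
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(query, k)) / scale for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new maximum.
        correction = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * correction + sum(weights)
        acc = [a * correction + sum(w * v[j] for w, v in zip(weights, v_block))
               for j, a in enumerate(acc)]
        running_max = new_max
    return [a / running_sum for a in acc]

# Tiny example: one 2-dimensional query attending over three keys.
example = tiled_attention([1.0, 0.0],
                          [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                          [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
                          block_size=2)
```

Because the block-wise version only ever holds one block of scores, its working memory is independent of the sequence length, which is what lets a real kernel keep the working set in fast on-chip memory.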

Other optimizations

Colossal-AI also integrates common optimization techniques such as FP16 and activation checkpointing. Activation checkpointing works by trading computation for memory. Instead of storing every intermediate activation of the computation graph for the backward pass, it does not save the intermediate activations inside the checkpointed part and recomputes them during the backward pass, further reducing GPU memory usage. FP16, on the other hand, converts the original 32-bit floating-point operations to 16-bit without noticeably affecting accuracy, reducing GPU memory usage and improving computing efficiency.
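The trade of computation for memory can be seen in a toy example. The sketch below differentiates a chain of scalar `tanh` layers twice: once keeping every layer input, and once keeping only one input per segment and recomputing the rest during the backward pass. It is a conceptual illustration in plain Python with hypothetical function names, not the mechanism used inside PyTorch or Colossal-AI.

```python
import math

def layer_forward(weight, x):
    return math.tanh(weight * x)

def layer_grad(weight, x):
    """d/dx tanh(weight * x); note that it needs the layer's input x."""
    return weight * (1.0 - math.tanh(weight * x) ** 2)

def grad_store_all(weights, x):
    """Baseline: keep every layer input for the backward pass."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = layer_forward(w, x)
    grad = 1.0
    for w, x_in in zip(reversed(weights), reversed(inputs)):
        grad *= layer_grad(w, x_in)
    return grad, len(inputs)

def grad_checkpointed(weights, x, segment):
    """Keep one input per segment and recompute the others when needed."""
    checkpoints = []
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints.append(x)
        x = layer_forward(w, x)
    grad, peak = 1.0, len(checkpoints)
    for seg in reversed(range(len(checkpoints))):
        seg_weights = weights[seg * segment:(seg + 1) * segment]
        # Recompute this segment's layer inputs from its checkpoint.
        x_in, inputs = checkpoints[seg], []
        for w in seg_weights:
            inputs.append(x_in)
            x_in = layer_forward(w, x_in)
        peak = max(peak, len(checkpoints) + len(inputs))
        for w, x_seg in zip(reversed(seg_weights), reversed(inputs)):
            grad *= layer_grad(w, x_seg)
    return grad, peak

weights = [0.9 + 0.01 * i for i in range(16)]
full_grad, full_stored = grad_store_all(weights, 0.5)
ckpt_grad, ckpt_stored = grad_checkpointed(weights, 0.5, segment=4)
print(full_stored, ckpt_stored)
```

Both versions return the same gradient, but the checkpointed one holds at most 8 values at a time instead of 16, at the price of running each layer's forward computation a second time.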

Get started quickly

Unlike typical open-source PyTorch projects, the popular Stable Diffusion is built on PyTorch Lightning. PyTorch Lightning provides a concise, easy-to-use, flexible, and efficient high-level interface for the popular deep learning framework PyTorch, giving AI researchers a simple high-level abstraction that makes deep learning experiments easier to read and reproduce. It has earned 20.5k stars on GitHub.

At the invitation of PyTorch Lightning, Colossal-AI has been integrated as PyTorch Lightning's official large model solution. Thanks to this combination, AI researchers can now train and use diffusion models more efficiently. Taking the training of a Stable Diffusion model as an example, it can be started with only a small amount of code.

```python
from colossalai.nn.optimizer import HybridAdam
from lightning.pytorch import LightningModule, Trainer


class MyDiffuser(LightningModule):
    ...

    def configure_sharded_model(self) -> None:
        # create your model here
        self.model = construct_diffuser_model(...)

    ...

    def configure_optimizers(self):
        # use the specified optimizer
        optimizer = HybridAdam(self.model.parameters(), self.lr)
        ...
        return optimizer


model = MyDiffuser()
trainer = Trainer(accelerator="gpu", devices=1, precision=16, strategy="colossalai")
trainer.fit(model)
```

Colossal-AI and PyTorch Lightning also provide good support and optimization for popular models and communities such as OPT and HuggingFace.

Low-cost fine-tuning

To meet the needs of users who want to produce a model in their own style through short training runs with few resources, Colossal-AI supports fine-tuning based on the open-source Stable Diffusion model weights from HuggingFace. Users only need to modify the Dataloader to load their own fine-tuning dataset and read the pre-trained weights, adjust the parameter configuration yaml file, and run the training script to fine-tune a personalized model on their own computer.

```yaml
model:
  target: ldm.models.diffusion.ddpm.LatentDiffusion
  params:
    your_sub_module_config:
      target: your.model.import.path
      params:
        from_pretrained: 'your_file_path/unet/diffusion_pytorch_model.bin'
        ...

lightning:
  trainer:
    strategy:
      target: pytorch_lightning.strategies.ColossalAIStrategy
      params:
        ...
```

```shell
python main.py --logdir /your_log_dir -t -b config/train_colossalai.yaml
```

Fast Inference

Colossal-AI also supports the native Stable Diffusion inference pipeline. After training or fine-tuning, you only need to call the diffusers library and load the saved model parameters to run inference directly, with no other changes. This makes it easier for new users to get familiar with the inference workflow and lets users who are used to the original framework get started quickly.

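```python
from diffusers import StableDiffusionPipeline

# Load the checkpoint saved after training or fine-tuning.
pipe = StableDiffusionPipeline.from_pretrained(
    "your_ColoDiffusion_checkpoint_path"
).to("cuda")

# Recent diffusers releases return the images in `.images`;
# very early releases used ["sample"] instead.
image = pipe('your prompt', num_inference_steps=50).images[0]
image.save('file path')
```

Images generated by the inference process above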

One More Thing

The breakthroughs above in AIGC training optimization, with diffusion as the representative example, are built on Colossal-AI, a general-purpose deep learning system for the era of large models. Through efficient multi-dimensional automatic parallelism, heterogeneous memory management, large-scale optimization libraries, adaptive task scheduling, and more, it enables efficient and rapid deployment of large AI model training and inference and reduces the cost of applying large AI models. Since being open-sourced, Colossal-AI has repeatedly ranked first worldwide on the GitHub and Papers With Code trending lists, attracting attention at home and abroad alongside many star open-source projects with tens of thousands of stars. After rigorous review by international experts, Colossal-AI has been selected for official tutorials at top international AI and HPC conferences such as SC, AAAI, and PPoPP.

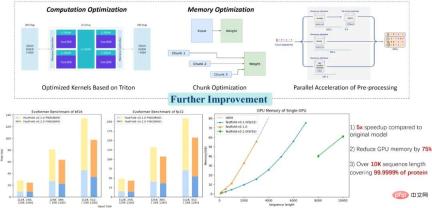

Colossal-AI application: better protein structure prediction solution

Colossal-AI solutions have been deployed by well-known companies in autonomous driving, cloud computing, retail, medicine, chips, and other industries, and have been widely praised. For example, for AlphaFold, the protein structure prediction model used in the biomedical industry, the Colossal-AI-based optimization solution FastFold pushes the maximum amino acid sequence length that a single GPU can infer to 10,000, covering 99.9999% of proteins, and it can handle 90% of proteins using only the consumer-grade graphics card in a laptop. It can also parallelize and accelerate the entire training and inference process, and has helped several drug discovery companies shorten their development process and reduce R&D costs.

Open source address:

https://github.com/hpcaitech/ColossalAI

The above is the detailed content of Diffusion pre-training costs are reduced by 6.5 times, and fine-tuning hardware costs are reduced by 7 times! Colossal-AI complete open source solution accelerates the implementation of AIGC industry at low cost. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

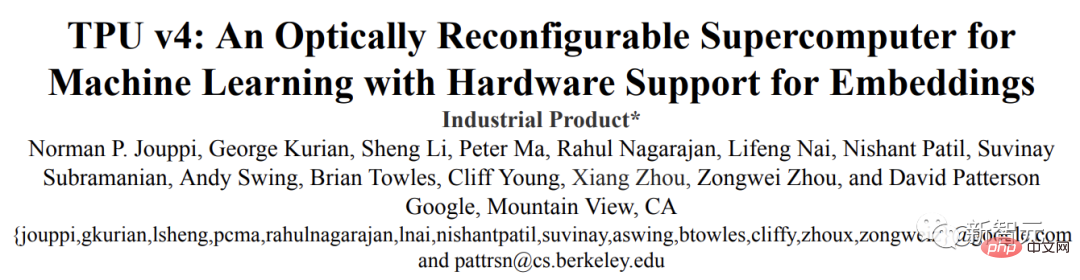

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

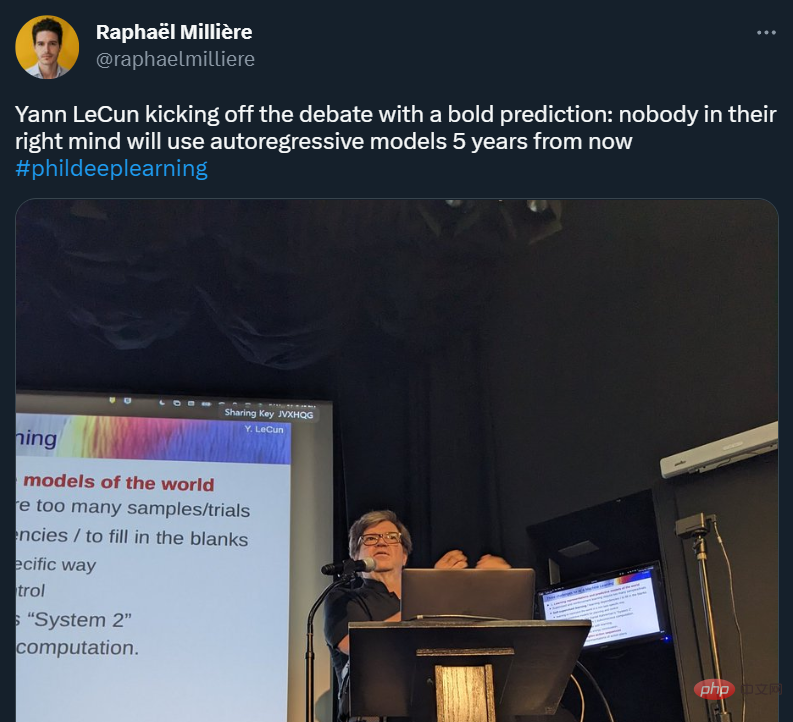

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Atom editor mac version download

The most popular open source editor

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 English version

Recommended: Win version, supports code prompts!