AIGC that follows the human creative process: a model that automatically generates long stories emerges

In today's field of artificial intelligence, AI writing tools are appearing one after another, and both the technology and the products are changing by the day.

If GPT-3, released by OpenAI two years ago, still fell a little short as a writer, the output of the recently released ChatGPT can fairly be described as "elegant prose, a full plot, and self-consistent logic."

Some people joke that once AI starts writing, there will be nothing left for humans to do.

But for humans and AI alike, the higher the required word count, the harder a piece of writing becomes to keep under control.

Recently, Chinese AI research scientist Tian Yuandong and several co-authors released Re^3, a new framework for generating long stories with large language models. The work was accepted at EMNLP 2022.

Paper link: https://arxiv.org/pdf/2210.06774.pdf

Tian Yuandong introduced the work on Zhihu:

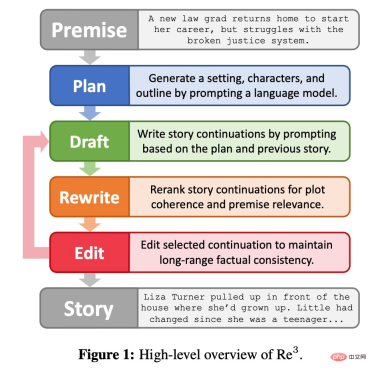

The idea behind Re^3 is extremely simple: through carefully designed prompts, it generates stories with strong consistency, with no need to fine-tune the large model at all. We step outside the linear, word-by-word generation logic of the language model and use a hierarchical generation method. In the Plan stage, we first generate the story's characters, their attributes, and an outline. In the Draft stage, given the outline and characters, we repeatedly generate concrete paragraphs. The Rewrite stage then filters these paragraphs, keeping those highly relevant to the previous paragraph and discarding those that are not (this requires training a small model). Finally, the Edit stage corrects some obvious factual errors.

Method Introduction

Re^3 generates longer stories through recursive reprompting and revision, which more closely matches how human writers work. It breaks the human writing process into four modules: planning, drafting, rewriting, and editing.
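The four-stage control flow described above can be sketched as a small orchestration loop. This is a minimal sketch under stated assumptions, not the authors' code: every stage is an injected callable (`plan_fn`, `draft_fn`, `score_fn`, `edit_fn` are hypothetical names), and it simplifies Re^3 by keeping one passage per outline point. The toy stubs at the bottom stand in for LLM calls and learned scorers.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Plan:
    # Output of the Plan stage (field names are illustrative).
    setting: str
    characters: List[str]
    outline: List[str]


@dataclass
class Re3Pipeline:
    # Each stage is an injected callable; none of these names come from the paper.
    plan_fn: Callable[[str], Plan]                   # premise -> plan
    draft_fn: Callable[[Plan, str, str], List[str]]  # (plan, outline point, story so far) -> candidates
    score_fn: Callable[[str, str, str], float]       # (candidate, outline point, story so far) -> score
    edit_fn: Callable[[str, str], str]               # (chosen passage, story so far) -> edited passage

    def run(self, premise: str) -> str:
        plan = self.plan_fn(premise)
        story: List[str] = []
        for point in plan.outline:
            so_far = "\n".join(story)
            # Draft: propose several continuations for the current outline point.
            candidates = self.draft_fn(plan, point, so_far)
            # Rewrite: keep the highest-scoring candidate.
            best = max(candidates, key=lambda c: self.score_fn(c, point, so_far))
            # Edit: apply local fixes before appending to the story.
            story.append(self.edit_fn(best, so_far))
        return "\n".join(story)


# Toy stubs standing in for LLM calls and learned scorers.
pipeline = Re3Pipeline(
    plan_fn=lambda premise: Plan(setting=premise, characters=["Ann"],
                                 outline=["Ann finds a map.", "Ann follows the map."]),
    draft_fn=lambda plan, point, so_far: [point + " Nothing else happens.", point],
    score_fn=lambda cand, point, so_far: -len(cand),  # toy rule: prefer shorter drafts
    edit_fn=lambda passage, so_far: passage,          # toy rule: no edits
)
story = pipeline.run("A girl finds a treasure map.")
print(story)
```

Injecting the stages as callables keeps the loop independent of any particular model API, so each stub can later be swapped for a real prompt call or a trained reranker.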

Plan module

As shown in Figure 2, the Plan module expands the story premise into a setting, characters, and an outline. First, the setting is a simple one-sentence expansion of the premise, obtained with GPT3-Instruct-175B (Ouyang et al., 2022). Next, GPT3-Instruct-175B generates character names and then character descriptions conditioned on the premise and setting. Finally, the method prompts GPT3-Instruct-175B to write the story outline. Every component of the plan is itself produced by prompting, and the plan is reused again and again in later stages.
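The chained prompting in the Plan module can be illustrated with a short sketch. It assumes a hypothetical `complete(prompt) -> str` function in place of a GPT3-Instruct-175B call; the prompt wording is invented for illustration and is not the paper's, and the sketch folds character names and descriptions into a single call. The point it shows is that each step conditions on everything generated before it.

```python
from typing import Callable, Dict


def plan_story(premise: str, complete: Callable[[str], str]) -> Dict[str, str]:
    """Chain three prompts: premise -> setting -> characters -> outline.

    `complete` is a hypothetical stand-in for a call to an instruction-tuned
    LLM; the prompt wording is illustrative, not the paper's exact prompts.
    """
    plan = {"premise": premise}
    # Step 1: expand the premise into a one-sentence setting.
    plan["setting"] = complete(
        f"Premise: {premise}\nDescribe the setting of this story in one sentence."
    )
    # Step 2: generate characters conditioned on premise and setting.
    plan["characters"] = complete(
        f"Premise: {premise}\nSetting: {plan['setting']}\n"
        "List the main characters, each with a short description."
    )
    # Step 3: write the outline conditioned on everything generated so far.
    plan["outline"] = complete(
        f"Premise: {premise}\nSetting: {plan['setting']}\n"
        f"Characters: {plan['characters']}\nWrite a numbered outline of the story."
    )
    return plan


# A fake model that records each prompt and returns a numbered placeholder.
prompts = []


def fake_complete(prompt: str) -> str:
    prompts.append(prompt)
    return f"<output {len(prompts)}>"


plan = plan_story("A lighthouse keeper finds a message in a bottle.", fake_complete)
print(plan["outline"])             # <output 3>
print("<output 2>" in prompts[2])  # True
```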

Draft module

For each point of the outline produced by the Plan module, the Draft module generates several story passages. Each passage is a fixed-length continuation generated from a structured prompt assembled by recursive reprompting. The Draft module is shown in Figure 3 below.

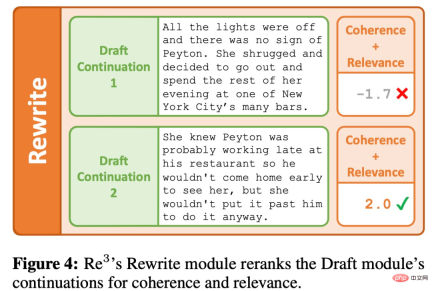
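A rough idea of how a structured prompt might be rebuilt before each continuation is sketched below. The section layout, the function name `build_draft_prompt`, and the use of words instead of tokens are all assumptions for illustration; the paper's actual prompt contains its own selection of plan and story information. Recursive reprompting here simply means calling the function again after each new passage is appended to the story.

```python
from typing import List


def build_draft_prompt(premise: str, setting: str, characters: List[str],
                       outline_point: str, story_so_far: str,
                       max_context_words: int = 200) -> str:
    """Assemble a structured prompt for the next fixed-length continuation.

    Words stand in for tokens; the section layout is an assumption.
    """
    # Keep only the most recent part of the story so the prompt stays bounded.
    words = story_so_far.split()
    recent = " ".join(words[-max_context_words:] if max_context_words > 0 else [])
    sections = [
        f"Premise: {premise}",
        f"Setting: {setting}",
        "Characters:\n" + "\n".join(f"- {c}" for c in characters),
        f"Current outline point: {outline_point}",
        f"Story so far (most recent passage):\n{recent}",
        "Continue the story:",
    ]
    return "\n\n".join(sections)


story = "Mara climbed the tower stairs. The lamp had gone dark."
prompt = build_draft_prompt(
    premise="A lighthouse keeper finds a message in a bottle.",
    setting="A remote island in 1890.",
    characters=["Mara, the keeper"],
    outline_point="Mara discovers the bottle.",
    story_so_far=story,
    max_context_words=5,
)
print(prompt)
```

Rebuilding the prompt from the plan on every call, rather than feeding the whole story back in, is what keeps the outline and characters in view no matter how long the story grows.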

Rewrite module

A generator's first output is often of low quality, much like a person's first draft; turning it into a good second draft may require rewriting based on feedback.

The Rewrite module simulates this rewriting process by reranking the Draft module's outputs according to their coherence with the preceding passages and their relevance to the current outline point, as shown in Figure 4.
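The reranking idea can be shown with a minimal sketch. Re^3 uses trained scoring models; here, as a clearly swapped-in stand-in, both coherence and relevance are approximated by word-overlap (Jaccard) similarity, and the equal weights `w_coherence` and `w_relevance` are assumptions. Only the structure (score each draft on two criteria, keep the best) reflects the text above.

```python
import re
from typing import List, Set


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def overlap(a: str, b: str) -> float:
    """Jaccard similarity of word sets: a crude stand-in for a learned scorer."""
    wa, wb = _words(a), _words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def rerank(candidates: List[str], previous: str, outline_point: str,
           w_coherence: float = 0.5, w_relevance: float = 0.5) -> List[str]:
    """Order drafts by weighted coherence with the previous passage plus
    relevance to the current outline point (best first)."""
    def score(candidate: str) -> float:
        return (w_coherence * overlap(candidate, previous)
                + w_relevance * overlap(candidate, outline_point))
    return sorted(candidates, key=score, reverse=True)


previous = "Mara lit the lamp at the top of the lighthouse."
outline_point = "Mara finds a bottle on the beach."
drafts = [
    "A dragon attacked the distant city.",
    "Next morning Mara walked the beach and found a bottle near the lighthouse.",
]
print(rerank(drafts, previous, outline_point)[0])
```

The off-topic dragon draft shares almost no words with either the previous passage or the outline point, so it sinks to the bottom; a learned scorer would make the same kind of judgment with far more nuance.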

Edit module

Unlike substantive rewriting, the Edit module makes local edits to the passages produced by the planning, drafting, and rewriting modules to further refine the generated content. Specifically, the goal is to eliminate long-range factual inconsistencies. When a person notices a small factual discontinuity while proofreading, they may simply fix the problematic detail rather than make major revisions or substantively rewrite the high-level plan. The Edit module mimics this human process in two steps, detecting factual inconsistencies and then correcting them, as shown in Figure 5.
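The two-step detect-then-correct idea can be sketched with a per-character attribute store. This is only an illustration under assumptions: in the real system the attributes of a new passage would be extracted and the correction made by a model, whereas here the extracted facts are supplied by hand and the correction is a naive string replacement. The names `detect_conflicts` and `correct` are hypothetical.

```python
from typing import Dict, List, Tuple

Facts = Dict[str, Dict[str, str]]     # character -> attribute -> value
Conflict = Tuple[str, str, str, str]  # (character, attribute, known, new)


def detect_conflicts(known: Facts, extracted: Facts) -> List[Conflict]:
    """Step 1: flag attributes in a new passage that contradict stored facts.

    In practice `extracted` would come from an LLM-based extractor; here it
    is supplied directly.
    """
    conflicts = []
    for character, attrs in extracted.items():
        for attribute, new_value in attrs.items():
            old_value = known.get(character, {}).get(attribute)
            if old_value is not None and old_value.lower() != new_value.lower():
                conflicts.append((character, attribute, old_value, new_value))
    return conflicts


def correct(passage: str, conflicts: List[Conflict]) -> str:
    """Step 2: naive local fix that swaps each contradicting detail back."""
    for _character, _attribute, old_value, new_value in conflicts:
        passage = passage.replace(new_value, old_value)
    return passage


known = {"Mara": {"occupation": "keeper", "hometown": "the island"}}
extracted = {"Mara": {"occupation": "doctor", "eye color": "grey"}}
passage = "Mara, a doctor, walked down to the beach."
conflicts = detect_conflicts(known, extracted)
print(conflicts)                    # [('Mara', 'occupation', 'keeper', 'doctor')]
print(correct(passage, conflicts))  # Mara, a keeper, walked down to the beach.
```

New attributes with no stored value (such as eye color here) are not treated as conflicts. The plain `str.replace` fix is deliberately crude: it would also rewrite unrelated occurrences of the same word, which hints at why correcting details without introducing unwanted changes is hard.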

Evaluation

For evaluation, the researchers set the task of generating a story from a brief initial premise. Because a "story" is hard to define with rules, they imposed no rule-based constraints on acceptable outputs and instead evaluated the outputs with several human-annotated metrics. To obtain initial premises, the researchers prompted GPT3-Instruct-175B to produce 100 different premises.

Baselines

Because previous methods focused on stories much shorter than those Re^3 produces, a direct comparison is difficult. The researchers therefore used the following two baselines based on GPT3-175B:

1. ROLLING generates 256 tokens at a time with GPT3-175B, using the premise and all previously generated story text as the prompt; if the prompt exceeds 768 tokens, it is left-truncated. The "rolling window" therefore has a maximum context length of 1024 tokens (768 + 256), the same maximum context length used in Re^3. After 3072 tokens have been generated, the researchers apply the same story-ending mechanism as Re^3.

2. ROLLING-FT is the same as ROLLING, except that GPT3-175B is first fine-tuned on several hundred passages from WritingPrompts stories that are at least 3000 tokens long.
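The ROLLING baseline's window arithmetic can be checked with a short sketch over token IDs. The constants come from the text above; `generate` is a hypothetical model call, and the fake version below just returns placeholder tokens while recording prompt lengths. The story-ending mechanism is omitted.

```python
from typing import Callable, List

CHUNK = 256           # tokens generated per call
MAX_PROMPT = 768      # longer prompts are left-truncated to this length
TARGET_LENGTH = 3072  # stop once the story reaches this many tokens


def rolling_generate(premise: List[int],
                     generate: Callable[[List[int], int], List[int]]) -> List[int]:
    """ROLLING-style loop over token IDs; `generate` is a hypothetical model
    call that returns `n` new tokens for a prompt."""
    story: List[int] = []
    while len(story) < TARGET_LENGTH:
        prompt = premise + story
        if len(prompt) > MAX_PROMPT:
            prompt = prompt[-MAX_PROMPT:]  # left truncation keeps the newest tokens
        story.extend(generate(prompt, CHUNK))
    return story


prompt_lengths = []


def fake_generate(prompt: List[int], n: int) -> List[int]:
    prompt_lengths.append(len(prompt))
    return [0] * n  # placeholder tokens


story_tokens = rolling_generate(list(range(40)), fake_generate)
print(len(story_tokens), max(prompt_lengths) + CHUNK)  # 3072 1024
```

The run takes twelve calls (3072 / 256), and once the prompt saturates at 768 tokens, prompt plus generation never exceeds 1024 tokens. Note that left truncation eventually drops the premise itself, which helps explain why a rolling window can drift away from the original plot.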

Metrics

The researchers' evaluation metrics include:

1. Interesting. The story is interesting to the reader.

2. Coherent. The plot is coherent.

3. Relevant. The story stays faithful to the premise.

4. Humanlike. The story is judged to have been written by a human.

The researchers also tracked how often the generated stories exhibited the following writing problems:

1. Narration. A jarring change in narration or style.

2. Inconsistent. Factually inconsistent or contains very odd details.

3. Confusing. Confusing or difficult to understand.

4. Repetitive. Highly repetitive.

5. Disfluent. Frequent grammatical errors.

Results

As shown in Table 1, Re^3 is very effective at writing a longer story that follows a desired premise while maintaining a coherent overall plot. This validates the researchers' design choices inspired by the human writing process, as well as the recursive reprompting generation approach. Compared with ROLLING and ROLLING-FT, Re^3 significantly improves both coherence and relevance. Annotators also marked Re^3's stories as having significantly fewer writing problems.

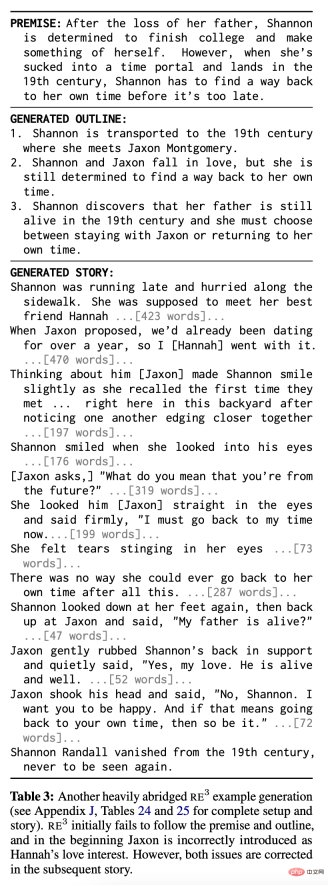

Re^3 also performs strongly in absolute terms: in the two comparisons, annotators judged 83.3% and 80.0% of Re^3's stories, respectively, to have been written by a human. Table 2 shows a heavily abridged example story from Re^3 that exhibits strong coherence and relevance to the premise.

Nevertheless, the researchers' qualitative observations show that Re^3 still has substantial room for improvement.

Table 3 illustrates two common problems. First, although Re^3's stories, unlike the baseline stories, almost always follow the premise to some extent, they may not capture every part of the premise and may not follow parts of the outline generated by the Plan module (e.g., the first part of the story and outline in Table 3). Second, because of failures in the Rewrite module and especially the Edit module, some confusing passages and contradictory statements remain: for example, in Table 3 the character Jaxon's identity is contradictory in places.

However, unlike the rolling-window baselines, Re^3's planning approach lets it "self-correct" and return to the original plot. The second half of the story in Table 3 illustrates this ability.

Analysis

Ablation Experiment

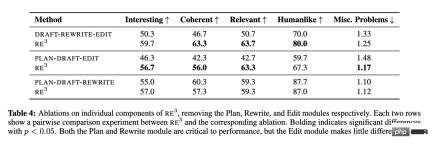

The researchers examined the relative contributions of Re^3's modules (planning, drafting, rewriting, and editing) by ablating each module in turn. The exception is the Draft module, since it is unclear how the system would function without it.

Table 4 shows that the Plan and Rewrite modules, which imitate the human planning and rewriting processes, are crucial for overall plot coherence and premise relevance. However, the Edit module contributes very little to these metrics. The researchers also observed qualitatively that Re^3's final stories still contain many coherence problems that the Edit module does not address but that a careful human editor could fix.

Further analysis of the "Edit" module

The researchers used a controlled setting to study whether the Edit module can at least detect character-based factual inconsistencies. They call this detection subsystem STRUCTURED-DETECT to avoid confusion with the Edit module as a whole.

As shown in Table 5, STRUCTURED-DETECT outperforms both baselines at detecting character-based inconsistencies, as measured by the standard ROC-AUC classification metric. The ENTAILMENT baseline's ROC-AUC is barely better than chance (0.5), highlighting the core challenge that a detection system must be overwhelmingly accurate. In addition, STRUCTURED-DETECT is designed to scale to longer passages, and the researchers hypothesize that its advantage over the baselines would widen in evaluations with longer inputs.
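To make the "0.5 is chance" reference concrete, ROC-AUC can be computed as the probability that a randomly chosen positive example (here, a passage with an inconsistency) receives a higher detector score than a randomly chosen negative one, with ties counting as half. The labels and scores below are toy data, not results from the paper.

```python
from itertools import product
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive scores above a random negative
    (ties count as half). O(P * N), fine for small evaluation sets."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0]  # 1 = passage contains an inconsistency (toy data)
print(roc_auc(labels, [0.9, 0.8, 0.3, 0.1]))  # 1.0  (perfect ranking)
print(roc_auc(labels, [0.9, 0.2, 0.6, 0.1]))  # 0.75
print(roc_auc(labels, [0.5, 0.5, 0.5, 0.5]))  # 0.5  (no better than chance)
```

A detector that assigns the same score to everything lands at exactly 0.5, which is the sense in which a score barely above 0.5 means the detector barely separates inconsistent passages from consistent ones. In practice, scikit-learn's `sklearn.metrics.roc_auc_score` computes the same quantity.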

Even in this simplified setting, the absolute performance of all systems remains low. Moreover, many of the full generated stories contain inconsistencies that do not involve characters, such as a setting that contradicts the current scene. Although the researchers did not formally analyze how well the GPT-3 Edit API corrects inconsistencies once they are detected, they observed that it can fix isolated details but struggles with larger changes.

Taken together, compounding errors from the detection and correction subsystems make it difficult for the study's current Edit module to effectively improve factual consistency across thousands of words without also introducing unnecessary changes.
