Translator|Li Rui

Reviewer|Sun Shujuan

In 2017, the world-renowned streaming service Netflix replaced its five-star rating system with a simple binary system of "thumbs up" (like) and "thumbs down" (dislike). The system recommends movies based on a percentage match, but some people find this objectionable: how can all the nuances of cinematic art be reduced to a primitive binary reaction? It turns out that people may give a film a high rating even though it isn't necessarily a movie they would actually enjoy watching. At least, that's what the data says. So how does data analytics work at a company like Netflix? What is the role of the data science team?

Netflix Feedback System

Feedback data such as these ratings typically moves through an ETL (extract, transform, load) process:

Extraction: Extract data from the data sources and send it to a staging area.

Transformation: Prepare the data for consumption.

Loading: Push the prepared data further along the pipeline. All prepared data goes into another storage, the data warehouse.
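As a rough illustration of the three ETL steps, here is a minimal sketch that moves thumbs-up/thumbs-down events into a warehouse table. The event format, the field names (`user_id`, `title_id`, `rating`), and the use of an in-memory SQLite database as a stand-in for a real data warehouse are all assumptions made for the example; this is not how Netflix implements its pipeline.

```python
import sqlite3

# Hypothetical raw feedback events, as they might arrive from an app's event log.
RAW_EVENTS = [
    {"user_id": "u1", "title_id": "t42", "rating": "THUMBS_UP"},
    {"user_id": "u2", "title_id": "t42", "rating": "thumbs_down"},
    {"user_id": "u1", "title_id": "t7", "rating": "up"},         # malformed value
    {"user_id": None, "title_id": "t7", "rating": "THUMBS_UP"},  # missing user
]

def extract(source):
    """Extract: copy raw records from the source into a staging list."""
    return [dict(event) for event in source]

def transform(staged):
    """Transform: normalize ratings to 1/0 and drop records that can't be used."""
    mapping = {"thumbs_up": 1, "thumbs_down": 0}
    clean = []
    for event in staged:
        value = mapping.get(str(event.get("rating", "")).lower())
        if event.get("user_id") and event.get("title_id") and value is not None:
            clean.append((event["user_id"], event["title_id"], value))
    return clean

def load(rows, conn):
    """Load: write the prepared rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS feedback (user_id TEXT, title_id TEXT, liked INTEGER)"
    )
    conn.executemany("INSERT INTO feedback VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
load(transform(extract(RAW_EVENTS)), warehouse)
print(warehouse.execute("SELECT * FROM feedback ORDER BY user_id").fetchall())
# prints [('u1', 't42', 1), ('u2', 't42', 0)]
```

In a production setting each step would usually be a separate, scheduled job, and the staging area would be durable storage rather than a Python list.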

Data Engineer

The data engineer is responsible for building the entire pipeline. Most data engineers are well versed in what is called "pipelining": moving data from a source to a destination through pipelines and transforming it along the way. They design the pipeline architecture, set up the ETL process, configure the warehouse, and connect it with reporting tools. Airbnb, for example, has about 50 data engineers. Some companies take a more elaborate approach that involves additional roles. For example, data quality engineers ensure that data is captured and transformed correctly, because biased or incorrect data is too costly when decisions are drawn from it. There may also be a separate engineer responsible only for ETL, as well as business intelligence developers who focus solely on integrating reporting and visualization tools. However, reporting tools don't grab the headlines, and data engineering isn't billed as the best job of the 21st century; machine learning engineering and data science probably are.
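To make the data quality engineer's job more concrete, the sketch below runs a few simple checks on a batch of feedback rows before it is loaded. The row layout `(user_id, title_id, liked)` and the specific rules (required keys, allowed rating values, no duplicate ratings within a batch) are illustrative assumptions; real teams define checks that match their own data contracts.

```python
from collections import Counter

def check_quality(rows, allowed_ratings=frozenset({0, 1})):
    """Return a list of human-readable issues found in (user_id, title_id, liked) rows."""
    issues = []
    for i, (user_id, title_id, liked) in enumerate(rows):
        if not user_id or not title_id:
            issues.append(f"row {i}: missing key field")
        if liked not in allowed_ratings:
            issues.append(f"row {i}: unexpected rating {liked!r}")
    # The same user rating the same title twice in one batch suggests a duplicated event.
    counts = Counter((u, t) for u, t, _ in rows)
    for (u, t), n in counts.items():
        if n > 1:
            issues.append(f"duplicate rating for ({u}, {t}) x{n}")
    return issues

batch = [("u1", "t42", 1), ("u2", "t42", 5), ("u1", "t42", 1), ("", "t7", 0)]
for issue in check_quality(batch):
    print(issue)
```

A pipeline could refuse to load a batch, or route it to a quarantine table, whenever this list is non-empty.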

Machine Learning Engineers and Data Scientists

Data scientists are known for being particularly good at working with data to answer complex questions, such as: What will the company's revenue be next quarter? When will the car booked through Uber arrive? How likely is a viewer to enjoy both Schindler's List and Uncut Gems?

There are actually two ways to answer these questions. In the first, data scientists work with business intelligence tools and warehouse data, just like business analysts and data analysts, so they get their data from the warehouse. Sometimes they also use a data lake, another type of storage that holds unstructured, raw data. They build a forecasting model and produce forecasts that management can use. This works well for one-off reports such as a revenue estimate, but it doesn't help predict when a car booked through Uber will arrive.
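A one-off forecast of this kind can be as simple as fitting a trend to past figures. The sketch below fits a straight line by ordinary least squares to quarterly revenue and extrapolates one quarter ahead; the revenue numbers are made up, and a linear trend is only an illustrative choice of model, not a recommendation for real revenue forecasting.

```python
def linear_trend_forecast(history, steps_ahead=1):
    """Fit y = a + b*t by ordinary least squares and extrapolate steps_ahead periods."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    ts = range(n)
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    # Slope: covariance of (t, y) divided by variance of t.
    b = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, history)) / sum(
        (t - t_mean) ** 2 for t in ts
    )
    a = y_mean - b * t_mean
    return a + b * (n - 1 + steps_ahead)

quarterly_revenue = [100.0, 110.0, 120.0, 130.0]  # made-up figures, e.g. in $M
print(linear_trend_forecast(quarterly_revenue))  # prints 140.0
```

The result is a single number for a report; nothing here runs automatically or answers requests in real time, which is the limitation the text describes.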

The second way is where the real value of machine learning lies: production models that work automatically and regularly generate answers to complex questions, sometimes thousands of times per second, while handling far more complex tasks.

Putting Machine Learning Models into Production

For a model to work in production, it also needs supporting infrastructure, and building that infrastructure can be a major challenge. Data scientists explore data in data warehouses and data lakes, run experiments on it, select algorithms, and train models to produce the final machine learning code. This requires a deep understanding of statistics, machine learning algorithms, and the subject domain.
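The "run experiments, select algorithms" step often comes down to training several candidate models and comparing them on held-out data. In the minimal sketch below, the `(distance_km, delivery_minutes)` pairs are invented, and the candidates (a mean baseline and k-nearest-neighbors regression with k = 1 and k = 2) are arbitrary examples chosen to illustrate the idea; mean absolute error (MAE) is used as the selection metric.

```python
from statistics import mean

def fit_mean(train):
    """Baseline: always predict the average target seen in training."""
    avg = mean(y for _, y in train)
    return lambda x: avg

def make_knn(k):
    """Return a fit function for k-nearest-neighbors regression on one feature."""
    def fit(train):
        def predict(x):
            nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
            return mean(y for _, y in nearest)
        return predict
    return fit

def mae(model, data):
    """Mean absolute error of a fitted model on (x, y) pairs."""
    return mean(abs(model(x) - y) for x, y in data)

# Made-up (distance_km, delivery_minutes) pairs for illustration only.
train = [(1.0, 15), (2.0, 20), (3.0, 25), (4.0, 30), (5.0, 35)]
valid = [(1.5, 18), (4.5, 33)]

candidates = {"mean_baseline": fit_mean, "knn_k1": make_knn(1), "knn_k2": make_knn(2)}
scores = {name: mae(fit(train), valid) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

With real data the validation split would be much larger, and time-ordered data would usually be split by time rather than at random.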

Josh Wills, the former head of data engineering at Slack, put it this way on Twitter: "Data scientist (n.): Person who is better at statistics than any software engineer and better at software engineering than any statistician."

For example, suppose a customer places an order in the Uber Eats app. Once the customer confirms the order, the application must estimate the delivery time, so the customer's location, the restaurant, and the order data are sent to a server where a machine learning model for delivery-time prediction is deployed. But this data isn't enough: the model also pulls additional data from a separate database that contains average restaurant preparation times and other details. Once all the data is available, the model returns a prediction to the customer. However, the process doesn't end there. The predictions themselves are saved in a separate database, where they are used to monitor the model's performance and are explored with analytics tools so that the model can be updated later. All this data eventually ends up in data lakes and data warehouses.
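The request flow described above (receive the order, enrich it with stored features, predict, log the prediction) can be sketched as follows. Everything here is hypothetical: the `OrderRequest` fields, the `AVG_PREP_MINUTES` lookup standing in for the separate database, the 15-minute fallback, and the hand-written `model_predict` formula, which merely stands in for a trained model. It is not Uber's implementation.

```python
import sqlite3
from dataclasses import dataclass

@dataclass
class OrderRequest:
    order_id: str
    restaurant_id: str
    distance_km: float  # assumed to be derived from customer and restaurant locations
    item_count: int

# Hypothetical feature store: average prep time per restaurant, in minutes.
AVG_PREP_MINUTES = {"r1": 12.0, "r2": 20.0}

def model_predict(distance_km, item_count, avg_prep):
    """Stand-in for a trained model; the coefficients are made up."""
    return avg_prep + 3.0 * distance_km + 0.5 * item_count

def handle_order(req, log_conn):
    # 1. Enrich the request with features the app doesn't send.
    avg_prep = AVG_PREP_MINUTES.get(req.restaurant_id, 15.0)  # assumed fallback
    # 2. Score the order.
    eta = model_predict(req.distance_km, req.item_count, avg_prep)
    # 3. Save the prediction so it can later be compared with the actual delivery time.
    log_conn.execute(
        "INSERT INTO predictions (order_id, eta_minutes) VALUES (?, ?)",
        (req.order_id, eta),
    )
    log_conn.commit()
    return eta

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE predictions (order_id TEXT, eta_minutes REAL)")
eta = handle_order(OrderRequest("o1", "r1", distance_km=2.0, item_count=4), conn)
print(f"Estimated delivery: {eta:.0f} min")  # 12 + 6 + 2 = 20
```

Logging every prediction is what makes later monitoring possible: joining the `predictions` table with actual delivery times shows how far off the model is in practice.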

In fact, the Uber Eats food ordering service alone uses hundreds of different models working simultaneously to score recommendations, rank restaurants in search results, and estimate delivery times.

Conclusion

Adam Waxman, head of core technology at Foursquare, believes that in the future there will no longer be separate data scientist or machine learning engineer roles: as model training becomes automated and production environments are built continuously, many data scientist jobs will become common functions of software development.

Original title: Roles in Data Science Teams, author: Anomi Ragendran

The above is the detailed content of What you need to know about these roles on a data science team. For more information, please follow other related articles on the PHP Chinese website!

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM

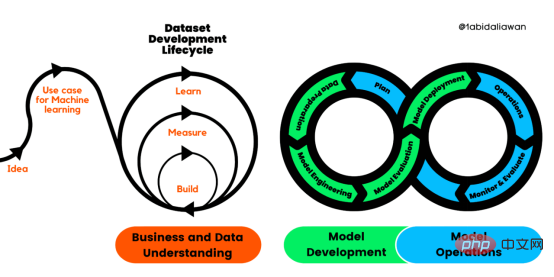

解读CRISP-ML(Q):机器学习生命周期流程Apr 08, 2023 pm 01:21 PM译者 | 布加迪审校 | 孙淑娟目前,没有用于构建和管理机器学习(ML)应用程序的标准实践。机器学习项目组织得不好,缺乏可重复性,而且从长远来看容易彻底失败。因此,我们需要一套流程来帮助自己在整个机器学习生命周期中保持质量、可持续性、稳健性和成本管理。图1. 机器学习开发生命周期流程使用质量保证方法开发机器学习应用程序的跨行业标准流程(CRISP-ML(Q))是CRISP-DM的升级版,以确保机器学习产品的质量。CRISP-ML(Q)有六个单独的阶段:1. 业务和数据理解2. 数据准备3. 模型

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM

2023年机器学习的十大概念和技术Apr 04, 2023 pm 12:30 PM机器学习是一个不断发展的学科,一直在创造新的想法和技术。本文罗列了2023年机器学习的十大概念和技术。 本文罗列了2023年机器学习的十大概念和技术。2023年机器学习的十大概念和技术是一个教计算机从数据中学习的过程,无需明确的编程。机器学习是一个不断发展的学科,一直在创造新的想法和技术。为了保持领先,数据科学家应该关注其中一些网站,以跟上最新的发展。这将有助于了解机器学习中的技术如何在实践中使用,并为自己的业务或工作领域中的可能应用提供想法。2023年机器学习的十大概念和技术:1. 深度神经网

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM

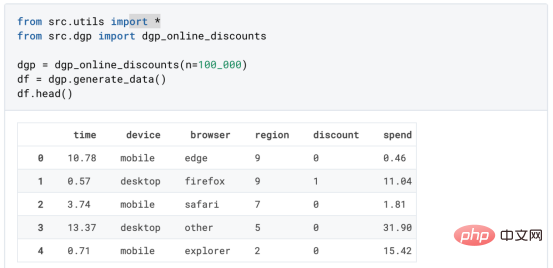

基于因果森林算法的决策定位应用Apr 08, 2023 am 11:21 AM译者 | 朱先忠审校 | 孙淑娟在我之前的博客中,我们已经了解了如何使用因果树来评估政策的异质处理效应。如果你还没有阅读过,我建议你在阅读本文前先读一遍,因为我们在本文中认为你已经了解了此文中的部分与本文相关的内容。为什么是异质处理效应(HTE:heterogenous treatment effects)呢?首先,对异质处理效应的估计允许我们根据它们的预期结果(疾病、公司收入、客户满意度等)选择提供处理(药物、广告、产品等)的用户(患者、用户、客户等)。换句话说,估计HTE有助于我

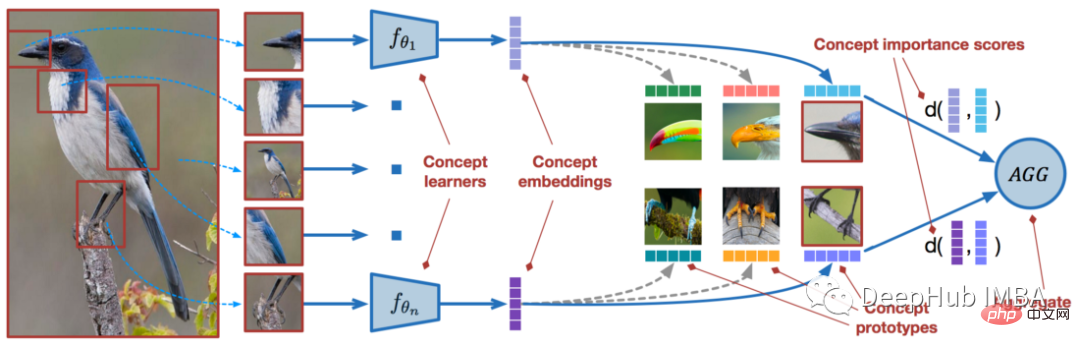

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM

使用PyTorch进行小样本学习的图像分类Apr 09, 2023 am 10:51 AM近年来,基于深度学习的模型在目标检测和图像识别等任务中表现出色。像ImageNet这样具有挑战性的图像分类数据集,包含1000种不同的对象分类,现在一些模型已经超过了人类水平上。但是这些模型依赖于监督训练流程,标记训练数据的可用性对它们有重大影响,并且模型能够检测到的类别也仅限于它们接受训练的类。由于在训练过程中没有足够的标记图像用于所有类,这些模型在现实环境中可能不太有用。并且我们希望的模型能够识别它在训练期间没有见到过的类,因为几乎不可能在所有潜在对象的图像上进行训练。我们将从几个样本中学习

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM

LazyPredict:为你选择最佳ML模型!Apr 06, 2023 pm 08:45 PM本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。 摘要本文讨论使用LazyPredict来创建简单的ML模型。LazyPredict创建机器学习模型的特点是不需要大量的代码,同时在不修改参数的情况下进行多模型拟合,从而在众多模型中选出性能最佳的一个。本文包括的内容如下:简介LazyPredict模块的安装在分类模型中实施LazyPredict

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM

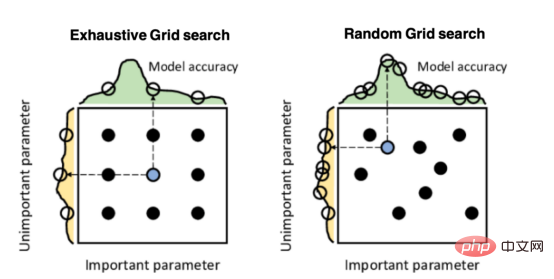

Mango:基于Python环境的贝叶斯优化新方法Apr 08, 2023 pm 12:44 PM译者 | 朱先忠审校 | 孙淑娟引言模型超参数(或模型设置)的优化可能是训练机器学习算法中最重要的一步,因为它可以找到最小化模型损失函数的最佳参数。这一步对于构建不易过拟合的泛化模型也是必不可少的。优化模型超参数的最著名技术是穷举网格搜索和随机网格搜索。在第一种方法中,搜索空间被定义为跨越每个模型超参数的域的网格。通过在网格的每个点上训练模型来获得最优超参数。尽管网格搜索非常容易实现,但它在计算上变得昂贵,尤其是当要优化的变量数量很大时。另一方面,随机网格搜索是一种更快的优化方法,可以提供更好的

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM

人工智能自动获取知识和技能,实现自我完善的过程是什么Aug 24, 2022 am 11:57 AM实现自我完善的过程是“机器学习”。机器学习是人工智能核心,是使计算机具有智能的根本途径;它使计算机能模拟人的学习行为,自动地通过学习来获取知识和技能,不断改善性能,实现自我完善。机器学习主要研究三方面问题:1、学习机理,人类获取知识、技能和抽象概念的天赋能力;2、学习方法,对生物学习机理进行简化的基础上,用计算的方法进行再现;3、学习系统,能够在一定程度上实现机器学习的系统。

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM

超参数优化比较之网格搜索、随机搜索和贝叶斯优化Apr 04, 2023 pm 12:05 PM本文将详细介绍用来提高机器学习效果的最常见的超参数优化方法。 译者 | 朱先忠审校 | 孙淑娟简介通常,在尝试改进机器学习模型时,人们首先想到的解决方案是添加更多的训练数据。额外的数据通常是有帮助(在某些情况下除外)的,但生成高质量的数据可能非常昂贵。通过使用现有数据获得最佳模型性能,超参数优化可以节省我们的时间和资源。顾名思义,超参数优化是为机器学习模型确定最佳超参数组合以满足优化函数(即,给定研究中的数据集,最大化模型的性能)的过程。换句话说,每个模型都会提供多个有关选项的调整“按钮

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.