The correct way to play building-block deep learning! National University of Singapore releases DeRy, a new transfer learning paradigm that turns knowledge transfer into movable type printing

During the Qingli era of Emperor Renzong of the Northern Song Dynasty, about 980 years ago, a revolution in knowledge was quietly taking place in China.

What triggered it was not the words of sages in their temples, but regular, inscribed clay blocks fired one by one.

This revolution is "movable type printing".

The subtlety of movable type printing lies in the idea of "building-block assembly": the craftsman first makes a reversed mold for each individual character, then selects and arranges the characters according to the manuscript and prints them with ink. The type can be reused as many times as needed.

Compared with the cumbersome "one print, one plate" process of woodblock printing, this modular, assemble-on-demand, reusable way of working improved printing efficiency dramatically and laid the foundation for the development and transmission of human civilization over the following thousand years.

Returning to deep learning: with large pre-trained models now widespread, how to transfer the capabilities of a collection of large models to specific downstream tasks has become a key issue.

Earlier knowledge transfer and reuse methods resemble woodblock printing: we often need to train a new, complete model for each task. These methods usually come with huge training costs and are difficult to scale to a large number of tasks.

So a very natural idea arises: can we treat a neural network as an assembly of building blocks, obtain a new network by reassembling existing ones, and use it for transfer learning?

Paper link: https://arxiv.org/abs/2210.17409

Code link: https://github.com/Adamdad/DeRy

Project homepage: https://adamdad.github.io/dery/

OpenReview: https://openreview.net/forum?id=gtCPWaY5bNh

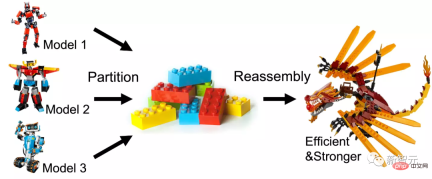

The authors first disassemble existing pre-trained models into sub-networks based on functional similarity, and then reassemble the sub-networks to build an efficient, easy-to-use model for a specific task.

The paper was accepted by NeurIPS with review scores of 8, 8 and 6 and was nominated for a Paper Award.

In the paper, the authors explore a new knowledge transfer task called Deep Model Reassembly (DeRy), intended for general-purpose model reuse.

Given a set of pre-trained models trained on different data and with heterogeneous architectures, deep model reassembly first splits each model into independent model blocks and then selectively reassembles those blocks within hardware and performance constraints.

The method treats deep neural networks like building blocks: take the existing large blocks apart into small ones, then assemble the parts as required. The assembled model should perform better, and the assembly process should change the structure and parameters of the original modules as little as possible to keep it efficient.

Breaking up and reorganizing the deep model

The method has two parts. DeRy first solves a set cover problem and splits all pre-trained networks by functional level; in the second step, it formulates model assembly as a 0-1 integer programming problem so that the assembled model performs best on the specific task.

Deep Model Reassembly

First, the authors define the deep model reassembly problem: we are given a collection of trained deep models, called a model library.

Each model is a chain of layers connected one after another. Different networks can have completely different structures and operations, as long as each model is connected layer by layer.

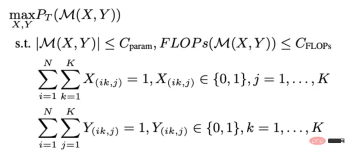

Given a task, the goal is to find the mixture of layers with the best performance on that task, subject to a limit on the model's computational cost. Here the objective is performance on the task, and every candidate layer is a layer operation taken from one of the models in the library.

Solving this problem means searching over all permutations of all model layers to maximize the objective. In essence, it is an extremely complex combinatorial optimization problem.

To reduce the search cost, the paper first splits the models in the library along the depth direction into shallower, smaller sub-networks, and then searches for the best splicing at the sub-network level.
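One way to write this problem down is sketched below. The notation is assumed here for illustration, since the symbols from the article's formula image did not survive: $\mathcal{Z}$ is the model library of $N$ models, $F_i^{(l)}$ is the $l$-th layer of model $M_i$, $P_T$ is performance on task $T$, $|M|$ is the cost of the assembled model, and $C$ is the budget.

```latex
\begin{aligned}
\mathcal{Z} &= \{M_1, \dots, M_N\}, \qquad
M_i = F_i^{(1)} \circ F_i^{(2)} \circ \cdots \circ F_i^{(L_i)}, \\
M^{*} &= \arg\max_{M} \; P_T(M)
\quad \text{s.t.} \quad
M = F_{i_1}^{(l_1)} \circ F_{i_2}^{(l_2)} \circ \cdots \circ F_{i_L}^{(l_L)},
\quad |M| \le C .
\end{aligned}
```

Here $\circ$ simply denotes chaining layers in order; each index pair $(i_k, l_k)$ picks one layer from one library model, which is what makes the search space combinatorial.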

Split the network according to functional levels

DeRy's first step is to take deep learning models apart like building blocks. The authors use a deep network splitting method to break each deep model into several shallower, smaller models.

The aim is for the disassembled sub-models to differ in function as much as possible. The process resembles sorting dismantled building blocks into toy boxes by category: similar blocks are put together, and dissimilar blocks are separated.

For example, a model can be split into lower layers and higher layers, with the expectation that the lower layers mainly recognize local patterns such as curves or shapes, while the higher layers judge the overall semantics of the sample.

Using a general feature similarity metric, the functional similarity of any two models can be measured quantitatively.

The key idea is that for similar inputs, neural networks with the same function can produce similar outputs.

So, for two networks with corresponding input tensors X and X', functional similarity is defined in terms of how similar their inputs and their outputs are.

The model library can then be divided into functional equivalence sets according to functional similarity.

The sub-networks in each equivalence set have high functional similarity, and the way each model is divided keeps the model library separable.

A core benefit of this disassembly is that, because of their functional similarity, the sub-networks in each equivalence set can be regarded as approximately interchangeable: a network block can be replaced by another sub-network from the same equivalence set without affecting the network's predictions.
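A minimal sketch of such a functional similarity score follows. It assumes linear CKA as the feature similarity metric and assumes the score is the sum of input similarity and output similarity; the function names and this exact combination are illustrative assumptions, not taken from the DeRy code base.

```python
import numpy as np

def linear_cka(a: np.ndarray, b: np.ndarray) -> float:
    """Linear CKA between two feature matrices of shape (n_samples, dim)."""
    a = a - a.mean(axis=0, keepdims=True)  # center every feature column
    b = b - b.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(b.T @ a, "fro") ** 2
    norm_a = np.linalg.norm(a.T @ a, "fro")
    norm_b = np.linalg.norm(b.T @ b, "fro")
    return float(cross / (norm_a * norm_b))

def functional_similarity(x, x_prime, block, block_prime) -> float:
    """Assumed score: similarity of the inputs plus similarity of the outputs."""
    return linear_cka(x, x_prime) + linear_cka(block(x), block_prime(x_prime))

# Toy usage: two blocks that differ only by an output scale look identical.
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 8))
w = rng.normal(size=(8, 16))
block = lambda t: np.maximum(t @ w, 0.0)
scaled = lambda t: 3.0 * np.maximum(t @ w, 0.0)
score = functional_similarity(x, x, block, scaled)
```

Because linear CKA is invariant to isotropic scaling, the toy score here is close to its maximum of 2, which matches the intuition that the two blocks compute the same function.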

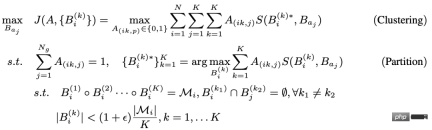

The splitting problem above can be formalized as a three-level constrained optimization problem.

The inner-level optimization closely resembles a set cover or graph partitioning problem. The authors therefore use the heuristic Kernighan-Lin (KL) algorithm to optimize the inner level.

The general idea is that, starting from two randomly initialized sub-models, one layer is exchanged at a time. If the exchange increases the value of the evaluation function it is kept; otherwise it is discarded.
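The exchange-and-keep-if-better idea can be sketched as a greedy refinement of the cut points that divide one network's layers into contiguous blocks. This is a simplified illustration in the spirit of Kernighan-Lin, not the authors' implementation; `refine_cuts` and the `score` callback are hypothetical names.

```python
from typing import Callable, List, Sequence

def refine_cuts(
    cuts: Sequence[int],
    num_layers: int,
    score: Callable[[List[int]], float],
    max_rounds: int = 100,
) -> List[int]:
    """Greedily move block boundaries, one layer at a time.

    `cuts` are strictly increasing interior cut positions in 1..num_layers-1.
    Moving a cut by one hands a single layer to the neighbouring block; the
    move is kept only if `score` strictly increases.
    """
    best = list(cuts)
    best_score = score(best)
    for _ in range(max_rounds):
        improved = False
        for k in range(len(best)):
            for step in (-1, 1):
                trial = best.copy()
                trial[k] += step
                lo = trial[k - 1] if k > 0 else 0
                hi = trial[k + 1] if k + 1 < len(trial) else num_layers
                if not (lo < trial[k] < hi):
                    continue  # the move would leave a block empty
                trial_score = score(trial)
                if trial_score > best_score:
                    best, best_score, improved = trial, trial_score, True
        if not improved:
            break  # no single-layer exchange helps any more
    return best
```

In practice `score` would measure how well the resulting blocks match their functional sets; any function of the cut list works for experimenting with the search itself.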

The outer loop here adopts a K-Means clustering algorithm.

For each network division, each sub-network is always assigned to the functional set whose center it is most similar to. Since both the inner and outer loops are iterative and guaranteed to converge, solving the problem above yields a converged split of the sub-networks by functional level.
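The outer assignment step can be sketched as a K-Means-style loop over a precomputed similarity matrix. Using a member block as the center (a medoid) is an assumption made here for simplicity, and `cluster_blocks` is a hypothetical name.

```python
from typing import List, Tuple

import numpy as np

def cluster_blocks(
    sim: np.ndarray, centers: List[int], max_iter: int = 50
) -> Tuple[np.ndarray, List[int]]:
    """Cluster blocks given sim[i, j], the similarity of blocks i and j.

    Returns (labels, centers), where labels[i] indexes into centers.
    """
    centers = list(centers)
    for _ in range(max_iter):
        # Assignment step: each block joins its most similar center.
        labels = sim[:, centers].argmax(axis=1)
        # Update step: the new center is the member most similar to its peers.
        new_centers = []
        for k, old in enumerate(centers):
            members = np.flatnonzero(labels == k)
            if members.size == 0:
                new_centers.append(old)  # keep the center of an empty set
                continue
            within = sim[np.ix_(members, members)].sum(axis=1)
            new_centers.append(int(members[within.argmax()]))
        if new_centers == centers:
            break
        centers = new_centers
    labels = sim[:, centers].argmax(axis=1)
    return labels, centers
```

The similarity matrix could be filled with any pairwise functional similarity between blocks; the loop itself only needs the numbers.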

Network assembly based on integer optimization

Network splitting divides each network into sub-networks, each of which belongs to an equivalence set. These sets serve as the search space for finding the best network splicing for a downstream task.

Because the sub-models are so diverse, network assembly is a combinatorial optimization problem with a large search space, so some search conditions are imposed: each combination takes one network block from each functional set and places it according to its position in the original network, and the assembled network must satisfy the computational limit. The process is formulated as a 0-1 integer optimization problem.

To further reduce the training overhead of evaluating each candidate combination, the authors borrow a training-free proxy from neural architecture search (NAS) called NASWOT. With it, a network's true performance can be approximated using only the network's inference on a specified data set.
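The selection constraints can be illustrated with a small exhaustive search: choosing exactly one block per functional set is equivalent to setting one 0-1 indicator per set to 1. This is a toy sketch under assumed names (`assemble`, the `Block` tuple, a parameter-count budget), not the solver used in the paper, and brute force is only feasible for tiny search spaces.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

Block = Tuple[str, float]  # (hypothetical block name, parameters in millions)

def assemble(
    equivalence_sets: Sequence[Sequence[Block]],
    budget: float,
    score: Callable[[Tuple[Block, ...]], float],
) -> Optional[Tuple[Block, ...]]:
    """Pick one block per position, within budget, maximizing `score`."""
    best, best_score = None, float("-inf")
    for combo in product(*equivalence_sets):
        if sum(params for _, params in combo) > budget:
            continue  # violates the computational limit
        s = score(combo)
        if s > best_score:
            best, best_score = combo, s
    return best

# Toy usage: two positions, two candidate blocks each, 20M-parameter budget.
sets = [[("a1", 5.0), ("b1", 10.0)], [("a2", 5.0), ("b2", 20.0)]]
chosen = assemble(sets, budget=20.0, score=lambda c: sum(p for _, p in c))
```

The toy score simply prefers larger models; in the setting described above it would be replaced by a performance estimate of the assembled network.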
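A minimal sketch of a NASWOT-style score, assuming its usual formulation: each input in a mini-batch yields a binary code of which ReLU units are active, a kernel counts how many units two inputs agree on, and the score is the log-determinant of that kernel. How the pre-activations are collected from the assembled network is left out, and `naswot_score` is an illustrative name.

```python
import numpy as np

def naswot_score(pre_activations: np.ndarray) -> float:
    """Score from ReLU pre-activations of shape (batch, units)."""
    # Binary activation pattern of every input in the batch.
    codes = (pre_activations > 0).astype(np.float64)
    # kernel[i, j] = number of units on which inputs i and j agree,
    # i.e. units minus the Hamming distance between their codes.
    kernel = codes @ codes.T + (1.0 - codes) @ (1.0 - codes).T
    _, logdet = np.linalg.slogdet(kernel)
    return float(logdet)

# Toy usage: inputs with distinct activation patterns score higher than
# inputs that all activate the same units.
diverse = naswot_score(np.eye(4) * 2.0 - 1.0)
collapsed = naswot_score(np.ones((4, 4)))
```

A higher score means the untrained (or frozen) network already separates the inputs into distinct activation patterns, which is the property the proxy relies on.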

Through the above split-recombine process, different pre-trained models can be spliced and fused to obtain a new and stronger model.

Experimental results

Model reorganization is suitable for transfer learning

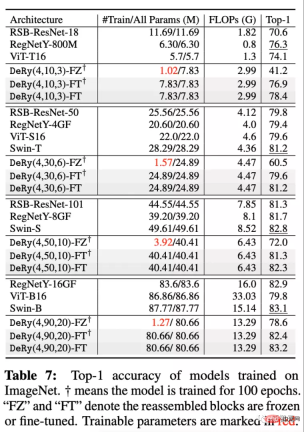

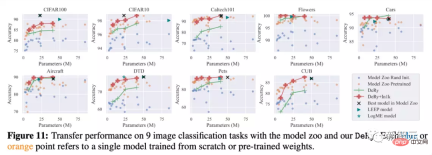

The authors painstakingly disassembled and reassembled a model library containing 30 different pre-trained networks, and evaluated performance on ImageNet and 9 other downstream classification tasks.

Two training schemes were used in the experiments: Full-Tuning, in which all parameters of the spliced model are trained, and Freeze-Tuning, in which only the connection layers added at the splices are trained.

In addition, models at five scales were selected for comparison, each written as DeRy(·, ·, ·), for example DeRy(4,90,20).

As the figure above shows, on ImageNet the DeRy models at each scale match or outperform models of similar size in the model library.

Even when only the parameters of the connection layers are trained, the model still achieves strong performance gains. For example, DeRy(4,90,20) reaches a Top-1 accuracy of 78.6% with only 1.27M trained parameters.

The nine transfer learning experiments also confirm DeRy's effectiveness. Without pre-training, DeRy models outperform the other models at every model size compared; with continued pre-training of the reassembled model, performance improves substantially, reaching the red curve.

Compared with other methods for transfer learning from a model library, such as LEEP or LogME, DeRy can exceed the performance limits of the model library itself and even beat the best model in the original library.

Exploring the nature of model reorganization

The authors were also curious about the properties of the proposed model reassembly, such as "According to what pattern are models split?" and "According to what rules are models reassembled?", and provide experiments to analyze them.

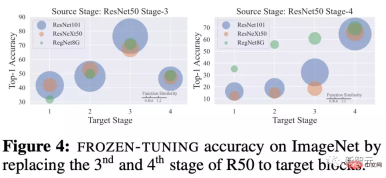

Functional similarity, reassembly location and reassembly performance

The authors examine what happens when a network block is replaced by other blocks with different functional similarities, comparing performance after 20 epochs of Freeze-Tuning.

For a ResNet50 trained on ImageNet, the blocks of its 3rd and 4th stages are replaced with different blocks from ResNet101, ResNeXt50 and RegNetY8G.

It can be observed that the replacement position has a great impact on performance.

For example, when the third stage is replaced by the third stage of another network, the reassembled network performs particularly well. Functional similarity is also positively correlated with reassembly performance.

Blocks at the same depth are more similar, which leads to stronger models after training. This points to a positive relationship linking similarity, reassembly position and reassembly performance.

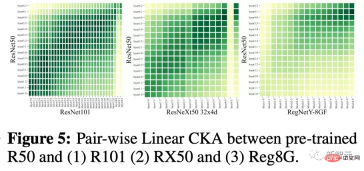

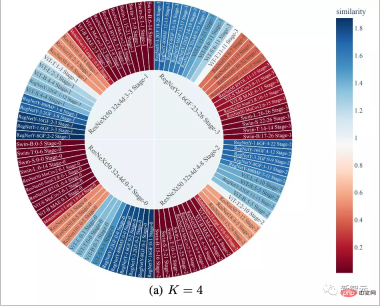

Observation of splitting results

In the figure, the authors plot the result of the splitting step. The color represents the similarity between each network block and the block at the center of its equivalence set.

It can be seen that the proposed division tends to group sub-networks by depth when splitting. Meanwhile, functional similarity between CNNs and Transformers is low, whereas functional similarity between CNNs of different architectures is usually higher.

Using NASWOT as a performance indicator

Since this paper is the first to apply NASWOT to training-free transfer prediction, the authors also tested the reliability of this indicator.

In the figure, the authors compute NASWOT scores for different models on different data sets and compare them with transfer learning accuracy.

The NASWOT scores yield a fairly accurate performance ranking, as measured by Kendall's Tau correlation. This shows that the training-free indicator used in the paper can effectively predict model performance on downstream data.
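For readers unfamiliar with the ranking metric, here is a small sketch of Kendall's Tau (the tau-a variant, which ignores tie corrections) applied to proxy scores and accuracies. The numbers in the usage lines are made up purely for illustration and are not results from the paper.

```python
from itertools import combinations
from typing import Sequence

def kendall_tau(scores: Sequence[float], accuracies: Sequence[float]) -> float:
    """Kendall's Tau-a: (concordant - discordant) / number of pairs."""
    if len(scores) != len(accuracies) or len(scores) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(len(scores)), 2):
        direction = (scores[i] - scores[j]) * (accuracies[i] - accuracies[j])
        if direction > 0:
            concordant += 1  # both rankings order this pair the same way
        elif direction < 0:
            discordant += 1  # the rankings disagree on this pair
    n_pairs = len(scores) * (len(scores) - 1) // 2
    return (concordant - discordant) / n_pairs

# Illustrative values only: one swapped pair out of six.
tau = kendall_tau([1.0, 2.0, 3.0, 4.0], [0.61, 0.70, 0.66, 0.75])
```

A value of 1 means the proxy ranks the models exactly as their accuracies do, 0 means no association, and -1 means the ranking is reversed.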

Summary

The paper proposes a new knowledge transfer task called Deep Model Reassembly (DeRy). It builds a model adapted to a downstream task by breaking up existing heterogeneous pre-trained models and reassembling them.

The authors propose a simple two-stage implementation. First, DeRy solves a set cover problem and splits all pre-trained networks by functional level; second, it formulates model assembly as a 0-1 integer programming problem so that the assembled model performs optimally on the specific task.

This work not only achieved strong performance improvements, but also mapped the possible connectivity between different neural networks.
