An article explaining the testing technology of intelligent driving perception system in detail

Foreword

With advances in artificial intelligence and its supporting software and hardware, autonomous driving has developed rapidly in recent years. Autonomous driving systems are now used in driver assistance systems for civilian vehicles, autonomous logistics robots, drones and other fields. The perception component is the core of an autonomous driving system: it enables the vehicle to analyze and understand information about the traffic environment inside and outside the vehicle. However, like other software systems, autonomous driving perception systems suffer from software defects. Moreover, because autonomous driving systems operate in safety-critical scenarios, these defects can have catastrophic consequences. In recent years, defects in autonomous driving systems have caused a number of fatal and injury accidents. As a result, testing technology for autonomous driving systems has received widespread attention from academia and industry. Enterprises and research institutions have proposed a series of techniques and environments, including virtual simulation testing, real-world road testing, and combined virtual-real testing. However, because of the special nature of the input data types and the diversity of operating environments, these testing techniques consume substantial resources and carry considerable risk. This article briefly analyzes the current state of research and application of testing methods for autonomous driving perception systems.

1 Autonomous Driving Perception System Test

Quality assurance for autonomous driving perception systems is becoming increasingly important. The perception system must help the vehicle automatically analyze and understand road conditions. Its composition is very complex, so the reliability and safety of the system under test must be fully tested across many traffic scenarios. Current autonomous driving perception testing falls into three main categories. Whatever the method, all of them share one important feature that distinguishes them from traditional testing: a strong dependence on test data.

The first type of testing is based mainly on software engineering theory and formal methods, and takes the model structure and mechanism of the perception system implementation as its entry point. It relies on a high-level understanding of how autonomous driving perception operates and of the system's characteristics. The purpose of this logic-oriented testing of the perception system is to discover design flaws in the perception module early in system development, ensuring the effectiveness of the model algorithms in early system iterations. Based on the characteristics of autonomous driving algorithm models, researchers have proposed a series of methods and techniques for test data generation, test verification metrics, and test evaluation.

The second type, virtual simulation testing, uses computers to abstract the real traffic system and complete testing tasks, including system testing in a preset virtual environment and independent testing of perception components. The effectiveness of virtual simulation testing depends on the realism of the virtual environment, the quality of the test data, and the specific test execution technology. The effectiveness of the simulation environment construction method, data quality assessment, and test verification technology must all be fully considered. Autonomous driving environment perception and scene analysis models rely on large-scale, effective traffic scene data for training and for testing and verification. Researchers in China and abroad have done a great deal of work on traffic scenes and on techniques for generating their data. Methods such as data mutation, simulation engine generation, and game model rendering are used to construct virtual test scene data and obtain high-quality test data, and the different kinds of generated data are used both for testing autonomous driving models and for data augmentation. Test scenario and test data generation are the key technologies. Test cases must be rich enough to cover the state space of the test samples. Test samples also need to be generated under extreme traffic conditions, to test the safety of the system's decision output model in these boundary cases. Virtual testing often draws on existing testing theory and technology to construct effective methods for evaluating and verifying test results.

The third category is road testing of real vehicles equipped with autonomous driving perception systems, including testing in preset closed scenarios and testing under actual road conditions. The advantage of this type of testing is that a real environment can fully guarantee the validity of the results. However, it faces many difficulties: test scenarios struggle to meet diverse needs, relevant traffic scene data samples are hard to obtain, manual annotation of data collected on real roads is expensive and of uneven quality, the required test mileage is excessive, and the data collection cycle is too long. Manual driving in dangerous scenarios carries safety risks, and these problems are difficult for testers to solve in the real world. At the same time, traffic scene data suffers from having a single data source and insufficient diversity, so it falls short of the testing and verification requirements of autonomous driving researchers in software engineering. Despite this, road testing is an indispensable part of traditional vehicle testing and remains extremely important in autonomous driving perception testing.

From the perspective of test types, perception system testing has different content at different stages of the vehicle development life cycle. Autonomous driving testing can be divided into model-in-the-loop (MiL) testing, software-in-the-loop (SiL) testing, hardware-in-the-loop (HiL) testing, vehicle-in-the-loop (ViL) testing, and so on. This article focuses on the SiL and HiL parts of autonomous driving perception system testing. HiL includes perception hardware devices, such as cameras, lidar, and human-computer interaction perception modules. SiL uses software simulation to replace the data generated by the real hardware. The purpose of both is to verify the functionality, performance, robustness and reliability of the autonomous driving system. For a specific test object, different types of tests are combined with different testing technologies at each stage of perception system development to meet the corresponding verification requirements. Current autonomous driving perception information comes mainly from the analysis of a few main types of data: images (camera), point clouds (lidar), and fused data in fusion perception systems. This article mainly analyzes perception testing for these three types of data.

2 Autonomous Driving Image System Test

Images collected by multiple types of cameras are one of the most important input data types for autonomous driving perception. Image data provides front-view, surround-view, rear-view and side-view environmental information while the vehicle is running, and helps the autonomous driving system achieve functions such as road ranging, target recognition and tracking, and automatic lane change analysis. Image data comes in various formats, such as RGB images, semantic images, and depth images, each with its own characteristics. For example, RGB images carry richer color information, depth images contain more scene depth information, and semantic images are obtained by pixel classification, which makes them more useful for target detection and tracking tasks.

Image-based autonomous driving perception system testing relies on large-scale, effective traffic scene images for training and for testing and verification. However, manual labeling of data collected on real roads is expensive, the data collection cycle is too long, laws and regulations covering manual driving in dangerous scenes are incomplete, and labeling quality is uneven. At the same time, traffic scene data is limited by a single data source and insufficient diversity, so it falls short of the testing and verification requirements of autonomous driving research.

Researchers in China and abroad have done a great deal of work on techniques for constructing and generating traffic scene data. They use methods such as data mutation, generative adversarial networks, simulation engine generation, and game model rendering to build virtual test scenario data and obtain high-quality test data, and use the different kinds of generated data both for testing autonomous driving models and for data augmentation. Using hard-coded image transformations to generate test images is an effective method. A variety of mathematical transformations and image processing techniques can be used to mutate the original image in order to expose potentially erroneous behavior of the autonomous driving system under different environmental conditions.
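As a rough illustration of mutation by hard-coded image transformations, the sketch below applies a brightness shift, a contrast change and a 3x3 box blur to a tiny grayscale image, then flags the mutations for which a model's output moves by more than a tolerance. It is a minimal sketch, not a method from the cited studies: the function names, the nested-list image representation, and the single-number model output (for example a steering angle) are all assumptions made for illustration, and a real pipeline would normally use an image library.

```python
from typing import Callable, Dict, List

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities (assumed format)


def adjust_brightness(image: Image, delta: int) -> Image:
    """Shift every pixel by delta, clipping to the valid 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def adjust_contrast(image: Image, factor: float) -> Image:
    """Scale each pixel's distance from mid-gray (128) by factor, then clip."""
    return [[min(255, max(0, round(128 + (p - 128) * factor))) for p in row]
            for row in image]


def box_blur(image: Image) -> Image:
    """Replace each pixel with the mean of its 3x3 neighbourhood.

    Coordinates outside the image are clamped to the nearest edge pixel.
    """
    h, w = len(image), len(image[0])
    blurred = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[min(h - 1, max(0, y + dy))][min(w - 1, max(0, x + dx))]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(sum(window) // 9)
        blurred.append(row)
    return blurred


def find_inconsistencies(model: Callable[[Image], float],
                         seed: Image,
                         mutations: Dict[str, Callable[[Image], Image]],
                         tolerance: float) -> List[str]:
    """Return the names of mutations whose prediction deviates from the
    seed image's prediction by more than tolerance."""
    baseline = model(seed)
    return [name for name, mutate in mutations.items()
            if abs(model(mutate(seed)) - baseline) > tolerance]
```

The check in `find_inconsistencies` assumes that the expected output should stay roughly the same after a mutation that preserves the scene's meaning, so a large deviation marks a candidate erroneous behavior rather than a confirmed defect.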

Zhang et al. used a method based on generative adversarial networks to perform image style transformation and simulate vehicle driving scenes under specified environmental conditions. Some studies perform autonomous driving tests in virtual environments, building traffic scenes from the 3D models of a physics simulation and rendering them into 2D images as input to the perception system. Test images can also be generated by synthesis: modifiable content is sampled in a low-dimensional image subspace and then composited into an image. Compared with direct mutation of images, synthesized scenes are richer and the image perturbations are less constrained. Fremont et al. used Scenic, a domain-specific programming language for autonomous driving, to design test scenarios in advance, used a game engine interface to generate concrete traffic scene images, and used the rendered images to train and verify a target detection model.

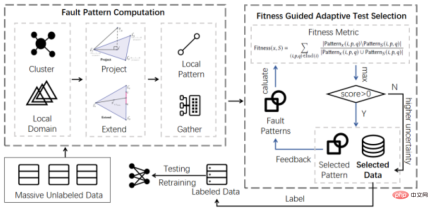

Pei et al. used the idea of differential testing to find inconsistent outputs of autonomous driving steering models, and also proposed neuron coverage, the ratio of neurons in a neural network whose activation exceeds a preset threshold, as a measure of the effectiveness of test samples. Building on neuron coverage, researchers have proposed many new test coverage concepts, such as neuron boundary coverage, strong neuron coverage, and hierarchical neuron coverage. In addition, using heuristic search to find target test cases is also an effective method; the core difficulty lies in designing the test evaluation metrics that guide the search. A common problem in autonomous driving image system testing is the lack of labeled data for special driving scenarios. Inspired by the idea of adaptive random testing from the field of software testing, our team proposed ATS, an adaptive test case selection method for deep neural networks, to address the high human cost of labeling deep neural network test data in autonomous driving perception systems.
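The neuron coverage metric described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: it assumes each test input has already been run through the network and its neuron activations flattened into one list, scaled so that a single threshold is meaningful. The helper `coverage_gain` is a hypothetical addition showing how such a metric could guide the choice of the next test input.

```python
from typing import List, Sequence


def neuron_coverage(activations_per_input: Sequence[Sequence[float]],
                    threshold: float) -> float:
    """Fraction of neurons whose activation exceeds threshold for at least
    one of the given test inputs."""
    if not activations_per_input:
        return 0.0
    neuron_count = len(activations_per_input[0])
    covered: List[bool] = [False] * neuron_count
    for activations in activations_per_input:
        for index, value in enumerate(activations):
            if value > threshold:
                covered[index] = True
    return sum(covered) / neuron_count


def coverage_gain(existing: Sequence[Sequence[float]],
                  candidate: Sequence[float],
                  threshold: float) -> float:
    """Increase in neuron coverage obtained by adding one candidate input
    to an existing test set (hypothetical helper for guiding test selection)."""
    extended = list(existing) + [candidate]
    return neuron_coverage(extended, threshold) - neuron_coverage(existing, threshold)
```

A test generator could, for example, keep only mutated images whose `coverage_gain` is positive, on the assumption that inputs activating new neurons exercise new model behavior.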

3 Autonomous Driving LiDAR System Test

Lidar is a crucial sensor for autonomous driving systems. It measures the propagation distance between the sensor's transmitter and the target object, and analyzes information such as the amount of energy reflected from the target's surface and the amplitude, frequency and phase of the reflected wave spectrum. The point cloud data it collects accurately depicts the three-dimensional scale and reflection intensity of the various objects in the driving scene, which compensates for the camera's limitations in data form and accuracy. Lidar plays an important role in tasks such as target detection and localization and mapping for autonomous driving, and cannot be replaced by vision alone.

As a typical complex intelligent software system, an autonomous driving system takes the surrounding environment information captured by lidar as input, makes judgments through the artificial intelligence model in the perception module, and, after system planning and control, completes various driving tasks. Although the high complexity of the artificial intelligence model gives the autonomous driving system its perception capability, existing traditional testing technology relies on manual collection and annotation of point cloud data, which is costly and inefficient. In addition, point cloud data is unordered, lacks obvious color information, is easily disturbed by weather, and its signal attenuates easily, which makes the diversity of point cloud data particularly important during testing.

Testing of lidar-based autonomous driving systems is still in its preliminary stages. Both real road tests and simulation tests suffer from high cost, low test efficiency, and no guarantee of test adequacy. Given the problems autonomous driving systems face, such as changeable test scenarios, large and complex software systems, and huge testing costs, test data generation technology based on domain knowledge is of great significance for assuring the quality of autonomous driving systems.

In terms of lidar point cloud data generation, Sallab et al. modeled lidar point cloud data by building a cycle-consistent generative adversarial network, and performed feature analysis on simulated data to generate new point cloud data. Yue et al. proposed a point cloud data generation framework for autonomous driving scenes. The framework precisely mutates the point cloud data in a game scene based on annotated objects to obtain new data. They used the mutated data obtained in this way to retrain the point cloud processing module of an autonomous driving system and achieved a clear improvement in accuracy.

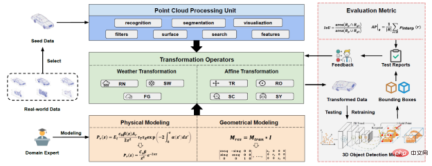

Our team designed and implemented LiRTest, an automated lidar testing tool. It is mainly used for automated testing of the target detection systems of autonomous vehicles, and its output can also be used for retraining to improve system robustness. In LiRTest, domain experts first design physical and geometric models, and transformation operators are then constructed from these models. Developers select point cloud seeds from real-world data, use point cloud processing units to identify and process them, and apply mutation algorithms based on the transformation operators to generate test data that evaluates the robustness of autonomous driving 3D target detection models. Finally, LiRTest produces a test report and gives feedback on the operator design, so that quality improves iteratively.
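To make the idea of transformation operators on point cloud seeds more concrete, here is a minimal sketch of three operators: a geometric rotation, a range-dependent point drop-out, and positional jitter. These are illustrative stand-ins and are not taken from LiRTest; the operator names, the `(x, y, z, intensity)` tuple layout, and the linear drop-out model (a crude proxy for signal attenuation) are all assumptions.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, reflection intensity (assumed layout)


def rotate_yaw(cloud: List[Point], angle_rad: float) -> List[Point]:
    """Geometric operator: rotate the whole cloud around the vertical (z) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y, s * x + c * y, z, i) for x, y, z, i in cloud]


def attenuate_by_range(cloud: List[Point], max_range: float,
                       rng: random.Random) -> List[Point]:
    """Physical operator (simplified): drop each point with a probability that
    grows linearly with its distance from the sensor, reaching 1 at max_range."""
    kept = []
    for point in cloud:
        distance = math.sqrt(point[0] ** 2 + point[1] ** 2 + point[2] ** 2)
        drop_probability = min(1.0, distance / max_range)
        if rng.random() >= drop_probability:
            kept.append(point)
    return kept


def jitter(cloud: List[Point], sigma: float, rng: random.Random) -> List[Point]:
    """Noise operator: add zero-mean Gaussian noise to each coordinate,
    leaving the intensity untouched."""
    return [(x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma),
             z + rng.gauss(0.0, sigma), i) for x, y, z, i in cloud]
```

Passing an explicit `random.Random` instance keeps each generated test case reproducible, which matters when a failing mutation needs to be replayed. Note that a rotation also requires the ground-truth bounding boxes to be rotated by the same angle before the detector's output is checked.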

An autonomous driving system is a typical cyber-physical system. Its operating state is determined not only by user input and the internal state of the software system, but also by the physical environment. Although a small amount of research now focuses on generating point cloud data affected by various environmental factors, the characteristics of point cloud data make it difficult for generated data to match the realism of road test data. Therefore, how to automatically generate point cloud data that captures a variety of real environmental factors, without significantly increasing resource consumption, is a key problem that needs to be solved.

In the common software architecture of autonomous driving software, artificial intelligence models have an extremely important influence on driving decisions and system behavior. The functions they affect include object recognition, path planning, and behavior prediction. The artificial intelligence model most commonly used for point cloud data processing is the target detection model, which is implemented with deep neural networks. Although this technology can achieve high accuracy on specific tasks, its results lack interpretability, so users and developers cannot analyze and confirm its behavior, which makes it very difficult to develop testing techniques and to evaluate test adequacy. These are all challenges that lidar model testers will need to face in the future.

4 Autonomous Driving Fusion Perception System Test

Autonomous driving systems are usually equipped with a variety of sensors to perceive environmental information, along with a variety of software and algorithms to complete the various autonomous driving tasks. Different sensors have different physical characteristics and different application scenarios. Fusion perception technology compensates for the poor environmental adaptability of any single sensor, and ensures that the autonomous driving system operates normally under various environmental conditions through the cooperation of multiple sensors.

Because they record information in different ways, different types of sensors are strongly complementary. Cameras are cheap to install, and the image data they collect has high resolution and rich visual information such as color and texture. However, cameras are sensitive to the environment and may be unreliable at night, in strong light, and under other lighting changes. Lidar, on the other hand, is not easily affected by changes in light and provides precise three-dimensional perception both day and night. However, lidar is expensive and the point cloud data it collects lacks color information, making it difficult to identify targets without an obvious shape. How to exploit the advantages of each modality and mine deeper semantic information has become an important question in fusion perception technology.

Researchers have proposed a variety of data fusion methods. Deep learning-based fusion of lidar and camera data has become a major research direction because of its high accuracy. Feng et al. broadly divided fusion methods into three types: early, middle and late fusion. Early fusion fuses only the raw or preprocessed data; middle fusion cross-fuses the data features extracted by each branch; late fusion fuses only the final output of each branch. Although deep learning-based fusion perception has demonstrated great potential on existing benchmark datasets, such intelligent models may still exhibit incorrect and unexpected extreme behaviors in real-world scenarios with complex environments, leading to fatal losses. To ensure the safety of autonomous driving systems, such fusion perception models need to be thoroughly tested.
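The difference between the three fusion types is mainly where in the pipeline the two modalities meet, which the schematic sketch below tries to show. It is a toy illustration under stated assumptions: inputs and features are plain lists of floats, the backbones and heads are arbitrary callables supplied by the caller, concatenation stands in for whatever feature fusion a real model uses, and averaging stands in for whatever output-merging rule a real late-fusion system uses.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Backbone = Callable[[Vector], Vector]   # raw data -> feature vector
Head = Callable[[Vector], float]        # feature vector -> prediction score


def early_fusion(camera_raw: Sequence[float], lidar_raw: Sequence[float],
                 backbone: Backbone, head: Head) -> float:
    """Fuse raw (or preprocessed) data first, then run a single network."""
    return head(backbone(list(camera_raw) + list(lidar_raw)))


def middle_fusion(camera_raw: Sequence[float], lidar_raw: Sequence[float],
                  camera_backbone: Backbone, lidar_backbone: Backbone,
                  head: Head) -> float:
    """Extract features in each branch, fuse the features, then predict."""
    fused = camera_backbone(list(camera_raw)) + lidar_backbone(list(lidar_raw))
    return head(fused)


def late_fusion(camera_raw: Sequence[float], lidar_raw: Sequence[float],
                camera_model: Callable[[Vector], float],
                lidar_model: Callable[[Vector], float]) -> float:
    """Run each branch to its final output, then merge only the outputs."""
    return (camera_model(list(camera_raw)) + lidar_model(list(lidar_raw))) / 2
```

From a testing point of view, the fusion stage determines where a corrupted or missing signal enters the model, which is one reason the same perturbation can affect differently structured fusion models in different ways.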

Currently, fusion perception testing technology is still in its preliminary stage. The test input domain is huge and data collection is costly, so the main challenge is the automated generation of test data, which has therefore received widespread attention. Wang et al. proposed a cross-modal data augmentation algorithm that inserts virtual objects into images and point clouds according to geometric consistency rules to generate test datasets. Zhang et al. proposed a multimodal data augmentation method that uses multimodal transformation flows to maintain the correct mapping between point clouds and image pixels, and on this basis further proposed a multimodal cut-and-paste augmentation method.
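Geometric consistency between the two modalities rests on the mapping from a lidar point to an image pixel. The sketch below shows that mapping with the standard pinhole camera model; it is not the algorithm of either cited work. The function name and parameters are assumptions, the camera frame is assumed to have its z axis pointing forward, and `rotation` and `translation` stand for the lidar-to-camera extrinsic calibration.

```python
from typing import Optional, Sequence, Tuple


def project_to_pixel(point_lidar: Sequence[float],
                     rotation: Sequence[Sequence[float]],
                     translation: Sequence[float],
                     fx: float, fy: float,
                     cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Map a 3D lidar point to image pixel coordinates (u, v).

    rotation (3x3) and translation (3) transform lidar coordinates into the
    camera frame; fx, fy, cx, cy are the camera intrinsics. Returns None for
    points at or behind the image plane, which have no valid projection.
    """
    xc, yc, zc = (
        sum(rotation[row][k] * point_lidar[k] for k in range(3)) + translation[row]
        for row in range(3)
    )
    if zc <= 0:
        return None
    return (fx * xc / zc + cx, fy * yc / zc + cy)
```

When a virtual object is inserted into the point cloud, projecting its points with the same calibration indicates where the object must appear in the image, so that both modalities describe the same scene.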

Considering the impact of complex real-world environments on sensors, our team designed a data augmentation technique for multimodal fusion perception systems. In this method, domain experts formulate a set of mutation rules with realistic semantics for each data modality, and test data is generated automatically to simulate the various factors that interfere with sensors in real scenarios, helping software developers test and evaluate fusion perception systems. The mutation operators used in this method fall into three categories: signal noise operators, signal alignment operators and signal loss operators, which simulate the different types of interference found in real scenes. Noise operators simulate the noise introduced into collected data by environmental factors during sensor data collection. For image data, for example, operators such as spot and blur are used to simulate the camera encountering strong light or shaking. Alignment operators simulate misalignment between the modalities of multimodal data, including both temporal and spatial misalignment. For the former, one signal is randomly delayed to simulate transmission congestion or delay. For the latter, the calibration parameters of each sensor are adjusted slightly to simulate small changes in sensor position caused by vehicle vibration and similar effects while driving. Signal loss operators simulate sensor failure: one signal is randomly discarded, and the tester observes whether the fusion algorithm responds in time or continues to work normally.
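The three operator categories can be sketched as small functions over a sequence of synchronized sensor frames. This is a simplified illustration, not the team's implementation: the frame format (a dictionary with `camera` and `lidar` entries), the function names, and the specific noise and offset distributions are all assumptions.

```python
import random
from typing import Dict, List, Optional

Frame = Dict[str, Optional[list]]  # e.g. {"camera": pixels, "lidar": points} (assumed format)


def add_image_noise(pixels: List[int], sigma: float, rng: random.Random) -> List[int]:
    """Noise operator: add Gaussian noise to 0-255 pixel values, then clip."""
    return [min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in pixels]


def delay_signal(frames: List[Frame], sensor: str, lag: int) -> List[Frame]:
    """Temporal alignment operator: at step t the chosen sensor reports the
    data it captured at step t - lag, as if its transmission were delayed."""
    delayed = []
    for step, frame in enumerate(frames):
        shifted = dict(frame)
        shifted[sensor] = frames[max(0, step - lag)][sensor]
        delayed.append(shifted)
    return delayed


def perturb_calibration(translation: List[float], max_offset: float,
                        rng: random.Random) -> List[float]:
    """Spatial alignment operator: nudge each extrinsic translation component
    by a small random offset, as if the sensor had shifted on its mount."""
    return [t + rng.uniform(-max_offset, max_offset) for t in translation]


def drop_signal(frame: Frame, sensor: str) -> Frame:
    """Signal loss operator: return a copy of the frame with one sensor missing."""
    lost = dict(frame)
    lost[sensor] = None
    return lost
```

Each operator returns new data and leaves the seed untouched, so the same seed sequence can be reused across operators and the fusion model's outputs on the original and mutated frames can be compared directly.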

In short, multi-sensor fusion perception is an inevitable trend in the development of autonomous driving. Thorough testing is a necessary condition for ensuring that the system works normally in a complex real environment. How to test adequately with limited resources remains a pressing issue.

Conclusion

Autonomous driving perception testing is becoming closely integrated with the autonomous driving software development process, and the various in-the-loop tests will gradually become a necessary part of autonomous driving quality assurance. In industrial applications, real road testing remains important. However, its excessive cost, insufficient efficiency, and serious safety hazards mean that it falls far short of the testing and verification needs of intelligent perception systems for autonomous driving. The rapid development of formal methods and virtual simulation testing across multiple branches of research provides effective ways to improve testing. Researchers are exploring model testing metrics and techniques suited to intelligent driving in order to support virtual simulation testing methods. Our team is committed to researching methods for generating, evaluating and optimizing autonomous driving perception test data, with in-depth work on three areas, namely image, point cloud, and fusion perception testing, to ensure high-quality autonomous driving perception systems.


SOA中的软件架构设计及软硬件解耦方法论Apr 08, 2023 pm 11:21 PM

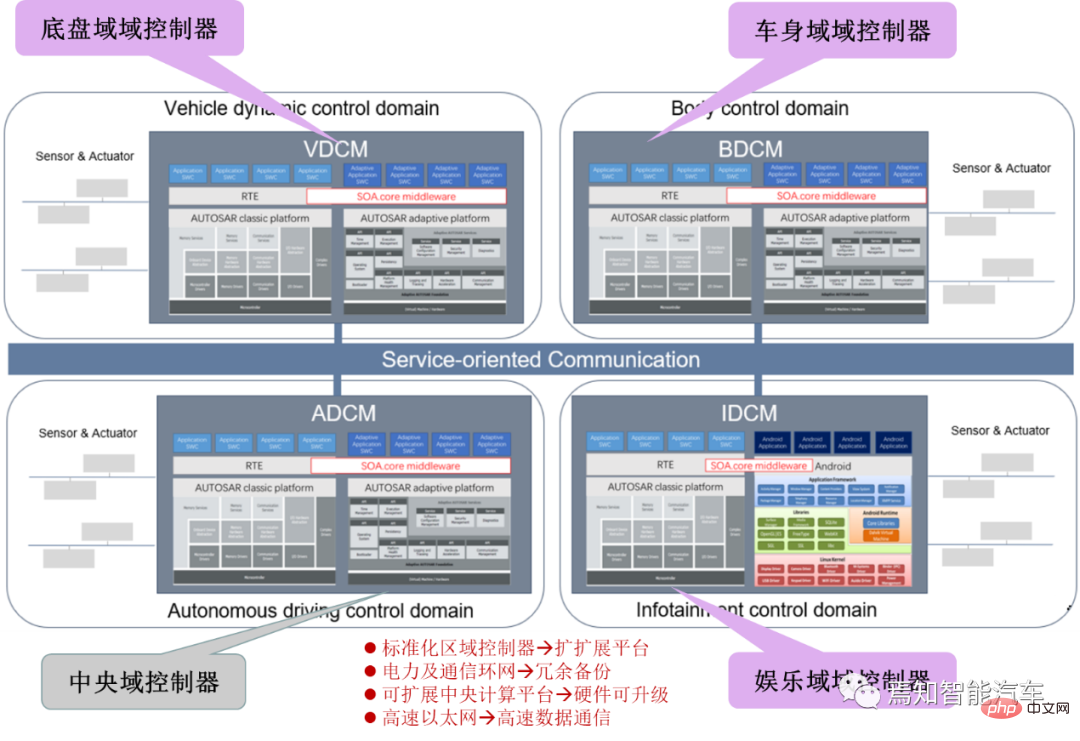

SOA中的软件架构设计及软硬件解耦方法论Apr 08, 2023 pm 11:21 PM对于下一代集中式电子电器架构而言,采用central+zonal 中央计算单元与区域控制器布局已经成为各主机厂或者tier1玩家的必争选项,关于中央计算单元的架构方式,有三种方式:分离SOC、硬件隔离、软件虚拟化。集中式中央计算单元将整合自动驾驶,智能座舱和车辆控制三大域的核心业务功能,标准化的区域控制器主要有三个职责:电力分配、数据服务、区域网关。因此,中央计算单元将会集成一个高吞吐量的以太网交换机。随着整车集成化的程度越来越高,越来越多ECU的功能将会慢慢的被吸收到区域控制器当中。而平台化

新视角图像生成:讨论基于NeRF的泛化方法Apr 09, 2023 pm 05:31 PM

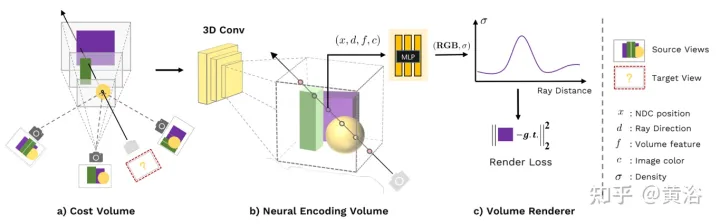

新视角图像生成:讨论基于NeRF的泛化方法Apr 09, 2023 pm 05:31 PM新视角图像生成(NVS)是计算机视觉的一个应用领域,在1998年SuperBowl的比赛,CMU的RI曾展示过给定多摄像头立体视觉(MVS)的NVS,当时这个技术曾转让给美国一家体育电视台,但最终没有商业化;英国BBC广播公司为此做过研发投入,但是没有真正产品化。在基于图像渲染(IBR)领域,NVS应用有一个分支,即基于深度图像的渲染(DBIR)。另外,在2010年曾很火的3D TV,也是需要从单目视频中得到双目立体,但是由于技术的不成熟,最终没有流行起来。当时基于机器学习的方法已经开始研究,比

多无人机协同3D打印盖房子,研究登上Nature封面Apr 09, 2023 am 11:51 AM

多无人机协同3D打印盖房子,研究登上Nature封面Apr 09, 2023 am 11:51 AM我们经常可以看到蜜蜂、蚂蚁等各种动物忙碌地筑巢。经过自然选择,它们的工作效率高到叹为观止这些动物的分工合作能力已经「传给」了无人机,来自英国帝国理工学院的一项研究向我们展示了未来的方向,就像这样:无人机 3D 打灰:本周三,这一研究成果登上了《自然》封面。论文地址:https://www.nature.com/articles/s41586-022-04988-4为了展示无人机的能力,研究人员使用泡沫和一种特殊的轻质水泥材料,建造了高度从 0.18 米到 2.05 米不等的结构。与预想的原始蓝图

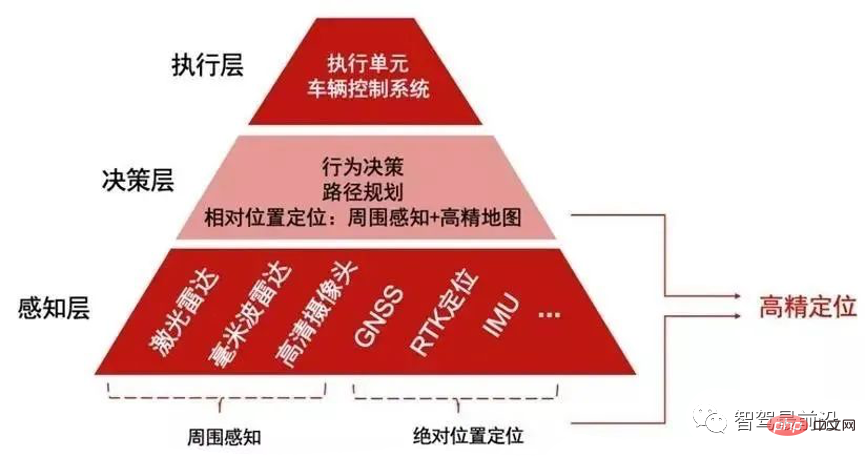

如何让自动驾驶汽车“认得路”Apr 09, 2023 pm 01:41 PM

如何让自动驾驶汽车“认得路”Apr 09, 2023 pm 01:41 PM与人类行走一样,自动驾驶汽车想要完成出行过程也需要有独立思考,可以对交通环境进行判断、决策的能力。随着高级辅助驾驶系统技术的提升,驾驶员驾驶汽车的安全性不断提高,驾驶员参与驾驶决策的程度也逐渐降低,自动驾驶离我们越来越近。自动驾驶汽车又称为无人驾驶车,其本质就是高智能机器人,可以仅需要驾驶员辅助或完全不需要驾驶员操作即可完成出行行为的高智能机器人。自动驾驶主要通过感知层、决策层及执行层来实现,作为自动化载具,自动驾驶汽车可以通过加装的雷达(毫米波雷达、激光雷达)、车载摄像头、全球导航卫星系统(G

超逼真渲染!虚幻引擎技术大牛解读全局光照系统LumenApr 08, 2023 pm 10:21 PM

超逼真渲染!虚幻引擎技术大牛解读全局光照系统LumenApr 08, 2023 pm 10:21 PM实时全局光照(Real-time GI)一直是计算机图形学的圣杯。多年来,业界也提出多种方法来解决这个问题。常用的方法包通过利用某些假设来约束问题域,比如静态几何,粗糙的场景表示或者追踪粗糙探针,以及在两者之间插值照明。在虚幻引擎中,全局光照和反射系统Lumen这一技术便是由Krzysztof Narkowicz和Daniel Wright一起创立的。目标是构建一个与前人不同的方案,能够实现统一照明,以及类似烘烤一样的照明质量。近期,在SIGGRAPH 2022上,Krzysztof Narko

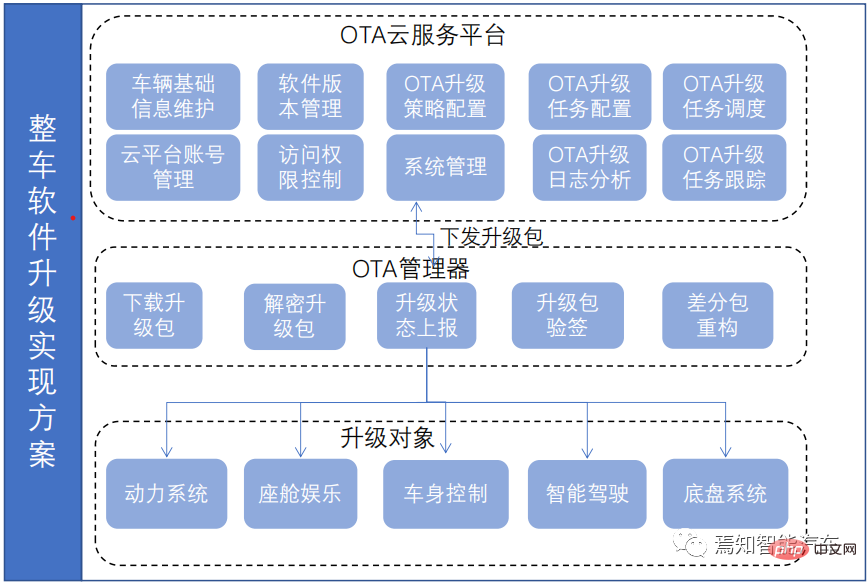

一文聊聊智能驾驶系统与软件升级的关联设计方案Apr 11, 2023 pm 07:49 PM

一文聊聊智能驾驶系统与软件升级的关联设计方案Apr 11, 2023 pm 07:49 PM由于智能汽车集中化趋势,导致在网络连接上已经由传统的低带宽Can网络升级转换到高带宽以太网网络为主的升级过程。为了提升车辆升级能力,基于为车主提供持续且优质的体验和服务,需要在现有系统基础(由原始只对车机上传统的 ECU 进行升级,转换到实现以太网增量升级的过程)之上开发一套可兼容现有 OTA 系统的全新 OTA 服务系统,实现对整车软件、固件、服务的 OTA 升级能力,从而最终提升用户的使用体验和服务体验。软件升级触及的两大领域-FOTA/SOTA整车软件升级是通过OTA技术,是对车载娱乐、导

internet的基本结构与技术起源于什么Dec 15, 2020 pm 04:48 PM

internet的基本结构与技术起源于什么Dec 15, 2020 pm 04:48 PMinternet的基本结构与技术起源于ARPANET。ARPANET是计算机网络技术发展中的一个里程碑,它的研究成果对促进网络技术的发展起到了重要的作用,并未internet的形成奠定了基础。arpanet(阿帕网)为美国国防部高级研究计划署开发的世界上第一个运营的封包交换网络,它是全球互联网的始祖。

综述:自动驾驶的协同感知技术Apr 08, 2023 pm 03:01 PM

综述:自动驾驶的协同感知技术Apr 08, 2023 pm 03:01 PMarXiv综述论文“Collaborative Perception for Autonomous Driving: Current Status and Future Trend“,2022年8月23日,上海交大。感知是自主驾驶系统的关键模块之一,然而单车的有限能力造成感知性能提高的瓶颈。为了突破单个感知的限制,提出协同感知,使车辆能够共享信息,感知视线之外和视野以外的环境。本文回顾了很有前途的协同感知技术相关工作,包括基本概念、协同模式以及关键要素和应用。最后,讨论该研究领域的开放挑战和问题

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Atom editor mac version download

The most popular open source editor

SublimeText3 Mac version

God-level code editing software (SublimeText3)