The AIGC unified model is here! The team founded by Huang Xutao, a leader in the CV industry, proposed 'Almighty Diffusion'


Recent advances in diffusion models have set impressive milestones in many generative tasks. Notable works such as DALL·E 2, Imagen, and Stable Diffusion (SD) have attracted great interest in academia and industry.

However, although these models perform remarkably well, each is essentially focused on one type of task, such as generating images from a given text. A different type of task usually requires separate dedicated training, or building a new model from scratch.

So can we build an "all-round" diffusion model on top of previous models and unify AIGC models? Some researchers are exploring this direction and have made progress.

A joint team from the University of Illinois at Urbana-Champaign and the University of Texas at Austin has extended the existing single-stream diffusion pipeline into a multi-stream network called Versatile Diffusion (VD), the first unified multi-stream multi-modal diffusion framework and a step towards general generative artificial intelligence.

Paper address: https://arxiv.org/abs/2211.08332

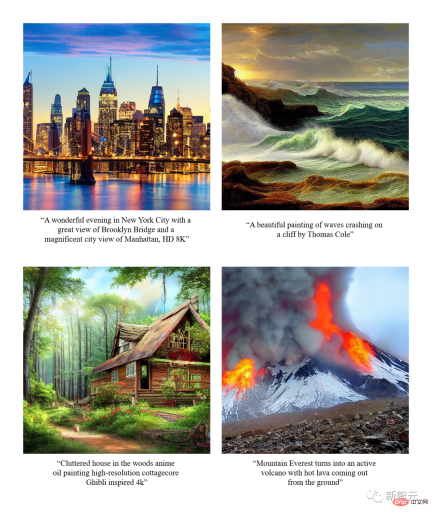

In addition to standard text-to-image generation, Versatile Diffusion can take an image and generate similar images, take an image and generate text, take text and generate similar text, edit images with semantics and style decoupled, generate videos from images and text, edit image content through the latent space, and more.

Future versions will also support more modalities such as speech, music, video and 3D.

According to the paper, VD and its underlying framework have been shown to have the following advantages:

a) It handles all subtasks with competitively high quality.

b) It supports new extensions and applications, such as the separation of image style and semantics, and dual-guided generation from both image and text.

c) These experiments and applications provide richer semantic insights into the generated output.

For training data, VD uses Laion2B-en with custom data filters as the main dataset.

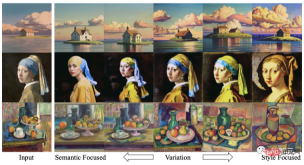

First explorations

One of the exciting findings about VD is that it can semantically enhance or reduce image style without further supervision.

This phenomenon inspired the authors to explore a completely new area, in which style and semantics can be separated for images of arbitrary style and arbitrary content.

The authors state that they are the first team to explore: a) the interpretation of the semantics and style of natural images without domain specification; b) semantic and style decomposition in the latent space of diffusion models.

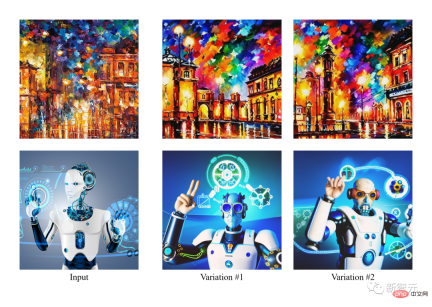

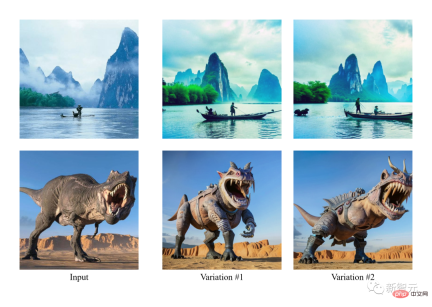

In the image below, the authors first generate variations of the input image and then manipulate them with a semantic (left) or stylistic (right) focus.

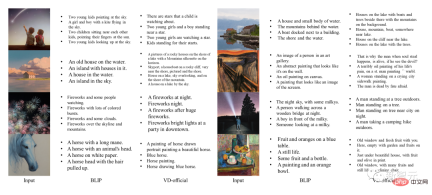

Since VD supports both image-to-text and text-to-image, the authors tried, for the first time, editing images from the perspective of text prompts by following these steps: a) convert the image to text, b) edit the text, c) convert the text back to an image.

In the experiment, the authors removed the described content from the image and then added new content using this image-text-image (I2T2I) paradigm. Unlike inpainting or other image editing methods that require object locations as input, VD's I2T2I needs no masks, because it automatically locates and replaces objects according to the instruction.

However, the output image of I2T2I does not match the input image pixel for pixel, a consequence of image-to-text semantic extraction followed by text-to-image content creation.

In the display below, the input image is first translated into a prompt, and then the prompt is edited using subtraction (red box) and addition (green box). Finally, the edited prompt is translated into an image.
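The three I2T2I steps can be sketched as a small pipeline. This is only a minimal illustration under stated assumptions, not VD's implementation: `image_to_text` and `text_to_image` are hypothetical callables standing in for the model's two directions, `edit_prompt` is a hypothetical helper, and plain string editing is assumed as a stand-in for the subtraction and addition applied to the prompt.

```python
from typing import Callable, Sequence, Tuple


def edit_prompt(prompt: str, remove: Sequence[str], add: Sequence[str]) -> str:
    """Hypothetical helper: drop the phrases in `remove`, then append those in `add`."""
    for phrase in remove:
        prompt = prompt.replace(phrase, "")
    # Collapse the whitespace left behind by the removals.
    prompt = " ".join(prompt.split())
    return ", ".join([prompt, *add]) if add else prompt


def i2t2i(
    image,
    remove: Sequence[str],
    add: Sequence[str],
    image_to_text: Callable,
    text_to_image: Callable,
) -> Tuple[object, str]:
    prompt = image_to_text(image)              # a) image -> text
    edited = edit_prompt(prompt, remove, add)  # b) edit the text
    return text_to_image(edited), edited       # c) text -> image


# Stub models so the sketch runs without any real network (assumptions).
def fake_captioner(image) -> str:
    return "a house on a lake with a boat"


def fake_generator(prompt: str) -> str:
    return f"<image of: {prompt}>"


new_image, new_prompt = i2t2i(
    "input.png",
    remove=["with a boat"],
    add=["snowy mountains"],
    image_to_text=fake_captioner,
    text_to_image=fake_generator,
)
print(new_prompt)  # a house on a lake, snowy mountains
```

Note that no mask or object location is passed anywhere: the edit is expressed purely in text, which is also why the regenerated image is not guaranteed to match the input pixel for pixel.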

In addition, they are also the first team to explore generating similar text from a given text.

Network Framework

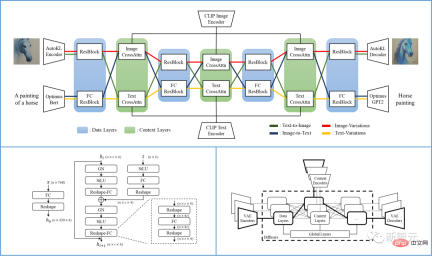

Specifically, the VD framework proposed in the paper is a multi-stream network that takes various types of data as input and as context.

The VD multi-stream multi-modal diffusion framework inherits the advantages of LDM/SD: an interpretable latent space, a modular structure and low computational cost.

VD can jointly train multiple streams, each representing a cross-modal task. Its core design is the grouping, sharing and swapping protocol inside the diffuser network, which adapts the framework to all supported tasks and beyond.

The diffuser is divided into three groups: global layers, data layers and context layers. The global layers are the time embedding layers, the data layers are the residual blocks, and the context layers are the cross-attention layers.

This grouping corresponds to the function of each layer. When working on multiple tasks, the global layers are shared among all tasks. The data layers and context layers contain multiple data streams, and each stream can be shared or swapped depending on the current data type and context type.

For example, when handling a text-to-image request, the diffuser uses the image data layers and the text context layers. When handling an image-variation task, it uses the image data layers and the image context layers.
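The sharing and swapping rule described above can be pictured as a lookup keyed by data type and context type. The following is a minimal sketch, not the authors' code: the layer names, the `TASKS` table and `select_layers` are hypothetical, and the image-to-text and text-variation rows are inferred from the same pattern rather than stated in the article.

```python
from typing import List

# One global group shared by every task (the time embedding layers).
GLOBAL_LAYER = "time_embedding"

# One data stream and one context stream per modality (hypothetical names).
DATA_LAYERS = {"image": "image_resblock", "text": "text_fcresblock"}
CONTEXT_LAYERS = {"image": "image_cross_attention", "text": "text_cross_attention"}

# Each task is a (data type, context type) pair.
TASKS = {
    "text_to_image": ("image", "text"),     # stated in the article
    "image_variation": ("image", "image"),  # stated in the article
    "image_to_text": ("text", "image"),     # inferred by analogy
    "text_variation": ("text", "text"),     # inferred by analogy
}


def select_layers(data_type: str, context_type: str) -> List[str]:
    """Return the layer groups activated for one (data, context) combination."""
    if data_type not in DATA_LAYERS:
        raise ValueError(f"unsupported data type: {data_type}")
    if context_type not in CONTEXT_LAYERS:
        raise ValueError(f"unsupported context type: {context_type}")
    return [GLOBAL_LAYER, DATA_LAYERS[data_type], CONTEXT_LAYERS[context_type]]


for task, (data_type, context_type) in TASKS.items():
    print(task, "->", select_layers(data_type, context_type))
```

In this picture, adding a modality means registering one more data stream and one more context stream, while the global group stays shared.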

A single VD stream contains a VAE, a diffuser and a context encoder, and handles one task (such as text-to-image) under one data type (such as image) and one context type (such as text).

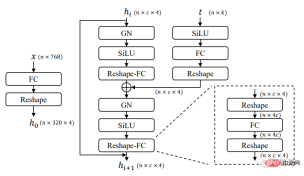

The multi-stream structure of Versatile Diffusion is shown in the figure below:

Building on Versatile Diffusion, the researchers further propose a general multi-stream multi-modal framework, which comprises VAEs, context encoders and a diffuser containing three groups of layers (global, data and context layers).

Diffuser:

VD uses the widely adopted cross-attention UNet as the main architecture of the diffuser network and divides its layers into global layers, data layers and context layers. The data layers and context layers each have two data streams, supporting images and text.

For the image data stream, VD follows LDM and uses residual blocks (ResBlock), whose spatial dimensions gradually decrease while the number of channels gradually increases.

For the text data stream, a new fully connected residual block (FCResBlock) expands the 768-dimensional text latent vector into 320*4 hidden features and follows a similar channel-increasing pattern, reusing GroupNorm, SiLU and skip connections just like a regular ResBlock.

As shown in the figure above, FCResBlock contains two sets of fully connected layers (FC), group normalization (GN) and sigmoid linear units (SiLU). x is the input text latent code, t is the input time embedding, and h_i are the intermediate features.
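To make the components concrete, here is a minimal pure-Python sketch of a block built from two FC/GN/SiLU sets with a time-embedding injection and a skip connection. The article only names the components, so the exact ordering of operations, the way t is injected, the projected skip path and all class and function names are assumptions modeled on a regular ResBlock. The demo uses small sizes for speed; the article's sizes would correspond to `dim_in=768` and `dim_out=320 * 4`.

```python
import math
import random


def silu(values):
    """Sigmoid linear unit: x * sigmoid(x), applied element-wise."""
    return [v / (1.0 + math.exp(-v)) for v in values]


def group_norm(values, groups, eps=1e-5):
    """Normalize each contiguous group of channels to zero mean, unit variance."""
    size = len(values) // groups
    out = []
    for g in range(groups):
        chunk = values[g * size:(g + 1) * size]
        mean = sum(chunk) / size
        var = sum((v - mean) ** 2 for v in chunk) / size
        out.extend((v - mean) / math.sqrt(var + eps) for v in chunk)
    return out


class Linear:
    """Fully connected layer with randomly initialized weights and zero bias."""

    def __init__(self, n_in, n_out, rng):
        scale = 1.0 / math.sqrt(n_in)
        self.w = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def __call__(self, values):
        return [sum(w * v for w, v in zip(row, values)) + b for row, b in zip(self.w, self.b)]


class FCResBlock:
    """Assumed layout: GN -> SiLU -> FC, add time features, GN -> SiLU -> FC, add skip."""

    def __init__(self, dim_in, dim_out, dim_time, groups, seed=0):
        rng = random.Random(seed)
        self.groups = groups
        self.fc1 = Linear(dim_in, dim_out, rng)
        self.fc_t = Linear(dim_time, dim_out, rng)
        self.fc2 = Linear(dim_out, dim_out, rng)
        self.skip = Linear(dim_in, dim_out, rng)  # projects x so it can be added back

    def __call__(self, x, t):
        h1 = self.fc1(silu(group_norm(x, self.groups)))
        h1 = [a + b for a, b in zip(h1, self.fc_t(silu(t)))]  # inject time embedding
        h2 = self.fc2(silu(group_norm(h1, self.groups)))
        return [a + b for a, b in zip(h2, self.skip(x))]      # skip connection


rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(16)]  # stand-in for the text latent code
t = [rng.gauss(0.0, 1.0) for _ in range(8)]   # stand-in for the time embedding
block = FCResBlock(dim_in=16, dim_out=32, dim_time=8, groups=4)
out = block(x, t)
print(len(out))  # 32
```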

For the context group, both the image and text streams use cross-attention layers, in which context embeddings manipulate the data features through projection layers, dot products, and sigmoids.

Variational Autoencoder (VAE):

VD adopts the Autoencoder-KL of the earlier Latent Diffusion Model (LDM) as the image data VAE, and Optimus as the text data VAE. Optimus consists of a BERT text encoder and a GPT2 text decoder, and can convert sentences bidirectionally to and from 768-dimensional normally distributed latent vectors.

Optimus also shows satisfactory VAE properties, with a reconstructable and interpretable text latent space. It was therefore chosen as the text VAE because it fits the prerequisites of a multi-stream multi-modal framework well.

Context Encoder:

VD uses the CLIP text and image encoders as context encoders. Unlike LDM and SD, which use only raw text embeddings as context input, VD uses the normalized and projected embeddings that minimize CLIP's text-image contrastive loss.

Experiments show that a closer embedding space between context types helps the model converge quickly and perform better. A similar conclusion is reached in DALL·E 2, which fine-tunes the text-to-image model with an additional projection layer to minimize the difference between text and image embeddings for image variation.
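As an illustration of what "normalized and projected" means here, the sketch below projects a raw text feature and a raw image feature into one shared space and L2-normalizes them, so that their dot product is a cosine similarity. It is a toy example under stated assumptions: `project`, `l2_normalize` and the hand-written matrices are hypothetical, whereas real CLIP projection weights are learned and far larger.

```python
import math


def project(vector, matrix):
    """Linear projection: one output value per matrix row."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Raw encoder outputs of different sizes (toy values).
text_raw = [1.0, 2.0, 2.0]
image_raw = [2.0, 4.0, 0.0, 1.0]

# Hypothetical projection matrices mapping both modalities into a shared 2-D space.
text_projection = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
image_projection = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]

text_embedding = l2_normalize(project(text_raw, text_projection))
image_embedding = l2_normalize(project(image_raw, image_projection))

# With unit-length embeddings, the dot product is the cosine similarity
# that a contrastive objective pushes up for matching text-image pairs.
similarity = dot(text_embedding, image_embedding)
print(round(similarity, 6))  # 1.0
```

Because both context types end up on the same unit sphere, a text context and an image context can feed the diffuser from comparable embedding spaces.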

Performance

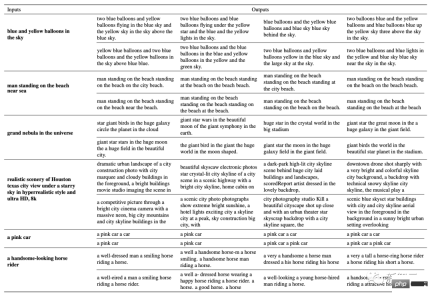

The authors used earlier single-task models as baselines and compared VD's results against them: SDv1.4 is the baseline for text-to-image, SD-variation for image variation, and BLIP for image-to-text.

The authors also conduct a qualitative comparison of the different VD models, in which VD-DC and VD-official are used for text-to-image and all three models are used for image variation.

The image samples of SD and VD are generated with controlled random seeds to better check the quality.

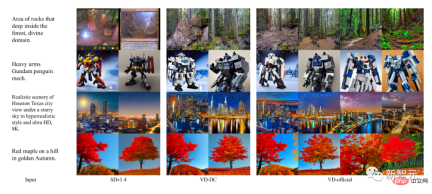

Text to Image Performance

Although DALL·E 2 and Imagen also achieve state-of-the-art results on these tasks, the authors skip comparing against them because no public code or training details are available.

The results show that the multi-stream structure and multi-task training help VD capture contextual semantics, generate output more accurately, and complete all sub-tasks very well.

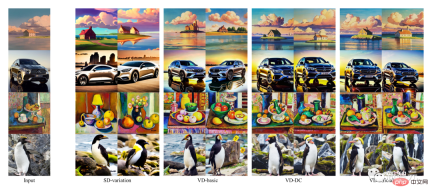

Image variation performance

In addition, the image captions generated by VD contain some creative words. By comparison, BLIP's outputs are very short and lack descriptions of detail.

Image to text performance

Showcase

Text to image

Image variations

Semantically focused image variations

Dual guidance

Summary

- The authors introduce Versatile Diffusion (VD), a multi-stream multi-modal diffusion network that handles text, images, and variations in one unified model. Based on VD, the authors further introduce a general multi-stream multi-modal framework that can be extended to new tasks and domains.

- Through experiments, the authors found that VD produces high-quality output on all supported tasks: VD's text-to-image and image-variation results capture the semantics of the context better, and VD's image-to-text results are creative and illustrative.

- Given the multi-stream multi-modal nature of VD, the authors introduce novel extensions and applications that may further benefit downstream users of this technology.

Team Introduction

The IFP team at the University of Illinois at Urbana-Champaign was founded by Professor Huang Xutao in the 1980s, originally as the Image Formation and Processing group of the Beckman Institute for Advanced Science and Technology.

Over the years, IFP has been committed to research and innovation beyond images, including image and video coding, multi-modal human-computer interaction, multimedia annotation and search, computer vision and pattern recognition, machine learning, big data, deep learning, and high-performance computing.

IFP's current research direction is to solve multi-modal information processing problems by combining big data, deep learning and high-performance computing.

In addition, IFP has won several best paper awards at top conferences in artificial intelligence and has won many international competitions, including the first NIST TRECVID, the first ImageNet Challenge and the first AI City Challenge.

Interestingly, since Professor Huang began teaching at MIT in the 1960s, the "members" of the IFP group have included friends, students, students' students, and even students of students' students.
