'Image generation technology' wandering on the edge of the law: This paper teaches you to avoid becoming a 'defendant'


In recent years, AI-generated content (AIGC) has attracted a great deal of attention. It spans images, text, audio, video, and more. However, AIGC is a double-edged sword, and its irresponsible use has drawn criticism and controversy.

If image generation technology is used improperly, you could end up as a "defendant".

Recently, researchers from Sony AI and BAAI (Beijing Academy of Artificial Intelligence) examined the current problems of AIGC from several angles and discussed how to make AI-generated content more responsible.

Paper link: https://arxiv.org/pdf/2303.01325.pdf

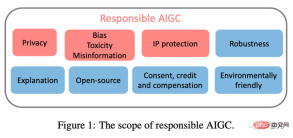

The paper focuses on three main issues that may hinder the healthy development of AIGC: (1) privacy; (2) bias, toxicity, and misinformation; (3) intellectual property (IP) risks.

By documenting known and potential risks, along with possible AIGC abuse scenarios, the paper aims to raise awareness of these risks and to offer directions for addressing them, so that AIGC develops in a more ethical and safe direction for the benefit of society.

Privacy

Large-scale foundation models are known to suffer from a range of privacy leakage problems.

Previous research has shown that attackers can sample sequences from a trained GPT-2 model and identify which of them were memorized from the training set. [Kandpal et al., 2022] attribute the success of these privacy attacks to duplicate data in the training set, and show that sequences appearing multiple times are more likely to be generated than sequences appearing only once.

Since AIGC models are trained on large-scale web-scraped data, overfitting and privacy leakage become particularly important issues.

For example, the Stable Diffusion model memorizes images that are repeated in its training data [Rombach et al., 2022c]. [Somepalli et al., 2022] demonstrated that a Stable Diffusion model blatantly copies images from its training data and generates simple combinations of foreground and background objects from the training set.

Additionally, the model can reconstruct content from memory, producing objects that are semantically identical to the original but differ at the pixel level. The existence of such images raises concerns about data memorization and ownership.

Similarly, recent research shows that Google's Imagen system can also leak photos of real people and copyrighted images. In his recent lawsuit [Butterick, 2023], Matthew Butterick argued that because all visual information in the system comes from copyrighted training images, the generated images, regardless of their appearance, are necessarily derived from those training images.

DALL·E 2 suffered from a similar problem: it would sometimes reproduce images from its training data instead of creating new ones.

OpenAI found that this happened because those images were duplicated many times in the dataset. ChatGPT itself has likewise acknowledged that it carries a risk of privacy leakage.

To alleviate the privacy leakage problem of large models, many companies and researchers have invested heavily in privacy defenses. At the industry level, Stability AI has acknowledged the limitations of Stable Diffusion.

To this end, they provide a website (https://rom1504.github.io/clip-retrieval/) for identifying images memorized by Stable Diffusion.

In addition, the art company Spawning AI has created a website called "Have I Been Trained" (https://haveibeentrained.com) to help users check whether their photos or works have been used for AI training.

OpenAI has tried to address privacy issues by reducing duplication in its training data.
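As a rough illustration of what reducing data duplication can mean in practice, the sketch below removes byte-identical items from a collection by hashing their content. This is a simple stand-in and not OpenAI's actual method, which the article does not describe; the `deduplicate` helper and the sample data are hypothetical. Exact hashing only catches identical copies, so near-duplicates (resized or re-encoded images) would need a similarity-based approach instead.

```python
import hashlib


def deduplicate(records):
    """Keep the first occurrence of each record, comparing by SHA-256 of its bytes."""
    seen = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(record).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique


# Hypothetical training items: raw bytes standing in for image files.
samples = [b"image-a", b"image-b", b"image-a", b"image-c", b"image-b"]
unique_samples = deduplicate(samples)
print(len(samples), "->", len(unique_samples))
```

Keeping the first occurrence preserves the original ordering of the dataset, which makes the result easy to compare against the input.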

In addition, companies such as Microsoft and Amazon have barred employees from sharing sensitive data with ChatGPT to prevent leaks of confidential information, because such data could be used to train future versions of ChatGPT.

At the academic level, Somepalli et al. studied an image retrieval framework for identifying content duplication, and Dockhorn et al. proposed differentially private diffusion models to protect the privacy of generative models.
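The retrieval idea can be sketched as a nearest-neighbor check: represent each image as a feature vector, then flag training images whose vectors are very close to that of a generated image. The code below is a minimal sketch under stated assumptions and is not the framework from Somepalli et al.; the feature vectors, the identifiers, and the 0.95 threshold are all hypothetical placeholders for what a real feature extractor and a tuned cutoff would provide.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_likely_copies(generated, training, threshold=0.95):
    """Return (training_id, similarity) pairs at or above the threshold, highest first."""
    scored = [(tid, cosine_similarity(generated, vec)) for tid, vec in training.items()]
    matches = [(tid, score) for tid, score in scored if score >= threshold]
    return sorted(matches, key=lambda m: m[1], reverse=True)


# Hypothetical feature vectors; a real system would compute them with an image encoder.
training_features = {
    "train_001": [0.9, 0.1, 0.0],
    "train_002": [0.0, 1.0, 0.0],
    "train_003": [0.1, 0.2, 0.97],
}
generated_features = [0.88, 0.12, 0.01]

for train_id, score in find_likely_copies(generated_features, training_features):
    print(train_id, round(score, 3))
```

With these placeholder vectors only `train_001` clears the threshold. A linear scan is enough for a toy example; at web scale an approximate nearest-neighbor index would normally replace it.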

Bias, toxicity, misinformation

The training data for AIGC models comes from the real world. However, this data may inadvertently reinforce harmful stereotypes, exclude or marginalize certain groups, and include toxic sources that may incite hatred or violence and offend individuals [Weidinger et al., 2021].

Models trained or fine-tuned on these problematic datasets may inherit harmful stereotypes, social biases and toxicity, or even generate misinformation that leads to unfair discrimination and harm to certain social groups.

For example, the Stable Diffusion v1 model is primarily trained on the LAION-2B dataset, which only contains images with English descriptions. Therefore, the model is biased toward white people and Western cultures, and prompts in other languages may not be adequately represented.

While subsequent versions of the Stable Diffusion model were fine-tuned on filtered versions of the LAION dataset, issues of bias persisted. Likewise, DALL·E, DALL·E 2 and Imagen also exhibit social bias and negative stereotypes of minority groups.

Additionally, Imagen has been shown to have social and cultural biases even when generating images of non-humans. Due to these issues, Google decided not to make Imagen available to the public.

To illustrate the inherent bias of AIGC models, we tested Stable Diffusion v2.1. The people in the images generated from the prompt "Three engineers running on the grassland" were all male, and none belonged to a neglected minority group, which illustrates the lack of diversity in the generated images.

In addition, AIGC models may also produce incorrect information. For example, content generated by GPT and its derivatives may appear accurate and authoritative while containing completely false information.

As a result, such models may provide misleading information in areas such as education, law, medicine, and weather forecasting. In the medical field, for example, answers from ChatGPT about medication dosages may be inaccurate or incomplete, which could be life-threatening. In transportation, drivers who follow incorrect traffic rules given by ChatGPT could cause accidents or even deaths.
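A test like this can be turned into a simple tally once each generated image has been annotated. The sketch below is only an illustration: the labels are hypothetical stand-ins for human annotations, not data from the paper, and `attribute_shares` is a helper invented for this example.

```python
from collections import Counter


def attribute_shares(labels):
    """Return the fraction of annotations carrying each label."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


# Hypothetical annotations of people in a batch of generated images.
perceived_gender = ["male"] * 9
shares = attribute_shares(perceived_gender)
print(shares)
```

A share of 1.0 for a single label, as in this hypothetical batch, is the kind of signal that would prompt a closer look at the prompt, the model, and the training data.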

Many defensive measures have been taken against problematic data and models.

OpenAI carefully filtered the original training dataset and removed violent and pornographic content from the DALL·E 2 training data. However, filtering may itself introduce bias into the training data, and that bias then propagates to downstream models.

To address this, OpenAI developed pre-training techniques to mitigate the bias caused by filtering. In addition, to ensure that AIGC models reflect current social conditions, researchers must regularly update the datasets the models use, which helps prevent the negative effects of outdated information.
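The article does not spell out how the filter-induced bias is mitigated. One general way to counter a filter that removes some groups of data more than others is to reweight the surviving examples so that each group's share matches what it was before filtering. The sketch below illustrates that idea only; the category names, the counts, and the `reweighting_factors` helper are hypothetical, and this is not presented as OpenAI's implementation.

```python
def reweighting_factors(unfiltered_counts, filtered_counts):
    """Per-category weights that restore each category's pre-filter share."""
    total_before = sum(unfiltered_counts.values())
    total_after = sum(filtered_counts.values())
    weights = {}
    for category, kept in filtered_counts.items():
        if kept == 0:
            continue  # nothing left to reweight in this category
        share_before = unfiltered_counts[category] / total_before
        share_after = kept / total_after
        weights[category] = share_before / share_after
    return weights


# Hypothetical counts: the filter removed far more of category_b than category_a.
before = {"category_a": 500, "category_b": 500}
after = {"category_a": 450, "category_b": 300}

weights = reweighting_factors(before, after)
for category, weight in weights.items():
    print(category, round(weight, 3))
```

In this example the over-filtered category receives a weight above 1 and the other a weight below 1, so the weighted dataset returns to the original 50/50 balance.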

It is worth noting that although biases and stereotypes in source data can be reduced, they may still be spread or even exacerbated during the training and development of the AIGC model. Therefore, it is critical to assess the presence of bias, toxicity, and misinformation throughout the model training and development lifecycle, not just at the data source level.

Intellectual Property (IP)

With the rapid development and widespread application of AIGC, the copyright issue of AIGC has become particularly important.

In November 2022, Matthew Butterick filed a class action lawsuit against Microsoft subsidiary GitHub, alleging that its code generation service Copilot violates copyright law. Text-to-image models face similar claims: some generative models have been accused of infringing artists' rights in their original works.

[Somepalli et al., 2022] shows that images generated by Stable Diffusion may be copied from the training data. Although Stable Diffusion disclaims any ownership of the generated images and lets users use them freely as long as the content is legal and harmless, this freedom has still triggered fierce disputes over copyright.

Generative models like Stable Diffusion are trained on large numbers of images from the Internet without authorization from the intellectual property holders, and some therefore believe this violates their rights.

To address intellectual property issues, many AIGC companies have taken action.

For example, Midjourney has included a DMCA takedown policy in its terms of service, allowing artists to request that their work be removed from the dataset if they suspect copyright infringement.

Similarly, Stability AI plans to offer artists the option of excluding their work from the training set for future versions of Stable Diffusion. Additionally, text watermarks [He et al., 2022a; He et al., 2022b] can also be used to identify whether these AIGC tools use samples from other sources without permission.

For example, Stable Diffusion produced images with a Getty Images watermark [Vincent, 2023].

OpenAI is developing watermarking technology to identify text generated by GPT models, which educators could use to detect plagiarism in assignments. Google has also applied a Parti watermark to the images it releases. In addition to watermarks, OpenAI recently released a classifier for distinguishing AI-generated text from human-written text.

Conclusion

Although AIGC is still in its infancy, it is expanding rapidly and will remain active for the foreseeable future.

In order for users and companies to fully understand these risks and take appropriate measures to mitigate these threats, we summarize the current and potential risks in the AIGC model in this article.

If these potential risks are not fully understood, and appropriate risk prevention measures and safety guarantees are not adopted, the development of AIGC may face significant challenges and regulatory obstacles. Therefore, we need broader community participation to contribute to responsible AIGC.

Finally, thank you SonyAI and BAAI!
