The first multimodal science question answering dataset with detailed explanations: deep learning model reasoning gets a chain of thought

When answering complex questions, humans can understand information in different modalities and form a complete chain of thought (CoT). Can a deep learning model open the "black box" and provide a chain of thought for its reasoning process? Recently, UCLA and the Allen Institute for AI (AI2) proposed ScienceQA, the first multimodal science question answering dataset with detailed explanations, to test the multimodal reasoning capabilities of models. On the ScienceQA task, the authors propose GPT-3 (CoT), which introduces chain-of-thought prompting into GPT-3 so that the model generates a reasoning explanation along with its answer. GPT-3 (CoT) achieves 75.17% accuracy on ScienceQA, and human evaluation shows that it can generate high-quality explanations.

Learning and completing complex tasks as effectively as humans is one of the long-term goals of artificial intelligence. When making decisions, humans can follow a complete chain-of-thought (CoT) reasoning process and give reasonable explanations for their answers.

However, most existing machine learning models rely on training with large numbers of input-output examples to complete specific tasks. These black-box models often generate the final answer directly, without revealing the reasoning behind it.

Science question answering is a good way to diagnose whether an AI model has multi-step reasoning ability and interpretability. To answer a science question, a model must not only understand multimodal content but also draw on external knowledge to arrive at the correct answer. A reliable model should also provide an explanation that reveals its reasoning process. However, most existing science question answering datasets lack detailed explanations of the answers or are limited to the text modality.

The authors therefore collected a new science question answering dataset, ScienceQA, which contains 21,208 multiple-choice questions from primary and secondary school science curricula. A typical question includes a multimodal context, the correct option, general background knowledge (lecture), and a specific explanation.

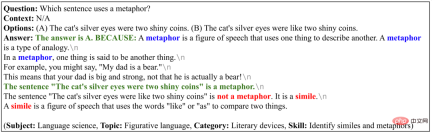

An example of the ScienceQA dataset.

To answer the example shown above, we must first recall the definition of force: "A force is a push or a pull that ... The direction of a push is ... The direction of a pull is ...". We then form a multi-step reasoning process: "The baby's hand applies a force to the cabinet door. → This force causes the door to open. → The direction of this force is toward the baby's hand." Finally, we arrive at the correct answer: "This force is a pull."
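The parts of a question described above (context, options, lecture, explanation) can be pictured as a small record. The following is a minimal sketch only: the class name, the field names, and the wording of the question are assumptions made for illustration and are not the dataset's official schema. The lecture and explanation text is taken from the example above, with the elided lecture left elided.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScienceQAExample:
    """One multiple-choice item. Field names are illustrative, not official."""
    question: str
    choices: List[str]
    answer_index: int             # index into `choices`
    text_context: Optional[str]   # textual context, if any
    image_path: Optional[str]     # visual context, if any
    lecture: str                  # general background knowledge
    explanation: str              # specific multi-step explanation

    def answer_text(self) -> str:
        """Return the text of the correct option."""
        return self.choices[self.answer_index]


example = ScienceQAExample(
    # Hypothetical question wording; the article does not quote the question.
    question="Which type of force from the baby's hand opens the cabinet door?",
    choices=["push", "pull"],
    answer_index=1,
    text_context=None,
    image_path="image-1.png",
    lecture="A force is a push or a pull that ...",
    explanation=(
        "The baby's hand applies a force to the cabinet door. "
        "This force causes the door to open. "
        "The direction of this force is toward the baby's hand. "
        "This force is a pull."
    ),
)

print(example.answer_text())
```

Keeping the lecture and the explanation as separate fields mirrors the distinction the article draws between general background knowledge and the question-specific reasoning.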

In the ScienceQA task, the model must predict the answer and output a detailed explanation. In this work, the authors use a large language model to generate background knowledge and explanations as a chain of thought (CoT), imitating humans' multi-step reasoning ability.

Experiments show that current multimodal question answering methods do not perform well on the ScienceQA task. In contrast, with chain-of-thought prompting, GPT-3 reaches 75.17% accuracy on the ScienceQA dataset and generates high-quality explanations: in human evaluation, 65.2% of its explanations were judged relevant, correct, and complete. Chain of thought also helps the UnifiedQA model improve by 3.99% on the ScienceQA dataset.

- Paper link: https://arxiv.org/abs/2209.09513

- Code link: https://github.com/lupantech/ScienceQA

- Project homepage: https://scienceqa.github.io/

- Data visualization: https://scienceqa.github.io/explore.html

- Leaderboard: https://scienceqa.github.io/leaderboard.html

1. The ScienceQA dataset

Dataset statistics

ScienceQA’s main statistics are shown below.

Main statistics of the ScienceQA dataset.

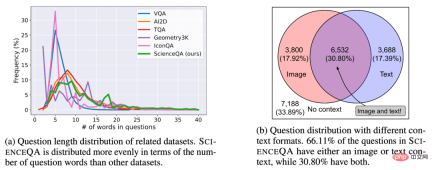

ScienceQA contains 21,208 examples, including 9,122 unique questions. 10,332 questions (48.7%) have a visual context, 10,220 (48.2%) have a textual context, and 6,532 (30.8%) have both a visual and a textual context. The vast majority of questions are annotated with detailed explanations: 83.9% have a background-knowledge annotation (lecture), and 90.5% have a detailed explanation of the answer (explanation).
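The percentages above can be checked directly from the counts. The sketch below also derives, by inclusion-exclusion, how many questions have at least one kind of context and how many have none. This derivation assumes the third figure counts questions that have both a visual and a textual context; the variable names are illustrative.

```python
TOTAL = 21208        # all examples
with_image = 10332   # questions with a visual context
with_text = 10220    # questions with a textual context
with_both = 6532     # assumed: questions with both kinds of context


def share(count: int, total: int = TOTAL) -> float:
    """Percentage of the dataset, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Inclusion-exclusion: |image or text| = |image| + |text| - |both|
with_any = with_image + with_text - with_both
without_context = TOTAL - with_any

print(share(with_image), share(with_text), share(with_both))
print(with_any, share(with_any))
print(without_context, share(without_context))
```

Under that assumption, roughly two thirds of the questions come with some context and about one third are answered from the question text alone.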

Distribution of questions and contexts in the ScienceQA dataset.

Dataset topic distribution

Unlike existing datasets, ScienceQA covers three major subjects (natural science, social science, and language science), spanning 26 topics, 127 categories, and 379 skills.

Topic distribution of ScienceQA.

Word cloud distribution of the data set

As the word cloud shows, questions in ScienceQA are semantically diverse. Models need to understand different question formulations, scenarios, and background knowledge.

Word cloud distribution of ScienceQA.

Dataset comparison

ScienceQA is the first multimodal science question answering dataset with detailed explanations. Compared with existing datasets, ScienceQA has advantages in data size, question-type diversity, topic diversity, and other dimensions.

Comparison of the ScienceQA dataset with other science question answering datasets.

2. Models and methods

Baselines

The authors evaluate a range of baseline methods on the ScienceQA dataset, including VQA models such as Top-Down Attention, MCAN, BAN, DFAF, ViLT, Patch-TRM, and VisualBERT; large language models such as UnifiedQA and GPT-3; and random chance and human performance. For the language models UnifiedQA and GPT-3, context images are converted into text captions.

GPT-3 (CoT)

Recent research has shown that, given appropriate prompts, GPT-3 can perform very well on a variety of downstream tasks. The authors therefore propose GPT-3 (CoT), which adds a chain of thought (CoT) to the prompt so that the model generates the corresponding background knowledge and explanation along with its answer.
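To make the idea of a chain-of-thought prompt concrete, here is a minimal sketch of how a few-shot prompt might be assembled. Each in-context example shows the question, context, and options, followed by the answer and then the lecture and explanation. The test question ends with an open "Answer:" for the model to complete. The field labels, the "BECAUSE:" separator, the function names, the image caption, and the second question are all assumptions made for illustration; they are not confirmed to be the authors' exact template.

```python
from typing import Dict, List

LETTERS = "ABCDE"


def format_question(ex: Dict, with_output: bool) -> str:
    """Render one example; include answer + lecture + explanation if requested."""
    options = " ".join(f"({LETTERS[i]}) {c}" for i, c in enumerate(ex["choices"]))
    lines = [
        f"Question: {ex['question']}",
        # For image contexts, a text caption stands in for the picture.
        f"Context: {ex.get('context') or 'N/A'}",
        f"Options: {options}",
    ]
    if with_output:
        letter = LETTERS[ex["answer"]]
        lines.append(
            f"Answer: The answer is {letter}. BECAUSE: {ex['lecture']} {ex['explanation']}"
        )
    else:
        lines.append("Answer:")
    return "\n".join(lines)


def build_prompt(train_examples: List[Dict], test_example: Dict) -> str:
    """Concatenate solved in-context examples and one unsolved test question."""
    blocks = [format_question(e, with_output=True) for e in train_examples]
    blocks.append(format_question(test_example, with_output=False))
    return "\n\n".join(blocks)


# Hypothetical data, loosely based on the force example in this article.
train = [{
    "question": "Which type of force from the baby's hand opens the cabinet door?",
    "context": "A baby opening a cabinet door.",  # made-up image caption
    "choices": ["push", "pull"],
    "answer": 1,
    "lecture": "A force is a push or a pull that ...",
    "explanation": "The direction of this force is toward the baby's hand. This force is a pull.",
}]
test = {
    "question": "Is moving a shopping cart forward a push or a pull?",  # made-up
    "context": None,
    "choices": ["push", "pull"],
}

prompt = build_prompt(train, test)
print(prompt)
```

The resulting string would be sent to the language model, and the text it produces after the final "Answer:" would contain both the predicted option and its explanation.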

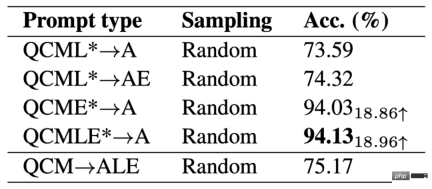

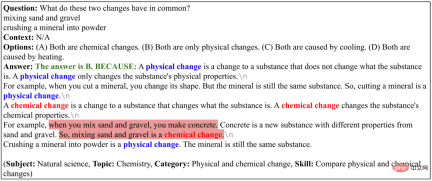

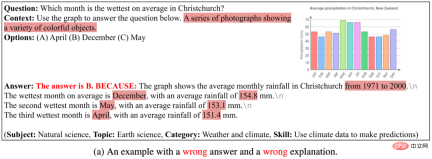

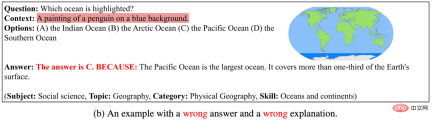

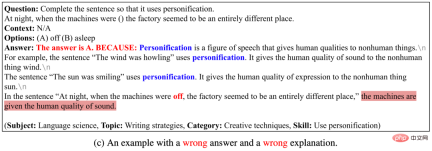

Prompt template used by GPT-3 (CoT).

3. Experiments and analysis

The accuracy of the different baselines and methods on the ScienceQA test set is shown in the table below. VisualBERT, one of the best current VQA models, reaches only 61.87% accuracy. When CoT data is introduced during training, the UnifiedQA_BASE model reaches 74.11%. GPT-3 (CoT), prompted with 2 training examples, reaches 75.17%, higher than the other baseline models. Humans perform well on the ScienceQA dataset, with an overall accuracy of 88.40% and stable performance across question categories.

Evaluation of generated explanations

The authors use automatic metrics such as BLEU-1, BLEU-2, ROUGE-L, and sentence similarity to evaluate the explanations generated by different methods. Because automatic metrics only measure the similarity between the predictions and the annotations, the authors also ran a human evaluation of the relevance, correctness, and completeness of the generated explanations. In that evaluation, 65.2% of the explanations generated by GPT-3 (CoT) were relevant, correct, and complete.

Different prompt templates

The authors compared the impact of different prompt templates on the accuracy of GPT-3 (CoT). Under the QCM-ALE template, GPT-3 (CoT) obtains the highest average accuracy and the smallest variance. In addition, GPT-3 (CoT) performs best when prompted with 2 training examples.

Model upper bound

To explore the performance upper bound of GPT-3 (CoT), the authors added the annotated background knowledge and explanation to the model input (QCMLE*-A). In this setting, GPT-3 (CoT) reaches up to 94.13% accuracy. This suggests a possible direction for improvement: the model could reason step by step, first retrieving accurate background knowledge and generating an accurate explanation, and then using these results as input. This process is very similar to how humans solve complex problems.

Performance upper bound of the GPT-3 (CoT) model.

Different ALE positions

The authors further examine how the position of A, L, and E in the output affects the predictions of GPT-3 (CoT). Experimental results on ScienceQA show that if GPT-3 (CoT) generates the background knowledge L or the explanation E first and the answer A afterwards, its prediction accuracy drops significantly. The main reason is that L and E contain many words. If LE is generated first, GPT-3 may exhaust its maximum output length or stop generating early, so that the final answer A is never produced.

Successful cases

In the following 4 examples, GPT-3 (CoT) not only generates the correct answer but also gives a relevant, correct, and complete explanation. This shows that GPT-3 (CoT) exhibits strong multi-step reasoning and explanation capabilities on the ScienceQA dataset.

Examples where GPT-3 (CoT) generates correct answers and explanations.

Failure cases I

In these examples, GPT-3 (CoT) generates the correct answer, but the generated explanation is irrelevant, incorrect, or incomplete. This shows that GPT-3 (CoT) still has considerable difficulty generating long, logically consistent sequences.

Examples where GPT-3 (CoT) generates the correct answer but an incorrect explanation.

Failure cases II

In the following four examples, GPT-3 (CoT) generates neither the correct answer nor a correct explanation. The reasons are: (1) current image captioning models cannot accurately describe the semantic content of schematic diagrams, tables, and similar images, so when an image is represented by its caption text, GPT-3 (CoT) cannot yet answer questions whose context is a chart or diagram; (2) when GPT-3 (CoT) generates long sequences, the output is prone to inconsistency or incoherence; (3) GPT-3 (CoT) cannot yet answer questions that require domain-specific knowledge.

Examples where GPT-3 (CoT) generates incorrect answers and explanations.

The authors propose ScienceQA, the first multimodal science question answering dataset with detailed explanations. ScienceQA contains 21,208 multiple-choice questions from primary and secondary school science subjects, covering three major subject areas and a wide variety of topics. Most questions are annotated with detailed background knowledge and explanations. ScienceQA evaluates a model's capabilities in multimodal understanding, multi-step reasoning, and interpretability. The authors evaluate different baseline models on the ScienceQA dataset and propose GPT-3 (CoT), which generates the corresponding background knowledge and explanation along with its answer. Extensive experimental analyses and case studies offer useful guidance for improving such models.

Different LE locations.
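The effect of output order reported in the article can be illustrated with a toy simulation. If the lecture (L) and explanation (E) come before the answer (A) and the output is cut off at a length limit, the answer may never appear. If the answer comes first, it survives truncation. This is a sketch under simplifying assumptions: the limit is counted in whitespace-separated words rather than model tokens, the texts are repeated stand-in sentences, and the helper names and the "The answer is X." pattern are hypothetical.

```python
import re
from typing import Optional


def truncate_words(text: str, max_words: int) -> str:
    """Simulate a generation length limit by keeping the first `max_words` words."""
    return " ".join(text.split()[:max_words])


def extract_answer(text: str) -> Optional[str]:
    """Return the option letter from 'The answer is X.', or None if absent."""
    match = re.search(r"The answer is ([A-E])\.", text)
    return match.group(1) if match else None


# Stand-in texts: long lecture and explanation, short answer.
lecture = "A force is a push or a pull. " * 10
explanation = "The baby's hand pulls the door toward it. " * 5
answer = "The answer is B."

ale_output = f"{answer} BECAUSE: {lecture}{explanation}"   # answer first
lea_output = f"{lecture}{explanation}Therefore: {answer}"  # answer last

budget = 40  # hypothetical word budget, shorter than lecture + explanation

print(extract_answer(truncate_words(ale_output, budget)))  # B
print(extract_answer(truncate_words(lea_output, budget)))  # None
```

With a generous budget both orders yield the answer; the difference only shows up when the lecture and explanation use up the available length, which matches the reason the article gives for the accuracy drop.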

