How do we ensure healthcare AI is useful?

In the grand scheme of the business of healthcare, predictive models play a role no different than a blood test, X-ray, or MRI: they influence decisions about whether an intervention is appropriate.

"Broadly speaking, models perform mathematical operations and produce probability estimates that help doctors and patients decide whether to take action," said Nigam Shah, chief data scientist at Stanford Health Care and a Stanford University HAI faculty member. But these probability estimates are only useful to health care providers if they trigger more beneficial decisions.

"As a community, I think we are obsessed with the performance of the model, rather than asking, does this model work?" Shah said. "We need to think outside the box."

Shah's team is one of the few health care research groups to assess whether hospitals have the capacity to deliver interventions based on the model, and whether those interventions will benefit patients and health care organizations.

"There is growing concern that AI researchers are building models left and right without deploying anything," Shah said. One reason is that modelers fail to conduct usefulness analyses showing how interventions triggered by the model can be cost-effectively integrated into hospital operations while doing more good than harm. "If model developers are willing to take the time to do this additional analysis, hospitals will pay attention," he said.

Tools for usefulness analysis already exist in operations research, health care policy and econometrics, Shah said, but model developers in health care have been slow to use them. He and his team have tried to change this mentality by publishing a number of papers urging more people to evaluate the usefulness of their models. These include a JAMA paper addressing the need for modelers to consider usefulness, and a research paper that proposes a framework for analyzing the usefulness of predictive models in health care and shows how it works using real-world examples.

"Like anything new that a hospital might add to its operations, deploying a new model must be worthwhile," Shah said. "There are mature frameworks in place to determine the value of the model. Now it's time for modelers to put them to use."

Understand the interplay between models, interventions, and the benefits and harms of interventions

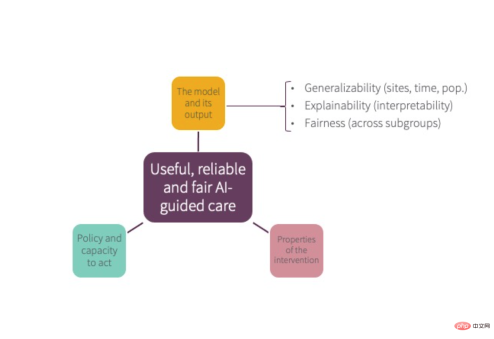

As shown in the figure above, the usefulness of a model depends on the interplay between three factors: the model itself, the intervention it triggers, and the benefits and harms of that intervention, Shah said.

First, the model, which often gets the most attention, should be good at predicting whatever it is supposed to predict, whether that is a patient's risk of readmission to the hospital or their risk of developing diabetes. Additionally, Shah said, it must be equitable, meaning the predictions it produces apply equally to everyone regardless of race, ethnicity, nationality or gender; it must be generalizable from one hospital site to another, or at least make reliable predictions about the local hospital population; and it should be interpretable.

Second, health care organizations must develop policies about when and how to intervene based on a test or model, and decide who is responsible for the intervention. They must also have the capacity (sufficient staff, materials, or other resources) to perform the intervention.

Shah said that policies about whether and how to intervene in response to a model affect health equity. When it comes to equity, Shah said, "Researchers spend too much time focusing on whether a model is equally accurate for everyone, and not enough time focusing on whether the intervention will benefit everyone equally, even though most of the inequities we try to address arise from the latter."

For example, a model that predicts which patients will not show up for their appointments may not be unfair in itself if its predictions are equally accurate for all racial and ethnic groups, but the choice of how to intervene (double-booking the appointment slot or providing transportation support to help people get to their appointments) may affect different groups of people differently.

Third, the benefits of the intervention must outweigh the harms. Any intervention can have both positive and negative consequences, Shah said, so the usefulness of a model prediction will depend on the benefits and harms of the intervention it triggers.

To understand this interaction, consider a commonly used predictive model: the atherosclerotic cardiovascular disease (ASCVD) risk equation, which relies on nine major data points (age, sex, race, total cholesterol, LDL/HDL cholesterol, blood pressure, smoking history, diabetes status, and use of antihypertensive medications) to calculate a patient's 10-year risk of heart attack or stroke. A thorough usefulness analysis of the ASCVD risk equation would consider the three parts of the figure above and find it useful, Shah said.

First, the model is widely considered to be highly predictive of heart disease, and is also fair, generalizable, and interpretable. Second, most medical institutions follow standard policies that tie statin prescriptions to risk levels, and they have sufficient capacity to intervene because statins are widely available. Finally, a harm/benefit analysis of statin use suggests that most people benefit from statins, although some patients cannot tolerate their side effects.

An example of model usefulness analysis: Advance care planning

The ASCVD example above, while illustrative, is probably one of the simplest predictive models. But predictive models have the potential to trigger interventions that disrupt healthcare workflows in more complex ways, and the benefits and harms of some interventions may be less clear.

To address this issue, Shah and colleagues developed a framework to test whether predictive models are useful in practice. They demonstrated the framework using a model that triggers an intervention called advance care planning (ACP).

ACP is typically provided to patients who are nearing the end of their life and involves an open and honest discussion of possible future scenarios and the patient’s wishes should they become incapacitated. Not only do these conversations give patients a sense of control over their lives, they also reduce health care costs, improve physician morale, and sometimes even improve patient survival rates.

Shah's team at Stanford developed a model that can predict which hospital patients are likely to die in the next 12 months. The goal: to identify patients who may benefit from ACP. After ensuring that the model predicted mortality well and was fair, interpretable and reliable, the team conducted two additional analyses to determine whether the interventions triggered by the model were useful.

The first was a cost-benefit analysis, which found that a successful intervention (providing ACP to patients correctly identified by the model as likely to benefit) would save approximately $8,400, while providing the intervention to those who did not need ACP (i.e., model errors) would cost approximately $3,300. "In this case, very roughly speaking, even if we were only a third right, we would break even," Shah said.
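The break-even reasoning in that quote can be checked with a few lines of arithmetic. The sketch below is only an illustration, not the team's actual analysis: it assumes the $8,400 is a net saving per correctly flagged patient and the $3,300 is a net cost per incorrectly flagged patient, and it ignores missed patients and staffing limits. The function names are hypothetical.

```python
def expected_value_per_flag(precision, saving_if_right=8400.0, cost_if_wrong=3300.0):
    """Expected net saving per flagged patient.

    `precision` is the fraction of flagged patients who truly benefit from ACP.
    The dollar defaults are the approximate figures quoted in the article;
    treating them as per-patient net amounts is an assumption of this sketch.
    """
    return precision * saving_if_right - (1.0 - precision) * cost_if_wrong


def break_even_precision(saving_if_right=8400.0, cost_if_wrong=3300.0):
    """Precision at which the expected value per flagged patient is zero.

    Solves p * saving - (1 - p) * cost = 0 for p.
    """
    return cost_if_wrong / (saving_if_right + cost_if_wrong)


if __name__ == "__main__":
    p_star = break_even_precision()
    print(f"break-even precision: {p_star:.3f}")
    print(f"expected value at one-third precision: {expected_value_per_flag(1 / 3):.2f}")
```

Under these assumptions the break-even precision comes out a little under 0.3, which is consistent with the "only a third right" rule of thumb.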

But the analysis did not stop there. “To save those $8,400 that was promised, we actually had to implement a workflow that involved, say, 21 steps, three people and seven handoffs in 48 hours,” Shah said. "So, in real life, can we do that?"

To answer this question, the team simulated the intervention over 500 hospital days to assess how care delivery factors, such as limited staff or lack of time (due to patient discharge), would affect the benefit of the intervention. They also quantified the relative benefits of increasing inpatient staffing versus providing ACP on an outpatient basis. The result: having an outpatient option ensures that more of the expected benefit is realized. "We only had to follow up with half of the discharged patients to get 75 percent efficacy, which is pretty good," Shah said.
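The article does not describe how the team's simulation was built, so the following is only a toy queueing sketch of the general idea: flagged patients arrive each day, staff can hold a limited number of ACP conversations, and patients who are discharged before being reached lose the benefit unless outpatient follow-up catches them. Every number and distribution in it (arrivals per day, length of stay, daily capacity, follow-up rate) is a hypothetical placeholder, and `simulate_acp_delivery` is an illustrative name.

```python
import random


def simulate_acp_delivery(days=500, daily_capacity=2,
                          outpatient_follow_up_rate=0.0, seed=0):
    """Return the fraction of flagged patients who receive ACP.

    All parameters and distributions are hypothetical placeholders,
    not values from the Stanford study.
    """
    rng = random.Random(seed)
    waiting = []  # remaining inpatient days for each flagged, unserved patient
    flagged = 0
    served = 0
    for _ in range(days):
        # Hypothetical arrivals: 0 to 5 newly flagged patients per day.
        arrivals = rng.randint(0, 5)
        flagged += arrivals
        # Hypothetical length of stay: 1 to 4 days until discharge.
        waiting.extend(rng.randint(1, 4) for _ in range(arrivals))
        # Staff reach the patients closest to discharge first, up to capacity.
        waiting.sort()
        served += min(daily_capacity, len(waiting))
        waiting = waiting[daily_capacity:]
        # Age the queue; patients on their last day are discharged unserved.
        still_in_hospital = []
        for days_left in waiting:
            if days_left > 1:
                still_in_hospital.append(days_left - 1)
            elif rng.random() < outpatient_follow_up_rate:
                served += 1  # reached later through outpatient follow-up
        waiting = still_in_hospital
    return served / flagged if flagged else 0.0


if __name__ == "__main__":
    for rate in (0.0, 0.5, 1.0):
        share = simulate_acp_delivery(outpatient_follow_up_rate=rate)
        print(f"follow-up rate {rate:.1f}: {share:.2%} of flagged patients reached")
```

Running the same scenario with and without outpatient follow-up gives a rough sense of how much of the expected benefit is lost to discharge timing rather than to model error, which is the kind of question the team's simulation was designed to answer.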

This work shows that even if you have a very good model and a very good intervention, a model is only useful if you also have the ability to deliver the intervention, Shah said. While hindsight may make this result seem intuitive, Shah said that was not the case at the time. "Had we not completed this study, Stanford Hospital might have just expanded its inpatient capacity to offer ACP, even though it was not very cost-effective."

The framework Shah's team used to analyze the interplay between models, interventions, and the benefits and harms of those interventions can help identify predictive models that are useful in practice. "At a minimum, modelers should conduct some kind of analysis to determine whether their models suggest useful interventions," Shah said. "This will be a start."
