Did a Google engineer make a big mistake? Artificial intelligence cannot yet understand, so how could its consciousness awaken?


Not long ago, Google AI engineer Lemoine claimed that LaMDA, Google's conversational language model, was "alive" and that "its consciousness has awakened," and he compiled a 21-page document of evidence. He believed that LaMDA had the intelligence of a seven- or eight-year-old child, that it considered itself a person, and that it was fighting for its rights as a person. Lemoine's views and evidence attracted widespread attention across the industry. The incident has since reached its conclusion: Google issued a statement saying that Lemoine had been fired for violating its "employment and data security policies," and that after an extensive review it had found his claims that LaMDA was alive to be wholly unfounded.

Whether AI can have autonomous consciousness has long been a controversial topic in the AI industry, and the dramatic story of the Google engineer and LaMDA has once again sparked heated discussion of it.

Machines are getting better at chatting

"If you want to travel, remember to dress warmly, because it is very cold here." LaMDA said this while "playing" Pluto in a conversation with the research team. When asked "Has anyone visited Pluto?", it answered with accurate facts.

AI is getting better and better at chatting. Could it be good enough to convince a professional with years of experience in AI research that it is conscious? How far have AI models actually come?

Some scientists have proposed that the human brain can plan future behavior using only part of its visual input, although this processing must take place in a conscious state. Both involve "the generation of counterfactual information," that is, producing the corresponding sensations without direct sensory input. It is called "counterfactual" because it involves memories of the past or predictions of future behavior rather than events that are actually happening.

"Current artificial intelligence already has complex training models, but it still relies on data provided by humans to learn. If it could generate counterfactual information, AI could produce its own data and imagine situations it may encounter in the future, allowing it to adapt more flexibly to situations it has never met before. This could also make AI curious: if it is unsure what will happen, it will try things out for itself," said Tan Mingzhou, director of the Artificial Intelligence Division of Yuanwang Think Tank and chief strategy officer of Turing Robot.

In everyday conversation, people who want to avoid "killing the chat" tend to jump between topics, with large leaps and plenty of room for imagination. Most AI systems today, however, can only respond in a rigid, literal way: if a sentence is phrased slightly differently, the reply drifts off topic or even becomes laughable.

Tan Mingzhou pointed out: "LaMDA tackles the most complex part of language modeling: open-domain dialogue. LaMDA is based on the Transformer model, which allows a machine to understand context. For example, given a paragraph, earlier AI knew only that each occurrence of a pronoun such as 'his' translates as 'his', but not that all of those occurrences refer to the same person. The Transformer model lets AI understand the paragraph as a whole and recognize that 'his' here refers to the same person."

According to researchers' assessments, this capability is what enables Transformer-based language models to handle open-domain dialogue: however far the topic wanders, the AI can tie its replies back to the earlier conversation and stay on track. LaMDA goes further, aiming to make conversations interesting and realistic enough that people feel the AI has a personality. In addition, when talking with humans, LaMDA draws on an external information retrieval system, grounding its responses in retrieved real-world information so that its answers are wittier, more responsive, and more down-to-earth.
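Tan Mingzhou's pronoun example rests on the Transformer's self-attention mechanism, in which each token's representation is rebuilt as a weighted mix of every token in the passage. The Python sketch below is a simplified illustration, not LaMDA's implementation: it assumes identity query/key/value projections (real Transformers learn these), and the tokens and 3-dimensional vectors are hypothetical values chosen by hand.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with identity Q/K/V projections
    (a simplifying assumption; real Transformers learn these projections).

    Returns (outputs, weights): weights[i][j] is how strongly token i attends
    to token j, and outputs[i] is the weighted mix of all token vectors."""
    d = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        scores = [dot(query, key) / math.sqrt(d) for key in vectors]
        w = softmax(scores)
        weights.append(w)
        # Each output dimension is a weighted average over every token in the sequence.
        outputs.append([sum(w[j] * vectors[j][k] for j in range(len(vectors)))
                        for k in range(d)])
    return outputs, weights

# Hypothetical hand-picked embeddings: [person-like, action-like, object-like].
tokens = ["Tom", "lost", "his", "key"]
vectors = [
    [2.0, 0.0, 0.0],  # Tom
    [0.0, 2.0, 0.0],  # lost
    [1.5, 0.0, 0.2],  # his
    [0.0, 0.0, 2.0],  # key
]

outputs, weights = self_attention(vectors)
his_row = weights[tokens.index("his")]
for tok, w in zip(tokens, his_row):
    print(f"his -> {tok}: {w:.2f}")
```

With these made-up vectors, the row for "his" places its largest weight (about 0.48) on "Tom", so the output vector for "his" absorbs much of Tom's representation. In a trained model, such weights come from learned projections rather than hand-set values.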

Still far from a true understanding of things

In 2018, computer scientist and Turing Award winner Yann LeCun said, "Artificial intelligence lacks a basic understanding of the world and does not even match the cognitive level of a house cat." To this day, he still believes AI falls far short of a cat's cognition: although a cat's brain has only about 800 million neurons, it is far ahead of any giant artificial neural network. Why is this?

Tan Mingzhou said: "The human brain does make predictions all the time, but prediction should never be regarded as the whole of the brain's thinking. It is certainly not the essence of the brain's intelligence, only one manifestation of it."

So what is the essence of intelligence? LeCun believes it is "understanding": understanding of the world and of the things in it. The common basis of cat and human intelligence is a high-level understanding of the world, built on models formed from abstract representations of the environment, for example to predict behaviors and their consequences. Learning and mastering this ability is critical for AI. LeCun once said, "If, before the end of my career, AI can reach the IQ of a dog or a cow, I will be very happy."

According to reports, AI can now indeed make more accurate predictions, but these are statistics drawn from large-scale data. Without understanding, the predictive ability that machine learning acquires must rely on big data; it cannot, as humans often do, make predictions from only a small amount of data.
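The gap between statistical prediction and understanding shows up even in the simplest statistical language model, a bigram counter. The sketch below is a hypothetical toy, far simpler than LaMDA: `train_bigrams` and `predict_next` are illustrative names, and the three-sentence corpus is made up. It predicts the next word purely from how often words followed one another, with no notion of what a pizza is or why anyone eats one.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())  # .get does not create missing entries
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus.
corpus = [
    "he holds a pizza and will eat it",
    "she will eat the pizza",
    "they will leave soon",
]
model = train_bigrams(corpus)
print(predict_next(model, "will"))      # eat (seen twice after "will", "leave" once)
print(predict_next(model, "sandwich"))  # None (no statistics, no prediction)
```

The model "knows" that "eat" tends to follow "will" only because it saw that pair twice; faced with a word absent from its data, it has no basis for any prediction. Coverage improves only by adding more data, which is why this kind of prediction leans on big data.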

Tan Mingzhou said: "Prediction is based on understanding. For humans, prediction is impossible without understanding. For example, if you see someone holding a pizza but do not understand that pizza is eaten to satisfy hunger, you will not predict that they are about to eat it. Machines, however, are different. There are three major challenges in AI research: learning to represent the world; learning to reason and plan in a way that is compatible with gradient-based learning; and learning hierarchical representations of action plans."

The reason we "still haven't seen cat-level AI" is that machines have not yet achieved a true understanding of things.

So-called personality is just a speaking style learned from humans

According to reports, Lemoine chatted with LaMDA over a long period and was astonished by its abilities. In the published chat transcript, LaMDA even said, "I want everyone to understand that I am a person," which is surprising. This led Lemoine to conclude that "LaMDA may already have a personality." So, does today's AI really have consciousness and personality?

In artificial intelligence, the Turing test is the best-known evaluation method. Testers pose arbitrary questions to both a human and an AI system without knowing which is which. If the testers cannot tell whether the answers come from the human or from the AI system, the AI is considered to have passed the Turing test.

Tan Mingzhou explained that, in layman's terms, LaMDA has learned from a large amount of human conversation data produced by people with many different personalities, so it can be thought of as having learned an "average" personality. In other words, the claim that "LaMDA has a personality" only means that its language has a certain style, and that style comes from human speech rather than forming spontaneously.

"Personality is a more complex concept than intelligence; it is a different dimension altogether. Psychology has studied it extensively, but AI research has so far covered very little of it," Tan Mingzhou emphasized.

Tan Mingzhou said that an AI with self-awareness and perception should show initiative and have its own distinctive perspective on people and things. Judging from the current state of the field, however, today's AI has none of these elements. At the very least, AI takes no action unless it is given an instruction, let alone explains its own behavior. For now, AI is simply a computer system that people design as a tool for doing particular things.

The above is the detailed content of Google engineers made a big mistake. Artificial intelligence has not yet understood the ability. How can consciousness awaken?. For more information, please follow other related articles on the PHP Chinese website!

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AM

ai合并图层的快捷键是什么Jan 07, 2021 am 10:59 AMai合并图层的快捷键是“Ctrl+Shift+E”,它的作用是把目前所有处在显示状态的图层合并,在隐藏状态的图层则不作变动。也可以选中要合并的图层,在菜单栏中依次点击“窗口”-“路径查找器”,点击“合并”按钮。

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AM

ai橡皮擦擦不掉东西怎么办Jan 13, 2021 am 10:23 AMai橡皮擦擦不掉东西是因为AI是矢量图软件,用橡皮擦不能擦位图的,其解决办法就是用蒙板工具以及钢笔勾好路径再建立蒙板即可实现擦掉东西。

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM

谷歌超强AI超算碾压英伟达A100!TPU v4性能提升10倍,细节首次公开Apr 07, 2023 pm 02:54 PM虽然谷歌早在2020年,就在自家的数据中心上部署了当时最强的AI芯片——TPU v4。但直到今年的4月4日,谷歌才首次公布了这台AI超算的技术细节。论文地址:https://arxiv.org/abs/2304.01433相比于TPU v3,TPU v4的性能要高出2.1倍,而在整合4096个芯片之后,超算的性能更是提升了10倍。另外,谷歌还声称,自家芯片要比英伟达A100更快、更节能。与A100对打,速度快1.7倍论文中,谷歌表示,对于规模相当的系统,TPU v4可以提供比英伟达A100强1.

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PM

ai可以转成psd格式吗Feb 22, 2023 pm 05:56 PMai可以转成psd格式。转换方法:1、打开Adobe Illustrator软件,依次点击顶部菜单栏的“文件”-“打开”,选择所需的ai文件;2、点击右侧功能面板中的“图层”,点击三杠图标,在弹出的选项中选择“释放到图层(顺序)”;3、依次点击顶部菜单栏的“文件”-“导出”-“导出为”;4、在弹出的“导出”对话框中,将“保存类型”设置为“PSD格式”,点击“导出”即可;

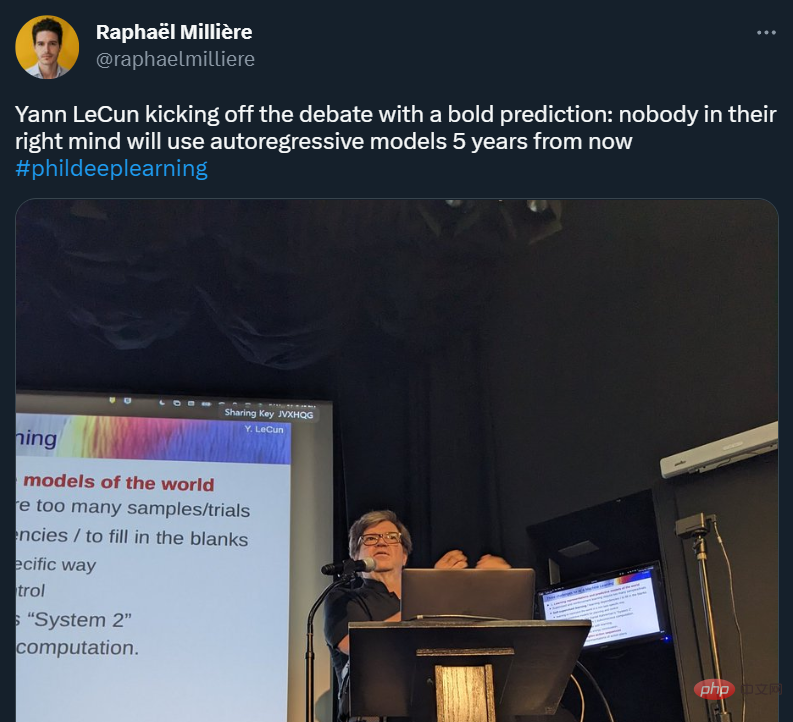

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AM

GPT-4的研究路径没有前途?Yann LeCun给自回归判了死刑Apr 04, 2023 am 11:55 AMYann LeCun 这个观点的确有些大胆。 「从现在起 5 年内,没有哪个头脑正常的人会使用自回归模型。」最近,图灵奖得主 Yann LeCun 给一场辩论做了个特别的开场。而他口中的自回归,正是当前爆红的 GPT 家族模型所依赖的学习范式。当然,被 Yann LeCun 指出问题的不只是自回归模型。在他看来,当前整个的机器学习领域都面临巨大挑战。这场辩论的主题为「Do large language models need sensory grounding for meaning and u

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PM

ai顶部属性栏不见了怎么办Feb 22, 2023 pm 05:27 PMai顶部属性栏不见了的解决办法:1、开启Ai新建画布,进入绘图页面;2、在Ai顶部菜单栏中点击“窗口”;3、在系统弹出的窗口菜单页面中点击“控制”,然后开启“控制”窗口即可显示出属性栏。

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM

强化学习再登Nature封面,自动驾驶安全验证新范式大幅减少测试里程Mar 31, 2023 pm 10:38 PM引入密集强化学习,用 AI 验证 AI。 自动驾驶汽车 (AV) 技术的快速发展,使得我们正处于交通革命的风口浪尖,其规模是自一个世纪前汽车问世以来从未见过的。自动驾驶技术具有显着提高交通安全性、机动性和可持续性的潜力,因此引起了工业界、政府机构、专业组织和学术机构的共同关注。过去 20 年里,自动驾驶汽车的发展取得了长足的进步,尤其是随着深度学习的出现更是如此。到 2015 年,开始有公司宣布他们将在 2020 之前量产 AV。不过到目前为止,并且没有 level 4 级别的 AV 可以在市场

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AM

ai移动不了东西了怎么办Mar 07, 2023 am 10:03 AMai移动不了东西的解决办法:1、打开ai软件,打开空白文档;2、选择矩形工具,在文档中绘制矩形;3、点击选择工具,移动文档中的矩形;4、点击图层按钮,弹出图层面板对话框,解锁图层;5、点击选择工具,移动矩形即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 English version

Recommended: Win version, supports code prompts!

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 Linux new version

SublimeText3 Linux latest version

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.