2021 ML and NLP academic statistics: Google ranks first, and reinforcement learning expert Sergey Levine tops the list

2021 was a very productive year for natural language processing (NLP) and machine learning (ML). Now it is time to tally the papers published in these fields last year.

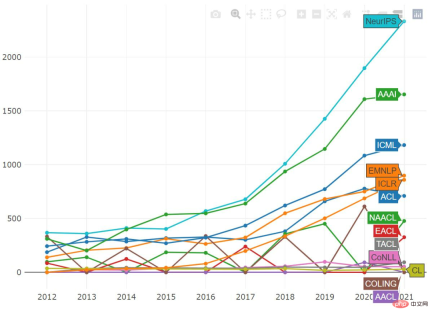

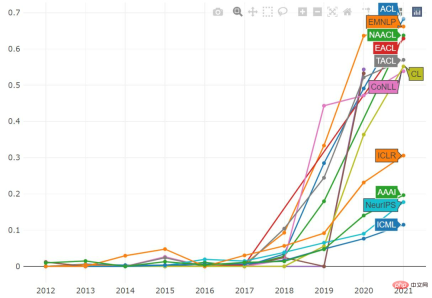

Marek Rei, a researcher in machine learning and natural language processing from the University of Cambridge, compiled and analyzed statistics on ML and NLP publications in 2021. The analysis covers the major conferences and journals in the field, including ACL, EMNLP, NAACL, EACL, CoNLL, TACL, CL, NeurIPS, AAAI, ICLR, and ICML.

The analysis was carried out with a series of automated tools, which are not perfect and may introduce some errors. Some authors have started publishing their papers in obfuscated form to prevent content duplication or automated content extraction, and these papers were excluded from the analysis.

Now let's take a look at Marek Rei's statistical results.

Based on academic conference statistics

The number of papers at most conferences continues to rise and break records. ACL appears to be an exception, AAAI is almost leveling off, and NeurIPS is still growing steadily.

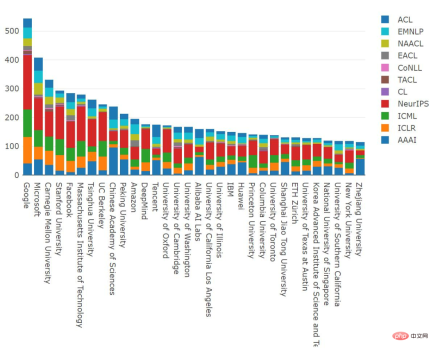

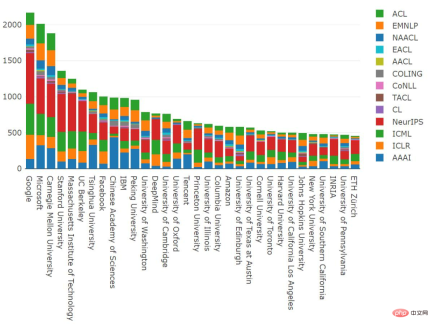

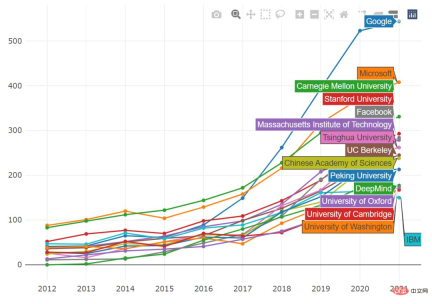

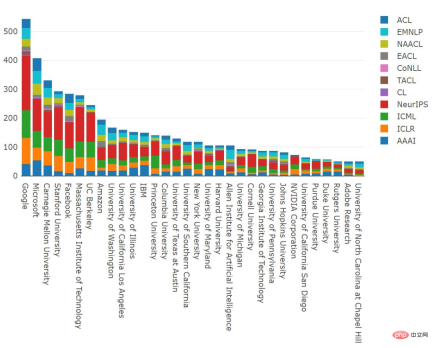

Based on institutional statistics

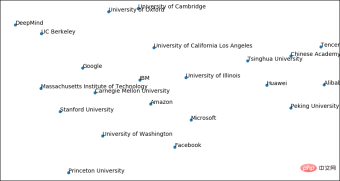

The leading research institution by number of papers published in 2021 is Google; Microsoft ranked second; CMU, Stanford University, Meta and MIT followed closely behind, and Tsinghua University ranked seventh. Microsoft, CAS, Amazon, Tencent, Cambridge, Washington, and Alibaba stand out with a sizable proportion of their papers at NLP conferences, while the other top organizations seem to focus primarily on ML.

Over 2012-2021, Google published 2,170 papers and ranked first, ahead of Microsoft with 2,013 papers. CMU published 1,881 papers, ranking third.

Most institutions continue to increase their annual publication numbers. Google's paper count used to grow linearly; that trend has now eased, but it still publishes more papers than before. CMU plateaued last year but has made up for it this year. IBM seems to be the only institution whose publication count is declining slightly.

By author statistics

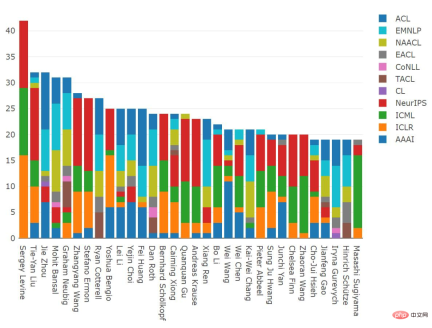

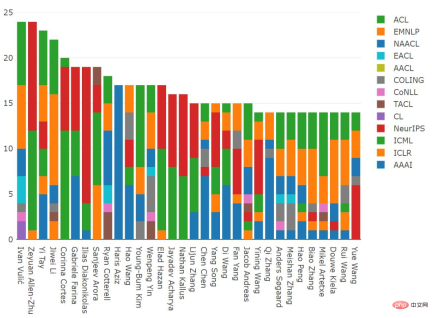

Next, let's look at the researchers who published the most papers in 2021. Sergey Levine (Assistant Professor of Electrical Engineering and Computer Science, University of California, Berkeley) published 42 papers, ranking first; Liu Tieyan (Microsoft), Zhou Jie (Tsinghua University), Mohit Bansal (University of North Carolina at Chapel Hill), and Graham Neubig (CMU) also rank relatively high in the number of papers published.

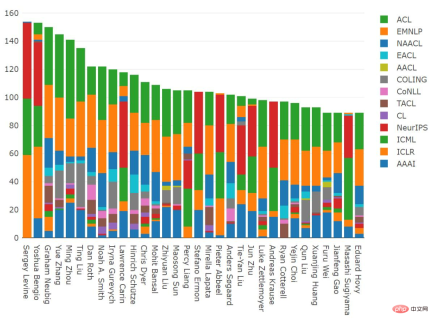

Over 2012-2021 as a whole, Sergey Levine ranks first in the number of papers published; he ranked sixth last year and jumped to first place this year. Yoshua Bengio (Montreal), Graham Neubig (CMU), Zhang Yue (Westlake University), Zhou Ming (Chief Scientist of Innovation Works), Ting Liu (Harbin Institute of Technology) and others also rank relatively high in the number of papers published.

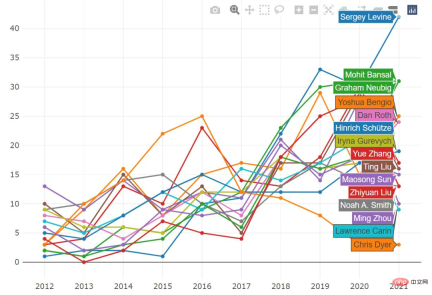

Sergey Levine set a new record by a considerable margin. Mohit Bansal's number of papers also increased significantly: he published 31 papers in 2021, the same as Graham Neubig. Yoshua Bengio's number of papers decreased in 2020 but is now rising again.

Statistics of papers published as the first author

Researchers who publish the most papers are usually postdocs and supervisors. In contrast, people who publish more papers as first authors are usually people who do actual research.

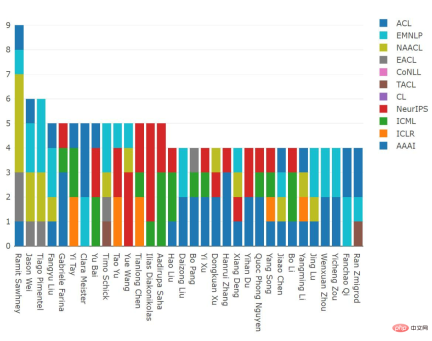

Ramit Sawhney (Technical Director of Tower Research Capital) published 9 influential papers as first author in 2021; Jason Wei (Google) and Tiago Pimentel (PhD student at Cambridge University) each published 6.

Over 2012-2021, Ivan Vulić (University of Cambridge) and Zeyuan Allen-Zhu (Microsoft) each published 24 influential papers as first author, tying for first place; Yi Tay (Google) and Li Jiwei (Shannon Technology) follow, with 23 and 22 influential first-author papers respectively; Ilias Diakonikolas (University of Wisconsin-Madison) has published 15 NeurIPS papers as first author.

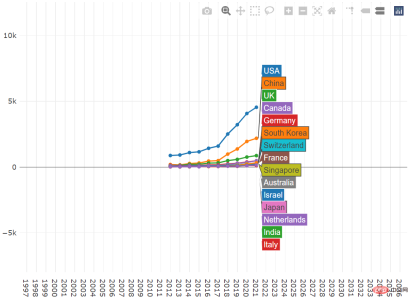

Based on national statistics

Looking at the number of publications by country in 2021, the United States published the most, with China and the UK ranking second and third respectively. In the United States and the United Kingdom, NeurIPS accounts for the largest proportion of papers, while AAAI accounts for the largest proportion in China.

The vertical coordinates from top to bottom are 500, 1000, 1500, 2000, 2500, and so on

Almost all top-ranked countries continue to increase their number of publications and set new records in 2021. The increase was the largest for the United States, further extending its lead.

In the United States, Google, Microsoft and CMU once again lead the list in terms of number of publications.

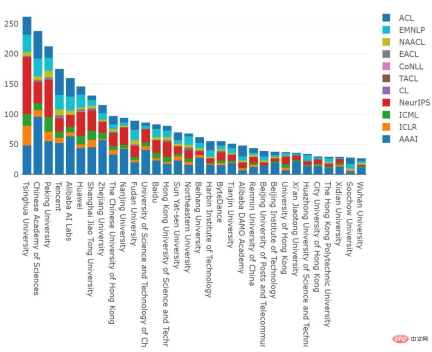

In China, Tsinghua University, Chinese Academy of Sciences and Peking University published the most papers in 2021.

In the visualization, these organizations cluster together primarily by geographic proximity, with companies in the middle.

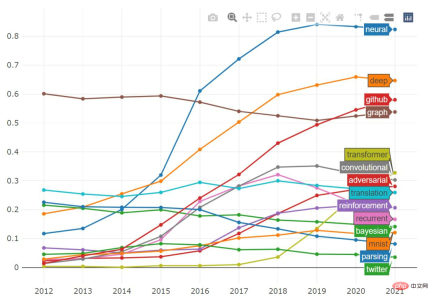

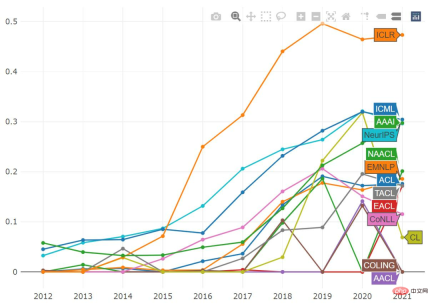

We can also plot the proportion of papers that contain specific keywords and track how this proportion changes over time.

The word “neural” seems to be on a slight downward trend, although you can still find it in 80% of papers. At the same time, the proportions of "recurrent" and "convolutional" are also declining, and the word "transformer" appears in more than 30% of papers.

If we look at the word "adversarial" alone, we find that it is very common at ICLR, where almost half of the papers mention it. The proportion of "adversarial" at ICML and NeurIPS seems to have already peaked, while at AAAI it has not. In the past few years, the term "transformer" has become very popular. It is particularly widespread in NLP papers, with over 50% of published papers containing it, and its popularity is steadily increasing across all ML conferences.
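As a rough illustration of how such keyword proportions could be computed, here is a minimal Python sketch. It is not the tooling used in the original analysis, which the article does not describe in detail. The function name `keyword_proportion_by_year`, the `(year, text)` input format, and the toy corpus are all assumptions made for this example, and matching is a simple case-insensitive substring check.

```python
from collections import defaultdict


def keyword_proportion_by_year(papers, keyword):
    """Return {year: fraction of that year's papers whose text mentions keyword}.

    `papers` is an iterable of (year, text) pairs (a hypothetical input format).
    Matching is a case-insensitive substring test, so "neural" would also
    match longer words that contain it.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    needle = keyword.lower()
    for year, text in papers:
        totals[year] += 1
        if needle in text.lower():
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in sorted(totals)}


# Hypothetical toy corpus of (year, paper text) pairs; not real data.
toy_papers = [
    (2020, "A recurrent neural model for tagging"),
    (2020, "Convolutional neural networks for parsing"),
    (2021, "A transformer for translation"),
    (2021, "Neural transformer pretraining"),
]

for word in ("neural", "transformer"):
    print(word, keyword_proportion_by_year(toy_papers, word))
```

Running the same function separately on the papers of each conference would give per-venue curves like the ones discussed above.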
