Comparison of eight major predictive analytics tools

What are predictive analytics tools?

Predictive analytics tools blend artificial intelligence and business reporting. These tools include sophisticated pipelines for collecting data from across the enterprise, adding layers of statistical analysis and machine learning to make predictions about the future, and distilling those insights into useful summaries that business users can act on.
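As a rough illustration of that collect, analyze, and summarize flow, the following minimal Python sketch uses only the standard library. The record layout, the field names (`period`, `sales`), and the function names are hypothetical, and a straight-line least-squares fit stands in for the far richer models a commercial tool would apply.

```python
from statistics import mean

def clean(records):
    """Keep only records whose sales value is a real number."""
    return [r for r in records if isinstance(r.get("sales"), (int, float))]

def fit_trend(xs, ys):
    """Ordinary least-squares line y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def summarize(records):
    """Clean the data, fit a trend, and report a one-step-ahead forecast."""
    cleaned = clean(records)
    xs = [r["period"] for r in cleaned]
    ys = [r["sales"] for r in cleaned]
    slope, intercept = fit_trend(xs, ys)
    next_period = max(xs) + 1
    return {
        "rows_used": len(cleaned),
        "rows_dropped": len(records) - len(cleaned),
        "next_period": next_period,
        "next_period_forecast": round(intercept + slope * next_period, 1),
    }

# Hypothetical sales history; period 3 has a missing value.
records = [
    {"period": 1, "sales": 100},
    {"period": 2, "sales": 110},
    {"period": 3, "sales": None},
    {"period": 4, "sales": 130},
    {"period": 5, "sales": 140},
]

summary = summarize(records)
print(summary)
```

Here `summarize(records)` drops the row with the missing value, fits the trend on the remaining rows, and returns a small dictionary that a report or dashboard could display.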

The quality of predictions depends primarily on the data that goes into the system: the old slogan from the mainframe era, “garbage in, garbage out,” still holds true today. But there are deeper challenges. Predictive analytics software cannot predict the moments when the world will change, and the future is sometimes only weakly connected to the past. Within those limits, these tools, which operate primarily by identifying patterns, are becoming increasingly sophisticated.
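The limitation described above can be made concrete with a small sketch. Assuming a hypothetical demand series and a simple least-squares trend line (an illustration only, not any vendor's method), the same model that tracks a stable continuation closely misses badly once the underlying pattern shifts.

```python
def fit_line(ys):
    """Least-squares line through the points (0, ys[0]), (1, ys[1]), ..."""
    n = len(ys)
    x_bar = (n - 1) / 2
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def mean_abs_error(predicted, actual):
    """Average absolute gap between predictions and what really happened."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical history: steady growth of 2 units per period.
history = [100, 102, 104, 106, 108, 110]

# Two hypothetical futures: the pattern continues, or demand suddenly drops.
stable_future = [112, 114, 116]
shocked_future = [112, 80, 75]

slope, intercept = fit_line(history)
predictions = [intercept + slope * (len(history) + k) for k in range(3)]

stable_error = mean_abs_error(predictions, stable_future)
shocked_error = mean_abs_error(predictions, shocked_future)

print("error if the pattern holds:", stable_error)
print("error after a sudden shift:", shocked_error)
```

The model is identical in both cases. Only the world changed, which is why the error in the second case is much larger than in the first.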

Using a dedicated predictive analytics tool is often relatively easy compared to writing a tool from scratch. Most tools provide a visual programming interface that lets users drag and drop a variety of icons optimized for data analysis. This helps users grasp coding concepts and think like a programmer, and these tools can indeed generate complex predictions with the click of a mouse.

Comparison of Excellent Predictive Analytics Tools

Alteryx Analytics Process Automation

The goal of the Alteryx Analytics Process Automation (APA) platform is to help you build pipelines that clean your data before applying the best data science and machine learning algorithms. A high degree of automation enables deployment of these models into production to generate a steady stream of insights and predictions. The visual IDE offers over 300 options that can be combined to form a complex pipeline. One of the strengths of APA is its deep integration with other data sources, such as geospatial databases or demographic data, to enrich the quality of your own datasets.

Key Points:

- A great solution for data scientists who need to automate the collection of complex data sources to generate multiple deliverables;

- Available for local deployment or deployment in the Alteryx cloud;

- Includes a number of robotic process automation (RPA) tools for handling chores like text recognition or image processing;

- Designed to provide insights to multiple customers who may want the data delivered as a dashboard, a spreadsheet, or some other custom platform;

- Tools such as Designer start at $5,195 per user per year. Additional charges are set by the sales team. Free trial and open source options are available.

AWS SageMaker

As Amazon’s primary artificial intelligence platform, AWS SageMaker integrates well with the rest of the AWS portfolio, helping users analyze data held with one of the major cloud providers and then deploy the results to run in their own instances or as part of a serverless Lambda function. SageMaker is a full-service platform with data preparation tools such as Data Wrangler, a presentation layer built with Jupyter notebooks, and an automation option called Autopilot. Visualization tools help users understand what is going on at a glance.

Key Points:

- Fully integrated with many parts of the AWS ecosystem, making it an excellent choice for AWS-based operations;

- Serverless deployment options allow cost to scale with usage;

- Marketplace facilitates buying and selling models and algorithms with other SageMaker users;

- Integrates with a variety of AWS databases, data lakes, and other data storage options to make working with large data sets simple;

- Pricing is often tied to the size of the computing resources supporting the calculation, and a generous free tier makes experimentation possible.

H2O.ai AI Cloud

Translating excellent artificial intelligence algorithms into productive insights is the main goal of H2O.ai AI Cloud. Its “human-driven AI” provides an automated pipeline for ingesting data and studying its most salient features. A set of open source and proprietary feature engineering tools helps focus algorithms on the most important parts of your data. The results are displayed in a dashboard or a collection of automated graphical visualizations.

Key Points:

- Its focus on AI makes it best suited to problems that require complex solutions that adapt to incoming data;

- Tools range from AI Cloud, used to create large data-driven pipelines, to the open source, Python-based Wave, which helps desktop users create real-time dashboards;

- Runs on-premises or on any cloud platform;

- The core platform is fully open source.

IBM SPSS

For decades, statisticians have used IBM's SPSS to crunch numbers. The latest version includes options to integrate new methods, such as machine learning, text analysis, or other artificial intelligence algorithms. Statistics packages focus on numerical interpretations of events that occur. SPSS Modeler is a drag-and-drop tool for creating data pipelines to gain actionable insights.

Key Points:

- Ideal for large legacy enterprises with big data streams;

- Integrates with other IBM tools such as Watson Studio;

- Pricing starts at $499 per user per month, and many free trials are available.

RapidMiner

RapidMiner’s tools have always been built first for data scientists on the front lines. Its core product is a complete visual IDE for experimenting with various data flows to find the best insights. The product line now includes more automation solutions that open the process to more people in the enterprise through a simpler interface and a range of tools for cleaning data and finding the best modeling solutions. These can then be deployed into production. The company is also expanding its cloud offerings with an artificial intelligence hub designed to simplify adoption.

Key Points:

- Ideal for data scientists who work directly with data and drive exploration;

- Provides transparency for users who need to understand the reasons behind predictions;

- Encourages collaboration between AI scientists and users with the Jupyter notebook-powered Artificial Intelligence Hub (AI Hub);

- Strong support for Python-based open source tools;

- An extensive free tier of RapidMiner Studio is available for early-stage trials and education programs; pricing for large-scale projects and production deployments is available on request.

SAP

Anyone working in the manufacturing industry should know SAP software. Its databases can track goods at various stages of the supply chain. To support this, the company has invested heavily in developing a strong tool for predictive analytics, allowing businesses to make more informed decisions about what might happen next. The tool is heavily based on business intelligence and reporting, treating forecasts as just another column in the analytics presentation. Information from the past informs decisions about the future, primarily using a collection of highly automated machine learning routines. You don’t need to be an AI programmer to run it. In fact, SAP has also been working on what it calls "conversational analytics" tools that can provide useful insights to any manager who asks questions in human language.

Key Points:

- Ideal for stacks that already rely on deep integration with SAP warehouse and supply chain management software;

- Designed with a low-code and no-code strategy to open analytics to everyone;

- Part of the regular business intelligence process to ensure consistency and simplicity;

- Users can gain insight into how the AI makes decisions by asking for the context behind predictions;

- The free plan allows for experimentation. Basic pricing starts at $36 per user per month.

SAS

As one of the oldest statistical and business intelligence software packages, SAS has become more powerful over time. Businesses that need predictions can generate forward-looking reports that rely on any combination of statistics and machine learning algorithms, what SAS calls "composite AI." The product line is divided into tools for basic exploration, such as visual data mining or visual prediction. There are also industry-focused tools, such as anti-money laundering software designed to predict potential compliance issues.

Key Points:

- Extensive toolset that has been optimized for specific industries (such as banking);

- A combination of traditional statistics and modern machine learning;

- Designed for on-premises and cloud-based deployments;

- Pricing is highly dependent on product selection and usage.

TIBCO

After various integrated tools collect data, TIBCO’s predictive analytics can begin generating predictions. Data Science Studio is designed to enable teams to co-create low-code and no-code analytics. More focused options are available for specific data sets. For example, TIBCO Streaming is optimized for creating real-time decisions from a sequence of events. Spotfire creates dashboards by integrating location-based data with historical results. These tools work with the company's more robust product line to better support data collection, integration and storage.

Key Points:

- Ideal for supporting larger data management architectures;

- Predictive analytics integrated with multiple data movement and storage options;

- Built on a tradition of generating reports and business intelligence;

- Machine learning and other artificial intelligence options can improve accuracy;

- Products are offered for a variety of cloud platforms and on-premises options, with independent pricing available. "Turn-key" AWS instances start at 99 cents per hour.

- Original link:

The above is the detailed content of Comparison of eight major predictive analysis tools. For more information, please follow other related articles on the PHP Chinese website!

An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AM

An easy-to-understand explanation of how to make inventory management more efficient using ChatGPT!May 14, 2025 am 03:44 AMEasy to implement even for small and medium-sized businesses! Smart inventory management with ChatGPT and Excel Inventory management is the lifeblood of your business. Overstocking and out-of-stock items have a serious impact on cash flow and customer satisfaction. However, the current situation is that introducing a full-scale inventory management system is high in terms of cost. What you'd like to focus on is the combination of ChatGPT and Excel. In this article, we will explain step by step how to streamline inventory management using this simple method. Automate tasks such as data analysis, demand forecasting, and reporting to dramatically improve operational efficiency. moreover,

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AM

An easy-to-understand explanation of how to check and switch versions of ChatGPT!May 14, 2025 am 03:43 AMUse AI wisely by choosing a ChatGPT version! A thorough explanation of the latest information and how to check ChatGPT is an ever-evolving AI tool, but its features and performance vary greatly depending on the version. In this article, we will explain in an easy-to-understand manner the features of each version of ChatGPT, how to check the latest version, and the differences between the free version and the paid version. Choose the best version and make the most of your AI potential. Click here for more information about OpenAI's latest AI agent, OpenAI Deep Research ⬇️ [ChatGPT] OpenAI D

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AM

Explaining the reasons why you cannot use your credit card with ChatGPT's paid plan and how to deal with itMay 14, 2025 am 03:32 AMTroubleshooting Guide for Credit Card Payment with ChatGPT Paid Subscriptions Credit card payments may be problematic when using ChatGPT paid subscription. This article will discuss the reasons for credit card rejection and the corresponding solutions, from problems solved by users themselves to the situation where they need to contact a credit card company, and provide detailed guides to help you successfully use ChatGPT paid subscription. OpenAI's latest AI agent, please click ⬇️ for details of "OpenAI Deep Research" 【ChatGPT】Detailed explanation of OpenAI Deep Research: How to use and charging standards Table of contents Causes of failure in ChatGPT credit card payment Reason 1: Incorrect input of credit card information Original

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AM

An easy-to-understand explanation of how to create a VBA macro in ChatGPT!May 14, 2025 am 02:40 AMFor beginners and those interested in business automation, writing VBA scripts, an extension to Microsoft Office, may find it difficult. However, ChatGPT makes it easy to streamline and automate business processes. This article explains in an easy-to-understand manner how to develop VBA scripts using ChatGPT. We will introduce in detail specific examples, from the basics of VBA to script implementation using ChatGPT integration, testing and debugging, and benefits and points to note. With the aim of improving programming skills and improving business efficiency,

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AM

I can't use the ChatGPT plugin function! Explaining what to do in case of an errorMay 14, 2025 am 01:56 AMChatGPT plugin cannot be used? This guide will help you solve your problem! Have you ever encountered a situation where the ChatGPT plugin is unavailable or suddenly fails? The ChatGPT plugin is a powerful tool to enhance the user experience, but sometimes it can fail. This article will analyze in detail the reasons why the ChatGPT plug-in cannot work properly and provide corresponding solutions. From user setup checks to server troubleshooting, we cover a variety of troubleshooting solutions to help you efficiently use plug-ins to complete daily tasks. OpenAI Deep Research, the latest AI agent released by OpenAI. For details, please click ⬇️ [ChatGPT] OpenAI Deep Research Detailed explanation:

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AM

Does ChatGPT not follow the character count specification? A thorough explanation of how to deal with this!May 14, 2025 am 01:54 AMWhen writing a sentence using ChatGPT, there are times when you want to specify the number of characters. However, it is difficult to accurately predict the length of sentences generated by AI, and it is not easy to match the specified number of characters. In this article, we will explain how to create a sentence with the number of characters in ChatGPT. We will introduce effective prompt writing, techniques for getting answers that suit your purpose, and teach you tips for dealing with character limits. In addition, we will explain why ChatGPT is not good at specifying the number of characters and how it works, as well as points to be careful about and countermeasures. This article

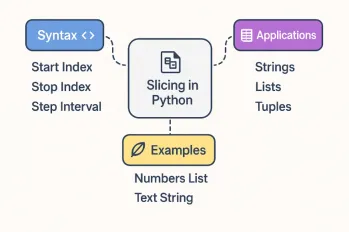

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AM

All About Slicing Operations in PythonMay 14, 2025 am 01:48 AMFor every Python programmer, whether in the domain of data science and machine learning or software development, Python slicing operations are one of the most efficient, versatile, and powerful operations. Python slicing syntax a

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AM

An easy-to-understand explanation of how to use ChatGPT to create quotes!May 14, 2025 am 01:44 AMThe evolution of AI technology has accelerated business efficiency. What's particularly attracting attention is the creation of estimates using AI. OpenAI's AI assistant, ChatGPT, contributes to improving the estimate creation process and improving accuracy. This article explains how to create a quote using ChatGPT. We will introduce efficiency improvements through collaboration with Excel VBA, specific examples of application to system development projects, benefits of AI implementation, and future prospects. Learn how to improve operational efficiency and productivity with ChatGPT. Op

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver Mac version

Visual web development tools