Can't find a Chinese speech pre-trained model? Chinese versions of Wav2vec 2.0 and HuBERT are here

Speech pre-training models such as Wav2vec 2.0 [1], HuBERT [2], and WavLM [3] use self-supervised learning on tens of thousands of hours of unlabeled speech (for example, Libri-light) to significantly improve downstream speech tasks such as Automatic Speech Recognition (ASR), Text-to-Speech (TTS), and Voice Conversion (VC). However, none of these models has a publicly released Chinese version, which makes them inconvenient to use in Chinese speech research.

WenetSpeech [4] is a multi-domain speech dataset of more than 10,000 hours, jointly released by the Audio, Speech and Language Processing Research Group of Northwestern Polytechnical University (ASLP@NPU), Mobvoi, and AISHELL. To fill the gap in Chinese speech pre-training models, we have open-sourced Chinese versions of the Wav2vec 2.0 and HuBERT models, trained on 10,000 hours of WenetSpeech data.

To validate the pre-trained models, we evaluated them on an ASR task. Experimental results show that, on an ASR task with 100 hours of supervised data, the speech representations learned by the pre-trained models significantly outperform traditional acoustic FBank features. With only 100 hours of supervised data, they even achieve results comparable to FBank models trained on 1,000 hours of supervised data.

Model link: https://github.com/TencentGameMate/chinese_speech_pretrain

## Model introduction

### Wav2vec 2.0 model

Figure 1: Wav2vec 2.0 model structure (Baevski et al., 2020)

Wav2vec 2.0 [1] is a self-supervised speech pre-training model published by Meta in 2020. Its core idea is to build self-supervised training targets through Vector Quantization (VQ), mask a large portion of the input, and train with a contrastive loss. The model structure is shown in Figure 1. A Convolutional Neural Network (CNN) feature encoder turns the raw audio into a sequence of frame features, and a VQ module converts each frame feature into a discrete feature q, which serves as the self-supervised target. Meanwhile, the frame feature sequence is masked and fed into a Transformer [5] to obtain the context representation c. Finally, the contrastive loss pulls the context representation at each masked position toward its corresponding discrete feature q (the positive pair) and away from distractors sampled from other masked positions. In the original paper, the Wav2vec 2.0 BASE model uses a 12-layer Transformer and is trained on 960 hours of LibriSpeech data, while the LARGE model uses a 24-layer Transformer and is trained on 60,000 hours of Libri-light data. Training took 1.6 days on 64 V100 GPUs for the BASE model and 5 days on 128 V100 GPUs for the LARGE model. In downstream ASR evaluation, even with only 10 minutes of supervised data, the system achieved a Word Error Rate (WER) of 4.8% on LibriSpeech test-clean.
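The contrastive objective for a single masked time step can be sketched in plain Python. This is a minimal illustration, assuming the formulation in the Wav2vec 2.0 paper: cosine similarity between the context vector c_t and its true quantized target q_t is contrasted against distractors drawn from other masked positions, scaled by a temperature (0.1 here). The function names and toy vectors are hypothetical; the actual Fairseq implementation is batched, samples the distractors itself, and adds a codebook-diversity term.

```python
import math
from typing import Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def contrastive_loss(c_t, q_t, distractors, kappa=0.1):
    """Negative log of the softmax score given to the true target q_t
    among {q_t} plus the distractors (temperature-scaled cosine logits)."""
    candidates = [q_t] + list(distractors)
    logits = [cosine(c_t, q) / kappa for q in candidates]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]

# Hypothetical toy example: c_t points almost exactly at its true target.
c_t = [0.9, 0.1, 0.0]
q_true = [1.0, 0.0, 0.0]
negatives = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(contrastive_loss(c_t, q_true, negatives))
```

The printed loss is close to zero because c_t is far more similar to q_true than to either distractor; if c_t pointed at a distractor instead, the loss would grow, which is the signal that drives the Transformer's context representations toward the quantized targets.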

### HuBERT model

Figure 2: HuBERT model structure (Hsu et al., 2021)

HuBERT [2] is a model published by Meta in 2021. Its architecture is similar to Wav2vec 2.0; the difference lies in the training method. Wav2vec 2.0 discretizes speech features during training and uses them as the self-supervised target, whereas HuBERT obtains its training targets by running K-means clustering on MFCC features or on HuBERT features. HuBERT is trained iteratively: the first iteration of the BASE model clusters MFCC features, and the second iteration clusters intermediate-layer features of the model obtained in the first iteration. The LARGE and XLARGE models cluster features extracted from the second-iteration BASE model. According to the experiments in the original paper, HuBERT outperforms Wav2vec 2.0, especially when downstream tasks have very little supervised training data, such as 1 hour or 10 minutes.

## Experimental configuration

We use the 10,000 hours of Chinese data in the WenetSpeech [4] train_l set as unsupervised pre-training data. The data comes mainly from YouTube and podcasts and covers a wide variety of recording conditions, background noise, and speaking styles. It spans 10 major domains: audiobooks, narrations, documentaries, TV series, interviews, news, readings, speeches, variety shows, and others. We trained the Wav2vec 2.0 and HuBERT models with the Fairseq toolkit [6], following the model configurations of [1, 2], and each pre-trained model comes in two sizes, BASE and LARGE. For the BASE models, we used 8 A100 GPUs with a gradient accumulation of 8 to simulate training on 64 GPUs. For the LARGE models, we used 16 A100 GPUs with a gradient accumulation of 8 to simulate training on 128 GPUs.
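HuBERT's offline target generation, described in the HuBERT model section, can be illustrated with a tiny k-means. This sketch assumes the frame features are already computed (MFCCs in the first iteration, intermediate-layer HuBERT features in later ones) and uses plain Lloyd's algorithm initialized from the first k frames. The original recipe instead clusters far more frames with mini-batch k-means, using 100 clusters in the first iteration and 500 in the second; the function name and toy data below are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]

def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans_pseudo_labels(frames: List[Vector], k: int, iters: int = 20) -> List[int]:
    """Assign each frame a cluster ID (a HuBERT-style discrete target)
    using Lloyd's k-means with the first k frames as initial centroids."""
    if not 0 < k <= len(frames):
        raise ValueError("k must be between 1 and the number of frames")
    centroids = [list(f) for f in frames[:k]]
    labels = [0] * len(frames)
    for _ in range(iters):
        # Assignment step: index of the nearest centroid for every frame.
        labels = [min(range(k), key=lambda j: squared_distance(f, centroids[j]))
                  for f in frames]
        # Update step: move each centroid to the mean of its member frames.
        for j in range(k):
            members = [f for f, lab in zip(frames, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster is empty
                dim = len(members[0])
                centroids[j] = [sum(m[d] for m in members) / len(members)
                                for d in range(dim)]
    return labels

# Hypothetical 2-D "frame features" forming two obvious groups.
frames = [[0.0, 0.0], [10.0, 10.0], [0.1, 0.2], [9.8, 10.1], [0.2, 0.1]]
print(kmeans_pseudo_labels(frames, k=2))  # [0, 1, 0, 1, 0]
```

The integer labels are what HuBERT's masked-prediction loss learns to predict at masked positions; in the next iteration the same procedure is simply rerun on features taken from the previously trained HuBERT model instead of MFCCs.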
## Downstream speech recognition task verification

To verify the effect of the pre-trained models on the downstream ASR task, we follow the experimental configuration of the Conformer [10] model in the ESPnet [7, 8, 9] toolkit. The pre-trained model is used as a feature extractor: the input speech is passed through the pre-trained model, and its hidden-layer representations are combined by a weighted sum. The resulting speech representation replaces the traditional FBank features as the input to the Conformer ASR model.

We trained on the 178-hour Aishell training set as supervised data and compared Character Error Rate (CER) results using FBank features, Wav2vec 2.0 BASE/LARGE model features, and HuBERT BASE/LARGE model features. We also report results on the Aishell test set for a model trained on the 10,000 hours of Chinese data in the WenetSpeech train_l set. Training used speed perturbation (0.9, 1.0, and 1.1 times) and SpecAugment data augmentation; decoding used beam search, followed by rescoring with a Transformer-based language model.

Table 1: Character error rate (CER%) of different models on the Aishell test set

The results in Table 1 show that pre-trained models trained on tens of thousands of hours of unlabeled data significantly improve downstream ASR performance. In particular, the HuBERT LARGE model achieved a relative CER improvement of about 30% on the test set, the best result in the industry for 178 hours of supervised training data.

We then trained on the 100 hours of Chinese data in the WenetSpeech train_s set as supervised data and again compared CER results using FBank features, Wav2vec 2.0 BASE/LARGE model features, and HuBERT BASE/LARGE model features.
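The feature-extraction step above, a weighted sum of the pre-trained model's hidden layers that replaces FBank input, can be sketched as follows. This assumes the common SUPERB/S3PRL-style formulation in which one learnable scalar per layer is softmax-normalized before summing; the function name, list-based tensors, and toy values are hypothetical, and in a real setup the layer weights are trained jointly with the downstream Conformer rather than fixed by hand.

```python
import math
from typing import List

Frames = List[List[float]]  # one layer's output: T frames x D dimensions

def weighted_layer_sum(hidden_states: List[Frames], layer_logits: List[float]) -> Frames:
    """Combine L hidden layers (each T x D) into one T x D representation
    using softmax-normalized per-layer weights."""
    if len(hidden_states) != len(layer_logits):
        raise ValueError("need exactly one weight per layer")
    # Softmax over the per-layer logits (max subtracted for stability).
    m = max(layer_logits)
    exps = [math.exp(w - m) for w in layer_logits]
    total = sum(exps)
    weights = [e / total for e in exps]
    num_frames = len(hidden_states[0])
    dim = len(hidden_states[0][0])
    return [[sum(w * layer[t][d] for w, layer in zip(weights, hidden_states))
             for d in range(dim)]
            for t in range(num_frames)]

# Two hypothetical layers, one frame, two dimensions; equal logits average them.
layers = [[[1.0, 2.0]], [[3.0, 4.0]]]
print(weighted_layer_sum(layers, [0.0, 0.0]))  # [[2.0, 3.0]]
```

Normalizing with a softmax keeps the combined feature on the same scale as the individual layers, and the learned weights indicate which layers of Wav2vec 2.0 or HuBERT the ASR model relies on most.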
In addition, we compared against FBank-feature models trained on the 1,000 hours of Chinese data in the WenetSpeech train_m set and the 10,000 hours in the train_l set. This training used neither speed perturbation nor SpecAugment; decoding used beam search without language model rescoring.

Table 2: Character error rate (CER%) of different models on the WenetSpeech test set

As Table 2 shows, pre-trained models trained on tens of thousands of hours of unlabeled data greatly improve downstream ASR results. In particular, with HuBERT LARGE as the speech representation extractor, the ASR model trained on 100 hours of supervised data outperforms the FBank model trained on 1,000 hours and even approaches the model trained on 10,000 hours.

For more experimental results on downstream speech tasks, see the GitHub repository (https://github.com/TencentGameMate/chinese_speech_pretrain). You are welcome to use the Chinese speech pre-trained models we provide in your research and to explore applications of speech pre-training in Chinese and related scenarios.
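Both tables report character error rate. For Chinese, CER is typically computed at the character level as (S + D + I) / N, where S, D, and I are the substitutions, deletions, and insertions in the minimum edit-distance alignment between hypothesis and reference, and N is the number of reference characters. The sketch below is a plain Levenshtein implementation of that standard definition, not the scoring script used to produce the tables; the function names are hypothetical, and ignoring whitespace is an assumption.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum substitutions + deletions + insertions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,              # delete reference char r
                          curr[j - 1] + 1,          # insert hypothesis char h
                          prev[j - 1] + (r != h))   # substitute, or match for free
        prev = curr
    return prev[-1]

def cer(ref: str, hyp: str) -> float:
    """Character error rate; whitespace is ignored (an assumption)."""
    ref_chars = "".join(ref.split())
    hyp_chars = "".join(hyp.split())
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)

# One substitution (气 -> 汽) and one deletion (很) over 6 reference characters.
print(cer("今天天气很好", "今天天汽好"))
```

Here the alignment has two errors against six reference characters, so CER is 2/6. Because CER is normalized by reference length, a "relative improvement of about 30%" such as the one reported for Table 1 means the new CER is roughly 0.7 times the FBank baseline's CER.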

The above is the detailed content of Can't find the Chinese speech pre-trained model? Chinese version Wav2vec 2.0 and HuBERT are coming. For more information, please follow other related articles on the PHP Chinese website!

MarkItDown MCP Can Convert Any Document into Markdowns!Apr 27, 2025 am 09:47 AM

MarkItDown MCP Can Convert Any Document into Markdowns!Apr 27, 2025 am 09:47 AMHandling documents is no longer just about opening files in your AI projects, it’s about transforming chaos into clarity. Docs such as PDFs, PowerPoints, and Word flood our workflows in every shape and size. Retrieving structured

How to Use Google ADK for Building Agents? - Analytics VidhyaApr 27, 2025 am 09:42 AM

How to Use Google ADK for Building Agents? - Analytics VidhyaApr 27, 2025 am 09:42 AMHarness the power of Google's Agent Development Kit (ADK) to create intelligent agents with real-world capabilities! This tutorial guides you through building conversational agents using ADK, supporting various language models like Gemini and GPT. W

Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AM

Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AMsummary: Small Language Model (SLM) is designed for efficiency. They are better than the Large Language Model (LLM) in resource-deficient, real-time and privacy-sensitive environments. Best for focus-based tasks, especially where domain specificity, controllability, and interpretability are more important than general knowledge or creativity. SLMs are not a replacement for LLMs, but they are ideal when precision, speed and cost-effectiveness are critical. Technology helps us achieve more with fewer resources. It has always been a promoter, not a driver. From the steam engine era to the Internet bubble era, the power of technology lies in the extent to which it helps us solve problems. Artificial intelligence (AI) and more recently generative AI are no exception

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AM

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AMHarness the Power of Google Gemini for Computer Vision: A Comprehensive Guide Google Gemini, a leading AI chatbot, extends its capabilities beyond conversation to encompass powerful computer vision functionalities. This guide details how to utilize

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AM

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AMThe AI landscape of 2025 is electrifying with the arrival of Google's Gemini 2.0 Flash and OpenAI's o4-mini. These cutting-edge models, launched weeks apart, boast comparable advanced features and impressive benchmark scores. This in-depth compariso

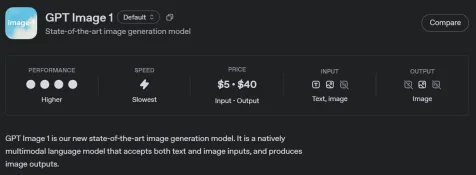

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AM

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AMOpenAI's latest multimodal model, gpt-image-1, revolutionizes image generation within ChatGPT and via its API. This article explores its features, usage, and applications. Table of Contents Understanding gpt-image-1 Key Capabilities of gpt-image-1

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AM

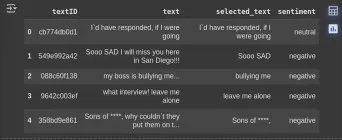

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AMData preprocessing is paramount for successful machine learning, yet real-world datasets often contain errors. Cleanlab offers an efficient solution, using its Python package to implement confident learning algorithms. It automates the detection and

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AM

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AMThe term "AI-ready workforce" is frequently used, but what does it truly mean in the supply chain industry? According to Abe Eshkenazi, CEO of the Association for Supply Chain Management (ASCM), it signifies professionals capable of critic

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

WebStorm Mac version

Useful JavaScript development tools