Just train once to generate new 3D scenes! The evolution of Google's "Light Field Neural Rendering"


View synthesis is a key problem at the intersection of computer vision and computer graphics. It refers to creating a new view of a scene from multiple pictures of the scene.

To accurately synthesize a new view of a scene, a model needs to capture several kinds of information from a small set of reference images, such as detailed 3D structure, materials, and lighting.

Since researchers proposed the Neural Radiance Fields (NeRF) model in 2020, the problem has received increasing attention, which has greatly advanced the performance of novel view synthesis.

One of the biggest players is Google, which has published many papers in the NeRF field. This article introduces two papers that Google published at CVPR 2022 and ECCV 2022, tracing the evolution of the light field neural rendering model.

The first paper proposes a two-stage Transformer-based model that learns to combine reference pixel colors. It first aggregates features along the epipolar lines, then aggregates features across the reference views to produce the color of the target ray, greatly improving the accuracy of view reproduction.

Paper link: https://arxiv.org/pdf/2112.09687.pdf

Classic light field rendering can accurately reproduce view-dependent effects such as reflection, refraction, and translucency, but it requires dense view sampling of the scene. Methods based on geometric reconstruction need only sparse views, but cannot accurately model non-Lambertian effects, that is, non-ideal scattering.

The new model proposed in this paper combines the strengths of both directions and mitigates their limitations. By operating on a four-dimensional representation of the light field, the model learns to represent view-dependent effects accurately. By enforcing geometric constraints during training and inference, it learns scene geometry implicitly from a sparse set of views.

The model outperforms state-of-the-art models on multiple forward-facing and 360° datasets, with a larger margin on scenes with severe view-dependent variations.

The second paper tackles generalization to unseen scenes by using a sequence of Transformers with canonicalized positional encoding. After the model is trained on a set of scenes, it can synthesize views of new scenes.

Paper link: https://arxiv.org/pdf/2207.10662.pdf

This paper proposes a different paradigm that requires neither deep features nor NeRF-like volume rendering. The method directly predicts the color of a target ray in a new scene from just a set of patches sampled from the scene.

It first uses epipolar geometry to extract patches along the epipolar lines of each reference view and linearly projects each patch into a one-dimensional feature vector. A series of Transformers then processes this set.
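The linear projection step can be sketched as a single matrix multiply over flattened patches. This is a minimal illustration, not the paper's code: the function name `embed_patches`, the feature width, and the weights (which would be learned in practice) are all assumptions.

```python
import numpy as np

def embed_patches(patches, weight, bias):
    """Linearly project image patches to 1D feature vectors.

    patches: (N, P, P, C) array of N square patches
    weight:  (P * P * C, D) projection matrix (learned in a real model)
    bias:    (D,) offset
    Returns an (N, D) array with one feature vector per patch.
    """
    # Flatten every patch into a single row, then apply the projection.
    flat = patches.reshape(patches.shape[0], -1)
    return flat @ weight + bias

# Hypothetical sizes: five 3x3 RGB patches projected to 16 features.
rng = np.random.default_rng(0)
demo_patches = rng.random((5, 3, 3, 3))
demo_features = embed_patches(demo_patches, rng.normal(size=(27, 16)), np.zeros(16))
```

The resulting rows are the tokens that a Transformer would consume.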

For positional encoding, the researchers parameterize rays in a way similar to a light field representation. The difference is that the coordinates are canonicalized relative to the target ray, which makes the method independent of the reference frame and improves generalization.

The model's innovation is that it performs image-based rendering, combining colors and features from the reference images to render a new view, and that it is purely Transformer-based, operating on sets of image patches. It also uses a 4D light field representation for positional encoding, which helps model view-dependent effects.

Experimental results show that the method outperforms other methods at novel view synthesis of unseen scenes, even when trained with much less data than those methods.

Light Field Neural Rendering

The input to the model consists of a set of reference images, the corresponding camera parameters (focal length, position, and spatial orientation), and the coordinates of the target ray whose color the user wants.

To generate a new image, we start from the camera parameters of the input images, derive the coordinates of the target rays (one per pixel), and query the model for each coordinate.
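As a rough sketch of how one target ray per pixel can be derived from camera parameters, the snippet below assumes a simple pinhole camera with the principal point at the image center and the camera looking along +z. The function name `pixel_rays` and these conventions are illustrative assumptions, not taken from the papers.

```python
import numpy as np

def pixel_rays(focal, width, height, cam_to_world):
    """Return per-pixel ray origins and unit directions in world space.

    focal:        focal length in pixels
    cam_to_world: 4x4 camera pose (rotation and position)
    Assumes a pinhole camera looking along +z with the principal point
    at the image center (an illustrative convention).
    """
    # Pixel centers on a (height, width) grid.
    u, v = np.meshgrid(np.arange(width) + 0.5, np.arange(height) + 0.5)
    # Ray directions in camera coordinates.
    dirs_cam = np.stack(
        [(u - width / 2) / focal, (v - height / 2) / focal, np.ones_like(u)],
        axis=-1,
    )
    rotation, position = cam_to_world[:3, :3], cam_to_world[:3, 3]
    # Rotate into world space and normalize to unit length.
    dirs_world = dirs_cam @ rotation.T
    dirs_world /= np.linalg.norm(dirs_world, axis=-1, keepdims=True)
    # Every ray of a pinhole camera starts at the camera position.
    origins = np.broadcast_to(position, dirs_world.shape)
    return origins, dirs_world
```

Each `(origin, direction)` pair is then one query to the model.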

The researchers’ solution was to not fully process each reference image, but only to look at the areas that might affect the target pixels. These regions can be determined by epipolar geometry, mapping each target pixel to a line on each reference frame.
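One way to see why a target pixel maps to a line in each reference image is to project 3D samples taken along the target ray into a reference camera: the projections are collinear. The sketch below assumes a pinhole model with a 3x3 intrinsics matrix and a 3x4 `[R | t]` world-to-camera matrix; the function name is hypothetical.

```python
import numpy as np

def project_ray_samples(origin, direction, depths, intrinsics, world_to_cam):
    """Project 3D samples along a target ray into a reference image.

    intrinsics:   3x3 pinhole matrix of the reference camera
    world_to_cam: 3x4 [R | t] matrix, +z forward (assumed convention)
    Returns an (N, 2) array of (x, y) pixel coordinates, one per depth.
    """
    points = origin + depths[:, None] * direction                 # (N, 3)
    points_h = np.concatenate([points, np.ones((len(depths), 1))], axis=1)
    cam = points_h @ world_to_cam.T                               # camera space
    pix = cam @ intrinsics.T                                      # homogeneous pixels
    return pix[:, :2] / pix[:, 2:3]

# Reference camera shifted one unit along x; target ray along +z.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
Rt = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
line_points = project_ray_samples(
    np.zeros(3), np.array([0.0, 0.0, 1.0]), np.array([1.0, 2.0, 4.0]), K, Rt
)
```

In this toy setup all projected points share the same `y`, so they fall on a horizontal epipolar line.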

To be safe, a small region is selected around a number of points on each epipolar line, forming the set of patches that the model actually processes. A Transformer is then applied to this set of patches to obtain the color of the target pixel.
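Patch extraction around points on an epipolar line might look like the following. It is a simplified sketch: points are rounded to the nearest pixel and the image is edge-padded, both of which are assumptions made here for brevity, and `patch_size` is expected to be odd.

```python
import numpy as np

def extract_patches(image, points, patch_size=3):
    """Cut square patches centered on (x, y) points of an (H, W, C) image.

    Points are rounded to the nearest pixel and the image is edge-padded
    so that patches near the border keep the same size (simplifications
    made for this sketch). patch_size is assumed to be odd.
    """
    half = patch_size // 2
    padded = np.pad(image, ((half, half), (half, half), (0, 0)), mode="edge")
    height, width = image.shape[:2]
    patches = []
    for x, y in points:
        col = int(np.clip(np.rint(x), 0, width - 1))
        row = int(np.clip(np.rint(y), 0, height - 1))
        # In padded coordinates the patch centered on (row, col) starts at (row, col).
        patches.append(padded[row:row + patch_size, col:col + patch_size])
    return np.stack(patches)
```

Calling it with the pixel coordinates sampled along one epipolar line yields an `(N, patch_size, patch_size, C)` array for that reference view.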

Transformers are particularly useful here because the self-attention mechanism naturally takes a set of patches as input, and the attention weights themselves can be used to combine reference view colors and features to predict the color of the output pixel.
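The idea of reusing attention weights to blend reference colors can be illustrated with plain scaled dot-product attention. This is a toy version with no learned parameters; the names `query`, `keys`, and `colors` are assumptions for illustration.

```python
import numpy as np

def attention_blend(query, keys, colors):
    """Blend reference colors with softmax attention weights.

    query:  (D,)   feature describing the target ray
    keys:   (N, D) features of N reference patches
    colors: (N, 3) RGB color associated with each patch
    Returns the blended (3,) color and the (N,) attention weights.
    """
    scores = keys @ query / np.sqrt(query.shape[0])
    scores = scores - scores.max()          # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum()       # softmax over the N patches
    return weights @ colors, weights
```

Because the weights are non-negative and sum to one, the predicted color is always a convex combination of the reference colors.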

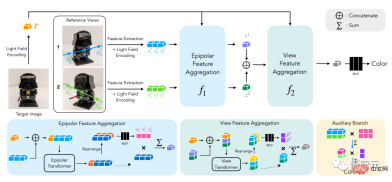

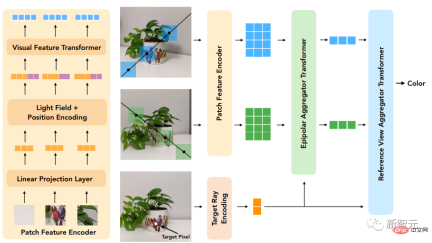

In Light Field Neural Rendering (LFNR), the researchers use a sequence of two Transformers to map the set of patches to the target pixel color.

The first Transformer aggregates information along each epipolar line, and the second Transformer aggregates information along each reference image.

The first Transformer can be interpreted as finding potential correspondences of the target pixel on each reference frame, while the second reasons about occlusion and view-dependent effects, which are common difficulties in image-based rendering.
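The two-stage flow of data can be sketched with simple attention pooling standing in for the two Transformers. This only shows how the tensor shapes collapse, first along each epipolar line and then across reference views; the real model uses learned Transformer layers, and the function names are hypothetical.

```python
import numpy as np

def attention_pool(tokens, query):
    """Softmax-attention pooling of (..., N, D) tokens with a (D,) query."""
    scores = tokens @ query / np.sqrt(query.shape[-1])           # (..., N)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return np.einsum("...n,...nd->...d", weights, tokens)

def two_stage_aggregate(features, ray_query):
    """Collapse (views, points_per_line, D) features into one (D,) vector."""
    # Stage 1: aggregate along each epipolar line -> (views, D).
    per_view = attention_pool(features, ray_query)
    # Stage 2: aggregate across the reference views -> (D,).
    return attention_pool(per_view, ray_query)
```

A final layer (omitted here) would map the pooled feature to a color.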

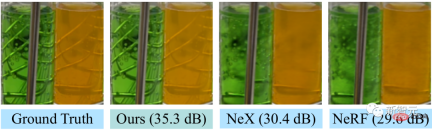

On the most popular view synthesis benchmarks (NeRF's Blender and Real Forward-Facing scenes, and NeX's Shiny), LFNR improves on the state-of-the-art models by up to 5 dB in peak signal-to-noise ratio (PSNR), which corresponds to reducing the pixel-wise error by a factor of 1.8.
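The link between a 5 dB gain and a 1.8x error reduction follows from the definition of PSNR, assuming "pixel-wise error" means root-mean-square error: a gain of g dB divides the mean squared error by 10^(g/10) and therefore the RMSE by 10^(g/20). A small check:

```python
import math

def psnr(mse, max_value=1.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_value ** 2 / mse)

def rmse_ratio_for_gain(gain_db):
    """Factor by which RMSE shrinks for a PSNR gain of gain_db decibels."""
    return 10.0 ** (gain_db / 20.0)

ratio = rmse_ratio_for_gain(5.0)  # about 1.78
```

So a 5 dB improvement corresponds to an RMSE roughly 1.78 times smaller, which rounds to the 1.8 quoted above.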

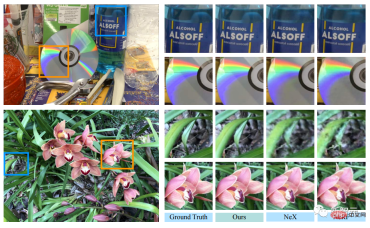

LFNR can reproduce some of the more difficult view-dependent effects in the NeX/Shiny dataset, such as the rainbow and reflections on a CD, and the reflections, refraction, and translucency of bottles.

Previous methods such as NeX and NeRF fail to reproduce view-dependent effects such as the translucency and refraction of the test tubes in the lab scene of the NeX/Shiny dataset.

But LFNR also has limitations.

The first Transformer collapses information along each epipolar line independently for each reference image. This means the model can decide which information to keep based only on the output ray coordinates and the patches of each reference image. That works well when training on a single scene (as most neural rendering methods do), but it does not generalize across scenes.

Generalizable models are important because they can be applied directly to new scenes without retraining.

To overcome this shortcoming of LFNR, the researchers proposed the Generalizable Patch-Based Neural Rendering (GPNR) model.

GPNR adds a Transformer that runs before the other two and exchanges information between points at the same depth across all reference images.

GPNR consists of a sequence of three Transformers that map a set of patches extracted along epipolar lines to a pixel color. Image patches are mapped to initial features by a linear projection layer, and these features are then successively refined and aggregated by the model to produce the final features and color.
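The order of the three stages can be sketched with an unparameterized self-attention applied along different axes. This is only a shape-level illustration under strong assumptions: real Transformer layers have learned weights, and the mean pooling used here to collapse an axis is a stand-in for the model's learned aggregation.

```python
import numpy as np

def self_attention(tokens):
    """Single-head self-attention without learned weights over axis -2."""
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(tokens.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ tokens

def gpnr_like_stages(features):
    """Map (views, depths, D) patch features to a single (D,) feature."""
    # Stage 1: for each depth, exchange information across views.
    by_depth = np.swapaxes(features, 0, 1)                    # (depths, views, D)
    across_views = np.swapaxes(self_attention(by_depth), 0, 1)
    # Stage 2: aggregate along each epipolar line -> (views, D).
    per_view = self_attention(across_views).mean(axis=1)
    # Stage 3: aggregate across reference views -> (D,).
    return self_attention(per_view).mean(axis=0)
```

With this construction the output does not depend on the order in which the reference views are supplied, which matches the set-based view of the input described above.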

For example, after the first Transformer processes the patch sequences extracted from "Park Bench", the model can use cues such as the "Flowers" that appear at corresponding depths in both views, which indicate a potential match.

Another key idea of this work is to canonicalize the positional encoding with respect to the target ray: to generalize across scenes, quantities must be represented in a relative rather than an absolute frame of reference.
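One simple way to express rays relative to a target ray is to move the target origin to the coordinate origin and rotate so that the target direction becomes the +z axis. The sketch below is an illustrative construction, not the paper's exact parameterization; in particular, the helper axis that fixes the roll around the target ray is an arbitrary choice made here.

```python
import numpy as np

def canonical_frame(target_dir):
    """Rotation whose rows are an orthonormal basis with z along target_dir."""
    z = target_dir / np.linalg.norm(target_dir)
    # Any helper axis not parallel to z works; this choice fixes the roll.
    helper = np.array([1.0, 0.0, 0.0]) if abs(z[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    x = np.cross(helper, z)
    x = x / np.linalg.norm(x)
    y = np.cross(z, x)
    return np.stack([x, y, z])

def canonicalize_ray(ref_origin, ref_dir, target_origin, target_dir):
    """Express a reference ray in the frame attached to the target ray."""
    rotation = canonical_frame(target_dir)
    return rotation @ (ref_origin - target_origin), rotation @ ref_dir
```

In this frame the target ray itself always sits at the origin pointing along +z, and shifting the whole scene by a global translation leaves the canonical coordinates unchanged.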

To evaluate the model's generalization performance, the researchers trained GPNR on one set of scenes and tested it on new scenes.

GPNR improves by 0.5-1.0 dB on average on several benchmarks (following the IBRNet and MVSNeRF protocols). On the IBRNet benchmark in particular, GPNR outperforms the baselines while using only 11% of the training scenes.

GPNR generates detailed views of held-out scenes from NeX/Shiny and LLFF without any fine-tuning. It reproduces the details on the blades and the refraction through the lens more accurately than IBRNet.
