Research shows that the widespread use of general-purpose AI tools creates new challenges that regulators may struggle to meet. In fact, how to regulate generative AI tools such as OpenAI’s chatbot ChatGPT has become a problem troubling policymakers around the world.

ChatGPT can generate almost any type of content from a few keywords, and it has been trained on a vast amount of data. Any regulatory solution will involve assessing its risks, some of which warrant particularly close monitoring.

Within two months of its launch, ChatGPT had become the fastest-growing consumer product in history, with more than 100 million active users in January this year alone. This has prompted large technology companies around the world to focus on, or accelerate the launch of, their own AI systems, and has brought new vitality to the field of conversational AI.

Microsoft is embedding conversational AI in its browser, search engine and broader product range; Google plans to do the same with its chatbot Bard and other integrations in Gmail and Google Cloud; Baidu and other tech giants are also launching their own chatbots; and startups such as Jasper and Quora are bringing generative and conversational AI into mainstream consumer and enterprise markets.

Generative AI accelerates the demand for regulation

Widespread misinformation and hard-to-detect phishing emails are real risks of applying AI, and if AI is used to provide medical information, it can lead to misdiagnoses and medical errors. There is also a high risk of bias if the data used to train the model is not diverse.

Although Microsoft has retrained its model to be more accurate, and providers such as AI21 Inc. are working to validate generated content against live data, the risk that generative AI produces responses that "look real but are completely inaccurate" remains high.

European Union Internal Market Commissioner Thierry Breton recently stated that the upcoming EU AI bill will include provisions for generative AI systems such as ChatGPT and Bard.

“As ChatGPT shows, AI solutions can offer businesses and citizens huge opportunities, but they can also bring risks. That’s why we need a solid regulatory framework to ensure trustworthy AI based on high-quality data,” he said.

AI development needs to be ethical

Analytics software provider SAS outlined some of the risks posed by AI in a report titled "AI and Responsible Innovation". Dr. Kirk Borne, author of the report, said: “AI has become so powerful and so pervasive that it is increasingly difficult to tell whether the content it generates is true or false, good or bad. This technology is being adopted significantly faster than it is being regulated.”

Dr. Iain Brown, head of data science at SAS UK and Ireland, said: “Both government and industry have a responsibility to ensure AI is used for good rather than harm. This includes using an ethical framework to guide the development of AI models and strict governance to ensure these models make fair, transparent and equitable decisions. We can compare AI models against challenger models and optimize them as new data becomes available.”

Other experts believe that software developers will be required to mitigate the risks posed by their software, and that only the riskiest activities will face stricter regulatory measures.

Edward Machin, an associate in the data, privacy and cybersecurity practice at Ropes & Gray LLP, said it was inevitable that technologies like ChatGPT, which seem to appear overnight, would be adopted faster than regulation can keep pace, especially in an area like AI that is already difficult to regulate.

He said: “Although these technologies will be regulated, how and when that happens remains to be seen. Providers of AI systems will bear the brunt, but importers and distributors (at least in the EU) will also bear potential obligations. This may put some open source software developers in a difficult position. How the responsibilities of open source developers and other downstream parties are handled could have a chilling effect on their willingness to innovate and conduct research.”

Copyright, Privacy and GDPR Regulations

Machin also believes that, beyond the overall supervision of AI, there are issues regarding the copyright and privacy of the content it generates. For example, it is unclear how easily (if at all) developers can handle individuals' requests for deletion or correction, or how they can reconcile scraping large amounts of data from third-party sites with those sites' terms of service, which such scraping may violate.

Lilian Edwards, a professor of law, innovation and society at Newcastle University who works on AI regulation at the Alan Turing Institute, said some of these models will be subject to the GDPR, which could lead to orders to delete training data or even the algorithms themselves. If website owners lose traffic to AI search, it could also mean the end of the massive scraping of data from the internet that currently powers search engines like Google.

She pointed out that the biggest problem is the general-purpose nature of these AI models. This makes them difficult to regulate under the EU AI Act, which is drafted around risk, because it is hard to tell what end users will do with the technology. The European Commission is trying to add rules to govern this type of technology.

Greater algorithmic transparency may be a solution. "Big Tech is going to start lobbying regulators, saying, 'You can't impose these obligations on us because we can't imagine every future risk or use,'" Edwards said. "There are ways of dealing with this problem that could more or less help Big Tech companies, including making the underlying algorithms more transparent. We are in a difficult moment and need incentives to move toward openness and transparency in order to better understand how AI makes decisions and generates content."

She also said: “This is the same problem people encounter with more boring technology: because technology is global and people with bad intentions are everywhere, it is very difficult to regulate. The behavior of general-purpose AI is hard to fit into AI regulations.”

Adam Leon Smith, chief technology officer of AI consultancy DragonFly, said: "Global regulators are increasingly aware that it is difficult to regulate AI technology without considering the context in which it is actually used. Accuracy and bias requirements can only be considered in the context of use, and it is difficult to consider risk, rights and freedom requirements before large-scale adoption."

"Regulators can mandate transparency and logging from AI technology providers. However, only the users who operate and deploy large language model (LLM) systems for specific purposes can understand the risks and implement mitigations through human oversight or continuous monitoring," he added.

AI regulation is imminent

There has been extensive debate on AI regulation within the European Commission, and data regulators must take the matter seriously. Leon Smith believes that, as regulators pay more attention to the issue, AI providers will eventually start listing the purposes for which the technology "must not be used", including by issuing legal disclaimers before users log in, in order to place themselves outside the scope of risk-based regulatory action.

Leon Smith said that current best practices for managing AI systems barely address large language models, an emerging field that is developing extremely rapidly. While there is a lot of work to be done in this area, many of the companies offering these technologies are not helping to define those practices.

OpenAI Chief Technology Officer Mira Murati has also said that generative AI tools need to be regulated: "It is important for companies like ours to make the public aware of this in a controlled and responsible way." But she added that, beyond regulating AI providers, much more input into AI systems is needed, including from regulators and governments.

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM

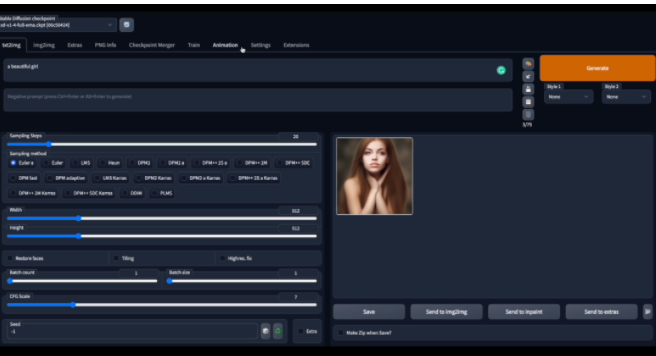

令人惊艳的4个ChatGPT项目,开源了!Mar 30, 2023 pm 02:11 PM自从 ChatGPT、Stable Diffusion 发布以来,各种相关开源项目百花齐放,着实让人应接不暇。今天,着重挑选几个优质的开源项目分享给大家,对我们的日常工作、学习生活,都会有很大的帮助。

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AM

Word文档拆分后的子文档字体格式变了怎么办Feb 07, 2023 am 11:40 AMWord文档拆分后的子文档字体格式变了的解决办法:1、在大纲模式拆分文档前,先选中正文内容创建一个新的样式,给样式取一个与众不同的名字;2、选中第二段正文内容,通过选择相似文本的功能将剩余正文内容全部设置为新建样式格式;3、进入大纲模式进行文档拆分,操作完成后打开子文档,正文字体格式就是拆分前新建的样式内容。

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM

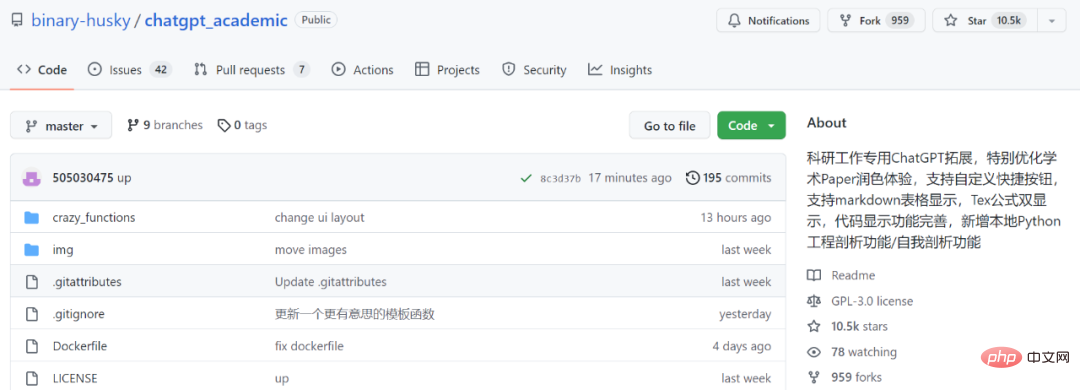

学术专用版ChatGPT火了,一键完成论文润色、代码解释、报告生成Apr 04, 2023 pm 01:05 PM用 ChatGPT 辅助写论文这件事,越来越靠谱了。 ChatGPT 发布以来,各个领域的从业者都在探索 ChatGPT 的应用前景,挖掘它的潜力。其中,学术文本的理解与编辑是一种极具挑战性的应用场景,因为学术文本需要较高的专业性、严谨性等,有时还需要处理公式、代码、图谱等特殊的内容格式。现在,一个名为「ChatGPT 学术优化(chatgpt_academic)」的新项目在 GitHub 上爆火,上线几天就在 GitHub 上狂揽上万 Star。项目地址:https://github.com/

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM

vscode配置中文插件,带你无需注册体验ChatGPT!Dec 16, 2022 pm 07:51 PM面对一夜爆火的 ChatGPT ,我最终也没抵得住诱惑,决定体验一下,不过这玩意要注册需要外国手机号以及科学上网,将许多人拦在门外,本篇博客将体验当下爆火的 ChatGPT 以及无需注册和科学上网,拿来即用的 ChatGPT 使用攻略,快来试试吧!

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM

30行Python代码就可以调用ChatGPT API总结论文的主要内容Apr 04, 2023 pm 12:05 PM阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。 阅读论文可以说是我们的日常工作之一,论文的数量太多,我们如何快速阅读归纳呢?自从ChatGPT出现以后,有很多阅读论文的服务可以使用。其实使用ChatGPT API非常简单,我们只用30行python代码就可以在本地搭建一个自己的应用。使用 Python 和 C

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AM

用ChatGPT秒建大模型!OpenAI全新插件杀疯了,接入代码解释器一键getApr 04, 2023 am 11:30 AMChatGPT可以联网后,OpenAI还火速介绍了一款代码生成器,在这个插件的加持下,ChatGPT甚至可以自己生成机器学习模型了。 上周五,OpenAI刚刚宣布了惊爆的消息,ChatGPT可以联网,接入第三方插件了!而除了第三方插件,OpenAI也介绍了一款自家的插件「代码解释器」,并给出了几个特别的用例:解决定量和定性的数学问题;进行数据分析和可视化;快速转换文件格式。此外,Greg Brockman演示了ChatGPT还可以对上传视频文件进行处理。而一位叫Andrew Mayne的畅销作

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM

ChatGPT教我学习PHP中AOP的实现(附代码)Mar 30, 2023 am 10:45 AM本篇文章给大家带来了关于php的相关知识,其中主要介绍了我是怎么用ChatGPT学习PHP中AOP的实现,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.