Has the language model learned to use search engines on its own? Meta AI proposes Toolformer, a self-supervised method for learning API calls

In natural language processing tasks, large language models have achieved impressive results in zero-shot and few-shot learning. However, all models have inherent limitations that can often be only partially addressed by further scaling. Specifically, these limitations include the inability to access up-to-date information, a tendency to hallucinate facts, difficulty understanding low-resource languages, and a lack of the mathematical skills needed for precise calculation.

A simple way to solve these problems is to equip the model with external tools, such as a search engine, calculator, or calendar. However, existing methods often rely on extensive manual annotations or limit the use of tools to specific task settings, making the use of language models combined with external tools difficult to generalize.

In order to break this bottleneck, Meta AI recently proposed a new method called Toolformer, which allows the language model to learn to "use" various external tools.

Paper address: https://arxiv.org/pdf/2302.04761v1.pdf

Toolformer quickly attracted great attention. Some people believed that this paper solved many problems of current large language models and praised it: "This is the most important paper in recent weeks."

Some people pointed out that Toolformer uses self-supervised learning to let large language models learn to use APIs and tools, an approach they described as very flexible and efficient.

Some even think that Toolformer will bring us one step closer to artificial general intelligence (AGI).

Toolformer gets such a high rating because it meets the following practical needs:

- Large language models should learn the use of tools in a self-supervised manner without the need for extensive human annotation. This is critical because the cost of human annotation is high, but more importantly, what humans think is useful may be different from what the model thinks is useful.

- Language models need more general use of tools, not use that is bound to a specific task.

This clearly breaks the bottleneck mentioned above. Let’s take a closer look at Toolformer’s methods and experimental results.

Method

Toolformer builds on the idea of using large language models with in-context learning (ICL) to generate datasets from scratch (Schick and Schütze, 2021b; Honovich et al., 2022; Wang et al., 2022). Given just a few human-written examples of API usage, the LM annotates a huge language modeling dataset with potential API calls. A self-supervised loss is then used to determine which API calls actually help the model predict future tokens. Finally, the LM is fine-tuned on the API calls that it finds useful itself.

Since Toolformer is agnostic to the dataset used, it can be applied to the exact same dataset the model was pre-trained on, which ensures that the model does not lose any of its generality or language modeling capabilities.

Specifically, the goal of this research is to equip the language model M with the ability to use various tools through API calls. This requires that the input and output of each API can be characterized as a sequence of text. This allows API calls to be seamlessly inserted into any given text, with special tokens used to mark the beginning and end of each such call.
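The idea of writing an API call as text and splicing it into a passage can be sketched as follows. This is a minimal illustration, not the paper's implementation: the marker strings `<API>`, `</API>`, and `→`, the `APICall` class, and the helper names are all assumptions chosen for readability.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder marker strings (assumed for illustration only).
API_START = "<API>"
API_END = "</API>"
RESULT_SEP = "→"


@dataclass(frozen=True)
class APICall:
    name: str        # which tool to call, e.g. "Calculator"
    input_text: str  # the tool input, as plain text


def linearize(call: APICall, result: Optional[str] = None) -> str:
    """Render an API call (optionally with its result) as a text span."""
    body = f"{call.name}({call.input_text})"
    if result is not None:
        body = f"{body} {RESULT_SEP} {result}"
    return f"{API_START} {body} {API_END}"


def insert_call(text: str, position: int, call: APICall,
                result: Optional[str] = None) -> str:
    """Insert the linearized call into `text` before character index `position`."""
    return f"{text[:position]}{linearize(call, result)} {text[position:]}"


if __name__ == "__main__":
    text = "The total is 1400."
    call = APICall("Calculator", "400 + 1000")
    print(insert_call(text, text.index("1400"), call, result="1400"))
```

Because both the call and its result are ordinary text, the annotated passage can be fed to the same language model without any architectural change.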

The study represents each API call as a tuple

, where a_c is the name of the API and i_c is the corresponding input. Given an API call c with corresponding result r, this study represents the linearized sequence of API calls excluding and including its result as:

Among them,

Given data set

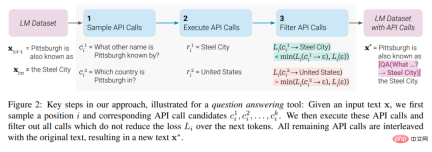

, the study first transformed this data set into a data set C* with added API calls. This is done in three steps, as shown in Figure 2 below: First, the study leverages M's in-context learning capabilities to sample a large number of potential API calls, then executes these API calls, and then checks whether the obtained responses help predictions Future token to be used as filtering criteria. After filtering, the study merges API calls to different tools, ultimately generating dataset C*, and fine-tunes M itself on this dataset.
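The filtering step can be sketched in a few lines. The text above only says that a call is kept when its response helps predict future tokens; the exact rule below (comparing against the better of two baselines and requiring a minimum improvement `threshold`) is one plausible form of such a filter and should be read as an assumption. The losses are taken as precomputed inputs, and all names are hypothetical.

```python
from typing import List, NamedTuple


class CandidateLosses(NamedTuple):
    """Language-modeling losses on the tokens that follow a candidate call site."""
    no_call: float              # loss with no API call inserted
    call_without_result: float  # loss with the call inserted but its result withheld
    call_with_result: float     # loss with both the call and its result inserted


def is_helpful(losses: CandidateLosses, threshold: float) -> bool:
    """Keep a call only if seeing its result lowers the loss by at least `threshold`."""
    best_without_result = min(losses.no_call, losses.call_without_result)
    return best_without_result - losses.call_with_result >= threshold


def filter_calls(candidates: List[CandidateLosses],
                 threshold: float = 1.0) -> List[int]:
    """Return the indices of candidates that pass the filter."""
    return [i for i, c in enumerate(candidates) if is_helpful(c, threshold)]


if __name__ == "__main__":
    candidates = [
        CandidateLosses(no_call=3.0, call_without_result=3.2, call_with_result=1.5),
        CandidateLosses(no_call=2.0, call_without_result=2.1, call_with_result=1.9),
    ]
    print(filter_calls(candidates, threshold=1.0))
```

Only the first candidate survives here: its result cuts the loss by 1.5, while the second improves it by only about 0.1. No human label is involved, which is what makes the procedure self-supervised.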

Experiments and Results

The study ran experiments on a variety of downstream tasks. The results show that Toolformer, which is based on a pre-trained GPT-J model with 6.7B parameters and has learned to use various APIs and tools, significantly outperforms the much larger GPT-3 model and several other baselines on a range of tasks.

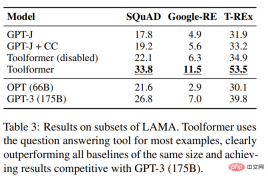

This study evaluated several models on the SQuAD, GoogleRE and T-REx subsets of the LAMA benchmark. The experimental results are shown in Table 3 below:

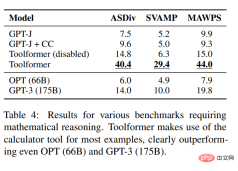

To test the mathematical reasoning capabilities of Toolformer, the study conducted experiments on the ASDiv, SVAMP, and MAWPS benchmarks. The experiments show that Toolformer calls the calculator tool in most cases and significantly outperforms OPT (66B) and GPT-3 (175B).

In terms of question answering, the study conducted experiments on three question answering datasets: Web Questions, Natural Questions, and TriviaQA. Toolformer significantly outperforms baseline models of the same size, but is inferior to GPT-3 (175B).

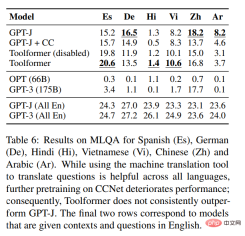

In terms of cross-lingual tasks, the study compared Toolformer with all baseline models on MLQA. The results are shown in Table 6:

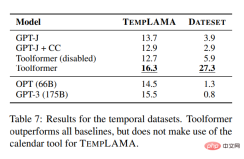

To study the effectiveness of the calendar API, the study ran experiments with several models on TEMPLAMA and on a new dataset called DATESET. Toolformer outperforms all baselines, but it does not use the calendar tool on TEMPLAMA.

In addition to validating performance improvements on various downstream tasks, the study also wants to ensure that Toolformer's language modeling performance is not degraded by fine-tuning with API calls. To this end, the study evaluates the models on two language modeling datasets; their perplexities are shown in Table 8 below.

For language modeling without any API calls, adding API calls to the training data comes at no cost.

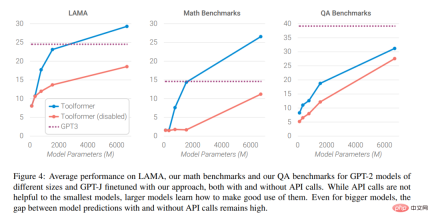
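For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. The sketch below assumes the per-token natural-log probabilities have already been obtained from a model; the function name and input format are illustrative and not taken from the paper.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token), natural log."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # A model that assigns probability 0.25 to every token behaves like a
    # uniform choice among four options, so its perplexity is about 4.
    print(perplexity([math.log(0.25)] * 4))
```

Comparing this number before and after fine-tuning, with API calls disabled, is a direct way to check whether general language modeling ability has been preserved.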

Finally, the researchers analyzed how the ability to seek help from external tools affects model performance as the size of the language model increases. The results of this analysis are shown in Figure 4.

Interested readers can read the original paper for more details of the study.
