
HuggingGPT takes off: one ChatGPT controls all AI models and automatically helps people complete AI tasks. Netizens: leave us something to eat.

The strongest combination: HuggingFace + ChatGPT.

HuggingGPT is here!

Just give it an AI task, such as "What animals are in the picture below, and how many of each type are there?"

It automatically works out which AI models are needed, then directly calls the corresponding models on HuggingFace to carry out the task for you.

Throughout the process, all you have to do is state your requirements in natural language.

This collaboration between Zhejiang University and Microsoft Research Asia took off as soon as it was released.

NVIDIA AI research scientist Jim Fan put it bluntly:

This is the most interesting paper I have read this week. Its idea is very close to the "Everything App" (everything is an app, and information is read directly by AI).

And one netizen exclaimed:

Isn't this just ChatGPT as a "Tiao Bao Xia" (slang for someone who only calls ready-made packages)?

AI is evolving so fast; leave us something to eat...

So, what exactly is going on?

HuggingGPT: Your AI model "Tiao Bao Xia"

In fact, seeing this combination as just a "Tiao Bao Xia" is thinking too small.

Its real ambition is AGI.

As the authors put it, a key step towards AGI is the ability to solve complex AI tasks spanning different domains and modalities.

Current results are still far from this: most models can only perform one specific task well.

However, the performance of large language models (LLMs) in language understanding, generation, interaction and reasoning led the authors to think:

They can serve as intermediate controllers that manage all existing AI models, "mobilizing and combining everyone's power" to solve complex AI tasks.

In this system, language is the universal interface.

So, HuggingGPT was born.

Its workflow is divided into four steps:

First, task planning. ChatGPT parses the user's request into a task list and determines the execution order and resource dependencies among the tasks.

Second, model selection. ChatGPT assigns a suitable model to each task based on the descriptions of the expert models hosted on HuggingFace.

Third, task execution. The selected expert models run on hybrid endpoints (both local inference and HuggingFace inference), execute their assigned tasks according to the task order and dependencies, and return the execution information and results to ChatGPT.

Finally, response generation. ChatGPT summarizes the execution logs and inference results of each model and gives the final output.
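To make the four steps concrete, here is a minimal Python sketch of such a controller loop. It is not the authors' code: the `Task` class, the `MODELS` registry, the `<result-N>` placeholder syntax and both toy models are hypothetical, and the two stages that would call ChatGPT (planning and summarizing) are replaced by hard-coded stubs so that the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Task:
    id: int
    kind: str                       # task type, e.g. "image-to-text"
    args: Dict[str, str]
    deps: List[int] = field(default_factory=list)


# Hypothetical registry: model name -> (description, callable).
# Real expert models would be hosted on HuggingFace; these are local stubs.
MODELS: Dict[str, Tuple[str, Callable[[Dict[str, str]], str]]] = {
    "toy-captioner": ("image-to-text: describes an image",
                      lambda args: f"a caption for {args['image']}"),
    "toy-tts": ("text-to-speech: reads text aloud",
                lambda args: f"audio({args['text']})"),
}


def plan(request: str) -> List[Task]:
    """Step 1 (stub): an LLM would parse the request into a task list."""
    return [
        Task(0, "image-to-text", {"image": "example.jpg"}),
        Task(1, "text-to-speech", {"text": "<result-0>"}, deps=[0]),
    ]


def select_model(task: Task) -> str:
    """Step 2 (stub): pick the model whose description starts with the task type."""
    for name, (description, _) in MODELS.items():
        if description.startswith(task.kind):
            return name
    raise LookupError(f"no model for {task.kind}")


def execute(tasks: List[Task]) -> Dict[int, str]:
    """Step 3: run tasks in order, substituting the results of dependencies."""
    results: Dict[int, str] = {}
    for task in tasks:  # assumes the plan is already in dependency order
        args = dict(task.args)
        for dep in task.deps:
            args = {k: v.replace(f"<result-{dep}>", results[dep])
                    for k, v in args.items()}
        _, run = MODELS[select_model(task)]
        results[task.id] = run(args)
    return results


def summarize(request: str, results: Dict[int, str]) -> str:
    """Step 4 (stub): an LLM would turn logs and results into a reply."""
    lines = [f"task {i}: {out}" for i, out in sorted(results.items())]
    return f"Request: {request}\n" + "\n".join(lines)


def run_pipeline(request: str) -> str:
    return summarize(request, execute(plan(request)))


print(run_pipeline("Describe example.jpg aloud"))
```

The point of the sketch is the data flow: the output of one expert model is passed to the next purely by substituting text into its arguments, which is one way to read the claim that language is the universal interface.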

As shown below.

Suppose we give it the following request:

Please generate a picture of a girl reading a book whose pose is the same as the boy's in example.jpg. Then describe the new image with your voice.

You can see how HuggingGPT decomposes it into 6 subtasks and selects a model to execute each one, producing the final result.
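A decomposition like this can be thought of as a small dependency graph. The sketch below is an illustration only: the six task names and the edges between them are assumptions chosen to match the request, not the plan the authors' system actually produced. It uses Python's standard `graphlib.TopologicalSorter` to group the subtasks into batches, where every task in a batch has all of its dependencies satisfied and could therefore run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical subtasks for the request above: id -> (task name, dependency ids).
# The names and edges are illustrative, not the authors' actual plan.
subtasks = {
    0: ("pose-detection", []),         # extract the boy's pose from example.jpg
    1: ("pose-to-image", [0]),         # draw a girl reading a book in that pose
    2: ("image-classification", [1]),
    3: ("object-detection", [1]),
    4: ("image-to-text", [1]),         # caption the new image
    5: ("text-to-speech", [4]),        # read the caption aloud
}


def parallel_batches(tasks):
    """Group task ids into batches whose dependencies are all already done."""
    sorter = TopologicalSorter({tid: deps for tid, (_, deps) in tasks.items()})
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


for batch in parallel_batches(subtasks):
    print([subtasks[tid][0] for tid in batch])
```

Under these assumed dependencies the three image-analysis tasks land in the same batch, while pose detection, image generation and speech synthesis each have to wait for an earlier result.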

How well does it actually work?

The authors ran experiments using gpt-3.5-turbo and text-davinci-003, two variants that are publicly accessible through the OpenAI API.

As shown in the figure below:

When there are resource dependencies between tasks, HuggingGPT correctly parses the concrete tasks from the user's abstract request and completes the image conversion.

In audio and video tasks, it also demonstrates the ability to organize cooperation between models: by executing two models in parallel and in series, respectively, it produced a video of "Astronauts Walking in Space" along with its dubbing.

In addition, it can integrate multiple input resources supplied by the user to perform simple reasoning, such as counting how many zebras there are across three pictures.

In one sentence: HuggingGPT performs well on complex tasks of various forms.

The project has been open sourced and is called "Jarvis"

HuggingGPT's paper has been released and the project is still under construction. Only part of the code has been open sourced so far, and it has already received 1.4k stars.

We noticed that the project's name is interesting: it is not called HuggingGPT, but JARVIS, after the AI butler in Iron Man.

Some people have noticed that its idea is very similar to Visual ChatGPT, which was released in March: HuggingGPT, the later of the two, mainly expands the scope of callable models, in both number and type.

Indeed, the two works share a common origin: Microsoft Research Asia (MSRA).

Specifically, the first author of Visual ChatGPT is MSRA senior researcher Wu Chenfei, and the corresponding author is MSRA chief researcher Duan Nan.

HuggingGPT has two co-first authors:

Shen Yongliang, who is from Zhejiang University and completed this work during his internship at MSRA;

Song Kaitao, a researcher at MSRA.

The corresponding author is Zhuang Yueting, a professor in the Department of Computer Science of Zhejiang University.

Finally, netizens were very excited about the arrival of this powerful new tool. Some said:

ChatGPT has become the commander-in-chief of all the AI that humans have created.

Others believe:

AGI may not be a single LLM, but multiple interrelated models connected by an "intermediary" LLM.

So, have we entered the era of "semi-AGI"?

Paper address: https://www.php.cn/link/1ecdec353419f6d7e30857d00d0312d1

Project link: https://www.php.cn/link/859555c74e9afd45ab771c615c1e49a6

Reference link: https://www.php.cn/link/62d2b7ba91f34c0ac08aa11c359a8d2c

The above is the detailed content of HuggingGPT is popular: a ChatGPT controls all AI models and automatically helps people complete AI tasks. Netizens: Leave your mouth to eat.. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

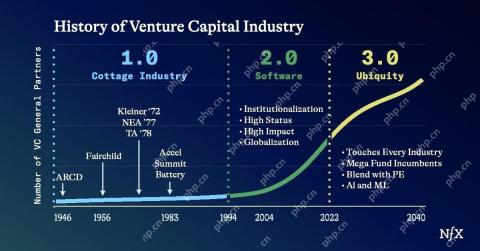

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

SublimeText3 English version

Recommended: Win version, supports code prompts!

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.