ChatGPT's security has raised concerns; its parent company has published an article saying it has always paid attention to safety

As large language models such as ChatGPT have grown in popularity, public anxiety about their safety has become increasingly apparent. After Italy announced a temporary ban on ChatGPT over privacy and security issues on March 31, Canada announced on April 4 that it was investigating ChatGPT's parent company, OpenAI, over data security issues. On April 5, OpenAI published an article on its official blog describing how it ensures the safety of AI, which can be read as an indirect response to these concerns.

More than 6 months spent testing GPT-4

The article says that after training of the latest GPT-4 model was complete, the team spent more than six months on internal testing to make the model safer before releasing it to the public. OpenAI believes that powerful artificial intelligence systems should undergo rigorous safety evaluations and that regulation is needed to ensure such evaluations take place. It says it actively works with governments to develop the best regulatory approach.

The article also says that because it is impossible to predict every risk during experimental testing, AI needs to keep learning from real-world use so that safer versions can be released iteratively. It adds that society needs some time to adapt to increasingly powerful AI.

Pay attention to child protection

The article states that protecting children is one of the key priorities of its safety work. Users of its AI tools must be 18 or older, or 13 or older under parental supervision.

OpenAI emphasizes that it does not allow its technology to be used to generate hateful, harassing, violent, or adult content. Compared with GPT-3.5, GPT-4 is 82% better at refusing requests for disallowed content. OpenAI also uses a monitoring system to detect possible abuse. For example, when users try to upload child sexual abuse material to its image tools, the system blocks the upload and reports it to the National Center for Missing and Exploited Children.

Respect privacy and improve factual accuracy

The article states that OpenAI's large language models are trained on an extensive corpus of text that includes publicly available content, licensed content, and content generated by human reviewers, and that this data is not used to sell services, advertise, or build profiles of users. OpenAI acknowledges that some of its training data, drawn from the public Internet, contains personal information. It says it works to remove personal information from the training data set where feasible, fine-tunes its models to reject requests for personal information, and responds to requests from individuals to delete their personal information from its systems.

On the accuracy of generated content, the article says that by drawing on user feedback that flags false outputs, OpenAI has made the content generated by GPT-4 40% more accurate than that of GPT-3.5.

The article argues that the practical way to address AI safety problems is to invest more time and resources in researching effective mitigation techniques and in testing for abuse in real-world usage scenarios, not only in experimental environments. More importantly, it says, improving safety and improving AI capabilities should go hand in hand. OpenAI says it is able to match its most capable AI models with its best safety protections, that it will create and deploy more capable models with increasing caution, and that it will continue to strengthen safety measures as AI systems evolve.

The above is the detailed content of The security of ChatGPT has caused concern. The parent company issued a document saying that it always pays attention to security.. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

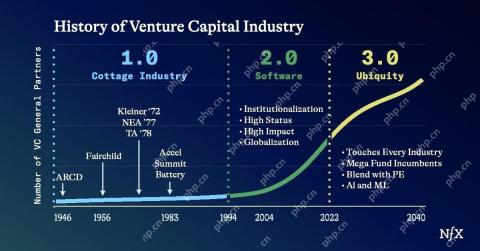

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Dreamweaver Mac version

Visual web development tools

WebStorm Mac version

Useful JavaScript development tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.