13 billion parameters, 8 A100 training, UC Berkeley releases dialogue model Koala

Since Meta released and open-sourced the LLaMA series of models, researchers from Stanford University, UC Berkeley, and other institutions have built derivative models on top of LLaMA, successively releasing Alpaca, Vicuna, and other "alpaca family" large models.

The alpaca family has become the new favorite of the open source community. With so many derivatives, the English names for members of the alpaca genus are almost used up, but large models can be named after other animals too.

Recently, Berkeley Artificial Intelligence Research (BAIR) at UC Berkeley released Koala, a dialogue model that can run on consumer-grade GPUs. Koala fine-tunes the LLaMA model using dialogue data collected from the web.

Project address: https://bair.berkeley.edu/blog/2023/04/03/koala/

Koala has launched an online test demo:

- Demo address: https://chat.lmsys.org/?model=koala-13b

- Open source address: https://github.com/young-geng/EasyLM

Like Vicuna, Koala fine-tunes the LLaMA model on dialogue data collected from the web, with a focus on publicly shared dialogues with closed-source large models such as ChatGPT.

The research team stated that Koala is implemented in EasyLM using JAX/Flax and was trained on a single Nvidia DGX server equipped with 8 A100 GPUs. Completing 2 epochs of training takes 6 hours. On public cloud computing platforms, such a training run typically costs less than $100.
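The cost claim can be checked with simple arithmetic. The sketch below uses only the figures quoted above (8 GPUs, 6 hours, a $100 ceiling) to derive the per-GPU hourly price at which the run would reach that ceiling. The `training_cost` helper and the $1.50 price passed to it are illustrative assumptions, not figures from the research team.

```python
# Figures quoted in the article.
NUM_GPUS = 8
TRAINING_HOURS = 6
COST_CEILING_USD = 100

# Total accelerator time consumed by the run.
gpu_hours = NUM_GPUS * TRAINING_HOURS

# Highest per-GPU hourly price that still keeps the run at or under the ceiling.
max_price_per_gpu_hour = COST_CEILING_USD / gpu_hours


def training_cost(price_per_gpu_hour: float) -> float:
    """Cost of the run at a given per-GPU hourly price (the price is an assumption)."""
    return gpu_hours * price_per_gpu_hour


print(f"GPU-hours: {gpu_hours}")
print(f"Break-even price: ${max_price_per_gpu_hour:.2f} per GPU-hour")
print(f"Cost at an assumed $1.50 per GPU-hour: ${training_cost(1.5):.2f}")
```

In other words, the run uses 48 GPU-hours, so the "less than $100" figure holds whenever an A100 can be rented for under roughly $2.08 per hour.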

The research team experimentally compared Koala with ChatGPT and Stanford University's Alpaca. The results showed that Koala-13B, with 13 billion parameters, can respond effectively to a variety of user queries. Its responses are generally preferred over Alpaca's and are comparable to ChatGPT's in more than half of the cases.

Koala's main significance is that it shows that a model small enough to run locally can approach the performance of a much larger model when it is trained on a higher-quality dataset. This suggests that the open source community should put more effort into curating high-quality datasets, as this may lead to safer, more factual, and more capable models than simply increasing the size of existing systems. From this perspective, Koala is a small but refined alternative to ChatGPT.

However, Koala is only a research prototype. It still has significant flaws in content, safety, and reliability, and should not be used for any purpose other than research.

Datasets and Training

The main hurdle in building a dialogue model is curating the training data. Large dialogue models such as ChatGPT, Bard, Bing Chat, and Claude all use proprietary datasets with extensive human annotation. To build Koala's training dataset, the research team collected and curated dialogue data from the web and from public datasets, some of which contain dialogues that users have shared publicly from their conversations with large language models such as ChatGPT.

Unlike other models that crawl as much web data as possible to maximize the size of the dataset, Koala focuses on collecting a small, high-quality dataset, including the question-and-answer portions of public datasets, human feedback (both positive and negative), and dialogues with existing language models. Specifically, Koala's training dataset includes the following parts:

ChatGPT distillation data:

- Publicly available ChatGPT conversation data (ShareGPT);

- Human ChatGPT Comparison Corpus (HC3), using both the human and the ChatGPT responses from the dataset.

Open source data:

- Open Instruction Generalist (OIG);

- Dataset used by the Stanford Alpaca model;

- Anthropic HH;

- OpenAI WebGPT;

- OpenAI Summarization.
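The two groups listed above can be written down as a small configuration. The sketch below is only an illustration: `KOALA_DATA_SOURCES` and `sources_for` are hypothetical names, not part of EasyLM. It also assumes that the Koala-Distill variant mentioned in the evaluation is trained on the distillation group alone and Koala-All on both groups, which the article itself does not spell out.

```python
from typing import Dict, List

# Dataset groups as listed in the article (names only, no download logic).
KOALA_DATA_SOURCES: Dict[str, List[str]] = {
    "chatgpt_distillation": ["ShareGPT", "HC3"],
    "open_source": [
        "OIG",
        "Stanford Alpaca",
        "Anthropic HH",
        "OpenAI WebGPT",
        "OpenAI Summarization",
    ],
}


def sources_for(variant: str) -> List[str]:
    """Return the dataset names for a model variant.

    Assumption: "distill" uses only the distillation group and "all" uses
    every group. This mapping is not stated in the article.
    """
    if variant == "distill":
        return list(KOALA_DATA_SOURCES["chatgpt_distillation"])
    if variant == "all":
        return [name for group in KOALA_DATA_SOURCES.values() for name in group]
    raise ValueError(f"unknown variant: {variant!r}")


print(sources_for("distill"))
print(len(sources_for("all")), "sources in total")
```

Keeping the groups separate makes it easy to train and compare variants on different subsets of the data.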

Experimentation and Evaluation

The study conducted a human evaluation comparing the generations of Koala-All with those of Koala-Distill, Alpaca, and ChatGPT. The results are shown in the figure below. Two different test sets were used: Stanford's Alpaca test set, which contains 180 test queries, and the Koala test set.

Overall, the Koala model is capable enough to demonstrate many features of LLMs, while being small enough to facilitate fine-tuning or use in situations where computing resources are limited. The research team hopes that the Koala model will become a useful platform for future academic research on large language models. Potential research directions include:
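A pairwise human evaluation of this kind is usually summarized as the share of comparisons in which one model's response is preferred, tied, or not preferred. The sketch below shows that tally. The `preference_shares` function and the labels are hypothetical, and the four ratings are made-up toy input used only to exercise the code. They are not results from the study.

```python
from collections import Counter
from typing import Dict, Sequence

LABELS = ("win", "tie", "loss")


def preference_shares(ratings: Sequence[str]) -> Dict[str, float]:
    """Fraction of comparisons rated win, tie, or loss for the model under test."""
    if not ratings:
        raise ValueError("no ratings to tally")
    counts = Counter(ratings)
    total = len(ratings)
    return {label: counts.get(label, 0) / total for label in LABELS}


# Toy ratings for illustration only (NOT data from the Koala evaluation).
toy_ratings = ["win", "tie", "loss", "win"]
shares = preference_shares(toy_ratings)

# "Comparable or better" combines the win and tie shares.
comparable_or_better = shares["win"] + shares["tie"]
print(shares, comparable_or_better)
```

A statement such as "comparable in more than half of the cases" corresponds to the combined win and tie share exceeding 0.5.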

- Safety and alignment: Koala allows further research on language model safety and better alignment with human intent.

- Model bias: Koala enables us to better understand bias in large language models, delve into quality issues in dialogue datasets, and ultimately help improve the performance of large language models.

- Understanding large language models: Because Koala can run on relatively cheap consumer-grade GPUs and perform a variety of tasks, it allows us to better examine and understand the internal structure of dialogue language models, making them more interpretable.

The above is the detailed content of 13 billion parameters, 8 A100 training, UC Berkeley releases dialogue model Koala. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

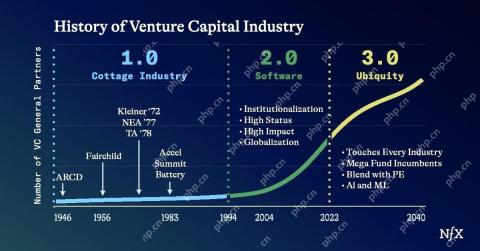

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

WebStorm Mac version

Useful JavaScript development tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Dreamweaver Mac version

Visual web development tools