Interview with Sam Altman: GPT-4 didn't surprise me much, but ChatGPT surprised me

ChatGPT and GPT-4 are undoubtedly the biggest “hot hits” in the artificial intelligence industry at the beginning of 2023.

· I have no idea what history books will say about various versions of GPT. But if I have to pick out a key node that I have seen so far, I think it is still ChatGPT. GPT-4 didn’t surprise me too much, but ChatGPT made me a little bit overjoyed.

· To a certain extent, the GPT-4 system enhances human intelligence and can be applied to a variety of scenarios.

· The ease of use of the system itself is sometimes more important than the capabilities of the underlying model.

· GPT-4 is not yet conscious and cannot replace good programmers. A truly conscious artificial intelligence should be able to tell others that it is conscious, express its own pain and other emotions, understand its own situation, have its own memory, and interact with others.

· Artificial intelligence will bring huge improvements to the quality of human life. We can cure diseases, create wealth, increase resources, and make humans happy... It may seem that humans will no longer need to work, but human beings also need social status, need passion, need to create, and need to feel their own value. Therefore, once the era of artificial intelligence arrives, what we need to do is find new jobs and lifestyles, and embrace the huge improvements brought by new technologies.

Sam Altman, one of the founders of OpenAI, is a former president of Y Combinator and currently the CEO of OpenAI, an American artificial intelligence laboratory. He led OpenAI in developing the chatbot ChatGPT and has been called the "Father of ChatGPT" by the media.

(L refers to Lex Fridman, S refers to Sam Altman)

If the history of AI is written on Wikipedia, ChatGPT is still the most critical node

Q1

L: What is GPT-4? How does it work? What's the most amazing thing about it?

S: Looking back now, it is still a very rudimentary artificial intelligence system. Its work efficiency is low, there are some minor problems, and many things are not completed satisfactorily. Still, it points to a path forward for truly important technologies in the future (even if the process takes decades).

Q2

L: 50 years later, when people look back at early intelligent systems, will GPT-4 be a truly huge leap forward? Is this a pivotal moment? When people write the history of artificial intelligence on Wikipedia, which version of GPT will they write about?

S: This process of progress is ongoing, and it is difficult to pinpoint a historic moment. I have no idea what the history books will say about the various versions of GPT. But if I had to pick out one key node that I have seen so far, I think it is ChatGPT. What is really important about ChatGPT is not its underlying model itself, but how to utilize the underlying model, which involves reinforcement learning based on human feedback (RLHF) and its interface.

Q3

L: How does RLHF make ChatGPT have such amazing performance?

S: We trained these models on large amounts of text data. In the process, they learned something about underlying representations and became able to do some amazing things. But if we use the base model right after training, it is not very easy to use, even though it performs well on test sets. To address this, we implemented RLHF, which brings in human feedback. The simplest version of RLHF is: show two versions of the output, ask human raters which one they prefer, and then feed that information back to the model through reinforcement learning. RLHF is surprisingly effective. We can make the model more practical with very little data. We use this technique to align the model with human needs and make it more likely to give correct answers that are helpful to people. Regardless of the underlying model's capabilities, the ease of use of the system is critical.
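The pairwise comparison step described above can be sketched in a few lines. This is only an illustration of learning from "which of two outputs do raters prefer", not OpenAI's implementation: the Bradley-Terry style loss, the linear reward model, the hand-made feature vectors, and the names `preference_loss` and `train_reward_model` are all assumptions made for the example. The reinforcement learning step that then updates the language model is left out.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-probability that the chosen output beats the rejected one,
    assuming P(chosen preferred) = sigmoid(reward_chosen - reward_rejected)."""
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def train_reward_model(pairs, lr=0.1, steps=200):
    """Fit a toy linear reward model (hypothetical) on preference pairs.

    pairs: list of (features_of_preferred_output, features_of_other_output).
    Returns the learned weight vector.
    """
    dim = len(pairs[0][0])
    weights = [0.0] * dim
    for _ in range(steps):
        for chosen, rejected in pairs:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = sum(w * d for w, d in zip(weights, diff))
            # Gradient of -log(sigmoid(margin)) with respect to the weights.
            grad_scale = -(1.0 - 1.0 / (1.0 + math.exp(-margin)))
            weights = [w - lr * grad_scale * d for w, d in zip(weights, diff)]
    return weights


if __name__ == "__main__":
    # Made-up features: [helpfulness signal, rambling signal].
    rater_choices = [
        ([1.0, 0.0], [0.0, 1.0]),
        ([0.8, 0.1], [0.2, 0.9]),
    ]
    w = train_reward_model(rater_choices)
    print("learned weights:", w)
    print("loss on first pair:", preference_loss(
        sum(a * b for a, b in zip(w, rater_choices[0][0])),
        sum(a * b for a, b in zip(w, rater_choices[0][1])),
    ))
```

In a full RLHF pipeline, a reward signal learned this way would be used to score new model outputs during reinforcement learning; the toy model here only shows how a handful of comparisons can already shape that signal.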

Q4

L: How should we understand the fact that, with RLHF, much less human supervision is needed?

S: To be fair, our research on this part is still in its early stages compared to the original scientific study of creating pre-trained large models, but it does require less data.

L: Research on human guidance is very interesting and important. We use this type of research to understand how to make systems more useful, smarter, ethical and consistent with human intent. The process of introducing human feedback is also important.

Q5

L: How large is the pre-training data set?

S: We have spent a lot of effort working with our partners to capture these pre-training data from various open source databases on the Internet and build a huge data set. In fact, apart from Reddit, newspapers and other media, there is a lot of content in the world that most people don’t expect. Cleaning and filtering data is more difficult than collecting it.

Q6

L: Building ChatGPT requires solving many problems, such as: design of model architecture scale, data selection, RLHF. What’s so magical about these parts coming together?

S: GPT-4 is the version we actually rolled out in the final ChatGPT product. It is hard to say how many pieces had to come together to create it, and it was a lot of work. At every stage, we need to come up with new ideas or execute existing ones well.

L: Some technical steps in GPT-4 are relatively mature, such as predicting the performance the model will reach before training is complete. How can we know the characteristics of a fully trained system from only a small amount of training? It's like looking at a one-year-old baby and knowing how he will score on the college entrance examination.

S: This achievement is surprising. It involves many scientific factors behind it and finally reaches the level of intelligence expected by humans. This implementation process is much more scientific than I could imagine. As with all new branches of science, we will find new things that don't fit the data and come up with better explanations for it. This is just how science develops. Although we have posted some information about GPT-4 on social media, we should still be in awe of its magic.
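The interview does not say how this prediction is made. One common way to illustrate the idea is to fit a power law to the results of a few small training runs and extrapolate to a larger one. The sketch below does exactly that under loud assumptions: the pure power-law form `loss = a * compute ** (-b)`, the function names, and the synthetic numbers are all hypothetical and are not taken from OpenAI.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss = a * compute ** (-b) in log-log space.

    Returns (a, b). The power-law form is an assumption of this sketch.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def predict_loss(a, b, compute):
    """Extrapolate the fitted curve to a new compute budget."""
    return a * compute ** (-b)


if __name__ == "__main__":
    # Synthetic "small runs": compute budgets and the final loss of each run.
    small_compute = [1e1, 1e2, 1e3]
    small_loss = [2.0 * c ** -0.1 for c in small_compute]

    a, b = fit_power_law(small_compute, small_loss)
    print("fitted a, b:", a, b)
    print("predicted loss at 1e6:", predict_loss(a, b, 1e6))
```

Real training curves are noisier and may need extra terms, so an extrapolation like this is a rough guide at best.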

GPT-4 systematically enhances human intelligence

Q7

L: Language models like GPT-4 can learn from or reference materials from various fields. Are the researchers and engineers within OpenAI gaining a deeper understanding of the wonders of language models?

S: We can evaluate the model in various ways. After training the model, we can test it on various tasks. We have also opened up the testing process of the model on GitHub. This is helpful. The important thing is that we spend a lot of manpower, financial resources, and time to analyze the practicality of the model, how the model can bring happiness and help to people, how to create a better world, and generate new products and services. Of course, we still don’t fully understand all the internal processes by which the model accomplishes its tasks, but we will continue to work toward this.

Q8

L: GPT-4 compresses the vast amount of information on the Internet into "relatively few" parameters in the black box model, forming human intelligence. What kind of leap does it take from fact to wisdom?

S: We use the model as a database to absorb human knowledge, rather than using it as an inference engine, and the system's processing power is magically improved. In this way, the system can actually achieve a certain degree of reasoning, although some scholars may think this statement is not rigorous. To some extent, the GPT-4 system enhances human intelligence and can be applied to a variety of scenarios.

L: ChatGPT seems to "possess" intelligence in its continuous interaction with humans. It admits its wrong assumptions and denies inappropriate requests in this dialogue.

GPT-4 is not conscious and will not replace good programmers

Q9

L: Some people enjoy programming with GPT, while others fear that GPT will take their jobs. What do you think of this phenomenon?

S: There are some critical programming tasks that still require a human creative element. GPT-like models will automate some programming tasks, but they still cannot replace a good programmer. Some programmers will feel anxious about the uncertainty of the future, but more people will feel that it improves their work efficiency.

Q10

L: Eliezer Yudkowsky has warned that artificial intelligence could harm humans and has given some examples. In his view, it is almost impossible for us to keep a superintelligent AI “aligned” with human intent. Do you agree with him?

S: It's possible. If we don't talk about this possibility, we won't put enough effort into developing new techniques to solve such problems. Such problems exist in many emerging fields, and people are now concerned about the capabilities and safety of artificial intelligence. Eliezer's article is well written, but some of his work is difficult to follow, there are some logical issues, and I don't entirely support his views. A lot of work on AI safety was done long before people believed in the power of deep learning and large language models, and I don’t think it has been updated enough since. Theory is indeed important, but we need to keep learning from changes in the trajectory of the technology, and this cycle needs to be tighter. I think now is a good time to look at AI safety and explore the “alignment” of these new tools and technologies with human intent.

Q11

L: Artificial intelligence technology is developing at a rapid pace, and some people say that we have now entered the stage of "take-off" of artificial intelligence. When someone actually builds general artificial intelligence, how will we know about this change?

S: GPT-4 didn’t surprise me too much, but ChatGPT slightly surprised me. As impressive as GPT-4 is, it is not yet AGI. The true definition of AGI is increasingly important, but I think it’s still very far away.

Q12

L: Do you think GPT-4 is conscious?

S: No, I don’t think it’s conscious yet.

L: I think a truly conscious artificial intelligence should be able to tell others that it is conscious, express its own pain and other emotions, understand its own situation, have its own memory, and be able to interact with people. And I think these abilities are interface abilities, not underlying knowledge.

S: Our chief scientist at OpenAI, Ilya Sutskever, once discussed with me how to know whether a model is conscious. He suggested carefully training a model on a data set that never mentions the subjective experience of consciousness or any related concept, then describing this subjective experience to the model and seeing whether it understands what we are conveying.

Artificial general intelligence: how far have we come?

Q13

L: Chomsky and others are critical of the ability of “large language models” to achieve general artificial intelligence. What do you think of it? Are large language models the right path to general artificial intelligence?

S: I think large language models are one part of the road to AGI, and we also need other very important parts.

L: Do you think an intelligent agent needs a "body" to experience the world?

S: I'm cautious about this. But in my opinion, a system that cannot be well integrated into known scientific knowledge cannot be called "superintelligence". It is like inventing new basic science. In order to achieve "super intelligence", we need to continue to expand the paradigm of the GPT class, which still has a long way to go.

L: I think that by changing the data used to train GPT, various huge scientific breakthroughs can already be achieved.

Q14

L: As the prompt chain gets longer and longer, these interactions themselves will become part of human society and serve as a foundation for one another. How do you view this phenomenon?

S: What excites me more than the fact that the GPT system can complete certain tasks is that humans are part of this tool's feedback loop. We can learn a great deal from the trajectories of multiple rounds of interaction. AI will expand and amplify human intentions and capabilities, which will also shape how people use it. We may never build AGI, but making humans better is a huge victory in itself.

The above is the detailed content of Interview with Sam Altman: GPT-4 didn't surprise me much, but ChatGPT surprised me. For more information, please follow other related articles on the PHP Chinese website!

MarkItDown MCP Can Convert Any Document into Markdowns!Apr 27, 2025 am 09:47 AM

MarkItDown MCP Can Convert Any Document into Markdowns!Apr 27, 2025 am 09:47 AMHandling documents is no longer just about opening files in your AI projects, it’s about transforming chaos into clarity. Docs such as PDFs, PowerPoints, and Word flood our workflows in every shape and size. Retrieving structured

How to Use Google ADK for Building Agents? - Analytics VidhyaApr 27, 2025 am 09:42 AM

How to Use Google ADK for Building Agents? - Analytics VidhyaApr 27, 2025 am 09:42 AMHarness the power of Google's Agent Development Kit (ADK) to create intelligent agents with real-world capabilities! This tutorial guides you through building conversational agents using ADK, supporting various language models like Gemini and GPT. W

Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AM

Use of SLM over LLM for Effective Problem Solving - Analytics VidhyaApr 27, 2025 am 09:27 AMsummary: Small Language Model (SLM) is designed for efficiency. They are better than the Large Language Model (LLM) in resource-deficient, real-time and privacy-sensitive environments. Best for focus-based tasks, especially where domain specificity, controllability, and interpretability are more important than general knowledge or creativity. SLMs are not a replacement for LLMs, but they are ideal when precision, speed and cost-effectiveness are critical. Technology helps us achieve more with fewer resources. It has always been a promoter, not a driver. From the steam engine era to the Internet bubble era, the power of technology lies in the extent to which it helps us solve problems. Artificial intelligence (AI) and more recently generative AI are no exception

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AM

How to Use Google Gemini Models for Computer Vision Tasks? - Analytics VidhyaApr 27, 2025 am 09:26 AMHarness the Power of Google Gemini for Computer Vision: A Comprehensive Guide Google Gemini, a leading AI chatbot, extends its capabilities beyond conversation to encompass powerful computer vision functionalities. This guide details how to utilize

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AM

Gemini 2.0 Flash vs o4-mini: Can Google Do Better Than OpenAI?Apr 27, 2025 am 09:20 AMThe AI landscape of 2025 is electrifying with the arrival of Google's Gemini 2.0 Flash and OpenAI's o4-mini. These cutting-edge models, launched weeks apart, boast comparable advanced features and impressive benchmark scores. This in-depth compariso

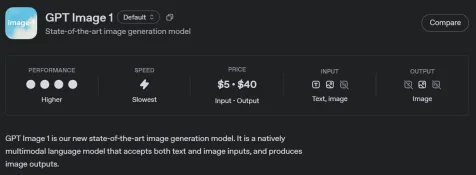

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AM

How to Generate and Edit Images Using OpenAI gpt-image-1 APIApr 27, 2025 am 09:16 AMOpenAI's latest multimodal model, gpt-image-1, revolutionizes image generation within ChatGPT and via its API. This article explores its features, usage, and applications. Table of Contents Understanding gpt-image-1 Key Capabilities of gpt-image-1

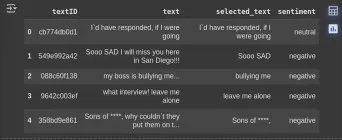

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AM

How to Perform Data Preprocessing Using Cleanlab? - Analytics VidhyaApr 27, 2025 am 09:15 AMData preprocessing is paramount for successful machine learning, yet real-world datasets often contain errors. Cleanlab offers an efficient solution, using its Python package to implement confident learning algorithms. It automates the detection and

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AM

The AI Skills Gap Is Slowing Down Supply ChainsApr 26, 2025 am 11:13 AMThe term "AI-ready workforce" is frequently used, but what does it truly mean in the supply chain industry? According to Abe Eshkenazi, CEO of the Association for Supply Chain Management (ASCM), it signifies professionals capable of critic

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment