
It only takes a few seconds to turn an ID photo into a digital human: Microsoft has achieved the first high-quality 3D generation with a diffusion model, and you can change your look with a single sentence


With a 2D ID photo, you can design a 3D game avatar in just a few seconds!

This is the latest achievement of diffusion models in 3D. For example, a single old photo of the French sculptor Rodin is enough to "transform" him into a game character in minutes:

The paper has been accepted to CVPR 2023.

Let's take a look.

Training the diffusion model directly on 3D data

The name of this 3D generative diffusion model, RODIN, is inspired by the French sculptor Auguste Rodin. Previously, models that generate 3D avatars from 2D images were usually obtained by training generative adversarial networks (GANs) or variational autoencoders (VAEs) on 2D data, but the results were often unsatisfactory. The researchers attribute this to a fundamental underdetermined (ill-posed) problem in these methods: because a single-view image is geometrically ambiguous, it is hard to learn a plausible distribution of high-quality 3D avatars from 2D data alone, however much of it there is, and generation quality suffers. They therefore tried training the diffusion model directly on 3D data, which meant solving three main problems:

- First, how to use a diffusion model to generate the multi-view images of a 3D model. There was previously no practical method or precedent for applying diffusion models to 3D data.

- Second, high-quality, large-scale 3D avatar datasets are hard to obtain and carry privacy and copyright risks, while multi-view consistency cannot be guaranteed for 3D images published on the Internet.

- Finally, directly extending a 2D diffusion model to 3D generation would require enormous memory, storage, and compute.
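To put the third problem in numbers, here is a back-of-the-envelope count. It assumes that "directly extending" 2D diffusion means denoising a dense voxel grid, and compares that with a tri-plane (three orthogonal 2D feature planes), the representation RODIN uses instead. Resolution 256 matches the 256×256 tri-plane resolution the researchers report; the channel count is left as a parameter, and the function name is hypothetical.

```python
def feature_counts(resolution: int, channels: int = 1) -> dict:
    """Feature values stored by a dense voxel grid vs. an axis-aligned tri-plane."""
    voxel = resolution ** 3 * channels         # one feature vector per voxel
    triplane = 3 * resolution ** 2 * channels  # three orthogonal N x N planes
    # ratio is rounded down; it equals resolution / 3 whatever the channel count
    return {"voxel": voxel, "triplane": triplane, "ratio": voxel // triplane}


print(feature_counts(256))
# {'voxel': 16777216, 'triplane': 196608, 'ratio': 85}
```

At 256 resolution the dense grid holds roughly 85 times as many values per channel, and the gap grows linearly with resolution.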

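The researchers address these problems with three key designs. First, 3D-aware convolution preserves the intrinsic correlations among the three feature planes of the tri-plane representation after dimensionality reduction.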

The 2D convolutional neural networks (CNNs) used in conventional 2D diffusion do not handle tri-plane feature maps well. Rather than simply generating three independent 2D feature planes, 3D-aware convolution takes the representation's inherent 3D nature into account: a 2D feature on one of the three planes is essentially the projection of a line in 3D space, so it is related to the features along that line's projections on the other two planes.

To enable this cross-plane communication, the researchers build these 3D correlations into the convolution, which lets the network efficiently synthesize 3D details while operating in 2D.
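The cross-plane idea can be sketched in NumPy. This is a minimal illustration under stated assumptions, not RODIN's actual layer: the three planes are assumed to be N×N×C arrays laid out as `f_xy[x, y]`, `f_xz[x, z]` and `f_yz[y, z]`; features from the other two planes are mean-pooled along the axis of the 3D line each pixel represents and appended as extra channels; and a 1×1 convolution (a per-pixel matrix multiply) stands in for the real convolution kernel. The function names are hypothetical.

```python
import numpy as np


def aware_features(f_xy, f_xz, f_yz):
    """Append to each plane the other two planes' features pooled along the
    3D line each pixel represents (hypothetical layout: f_xy[x, y, c],
    f_xz[x, z, c], f_yz[y, z, c], all shaped (N, N, C))."""
    def row(v):  # (N, C) indexed by the plane's first axis -> (N, 1, C)
        return v[:, None, :]

    def col(v):  # (N, C) indexed by the plane's second axis -> (1, N, C)
        return v[None, :, :]

    shape = f_xy.shape
    # Pixel (x, y) on the xy plane is the projection of the line along z.
    xy = np.concatenate([f_xy,
                         np.broadcast_to(row(f_xz.mean(axis=1)), shape),   # pool z, keep x
                         np.broadcast_to(col(f_yz.mean(axis=1)), shape)],  # pool z, keep y
                        axis=-1)
    # Pixel (x, z) on the xz plane is the projection of the line along y.
    xz = np.concatenate([f_xz,
                         np.broadcast_to(row(f_xy.mean(axis=1)), shape),   # pool y, keep x
                         np.broadcast_to(col(f_yz.mean(axis=0)), shape)],  # pool y, keep z
                        axis=-1)
    # Pixel (y, z) on the yz plane is the projection of the line along x.
    yz = np.concatenate([f_yz,
                         np.broadcast_to(row(f_xy.mean(axis=0)), shape),   # pool x, keep y
                         np.broadcast_to(col(f_xz.mean(axis=0)), shape)],  # pool x, keep z
                        axis=-1)
    return xy, xz, yz


def conv1x1(features, weight):
    """1x1 convolution: mix channels independently at every pixel."""
    return features @ weight  # (N, N, 3C) @ (3C, C_out) -> (N, N, C_out)


rng = np.random.default_rng(0)
n, c = 8, 4
planes = [rng.standard_normal((n, n, c)) for _ in range(3)]
weight = rng.standard_normal((3 * c, c))  # shared across planes for brevity (assumption)
outputs = [conv1x1(p, weight) for p in aware_features(*planes)]
print([o.shape for o in outputs])  # [(8, 8, 4), (8, 8, 4), (8, 8, 4)]
```

Because the pooled channels are broadcast across the plane, every output pixel can see information from the whole corresponding line in the other two planes, even though the convolution itself stays 2D.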

Second, latent conditioning orchestrates tri-plane 3D representation generation.

The researchers use a latent vector to orchestrate feature generation so that it stays globally consistent across the entire 3D volume, which yields higher-quality avatars and enables semantic editing.

In addition, an image encoder is trained on the images in the training dataset to extract semantic latent vectors, which serve as conditional inputs to the diffusion model.

The overall generative network can thus be viewed as an autoencoder, with the diffusion model acting as the decoder of the latent vector. For semantic editability, the researchers adopt a frozen CLIP image encoder, which shares its latent space with text prompts.
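The "diffusion model as decoder" view can be illustrated with a toy training step: an encoder maps an image to a latent `z`, and a denoiser conditioned on `z` learns to predict the noise added to the clean tri-plane features. Everything here is an assumption for illustration: the linear DDPM-style noise schedule, the single-layer `encode`, and the FiLM-style scale/shift conditioning in `denoise` are hypothetical stand-ins for RODIN's much larger networks.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed linear noise schedule
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance left at step t


def q_sample(x0, t, noise):
    """Forward diffusion: noise the clean features x0 directly to step t."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise


def encode(image, w_enc):
    """Hypothetical image encoder: flatten, project, squash to a latent z."""
    return np.tanh(image.reshape(-1) @ w_enc)


def denoise(x_t, t, z, w_scale, w_shift):
    """Hypothetical noise predictor conditioned on z through a per-channel
    scale and shift (FiLM-style). The toy ignores t; a real model embeds it."""
    scale = 1.0 + z @ w_scale   # shape (C,)
    shift = z @ w_shift         # shape (C,)
    return x_t * scale + shift  # broadcast over the (H, W, C) feature map


def training_loss(x0, image, params, rng):
    """Noise-prediction loss for one (tri-plane features, image) pair."""
    z = encode(image, params["enc"])
    t = int(rng.integers(T))
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, noise)
    eps_hat = denoise(x_t, t, z, params["scale"], params["shift"])
    return float(np.mean((eps_hat - noise) ** 2))


rng = np.random.default_rng(0)
x0 = rng.standard_normal((16, 16, 8))     # stand-in for rolled-out tri-plane features
image = rng.standard_normal((32, 32, 3))  # stand-in for a training image
latent_dim = 64
params = {
    "enc": 0.01 * rng.standard_normal((32 * 32 * 3, latent_dim)),
    "scale": 0.01 * rng.standard_normal((latent_dim, 8)),
    "shift": 0.01 * rng.standard_normal((latent_dim, 8)),
}
print(training_loss(x0, image, params, rng))
```

Since the denoiser sees the image only through `z`, training pushes `z` to encode whatever helps reconstruct the 3D features, which is the sense in which the whole pipeline behaves like an autoencoder.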

Third, hierarchical synthesis generates high-fidelity three-dimensional details.

The researchers first use the diffusion model to generate a low-resolution tri-plane (64×64), then produce a high-resolution tri-plane (256×256) through diffusion upsampling.

In this way, the base diffusion model focuses on generating the overall 3D structure, while the subsequent upsampling model focuses on generating details.
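The hierarchical pipeline can be sketched as a base model producing a 64×64 feature map and an upsampling model that sees the low-resolution result alongside its own noisy 256×256 input. Conditioning an upsampler on an interpolated copy of the low-resolution output is a common cascaded-diffusion design; that RODIN wires it exactly this way, the nearest-neighbour interpolation, and the channel count of 8 are all assumptions. Only the input assembly is shown, not the denoising network, and the function names are hypothetical.

```python
import numpy as np


def upsample_nearest(x, factor):
    """Nearest-neighbour upsampling of an (H, W, C) feature map."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)


def upsampler_input(noisy_hi, low_res):
    """Hypothetical input to the upsampling diffusion model: the noisy
    high-resolution sample concatenated channel-wise with the enlarged
    low-resolution result from the base model."""
    factor = noisy_hi.shape[0] // low_res.shape[0]
    return np.concatenate([noisy_hi, upsample_nearest(low_res, factor)], axis=-1)


rng = np.random.default_rng(0)
low = rng.standard_normal((64, 64, 8))      # base model output at 64x64
noisy = rng.standard_normal((256, 256, 8))  # high-res sample at some diffusion step
print(upsampler_input(noisy, low).shape)    # (256, 256, 16)
```

Splitting the work this way means the expensive 256×256 model only has to add detail to a structure that is already fixed at low resolution.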

Generating large amounts of randomized data with Blender

For the training dataset, the researchers used the open-source 3D software Blender to randomly combine virtual 3D characters hand-made by artists with hair, clothing, expressions, and accessories randomly sampled from a large asset pool. This produced 100,000 synthetic individuals, and 300 multi-view images at 256×256 resolution were rendered for each one.
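The randomized composition can be sketched as sampling one value per attribute plus a set of camera viewpoints for each synthetic individual. The asset lists, the number of base characters, and the camera ranges below are hypothetical placeholders, and the sketch stops at producing specifications: the actual Blender scene assembly and rendering are not shown.

```python
import random
from dataclasses import dataclass

# Hypothetical asset pools; the real libraries are larger and not listed in the text.
HAIR = ["short", "long", "curly", "ponytail", "bald"]
CLOTHES = ["t-shirt", "hoodie", "suit", "dress", "leather jacket"]
EXPRESSIONS = ["neutral", "smile", "surprise", "frown"]
ACCESSORIES = ["none", "glasses", "hat", "earrings", "headphones"]


@dataclass(frozen=True)
class AvatarSpec:
    base_character: int  # index of an artist-made base character
    hair: str
    clothes: str
    expression: str
    accessory: str


def sample_avatar(rng: random.Random, num_base_characters: int) -> AvatarSpec:
    """Randomly combine a base character with one asset from each pool."""
    return AvatarSpec(
        base_character=rng.randrange(num_base_characters),
        hair=rng.choice(HAIR),
        clothes=rng.choice(CLOTHES),
        expression=rng.choice(EXPRESSIONS),
        accessory=rng.choice(ACCESSORIES),
    )


def sample_camera(rng: random.Random) -> tuple[float, float]:
    """Hypothetical viewpoint range: (yaw, pitch) in degrees around the head."""
    return (rng.uniform(-90.0, 90.0), rng.uniform(-30.0, 30.0))


def build_dataset(num_individuals, views_per_individual, num_base_characters, seed=0):
    """Return (spec, camera list) pairs that a rendering script could consume."""
    rng = random.Random(seed)  # seeded so the synthetic dataset is reproducible
    return [
        (sample_avatar(rng, num_base_characters),
         [sample_camera(rng) for _ in range(views_per_individual)])
        for _ in range(num_individuals)
    ]


# Small run; the scale in the text would be build_dataset(100_000, 300, ...).
dataset = build_dataset(num_individuals=3, views_per_individual=2, num_base_characters=10)
for spec, cameras in dataset:
    print(spec, cameras)
```

At the scale reported in the text, 100,000 individuals × 300 views comes to 30 million rendered 256×256 images.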

For text-to-3D-avatar generation, the researchers used the portrait subset of the LAION-400M dataset to train a mapping from the input modality to the latent space of the 3D diffusion model, so that RODIN can ultimately create a realistic 3D avatar from just a single 2D image or a text description.

△Generating an avatar from a given photo
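The text does not say what form the modality-to-latent mapping takes. As a plainly swapped-in stand-in, the sketch below fits a linear map from paired input embeddings (for example, CLIP text embeddings) to diffusion latents with ridge regression. The 512- and 64-dimensional sizes, the synthetic data, and the function name are assumptions; a real system would more likely use a learned nonlinear network.

```python
import numpy as np


def fit_linear_map(src, dst, ridge=1e-3):
    """Ridge regression: W minimising ||src @ W - dst||^2 + ridge * ||W||^2."""
    d = src.shape[1]
    # Normal equations (src^T src + ridge * I) W = src^T dst, solved without an explicit inverse.
    return np.linalg.solve(src.T @ src + ridge * np.eye(d), src.T @ dst)


rng = np.random.default_rng(0)
text_emb = rng.standard_normal((1000, 512))  # stand-in for CLIP text embeddings
true_map = rng.standard_normal((512, 64)) / np.sqrt(512)
latents = text_emb @ true_map + 0.01 * rng.standard_normal((1000, 64))  # synthetic targets
w = fit_linear_map(text_emb, latents)
print(w.shape)  # (512, 64)

new_latent = rng.standard_normal((1, 512)) @ w  # map an unseen embedding into the latent space
```

Once such a mapping exists, a text prompt and a photo can both be turned into the same kind of latent vector, which the diffusion decoder then turns into an avatar.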

Not only can you change an avatar's look with a single sentence, such as "a man with curly hair and a beard wearing a black leather jacket":

Even the gender can be changed at will, as with "a woman in red clothes with an Afro hairstyle" (tongue in cheek):

The researchers also provide an application demo; creating your own avatar takes only a few clicks:

△Editing a 3D portrait with text

For more results, see the project page.

For now, RODIN generates only 3D half-length portraits, partly because it is trained mainly on face data, but demand for 3D content generation goes well beyond human faces.

Next, the team plans to try using RODIN to create more 3D scenes, including flowers, trees, buildings, cars, and home furnishings, working toward the ultimate goal of "generating 3D everything with one model".

Paper: https://arxiv.org/abs/2212.06135

Project page: https://3d-avatar-diffusion.microsoft.com

The above is the detailed content of It only takes a few seconds to convert an ID photo into a digital person. Microsoft has achieved the first high-quality generation of 3D diffusion models, and you can change your appearance and appearance in just one sentence.. For more information, please follow other related articles on the PHP Chinese website!

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM

从VAE到扩散模型:一文解读以文生图新范式Apr 08, 2023 pm 08:41 PM1 前言在发布DALL·E的15个月后,OpenAI在今年春天带了续作DALL·E 2,以其更加惊艳的效果和丰富的可玩性迅速占领了各大AI社区的头条。近年来,随着生成对抗网络(GAN)、变分自编码器(VAE)、扩散模型(Diffusion models)的出现,深度学习已向世人展现其强大的图像生成能力;加上GPT-3、BERT等NLP模型的成功,人类正逐步打破文本和图像的信息界限。在DALL·E 2中,只需输入简单的文本(prompt),它就可以生成多张1024*1024的高清图像。这些图像甚至

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM

普林斯顿陈丹琦:如何让「大模型」变小Apr 08, 2023 pm 04:01 PM“Making large models smaller”这是很多语言模型研究人员的学术追求,针对大模型昂贵的环境和训练成本,陈丹琦在智源大会青源学术年会上做了题为“Making large models smaller”的特邀报告。报告中重点提及了基于记忆增强的TRIME算法和基于粗细粒度联合剪枝和逐层蒸馏的CofiPruning算法。前者能够在不改变模型结构的基础上兼顾语言模型困惑度和检索速度方面的优势;而后者可以在保证下游任务准确度的同时实现更快的处理速度,具有更小的模型结构。陈丹琦 普

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PM

找不到中文语音预训练模型?中文版 Wav2vec 2.0和HuBERT来了Apr 08, 2023 pm 06:21 PMWav2vec 2.0 [1],HuBERT [2] 和 WavLM [3] 等语音预训练模型,通过在多达上万小时的无标注语音数据(如 Libri-light )上的自监督学习,显著提升了自动语音识别(Automatic Speech Recognition, ASR),语音合成(Text-to-speech, TTS)和语音转换(Voice Conversation,VC)等语音下游任务的性能。然而这些模型都没有公开的中文版本,不便于应用在中文语音研究场景。 WenetSpeech [4] 是

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM

解锁CNN和Transformer正确结合方法,字节跳动提出有效的下一代视觉TransformerApr 09, 2023 pm 02:01 PM由于复杂的注意力机制和模型设计,大多数现有的视觉 Transformer(ViT)在现实的工业部署场景中不能像卷积神经网络(CNN)那样高效地执行。这就带来了一个问题:视觉神经网络能否像 CNN 一样快速推断并像 ViT 一样强大?近期一些工作试图设计 CNN-Transformer 混合架构来解决这个问题,但这些工作的整体性能远不能令人满意。基于此,来自字节跳动的研究者提出了一种能在现实工业场景中有效部署的下一代视觉 Transformer——Next-ViT。从延迟 / 准确性权衡的角度看,

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM

Stable Diffusion XL 现已推出—有什么新功能,你知道吗?Apr 07, 2023 pm 11:21 PM3月27号,Stability AI的创始人兼首席执行官Emad Mostaque在一条推文中宣布,Stable Diffusion XL 现已可用于公开测试。以下是一些事项:“XL”不是这个新的AI模型的官方名称。一旦发布稳定性AI公司的官方公告,名称将会更改。与先前版本相比,图像质量有所提高与先前版本相比,图像生成速度大大加快。示例图像让我们看看新旧AI模型在结果上的差异。Prompt: Luxury sports car with aerodynamic curves, shot in a

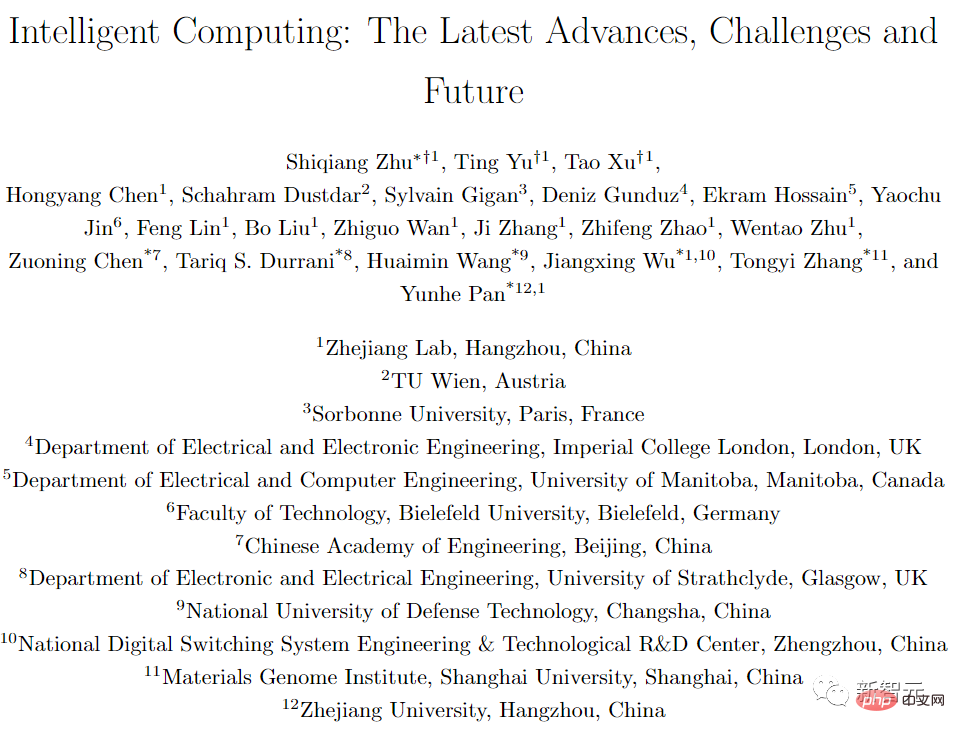

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM

五年后AI所需算力超100万倍!十二家机构联合发表88页长文:「智能计算」是解药Apr 09, 2023 pm 07:01 PM人工智能就是一个「拼财力」的行业,如果没有高性能计算设备,别说开发基础模型,就连微调模型都做不到。但如果只靠拼硬件,单靠当前计算性能的发展速度,迟早有一天无法满足日益膨胀的需求,所以还需要配套的软件来协调统筹计算能力,这时候就需要用到「智能计算」技术。最近,来自之江实验室、中国工程院、国防科技大学、浙江大学等多达十二个国内外研究机构共同发表了一篇论文,首次对智能计算领域进行了全面的调研,涵盖了理论基础、智能与计算的技术融合、重要应用、挑战和未来前景。论文链接:https://spj.scien

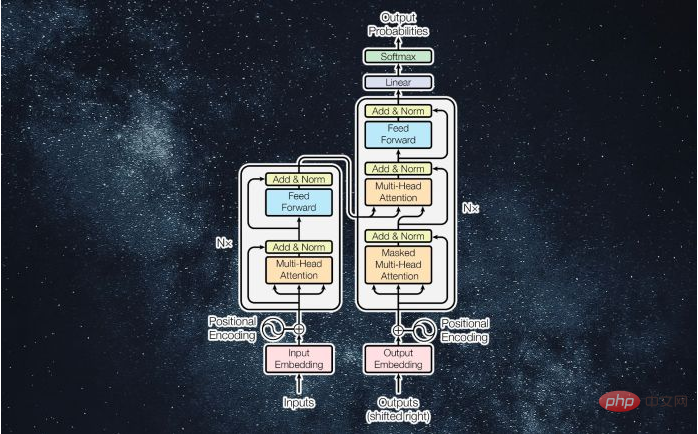

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM

什么是Transformer机器学习模型?Apr 08, 2023 pm 06:31 PM译者 | 李睿审校 | 孙淑娟近年来, Transformer 机器学习模型已经成为深度学习和深度神经网络技术进步的主要亮点之一。它主要用于自然语言处理中的高级应用。谷歌正在使用它来增强其搜索引擎结果。OpenAI 使用 Transformer 创建了著名的 GPT-2和 GPT-3模型。自从2017年首次亮相以来,Transformer 架构不断发展并扩展到多种不同的变体,从语言任务扩展到其他领域。它们已被用于时间序列预测。它们是 DeepMind 的蛋白质结构预测模型 AlphaFold

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM

AI模型告诉你,为啥巴西最可能在今年夺冠!曾精准预测前两届冠军Apr 09, 2023 pm 01:51 PM说起2010年南非世界杯的最大网红,一定非「章鱼保罗」莫属!这只位于德国海洋生物中心的神奇章鱼,不仅成功预测了德国队全部七场比赛的结果,还顺利地选出了最终的总冠军西班牙队。不幸的是,保罗已经永远地离开了我们,但它的「遗产」却在人们预测足球比赛结果的尝试中持续存在。在艾伦图灵研究所(The Alan Turing Institute),随着2022年卡塔尔世界杯的持续进行,三位研究员Nick Barlow、Jack Roberts和Ryan Chan决定用一种AI算法预测今年的冠军归属。预测模型图

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SublimeText3 English version

Recommended: Win version, supports code prompts!

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools