24 times faster, with inverse rendering of large indoor scenes completed in 30 minutes: Rushi's research accepted at CVPR 2023

Rushi's research on inverse rendering has been accepted at CVPR, the top computer vision conference, for two consecutive years, and its scope has grown from processing a single image to covering entire indoor scenes. The work showcases the strength of Rushi's visual algorithms in 3D reconstruction.

Three-dimensional reconstruction is one of the hot topics in computer vision (CV) and computer graphics (CG). It uses CV techniques to process two-dimensional images of real objects and scenes, captured by cameras and other sensors, to obtain their three-dimensional models. As the underlying technologies mature, 3D reconstruction is increasingly used in fields such as smart homes, AR tourism, autonomous driving and high-precision maps, robotics, urban planning, cultural relic reconstruction, and film and entertainment.

Typical three-dimensional face reconstruction from two-dimensional images. Source: 10.1049/iet-cvi.2013.0220

Traditional three-dimensional reconstruction methods can be roughly divided into photometric and geometric methods: the former analyzes changes in pixel brightness, while the latter relies on parallax. In recent years, machine learning, and deep learning in particular, has also been applied, with good results in feature detection, depth estimation, and related tasks. Using spatial geometric models and texture maps, some current methods can already produce scenes whose appearance is almost indistinguishable from the real world.

However, these methods still have limitations. They can only restore the appearance of a scene; they cannot digitize deeper attributes such as illumination, reflectivity, and roughness, let alone allow these attributes to be queried and edited. As a result, their output cannot be converted into physically based rendering (PBR) assets that rendering engines can use, and therefore cannot produce realistic rendering effects. Inverse rendering has gradually emerged as a way to solve these problems.

The inverse rendering task was first proposed by the pioneering computer scientists Barrow and Tenenbaum in 1978. Building on 3D reconstruction, it further recovers intrinsic scene properties such as illumination, reflectivity, roughness, and metallicity to enable more realistic rendering. However, decomposing these attributes from images is highly unstable (ill-posed): different attribute configurations often produce similar appearances. With advances in differentiable rendering and implicit neural representations, some methods have achieved good results on small, object-centered scenes by using explicit or implicit priors.

However, inverse rendering of large-scale indoor scenes remains largely unsolved. Not only is it difficult to recover physically plausible materials in real scenes, it is also difficult to ensure consistency across multiple viewpoints within a scene. Realsee (Rushi), a Chinese technology company that independently develops core algorithms and focuses on large-scale industrial applications of 3D reconstruction, has made a breakthrough on this difficult problem by proposing an efficient multi-view inverse rendering framework for large-scale indoor scenes. The paper has been accepted at CVPR 2023.

- Project address: http://yodlee.top/TexIR/

- Paper address: https://arxiv.org/pdf/2211.10206.pdf

Specifically, starting from accurate spatial data, Rushi's new method infers intrinsic scene attributes such as lighting, reflectivity, and roughness. On top of the 3D reconstruction, it restores lighting and material appearance close to that of the real scene, improving reconstruction quality, cost efficiency, and range of applications.

This article explains Rushi's multi-view inverse rendering technology for large-scale indoor scenes in detail and analyzes its advantages.

Rushi's new inverse rendering technology for large-scale indoor scenes: accurate, detailed, and fast

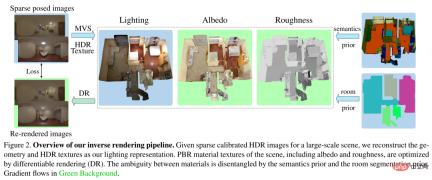

Figure 2 shows an overview of Rushi's new inverse rendering pipeline. Given a calibrated set of HDR images of a large-scale indoor scene, the method aims to accurately recover globally consistent illumination and SVBRDFs (spatially varying bidirectional reflectance distribution functions), which can then be easily integrated into graphics pipelines and downstream applications.

To achieve these goals, Rushi first proposes a compact lighting representation called TBL (Texture-based Lighting). It consists of a 3D mesh and HDR textures and efficiently models global illumination, including direct lighting and infinite-bounce indirect lighting, at any position in a large indoor scene. Based on TBL, Rushi further proposes a mixed lighting representation with precomputed irradiance, which greatly improves efficiency and reduces rendering noise during material optimization. Finally, Rushi introduces a segmentation-based three-stage material optimization strategy that handles the physical ambiguity of materials in complex large-scale indoor scenes.

Texture-based lighting (TBL)

For representing the lighting of large-scale indoor scenes, TBL combines the compactness of neural representations and the global illumination of image-based lighting (IBL) with the interpretability and spatial consistency of parametric lights. TBL is a global representation of the entire scene that defines the outgoing radiance of every surface point. The outgoing radiance of a surface point is taken to be the value of the HDR texture at that point, i.e., the observed HDR radiance of the corresponding pixel in the input HDR images.

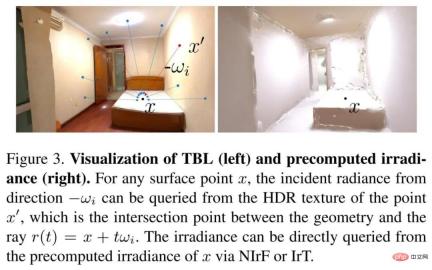

Rushi uses its in-house high-quality 3D reconstruction technology to reconstruct a mesh model of the entire large scene. The HDR texture is then reconstructed from the input HDR images, and the global illumination arriving at any position from any direction can be queried through the HDR texture. Figure 3 (left) below shows a visualization of TBL.
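The TBL query described above can be illustrated with a minimal sketch. It assumes a triangle mesh in which each triangle stores a single HDR RGB radiance value, a hypothetical simplification of a real HDR texture, which would instead be sampled at the hit point's UV coordinates. The light arriving at a point from a direction is the stored outgoing radiance of the first surface the ray hits; all names here are illustrative, not Rushi's code.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

@dataclass
class Triangle:
    v0: Vec3
    v1: Vec3
    v2: Vec3
    radiance: Vec3  # hypothetical: one HDR RGB value per triangle instead of a texture

def intersect(origin: Vec3, direction: Vec3, tri: Triangle, eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore ray/triangle test; returns the hit distance t or None."""
    e1, e2 = sub(tri.v1, tri.v0), sub(tri.v2, tri.v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle
    inv = 1.0 / det
    s = sub(origin, tri.v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the origin

def query_tbl(mesh: Sequence[Triangle], origin: Vec3, direction: Vec3) -> Vec3:
    """Incoming radiance at `origin` from `direction`: the stored outgoing
    radiance of the nearest surface hit, or black if the ray escapes."""
    best_t, best = float("inf"), (0.0, 0.0, 0.0)
    for tri in mesh:
        t = intersect(origin, direction, tri)
        if t is not None and t < best_t:
            best_t, best = t, tri.radiance
    return best
```

For example, a bright ceiling triangle at height 2 returns its radiance to a ray cast straight up from the origin, and black to a ray cast downward. Because the lighting lives on the scene surfaces themselves, the same mesh answers queries from any position, which is the spatial consistency the text attributes to TBL.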

Mixed lighting representation

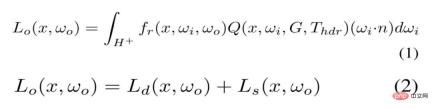

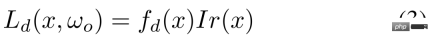

In practice, using TBL directly for material optimization has drawbacks: the large number of Monte Carlo samples it requires leads to high computation and memory costs. Since most of the noise comes from the diffuse component, Rushi precomputes the irradiance of surface points for the diffuse component. The irradiance can then be queried efficiently instead of being computed online at high cost, as shown in Figure 3 (right). Accordingly, the TBL-based rendering equation is rewritten from Equation (1) to Equation (2).
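The cost argument can be made concrete with a small sketch, assuming Lambertian diffuse shading and surface normals along +z. It estimates the irradiance E by cosine-weighted Monte Carlo sampling, bakes it once per point, and reuses it for the diffuse term. `radiance_fn` and `radiance_at` are hypothetical stand-ins for a TBL query, not part of Rushi's method.

```python
import math
import random

def cosine_sample_hemisphere(rng):
    """Draw a cosine-weighted direction around the +z normal (pdf = cos(theta) / pi)."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))

def mc_irradiance(radiance_fn, n_samples, seed=0):
    """Monte Carlo estimate of E = integral of L_i(w) cos(theta) dw over the
    upper hemisphere. With cosine-weighted sampling, L * cos / pdf = pi * L."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += math.pi * radiance_fn(cosine_sample_hemisphere(rng))
    return total / n_samples

def precompute_irradiance(points, radiance_at, n_samples=4096):
    """Bake E(x) once per surface point into a lookup table (normals assumed +z)."""
    return {p: mc_irradiance(lambda w, p=p: radiance_at(p, w), n_samples) for p in points}

def diffuse_radiance(albedo, irradiance):
    """Lambertian diffuse term: albedo / pi * E(x)."""
    return albedo / math.pi * irradiance
```

With a small overhead source (radiance 1 wherever cos(theta) > 0.9), the exact irradiance is 0.19 * pi, about 0.597. A handful of samples gives a noisy estimate, while tens of thousands converge; baking E(x) once and reusing it is far cheaper than re-sampling at every optimization step.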

Rushi proposes two representations for the precomputed irradiance. The first is the Neural Irradiance Field (NIrF), a shallow multilayer perceptron (MLP) that takes a surface point as input and outputs its irradiance. The second is the Irradiance Texture (IrT), which is similar to the light maps commonly used in computer graphics.
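The two precomputed-irradiance representations can be sketched side by side. Both are assumptions for illustration: `TinyNIrF` is an untrained one-hidden-layer MLP whose width, ReLU activation, and softplus output are guesses rather than the paper's architecture, and `sample_irradiance_texture` is an ordinary bilinear light-map lookup standing in for IrT.

```python
import math
import random

class TinyNIrF:
    """Hypothetical shallow MLP mapping a 3D point to RGB irradiance."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(3)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 0.5) for _ in range(hidden)] for _ in range(3)]
        self.b2 = [0.0] * 3

    def __call__(self, x):
        # Hidden layer with ReLU.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        out = [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        # Numerically stable softplus keeps irradiance positive (an assumption).
        return [max(o, 0.0) + math.log1p(math.exp(-abs(o))) for o in out]

def sample_irradiance_texture(tex, u, v):
    """Bilinear lookup in an irradiance texture tex[row][col] of scalar texels,
    with (u, v) in [0, 1] and texel centers at (i + 0.5) / size."""
    h, w = len(tex), len(tex[0])
    x = min(max(u * w - 0.5, 0.0), w - 1.0)
    y = min(max(v * h - 0.5, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy
```

An IrT query is a constant-time texture fetch, while NIrF replaces the texture with a small network evaluation; in the ablation reported later in the article, IrT produced finer, artifact-free albedo.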

This mixed lighting representation thus combines precomputed irradiance for the diffuse component with the original TBL for the specular component, which greatly reduces rendering noise and makes material optimization efficient. The diffuse component in Equation (2) is modeled as shown in Equation (3).
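Equations (1) to (3) are not reproduced in this text. As a hedged illustration of the general shape of the split, the textbook rendering equation with a Lambertian diffuse lobe separates as follows. The symbols (albedo a, specular lobe f_s, irradiance E) are chosen here and may not match the paper's notation.

```latex
\begin{aligned}
L_o(\mathbf{x},\omega_o)
  &= \int_{\Omega}\Big(\frac{\mathbf{a}(\mathbf{x})}{\pi} + f_s(\mathbf{x},\omega_i,\omega_o)\Big)\,
     L_i(\mathbf{x},\omega_i)\,(\mathbf{n}\cdot\omega_i)\,\mathrm{d}\omega_i \\
  &= \underbrace{\frac{\mathbf{a}(\mathbf{x})}{\pi}\,E(\mathbf{x})}_{\text{precomputed irradiance}}
   + \underbrace{\int_{\Omega} f_s(\mathbf{x},\omega_i,\omega_o)\,L_i(\mathbf{x},\omega_i)\,
     (\mathbf{n}\cdot\omega_i)\,\mathrm{d}\omega_i}_{L_i\ \text{queried from TBL}}, \\
E(\mathbf{x}) &= \int_{\Omega} L_i(\mathbf{x},\omega_i)\,(\mathbf{n}\cdot\omega_i)\,\mathrm{d}\omega_i .
\end{aligned}
```

Because the albedo is constant over the hemisphere, it factors out of the diffuse integral, leaving only E(x), which depends on geometry and lighting but not on the material being optimized. That is what makes precomputing it possible.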

Three-stage material estimation based on segmentation

Neural material representations struggle to represent the highly complex materials of large-scale scenes and are not compatible with traditional graphics engines. Rushi therefore optimizes explicit material textures directly on the geometry, using a simplified Disney BRDF model parameterized by spatially varying (SV) albedo and SV roughness. However, because observations are sparse, directly optimizing explicit material textures yields inconsistent roughness that fails to converge.
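A simplified Disney-style BRDF driven only by albedo and roughness might look like the sketch below. The specific form is an assumption: a Lambertian diffuse lobe plus a GGX specular lobe with alpha = roughness^2, Schlick Fresnel with a fixed dielectric F0 of 0.04, and a Schlick-GGX geometry term. The paper's exact simplification may differ, and albedo is a scalar here for brevity.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def simplified_brdf(albedo, roughness, n, l, v, f0=0.04):
    """Lambertian diffuse + GGX specular, parameterized by albedo and roughness.
    n: surface normal, l: direction to light, v: direction to viewer."""
    n, l, v = normalize(n), normalize(l), normalize(v)
    n_l, n_v = max(dot(n, l), 0.0), max(dot(n, v), 0.0)
    if n_l == 0.0 or n_v == 0.0:
        return 0.0  # light or viewer below the surface
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    n_h, v_h = max(dot(n, h), 0.0), max(dot(v, h), 0.0)
    alpha = max(roughness * roughness, 1e-4)  # avoid a degenerate delta lobe
    a2 = alpha * alpha
    d = a2 / (math.pi * (n_h * n_h * (a2 - 1.0) + 1.0) ** 2)      # GGX distribution
    f = f0 + (1.0 - f0) * (1.0 - v_h) ** 5                          # Schlick Fresnel
    k = alpha / 2.0
    g = (n_l / (n_l * (1 - k) + k)) * (n_v / (n_v * (1 - k) + k))   # Smith-Schlick geometry
    specular = d * f * g / (4.0 * n_l * n_v)
    return albedo / math.pi + specular
```

With only two parameters per texel, the material maps remain directly usable in conventional engines. The sharp growth of the GGX peak at low roughness is also why sparse highlights constrain roughness so weakly away from where they are observed.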

To address this, Rushi uses semantic segmentation and room segmentation priors, where semantic images are predicted by a learning-based model and room segmentation is computed from occupancy grids. The optimization follows a three-stage strategy.
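The article only says that room segmentation is computed from occupancy grids. One naive way to do this, assumed here purely for illustration, is to label the 4-connected free cells of a 2D floor-plan occupancy grid. This presumes rooms are fully separated by occupied cells; a real system would also need to handle open doorways.

```python
from collections import deque

def segment_rooms(grid):
    """Label 4-connected free cells (0) of a 2D occupancy grid; occupied cells are 1.
    Returns a same-shaped grid of room ids, with -1 for occupied cells."""
    h, w = len(grid), len(grid[0])
    labels = [[-1] * w for _ in range(h)]
    next_id = 0
    for sy in range(h):
        for sx in range(w):
            if grid[sy][sx] != 0 or labels[sy][sx] != -1:
                continue  # wall, or already part of a labeled room
            labels[sy][sx] = next_id
            queue = deque([(sy, sx)])
            while queue:  # breadth-first flood fill of one room
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and grid[ny][nx] == 0 and labels[ny][nx] == -1:
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels
```

For a grid with a single wall column, such as [[0, 0, 1, 0], [0, 0, 1, 0]], the two sides receive room ids 0 and 1.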

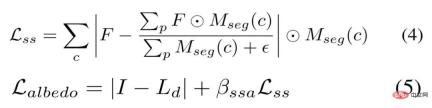

The first stage optimizes a sparse albedo under the Lambertian assumption, instead of initializing the albedo to a constant as is done for small object-centered scenes. Although the diffuse albedo could be computed directly from Equation (3), doing so makes the albedo too bright in highlight areas, which in turn leads to excessive roughness in the next stage. Therefore, as shown in Equation (4) below, Rushi applies a semantic smoothness constraint that encourages similar albedo within the same semantic segment. The sparse albedo is optimized with Equation (5).
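The first-stage reasoning can be sketched as follows. The sketch assumes a scalar albedo per pixel and uses a plain per-segment variance as the smoothness term; Equation (4) itself is not reproduced here, so this form is an assumption. Inverting the Lambertian model gives albedo = pi * L / E, and a specular highlight inflates L, which is exactly why the direct inversion saturates in highlight areas.

```python
import math
from collections import defaultdict

def lambertian_albedo(pixel_radiance, irradiance):
    """Invert L = albedo / pi * E for the albedo, clamped to [0, 1]."""
    return min(max(math.pi * pixel_radiance / irradiance, 0.0), 1.0)

def semantic_smoothness(albedo, labels):
    """Mean squared deviation of albedo from its per-segment mean: one simple
    way to encourage similar albedo within each semantic segment."""
    groups = defaultdict(list)
    for a, s in zip(albedo, labels):
        groups[s].append(a)
    total = 0.0
    for values in groups.values():
        mean = sum(values) / len(values)
        total += sum((a - mean) ** 2 for a in values)
    return total / len(albedo)
```

A highlight pixel (for example radiance 5.0 under irradiance 2.0) clamps straight to an albedo of 1.0. The smoothness term pulls such outliers toward the rest of their segment instead of letting them propagate into the roughness stage.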

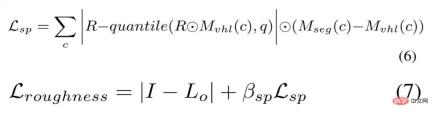

The second stage performs virtual highlight (VHL)-based sampling and semantics-based propagation. In multi-view images, only sparse specular cues are observed, which leads to globally inconsistent roughness, especially in large-scale scenes. With the semantic segmentation prior, however, plausible roughness estimated in highlight areas can be propagated to areas with the same semantics.

Rushi first renders images at the input camera poses with a roughness of 0.01 to find the VHL regions of each semantic class, then optimizes the roughness of these VHL regions with the sparse albedo and lighting frozen. The resulting plausible roughness is propagated to the rest of the same semantic segment via Equation (6) and optimized via Equation (7).
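The second-stage VHL sampling and propagation can be sketched as below. The threshold rule for detecting virtual highlights and the per-class mean used for propagation are hypothetical simplifications, not Equations (6) and (7). `spec_render` stands in for a specular image rendered with roughness 0.01, and `vhl_roughness` for the roughness values optimized at VHL pixels.

```python
from collections import defaultdict

def find_vhl_pixels(spec_render, labels, threshold):
    """Group pixels whose specular intensity, rendered with roughness 0.01,
    exceeds `threshold`, by semantic class (hypothetical threshold rule)."""
    vhl = defaultdict(list)
    for i, (s, c) in enumerate(zip(spec_render, labels)):
        if s > threshold:
            vhl[c].append(i)
    return vhl

def propagate_roughness(labels, vhl, vhl_roughness, default=0.5):
    """Give every pixel the mean optimized roughness of the VHL pixels in its
    semantic class; classes without any VHL keep `default`."""
    class_rough = {
        c: sum(vhl_roughness[i] for i in idx) / len(idx)
        for c, idx in vhl.items()
        if idx
    }
    return [class_rough.get(c, default) for c in labels]
```

The point of the design is that a floor whose highlight is visible in only one view still receives a roughness value everywhere, rather than leaving unobserved regions unconstrained.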

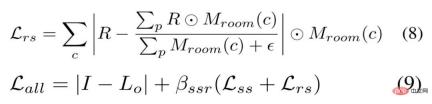

The third stage is segmentation-based fine-tuning. Rushi fine-tunes all material textures using the semantic segmentation and room segmentation priors. Specifically, it applies to roughness a smoothness constraint similar to Equation (4) together with a room smoothness constraint, so that roughness varies smoothly within each room. The room smoothness constraint is defined in Equation (8). No smoothness constraint is applied to the albedo, and the total loss is defined in Equation (9).
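A sketch of the third-stage objective under stated assumptions: a mean-squared re-rendering term plus semantic and room smoothness terms applied to roughness only, with the albedo left unregularized as the text states. The variance-style smoothness terms and the weights `w_sem` and `w_room` are hypothetical and are not the paper's Equations (8) and (9).

```python
from collections import defaultdict

def segment_variance(values, labels):
    """Mean squared deviation of `values` from their per-segment means."""
    groups = defaultdict(list)
    for x, s in zip(values, labels):
        groups[s].append(x)
    total = 0.0
    for group in groups.values():
        mean = sum(group) / len(group)
        total += sum((x - mean) ** 2 for x in group)
    return total / len(values)

def stage3_loss(rendered, observed, roughness, semantic, rooms, w_sem=0.1, w_room=0.1):
    """Hypothetical stage-3 objective: re-rendering error plus semantic and
    room smoothness on roughness. The albedo receives no smoothness term."""
    render = sum((r - o) ** 2 for r, o in zip(rendered, observed)) / len(observed)
    sem = segment_variance(roughness, semantic)
    room = segment_variance(roughness, rooms)
    return render + w_sem * sem + w_room * room
```

Keeping the albedo free of regularization in this stage lets fine texture detail come back, while the roughness terms discourage roughness from leaking across material or room boundaries.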

Experimental settings and effect comparison

Datasets. Rushi used two datasets: a synthetic dataset and a real dataset. For the synthetic dataset, Rushi used a path tracer to create synthetic scenes with different materials and lighting, rendering 24 views for optimization and 14 novel views, along with ground-truth material images for each view. For the real dataset, because commonly used large-scale scene datasets such as ScanNet, Matterport3D, and Replica lack full-HDR images, Rushi collected 10 real full-HDR scenes, each with 10 to 20 full-HDR panoramic images, every one of them merged from 7 bracketed exposures.
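The article does not say how the 7 bracketed exposures are merged. A common approach, assumed here, is a weighted average of per-exposure radiance estimates (pixel value divided by exposure time) under a linear camera response, with a hat weight that discounts near-black and saturated readings.

```python
def merge_brackets(pixel_values, exposure_times):
    """Merge one pixel's bracketed readings into an HDR radiance estimate.
    Assumes a linear camera response with readings in [0, 1]."""
    num, den = 0.0, 0.0
    for z, t in zip(pixel_values, exposure_times):
        w = 1.0 - abs(2.0 * z - 1.0)  # hat weight: 0 at 0 and 1, peak at 0.5
        num += w * z / t               # this exposure's radiance estimate
        den += w
    if den == 0.0:
        # Every reading is black or saturated: fall back to the largest
        # per-exposure estimate (a lower bound if the pixel is saturated).
        return max(z / t for z, t in zip(pixel_values, exposure_times))
    return num / den
```

For example, a pixel of true radiance 0.3 captured at exposure times 0.25 to 16 (doubling each time) saturates in the three longest exposures, and the merge recovers 0.3 from the four unsaturated ones.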

Baseline methods. For recovering SVBRDFs from multi-view images of large-scale scenes, the compared inverse rendering methods include PhyIR, the SOTA learning-based single-image method, as well as InvRender, NVDIFFREC, and NeILF, SOTA neural rendering methods for multi-view, object-centered scenes. For evaluation, Rushi uses PSNR, SSIM, and MSE to quantitatively compare material predictions and re-rendered images, and uses MAE and SSIM to evaluate relit images rendered with different lighting representations.
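For reference, the pixel-wise metrics named above can be written in a few lines, assuming images flattened to lists of values in [0, 1]. SSIM is omitted because it is computed over local windows; a maintained implementation such as scikit-image's `skimage.metrics.structural_similarity` is the safer choice.

```python
import math

def mse(pred, gt):
    """Mean squared error."""
    return sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt)

def mae(pred, gt):
    """Mean absolute error."""
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)

def psnr(pred, gt, max_val=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(pred, gt)
    return float("inf") if err == 0 else 10.0 * math.log10(max_val ** 2 / err)
```

A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and a PSNR of 20 dB; higher PSNR and SSIM and lower MSE and MAE are better.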

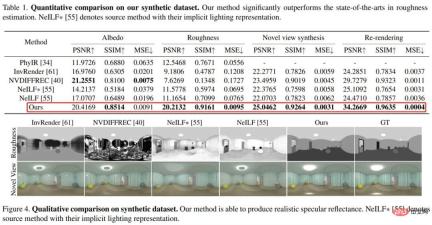

First, the evaluation on the synthetic dataset is shown in Table 1 and Figure 4 below. Rushi's method is significantly better than the SOTA methods at roughness estimation, and its roughness produces physically plausible specular reflections. In addition, NeILF equipped with Rushi's mixed lighting representation shows less ambiguity between materials and lighting than NeILF with its original implicit representation.

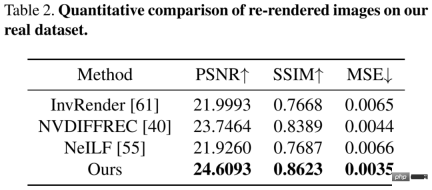

Next, the methods were evaluated on a challenging real dataset with complex materials and lighting. The quantitative results in Table 2 below show that Rushi's method outperforms previous methods. Although the other methods achieve similar re-rendering errors, only Rushi's method disentangles globally consistent, physically plausible materials.

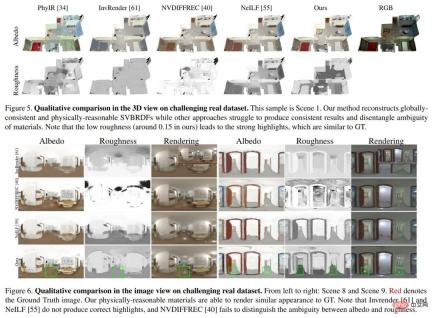

Figures 5 and 6 below show qualitative comparisons in 3D views and 2D image views, respectively. PhyIR generalizes poorly because of the large domain gap and cannot produce globally consistent predictions. InvRender, NVDIFFREC, and NeILF produce blurry predictions with artifacts, making it hard to disentangle the correct materials. Although NVDIFFREC comes close to Rushi's method in performance, it cannot resolve the ambiguity between albedo and roughness, so highlights from the specular component are incorrectly baked into the diffuse albedo.

Ablation experiment

To demonstrate the effectiveness of its lighting representation and material optimization strategy, Rushi conducted ablation experiments on TBL, the mixed lighting representation, the first-stage albedo initialization, the second-stage VHL sampling and semantic propagation for roughness estimation, and the third-stage segmentation-based fine-tuning.

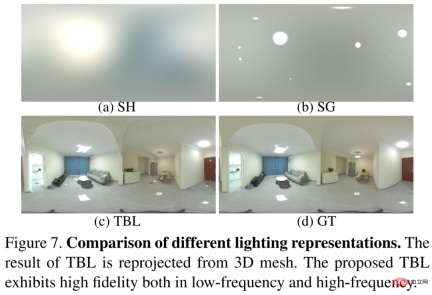

First, TBL was compared with the SH and SG lighting widely used in previous methods, with results shown in Figure 7 below. TBL captures both low-frequency and high-frequency lighting and shows high fidelity.

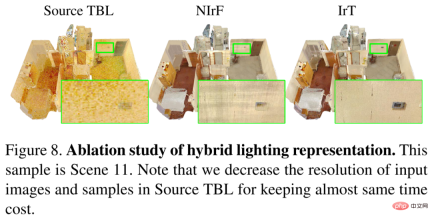

Next, the mixed lighting representation was compared with the original TBL, with results shown in Figure 8 below. Without the mixed lighting representation, the albedo is noisy and converges slowly. Introducing precomputed irradiance makes it possible to use high-resolution inputs to recover fine materials and greatly speeds up optimization. In addition, IrT produces finer, artifact-free albedo compared with NIrF.

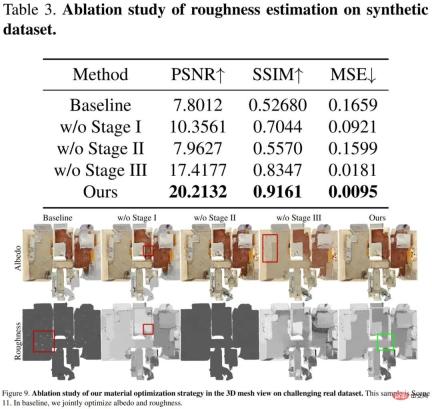

The effectiveness of the three-stage strategy was verified, and the results are shown in Table 3 and Figure 9 below. The baseline roughness fails to converge and only the highlight areas are updated. Without albedo initialization in the first stage, the highlight areas will be too bright and result in incorrect roughness. The second stage of VHL-based sampling and semantic-based propagation is crucial to recover reasonable roughness in areas where specular highlights are not observed. The third stage of segmentation-based fine-tuning produces fine albedo, making the final roughness smoother and preventing error propagation of roughness between different materials.

What are the strengths of Rushi's new inverse rendering technology?

In fact, Rushi had already achieved SOTA results in single-image inverse rendering by proposing a neural network training method in its CVPR 2022 paper "PhyIR: Physics-based Inverse Rendering for Panoramic Indoor Images". The new framework goes further: it handles multiple views and entire homes, spaces, and scenes, and it also fixes several key shortcomings of previous inverse rendering methods.

First, previous methods trained on synthetic data did not perform well in real scenes. Rushi's new inverse rendering framework introduces a "hierarchical scene prior" for the first time. Through multi-stage material optimization, combined with the living-space data in Rushi Digital Space, the world's largest three-dimensional spatial database, the framework makes hierarchical and accurate predictions of physical properties in the space such as illumination, reflectivity, and roughness.

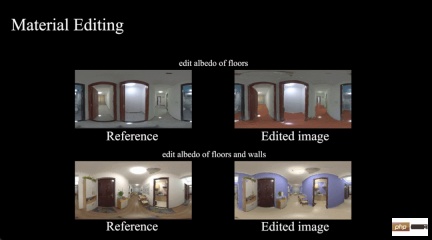

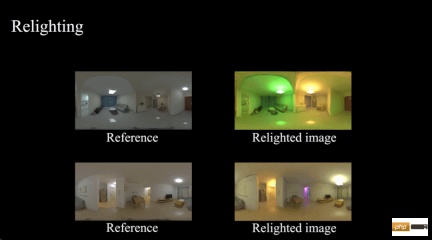

The final output is a physically plausible, globally consistent set of material maps of multiple types, which seamlessly converts indoor scene data captured by Rushi devices into digital rendering assets compatible with mainstream rendering engines such as Unity and Blender. This enables automatic generation of scene assets and physically based MR applications such as material editing, novel view synthesis, relighting, and virtual object insertion. Such versatile digital assets can support a wider range of applications and products in the future.

Material editing

Relighting

Second, previous optimization-based differentiable rendering methods have very high computational costs and low efficiency. In recent years, to better solve the inverse rendering problem and reduce dependence on training data, differentiable rendering methods have been proposed: they make the forward rendering process differentiable, back-propagate gradients to the rendering parameters, and finally obtain the physical parameters through optimization. Such methods include spherical harmonic (SH) lighting [1] and volumetric spherical Gaussian (VSG) lighting.
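The idea of back-propagating a rendering error to physical parameters can be shown with a toy example: a single Lambertian pixel with known irradiance, whose albedo is recovered by gradient descent using a hand-derived gradient. This only illustrates the principle; real differentiable renderers differentiate full light transport automatically and optimize millions of parameters.

```python
import math

def render_diffuse(albedo, irradiance):
    """Forward model: Lambertian radiance L = albedo / pi * E."""
    return albedo / math.pi * irradiance

def fit_albedo(observed, irradiance, lr=0.5, steps=200):
    """Recover the albedo by gradient descent on the squared re-rendering error.
    d/da (render - obs)^2 = 2 * (render - obs) * E / pi.
    Converges when 0 < lr * (E / pi)**2 < 1."""
    a = 0.5  # initial guess
    for _ in range(steps):
        residual = render_diffuse(a, irradiance) - observed
        grad = 2.0 * residual * irradiance / math.pi
        a -= lr * grad
    return a
```

Rendering a pixel with albedo 0.3 under irradiance 2.0 and feeding the result back into `fit_albedo` recovers 0.3. In a full scene the same loop runs over every texel, which is why the cost of evaluating the lighting in each forward pass dominates.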

However, large-scale indoor scenes contain many complex optical effects such as occlusion and shadows, and modeling global illumination in differentiable rendering incurs high computational cost. By contrast, the proposed TBL represents the global illumination of indoor scenes efficiently and accurately with only about 20MB of memory, whereas dense-grid-based VSG lighting [2] requires about 1GB and the sparse-grid-based SH lighting method Plenoxels [3] requires about 750MB, a reduction of dozens of times.

Moreover, Rushi's new method can complete inverse rendering of an entire indoor scene within 30 minutes, whereas the traditional method [4] may take around 12 hours, a 24-fold speedup. The large increase in speed means lower costs and a clearer cost-performance advantage, bringing large-scale practical applications one step closer.
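The memory and speed ratios quoted above follow directly from the reported figures. This check takes 1GB as 1024MB, which is an assumption:

```python
def ratio(baseline, ours):
    """How many times larger (or slower) the baseline is than the new method."""
    return baseline / ours

memory_vs_vsg = ratio(1024, 20)        # dense-grid VSG (~1GB) vs TBL (~20MB)
memory_vs_plenoxels = ratio(750, 20)   # Plenoxels (~750MB) vs TBL (~20MB)
speedup = ratio(12 * 60, 30)           # ~12 hours vs 30 minutes, in minutes

print(memory_vs_vsg)        # 51.2
print(memory_vs_plenoxels)  # 37.5
print(speedup)              # 24.0
```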

Finally, previous NeRF-like neural inverse rendering methods (such as PS-NeRF [5] and NeRFactor [6]) are mainly designed for small, object-centered scenes and struggle to model large-scale indoor scenes. Building on Rushi's accurate digital space models and its efficient, accurate mixed lighting representation, the new inverse rendering framework solves this problem by introducing semantic segmentation and room segmentation priors.

Commenting on the new inverse rendering framework, Rushi chief scientist Pan Cihui said: "It truly achieves a deeper digitization of the real world. It solves the difficulty previous inverse rendering methods had in recovering physically plausible materials and lighting in real scenes, as well as the problem of multi-view consistency, and opens up more possibilities for applications of 3D reconstruction and MR."

Building on its inverse rendering advantages, Rushi drives VR industry integration in digital space

Over the years, Rushi has built up substantial technology in 3D reconstruction, invested heavily in refining and deploying its algorithms, and strongly supported research on cutting-edge technologies, with an emphasis on mastering industry-leading ones. This foundation has helped Rushi's 3D real-scene reconstruction and MR research, including this new inverse rendering technology, gain recognition from the international academic community, and has brought Rushi's algorithm capabilities to an internationally leading level in both theoretical research and technical application.

These algorithmic and technical advantages will enable deeper digitization of the real world and further accelerate the construction of digital space. To date, using its self-developed capture devices, Rushi Digital Space has accumulated more than 27 million sets of captured spaces across different countries and application scenarios, covering a total area of 2.274 billion square meters. Rushi Digital Space will also support Rushi's push toward VR industry integration, bringing new opportunities for digital upgrades to industries such as commercial retail, industrial facilities, cultural exhibitions, public affairs, home decoration, and real estate transactions, for example through VR house viewing and VR museums.

AI marketing assistant created by Rushi

For VR industry integration, Rushi's biggest advantage is the combination of continuously evolving digital reconstruction algorithms and a massive accumulation of real-world data, which gives it both high technical barriers and high data barriers. The algorithms and data also reinforce each other to some extent, steadily widening this advantage. At the same time, these barriers make it easier for Rushi to address pain points in various industries, deliver technical solutions, and create new models of industry development.

Rushi's inverse rendering work, accepted at CVPR two years in a row, reflects its aim to make a difference in MR and bring it into real industrial use. In the future, Rushi hopes to bridge the gap between real-scene VR and purely virtual simulation, achieve a true integration of the virtual and the real, and build more industry applications.
