Let's talk about how to use Node to achieve content compression through practice

How can you compress response content in Node.js? This article walks through implementing content compression (gzip/br/deflate) on the Node side in practice. I hope it will be helpful to you!

While checking my application's log page, I noticed that it always took a few seconds to load after opening it (the API is not paginated). So I opened the Network panel to take a look.

Only then did I find that the data returned by the API was not compressed. I had assumed that, since the API sits behind an Nginx reverse proxy, Nginx would automatically handle this layer for me (I will explore this later; in theory it is feasible).

The backend here is a Node service.

This article shares the basics of HTTP data compression and how to put them into practice on the Node side.

Prerequisites

In what follows, "client" always refers to the browser.

accept-encoding

When the client sends a request to the server, it adds an accept-encoding field to the request header. Its value lists the content encodings (compression formats) the client supports, such as gzip, deflate, br.

content-encoding

The server adds a content-encoding field to the response header to indicate the encoding actually used to compress the content.

deflate/gzip/br

deflate is a lossless data compression algorithm that uses both the LZ77 algorithm and Huffman coding.

gzip is an algorithm based on DEFLATE.

br refers to Brotli, a data format aimed at further improving the compression ratio. For text, it can increase compression density by 20% relative to deflate, while compression and decompression speed remain roughly unchanged.

zlib module

Node.js contains a zlib module that provides compression functionality implemented using Gzip, Deflate/Inflate, and Brotli.

Here we take gzip as an example and list the various usage methods by scenario. Deflate/Inflate and Brotli are used in the same way; only the API names differ.
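To illustrate that last point, here is a small sketch (not from the original article) that round-trips the same text through the synchronous gzip, deflate and Brotli functions of Node's zlib module. The sample string and the roundTripAll helper are illustrative assumptions.

```javascript
const zlib = require('zlib')

// Compress/decompress function pairs for each algorithm in Node's zlib module.
// The call shape is identical; only the function names differ.
const codecs = {
  gzip: [zlib.gzipSync, zlib.gunzipSync],
  deflate: [zlib.deflateSync, zlib.inflateSync],
  br: [zlib.brotliCompressSync, zlib.brotliDecompressSync],
}

// Round-trip `text` through every codec and report the compressed size in bytes
// plus whether the decompressed text matches the input.
function roundTripAll(text) {
  const input = Buffer.from(text)
  const report = {}
  for (const [name, [compress, decompress]] of Object.entries(codecs)) {
    const compressed = compress(input)
    const restored = decompress(compressed).toString('utf8')
    report[name] = { bytes: compressed.length, ok: restored === text }
  }
  return report
}

console.log(roundTripAll('测试数据123'.repeat(1000)))
```

The byte counts depend heavily on the input, so treat them as a rough comparison rather than a benchmark.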

The gzip examples below fall into two groups:

- stream-based operations
- buffer-based operations

First, import the modules the examples need and define the file paths:

```javascript
const zlib = require('zlib')
const fs = require('fs')
const stream = require('stream')

const testFile = 'tests/origin.log'
const targetFile = `${testFile}.gz`
const decodeFile = `${testFile}.un.gz`
```

Compressing/decompressing files

To check the results of compression and decompression, the du command is used here to report the file sizes before and after:

```shell
# Run
du -ah tests

# Output
108K tests/origin.log.gz
2.2M tests/origin.log
2.2M tests/origin.log.un.gz
4.6M tests
```
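A quick worked ratio from the du output above (du -h rounds sizes, and 2.2M is taken as 2.2 × 1024 K, so the figures are approximate):

```latex
\frac{108\,\mathrm{K}}{2.2 \times 1024\,\mathrm{K}} \approx \frac{108}{2253} \approx 0.048
```

In other words, the gzipped log is roughly 4.8% of the original size, about 21 times smaller, and the decompressed file is back at the original 2.2M.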

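Stream-based operations: createGzip and createUnzip

Note: all zlib APIs, except those that are explicitly synchronous, use the Node.js internal thread pool and can be regarded as asynchronous.

Therefore, the compression and decompression code in the following examples should be run separately. Run together, decompression may start reading the .gz file before compression has finished writing it; in addition, each snippet reuses the same const names, which is a SyntaxError in a single scope.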

Method 1: chain the streams directly with the instance's pipe method

```javascript
// Compress
const readStream = fs.createReadStream(testFile)
const writeStream = fs.createWriteStream(targetFile)
readStream.pipe(zlib.createGzip()).pipe(writeStream)

// Decompress (run separately)
const readStream = fs.createReadStream(targetFile)
const writeStream = fs.createWriteStream(decodeFile)
readStream.pipe(zlib.createUnzip()).pipe(writeStream)
```

Method 2: use pipeline from the stream module; its callback lets you handle the result (for example, errors) separately

```javascript
// Compress
const readStream = fs.createReadStream(testFile)
const writeStream = fs.createWriteStream(targetFile)
stream.pipeline(readStream, zlib.createGzip(), writeStream, err => {
  if (err) {
    console.error(err)
  }
})

// Decompress (run separately)
const readStream = fs.createReadStream(targetFile)
const writeStream = fs.createWriteStream(decodeFile)
stream.pipeline(readStream, zlib.createUnzip(), writeStream, err => {
  if (err) {
    console.error(err)
  }
})
```

Method 3: promisify the pipeline method

```javascript
const { promisify } = require('util')
const pipeline = promisify(stream.pipeline)

// Compress
const readStream = fs.createReadStream(testFile)
const writeStream = fs.createWriteStream(targetFile)
pipeline(readStream, zlib.createGzip(), writeStream)
  .catch(err => {
    console.error(err)
  })

// Decompress (run separately)
const readStream = fs.createReadStream(targetFile)
const writeStream = fs.createWriteStream(decodeFile)
pipeline(readStream, zlib.createUnzip(), writeStream)
  .catch(err => {
    console.error(err)
  })
```
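On Node.js 15 and later (an assumption about your runtime), the built-in stream/promises module exports a promise-returning pipeline, so the promisify step is no longer needed. Because each pipeline can then be awaited, compression and decompression can also run in one script, one after the other. A minimal sketch; the function name gzipThenGunzip is hypothetical.

```javascript
const fs = require('fs')
const zlib = require('zlib')
const { pipeline } = require('stream/promises')

// Compress `src` into `dest`, then decompress `dest` into `out`.
// Awaiting the first pipeline guarantees the .gz file is completely written
// before the second pipeline starts reading it.
async function gzipThenGunzip(src, dest, out) {
  await pipeline(fs.createReadStream(src), zlib.createGzip(), fs.createWriteStream(dest))
  await pipeline(fs.createReadStream(dest), zlib.createUnzip(), fs.createWriteStream(out))
}
```

For example, gzipThenGunzip(testFile, targetFile, decodeFile).catch(console.error) would produce both files for the du comparison in a single run.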

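Buffer-based operations: the gzip and unzip APIs, which come in both asynchronous and synchronous variants

- gzip (asynchronous) / gzipSync (synchronous)
- unzip (asynchronous) / unzipSync (synchronous)

Method 1: collect the readStream into a Buffer, then process it further

gzip: asynchronous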

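```javascript
// Compress
const readStream = fs.createReadStream(testFile)
const buff = []
readStream.on('data', (chunk) => {
  buff.push(chunk)
})
readStream.on('end', () => {
  // zlib.gzip(buffer[, options], callback): the second argument is options, not a file path
  zlib.gzip(Buffer.concat(buff), (err, resBuff) => {
    if (err) {
      console.error(err)
      process.exit(1)
    }
    fs.writeFileSync(targetFile, resBuff)
  })
})
```

gzipSync: synchronous

```javascript
// Compress
const readStream = fs.createReadStream(testFile)
const buff = []
readStream.on('data', (chunk) => {
  buff.push(chunk)
})
readStream.on('end', () => {
  fs.writeFileSync(targetFile, zlib.gzipSync(Buffer.concat(buff)))
})
```

Method 2: read the file directly with readFileSync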

```javascript
// Compress
const originBuffer = fs.readFileSync(testFile)
fs.writeFileSync(targetFile, zlib.gzipSync(originBuffer))

// Decompress: the sync APIs finish before returning, so this can run right after
const gzBuffer = fs.readFileSync(targetFile)
fs.writeFileSync(decodeFile, zlib.unzipSync(gzBuffer))
```
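The callback-style zlib.gzip from Method 1 can also be wrapped with util.promisify, the same way pipeline was promisified in the stream examples. The sketch below, assuming fs.promises for non-blocking file I/O, keeps both the file reads and the compression off the main thread; compressThenDecompress is a hypothetical name.

```javascript
const fs = require('fs')
const zlib = require('zlib')
const { promisify } = require('util')

// Promise-returning versions of the callback-style buffer APIs
const gzip = promisify(zlib.gzip)
const unzip = promisify(zlib.unzip)

// Compress `src` into `dest`, then decompress `dest` into `out`.
async function compressThenDecompress(src, dest, out) {
  const compressed = await gzip(await fs.promises.readFile(src))
  await fs.promises.writeFile(dest, compressed)
  // unzip auto-detects a gzip or deflate header
  const restored = await unzip(await fs.promises.readFile(dest))
  await fs.promises.writeFile(out, restored)
}
```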

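Compressing/decompressing text content

Here we take compressing text content as an example.

```javascript
// Test data
const testData = fs.readFileSync(testFile, { encoding: 'utf-8' })
```

Stream-based operations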

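Here we only need to handle the string => buffer => stream conversion.

string => buffer

```javascript
const buffer = Buffer.from(testData)
```

buffer => stream

```javascript
// Option 1: a PassThrough stream; end(buffer) writes the data and signals the end,
// so the downstream gzip stream can flush and finish
const transformStream = new stream.PassThrough()
transformStream.end(buffer)

// Option 2 (alternative, do not combine with Option 1): a Readable that pushes
// the data, then null to mark the end of the stream
// const transformStream = new stream.Readable({ read() {} })
// transformStream.push(buffer)
// transformStream.push(null)
```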

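Here we write to a file as an example; of course, you can also write to other streams, such as an HTTP Response (covered separately later).

```javascript
transformStream
  .pipe(zlib.createGzip())
  .pipe(fs.createWriteStream(targetFile))
```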
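As an alternative to the PassThrough/Readable setup above, Readable.from can wrap the buffer in a stream that ends by itself, and the stream/promises pipeline (assuming Node.js 15+) can collect the gzip output in memory instead of writing a file, which is handy for checking results. gzipStringViaStream is a hypothetical name.

```javascript
const zlib = require('zlib')
const { Readable } = require('stream')
const { pipeline } = require('stream/promises')

// Gzip a string through streams and collect the compressed bytes in memory.
async function gzipStringViaStream(text) {
  const chunks = []
  await pipeline(
    // Readable.from([...]) emits the single buffer, then ends on its own
    Readable.from([Buffer.from(text)]),
    zlib.createGzip(),
    // An async function as the last stage consumes the gzip output
    async function (source) {
      for await (const chunk of source) chunks.push(chunk)
    }
  )
  return Buffer.concat(chunks)
}
```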

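Buffer-based operations

Likewise, use Buffer.from to convert the string into a buffer:

```javascript
const buffer = Buffer.from(testData)
```

Then convert it directly with the synchronous API; result here is the compressed content:

```javascript
const result = zlib.gzipSync(buffer)
```

It can be written to a file, and in an HTTP Server the compressed content can also be returned directly:

```javascript
fs.writeFileSync(targetFile, result)
```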
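The text examples only show the compression direction. Restoring the string is a single call to the matching synchronous API; the sketch below uses a hypothetical sample string in place of testData so it runs without the log file.

```javascript
const zlib = require('zlib')

// Hypothetical sample text standing in for testData read from the file
const sample = 'GET /api/logs 200 12ms\n'.repeat(2000)

// Compress, then restore with the matching synchronous API
const compressed = zlib.gzipSync(Buffer.from(sample))
const restored = zlib.gunzipSync(compressed).toString('utf8')

console.log(`original: ${Buffer.byteLength(sample)} bytes, gzip: ${compressed.length} bytes`)
console.log('round trip ok:', restored === sample)
```

zlib.unzipSync would work here as well, since it auto-detects gzip and deflate headers.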

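Practice in a Node Server

Here we use Node's built-in http module to create a simple server for demonstration.

Other Node web frameworks follow a similar approach, and they usually also offer ready-made plugins that can be enabled in one step.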

```javascript
const http = require('http')
const { PassThrough, pipeline } = require('stream')
const zlib = require('zlib')

// Test data
const testTxt = '测试数据123'.repeat(1000)

const app = http.createServer((req, res) => {
  const { url } = req
  // Read the compression algorithms the client supports
  // (the header may be absent, and match() returns null when nothing matches)
  const acceptEncoding = (req.headers['accept-encoding'] || '').match(/(br|deflate|gzip)/g) || []
  // Default response content type (the test data is plain text, not JSON)
  res.setHeader('Content-Type', 'text/plain; charset=utf-8')

  // Example routes
  const routes = [
    ['/gzip', () => {
      if (acceptEncoding.includes('gzip')) {
        res.setHeader('content-encoding', 'gzip')
        // Compress the text directly with the synchronous API
        res.end(zlib.gzipSync(Buffer.from(testTxt)))
        return
      }
      res.end(testTxt)
    }],
    ['/deflate', () => {
      if (acceptEncoding.includes('deflate')) {
        res.setHeader('content-encoding', 'deflate')
        // Single write through a stream
        const originStream = new PassThrough()
        originStream.write(Buffer.from(testTxt))
        originStream.pipe(zlib.createDeflate()).pipe(res)
        originStream.end()
        return
      }
      res.end(testTxt)
    }],
    ['/br', () => {
      if (acceptEncoding.includes('br')) {
        res.setHeader('content-encoding', 'br')
        res.setHeader('Content-Type', 'text/html; charset=utf-8')
        // Multiple writes through a stream
        const originStream = new PassThrough()
        pipeline(originStream, zlib.createBrotliCompress(), res, (err) => {
          if (err) {
            console.error(err)
          }
        })
        originStream.write(Buffer.from('<h1>BrotliCompress</h1>'))
        originStream.write(Buffer.from('<h2>Test data</h2>'))
        originStream.write(Buffer.from(testTxt))
        originStream.end()
        return
      }
      res.end(testTxt)
    }]
  ]

  const route = routes.find(v => url.startsWith(v[0]))
  if (route) {
    route[1]()
    return
  }

  // Fallback: 404 page listing the registered routes
  res.statusCode = 404
  res.setHeader('Content-Type', 'text/html; charset=utf-8')
  res.end(`<h1>404: ${url}</h1>
<h2>Registered routes</h2>
<ul>
${routes.map(r => `<li><a href="${r[0]}">${r[0]}</a></li>`).join('')}
</ul>
`)
})

app.listen(3000)
```
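The demo routes above hard-code one algorithm per URL and only check whether each name appears in accept-encoding. A server that serves one URL to every client would instead pick the best encoding the client accepts. Below is a sketch of that negotiation step; pickEncoding and createCompressor are hypothetical helper names, and the preference order br > gzip > deflate plus the handling of q-values (only q=0 counts as "not acceptable"; other weights are ignored) are simplifying assumptions.

```javascript
const zlib = require('zlib')

// Hypothetical helper: pick a content-encoding from an Accept-Encoding header value.
// Simplification: prefer br > gzip > deflate and only treat q=0 as "not acceptable".
function pickEncoding(acceptEncoding = '') {
  const qByName = new Map()
  for (const part of acceptEncoding.split(',')) {
    const [name, ...params] = part.trim().toLowerCase().split(';')
    if (!name) continue
    let q = 1
    for (const param of params) {
      const [key, value] = param.trim().split('=')
      if (key === 'q') q = Number(value)
    }
    qByName.set(name, q)
  }
  for (const encoding of ['br', 'gzip', 'deflate']) {
    // Fall back to the wildcard entry when the encoding is not listed explicitly
    const q = qByName.has(encoding) ? qByName.get(encoding) : qByName.get('*')
    if (q !== undefined && q > 0) return encoding
  }
  return null // send the body uncompressed (identity)
}

// Hypothetical helper: map an encoding name to the matching zlib transform stream.
function createCompressor(encoding) {
  switch (encoding) {
    case 'br': return zlib.createBrotliCompress()
    case 'gzip': return zlib.createGzip()
    case 'deflate': return zlib.createDeflate()
    default: return null
  }
}
```

In a request handler you would call pickEncoding(req.headers['accept-encoding']), set content-encoding when the result is not null, also set Vary: Accept-Encoding so that caches keep compressed and uncompressed variants apart, and pipeline the body through createCompressor(encoding) into res.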

The above is the detailed content of Let's talk about how to use Node to achieve content compression through practice. For more information, please follow other related articles on the PHP Chinese website!

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

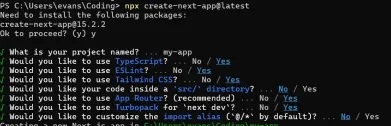

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.