This article takes a closer look at the cluster module in Node.js and introduces the cluster events. I hope you find it helpful!

1. Introduction

The cluster module was introduced directly into Node in v0.8. It addresses multi-core CPU utilization and also provides a fairly complete API for keeping processes robust.

The cluster module calls the fork method to create child processes, which is the same fork as in child_process. It uses the classic master-worker (master-slave) model: cluster creates one master and then forks as many child processes as you specify. You can use the cluster.isMaster property (cluster.isPrimary since Node 16, where isMaster is deprecated) to determine whether the current process is the master or a worker. The master manages all child processes; it does not handle specific tasks itself, and its main job is scheduling and management.
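A minimal sketch of the role check described above. It assumes newer Node versions expose cluster.isPrimary while older ones only expose cluster.isMaster; roleOf is a hypothetical helper name, not part of the cluster API.

```javascript
const cluster = require('cluster');

// Returns 'primary' or 'worker' for a cluster-like object.
// Prefers isPrimary and falls back to the older isMaster when it is missing.
function roleOf(clusterLike) {
  const isPrimary = clusterLike.isPrimary ?? clusterLike.isMaster;
  return isPrimary ? 'primary' : 'worker';
}

console.log(`process ${process.pid} runs as ${roleOf(cluster)}`);
```

Wrapping the check in one place keeps the rest of the code independent of which property name the running Node version provides.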

The cluster module has built-in load balancing to spread the load across worker processes. It uses the round-robin algorithm, which is the default policy on every platform except Windows. With round-robin scheduling, the master accept()s all incoming connections and then hands each TCP connection to the selected worker process for handling (still communicating over IPC). The official usage example is as follows:

const cluster = require('cluster');
const cpuNums = require('os').cpus().length;
const http = require('http');

if (cluster.isMaster) {
  for (let i = 0; i < cpuNums; i++) {
    cluster.fork();
  }

  // Listen for child process exits
  cluster.on('exit', (worker, code, signal) => {
    console.log('worker process died, pid', worker.process.pid);
  });
} else {
  // Label the child process with a process title
  process.title = `cluster worker ${process.pid}`;

  // Workers can share the same TCP connection; here it is an HTTP server
  http.createServer((req, res) => {
    res.end(`response from worker ${process.pid}`);
  }).listen(3000);

  console.log(`Worker ${process.pid} started`);
}

In fact, the cluster module is built from a combination of the child_process and net modules. When the cluster starts, it starts a TCP server internally, and when cluster.fork() creates a child process, the file descriptor of that TCP server socket is sent to the worker process. If a process was created through cluster.fork(), its environment contains NODE_UNIQUE_ID. If the worker calls listen() on a network port, it obtains that file descriptor and reuses the port through SO_REUSEADDR, which is what lets multiple child processes share a port.

2. Cluster events

fork: Triggered after a worker process is forked;

online: After being forked, the worker process sends an online message to the master process; the event is triggered when the master receives it;

listening: After listen() is called in a worker process (the server-side socket is shared), a listening message is sent to the master process; the event is triggered when the master receives it;

disconnect: Triggered when the IPC channel between the master process and a worker process is disconnected;

exit: Triggered when a worker process exits;

setup: Triggered after cluster.setupMaster() is executed;

Most of these events correspond to events of the child_process module and are built on top of inter-process message passing.
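The exit event is the usual hook for keeping a cluster robust. The following is a minimal sketch of a restart policy, not a production supervisor: keepAlive is a hypothetical helper, and the fake emitter exists only so the logic can be exercised without forking real processes. In real use, the cluster module itself would be passed in.

```javascript
const { EventEmitter } = require('events');

// Re-fork a worker whenever one exits unexpectedly.
// `clusterLike` is anything with on('exit', ...) and fork(), e.g. require('cluster').
function keepAlive(clusterLike, log = console.log) {
  clusterLike.on('exit', (worker, code, signal) => {
    // exitedAfterDisconnect is true when the worker exited due to .disconnect().
    if (worker.exitedAfterDisconnect) {
      log(`worker ${worker.process.pid} left on purpose, not restarting`);
      return;
    }
    log(`worker ${worker.process.pid} died (${signal || code}), restarting`);
    clusterLike.fork();
  });
}

// Dry run with a stand-in emitter (an assumption made for illustration only).
const fake = Object.assign(new EventEmitter(), {
  forks: 0,
  fork() { this.forks += 1; },
});
keepAlive(fake, () => {});
fake.emit('exit', { exitedAfterDisconnect: false, process: { pid: 1 } }, 1, null);
fake.emit('exit', { exitedAfterDisconnect: true, process: { pid: 2 } }, 0, null);
console.log(fake.forks); // 1
```

Only the crashed worker triggers a new fork; the worker that disconnected voluntarily is left alone.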

cluster.on('fork', () => {
  console.log('fork event...');
});

cluster.on('online', () => {
  console.log('online event...');
});

cluster.on('listening', () => {
  console.log('listening event...');
});

cluster.on('disconnect', () => {
  console.log('disconnect event...');
});

cluster.on('exit', () => {
  console.log('exit event...');
});

cluster.on('setup', () => {
  console.log('setup event...');
});

3. Communication between master and worker

As shown above, the master process creates worker processes through cluster.fork(). Internally, cluster.fork() creates the child process through child_process.fork(). In other words, the master and worker are parent and child processes, and they communicate over an IPC channel just like any parent and child created by child_process.

IPC stands for Inter-Process Communication. Its purpose is to let different processes access each other's resources and coordinate their work. The IPC channel in Node is implemented with pipes; the concrete implementation is provided by libuv, which uses named pipes on Windows and Unix domain sockets on *nix systems. At the application layer, inter-process communication is exposed only as a simple message event and a send() method, which makes it very easy to use.

Before actually creating the child process, the parent process creates the IPC channel and listens on it. It then creates the child process and passes it the file descriptor of the IPC channel through an environment variable (NODE_CHANNEL_FD). During startup, the child process connects to the existing IPC channel using that file descriptor, which completes the connection between parent and child.

Once the connection is established, parent and child can communicate freely. Because IPC channels are created with named pipes or domain sockets, they behave much like network sockets and are bidirectional. The difference is that they complete inter-process communication inside the system kernel without going through the actual network layer, which is very efficient. In Node, the IPC channel is abstracted as a Stream object: calling send() sends data (similar to write()), and received messages are delivered to the application layer through the message event (similar to data).
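The send()/message pair can be tried with a single round trip. This is a sketch under a few assumptions: the child is started with `node -e` only to keep everything in one file (child_process.fork() with a separate module would be the more usual choice), and roundTrip and childSource are hypothetical names.

```javascript
const { spawn } = require('child_process');

// Source of the child process, passed inline via `node -e`.
const childSource = `
  process.on('message', (msg) => {
    // Reply over the same channel, then close it so the child can exit.
    process.send({ echo: msg }, () => process.disconnect());
  });
`;

// Starts a child with an IPC channel, sends one message, resolves with the reply.
function roundTrip(payload) {
  return new Promise((resolve, reject) => {
    // The 'ipc' entry in stdio asks Node to set up the channel for the child.
    const child = spawn(process.execPath, ['-e', childSource], {
      stdio: ['ignore', 'inherit', 'inherit', 'ipc'],
    });
    child.once('message', resolve);
    child.once('error', reject);
    child.send(payload);
  });
}

roundTrip({ hello: 'child' }).then((reply) => console.log(reply.echo));
```

The payload is serialized on one side and arrives as a plain object on the other, which is all the application layer sees of the channel.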

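While a server instance is being created, the master and worker processes communicate over the IPC channel. Could that interfere with our own code, for example by delivering a pile of messages we do not care about? The answer is no. So how is that achieved?

Node adds the ability to send handles between processes. Besides sending data over IPC, the send() method can also send a handle, passed as the second argument, as shown below:

child.send(message, [sendHandle])

A handle is a reference that identifies a resource; internally it contains the file descriptor pointing to the object. For example, a handle can identify a server-side socket object, a client-side socket object, a UDP socket, a pipe, and so on. So is sending a handle any different from sending the server object itself to the child process? Does it really send the server object to the child?

In fact, before writing a message to the IPC pipe, send() assembles it into two objects: one is the handle and the other is the message. The message looks like this: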

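{
  cmd: 'NODE_HANDLE',
  type: 'net.Server',
  msg: message
}

What is actually sent over the IPC pipe is the file descriptor of the handle, which is an integer value. When written to the IPC pipe, the message object is serialized into a string with JSON.stringify. The child process reads the messages sent by the parent from the IPC channel it is connected to, restores the string to an object with JSON.parse, and only then triggers the message event to pass the message body to the application layer. Along the way, the message object is also filtered: if message.cmd starts with the NODE_ prefix, it triggers the internal event internalMessage instead; if message.cmd is NODE_HANDLE, the child takes message.type together with the received file descriptor and restores the corresponding object. A diagram in the original article illustrates this process.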

Take a worker process in cluster notifying the master process to create a server instance as an example. The worker pseudocode is as follows:

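// worker process (pseudocode)
const payload = {}; // placeholder for the real message body
const message = {
  cmd: 'NODE_CLUSTER',
  type: 'net.Server',
  msg: payload
};

process.send(message);

The master pseudocode is as follows: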

worker.process.on('internalMessage', fn);
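The filtering step described in this section can be modeled in a few lines. This is a simplified illustration of the idea, not Node's actual source: dispatch is a hypothetical function and a plain EventEmitter stands in for the process object.

```javascript
const { EventEmitter } = require('events');

// Parse the raw text read from the channel, then route it.
// Messages whose cmd starts with 'NODE_' go to 'internalMessage' only,
// so application code listening on 'message' never sees them.
function dispatch(target, rawLine) {
  const message = JSON.parse(rawLine);
  const isInternal =
    message !== null &&
    typeof message === 'object' &&
    typeof message.cmd === 'string' &&
    message.cmd.startsWith('NODE_');
  target.emit(isInternal ? 'internalMessage' : 'message', message);
}

const channel = new EventEmitter();
const seen = [];
channel.on('message', (m) => seen.push(['message', m]));
channel.on('internalMessage', (m) => seen.push(['internalMessage', m.cmd]));

dispatch(channel, JSON.stringify({ cmd: 'NODE_CLUSTER', type: 'net.Server' }));
dispatch(channel, JSON.stringify({ greeting: 'hello' }));
console.log(seen);
```

The first message reaches only the internal listener and the second only the application listener, which is why cluster's bookkeeping traffic does not show up in user code.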

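4. How port sharing works

In the earlier example, the servers created in multiple workers all listen on the same port, 3000. Normally, when multiple processes listen on the same port, the system raises an EADDRINUSE error. Why is cluster fine?

In independently started processes, the file descriptors of the TCP server sockets are different, so listening on the same port throws an error. For servers restored from a handle sent with send(), however, the file descriptors are the same, so listening on the same port does not cause an error.

Note that when multiple applications listen on the same port, the file descriptor can be used by only one process at a time. In other words, when a network request reaches the server, only one lucky process grabs the connection and only that process can serve the request; the processes serve requests preemptively.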
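The EADDRINUSE case is easy to reproduce. The sketch below binds two independent server sockets inside one process for brevity; two separately started processes fail the same way because each creates its own socket. listenTwice is a hypothetical helper name, and port 0 is used so the example does not depend on port 3000 being free.

```javascript
const net = require('net');

// Binds two independent server sockets to the same port and reports what happens.
function listenTwice() {
  return new Promise((resolve, reject) => {
    const first = net.createServer();
    const second = net.createServer();
    first.once('error', reject);
    // Port 0 lets the OS pick a free port.
    first.listen(0, '127.0.0.1', () => {
      const { port } = first.address();
      // Expected path: the second bind fails and we report the error code.
      second.once('error', (err) => first.close(() => resolve(err.code)));
      // Fallback path, in case the second bind unexpectedly succeeds.
      second.once('listening', () => {
        second.close(() => first.close(() => resolve('no error')));
      });
      second.listen(port, '127.0.0.1');
    });
  });
}

listenTwice().then((code) => console.log(code));
```

On typical systems this prints EADDRINUSE, the error that cluster avoids by having workers reuse one listening socket instead of each binding its own.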

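5. How requests are distributed to multiple workers

- Whenever a worker process creates a server instance to listen for requests, it registers with the master over the IPC channel. When a client request arrives, the master is responsible for forwarding it to the corresponding worker;

- Which worker receives it is decided by the scheduling policy, which can be set through the NODE_CLUSTER_SCHED_POLICY environment variable or through cluster.schedulingPolicy before cluster.setupMaster() or the first fork() is called. The default policy is round-robin (SCHED_RR), except on Windows;

- When a client request arrives, the master walks through the worker list, finds the first idle worker, and forwards the request to it;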
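The hand-off described in the list above can be modeled as a queue of idle workers. This is a simplified sketch of the round-robin idea, not Node's internal implementation; the RoundRobin class and its method names are hypothetical.

```javascript
// Idle workers wait in a queue; the next connection goes to the worker at the
// front, which then rejoins the back of the queue.
class RoundRobin {
  constructor(workerIds) {
    this.free = [...workerIds]; // workers ready to take a connection
    this.pending = [];          // connections waiting for a free worker
  }

  // Called when the master accepts a connection.
  distribute(connection) {
    this.pending.push(connection);
    return this.handoff();
  }

  // Pairs the oldest pending connection with the first free worker, if both exist.
  handoff() {
    if (this.free.length === 0 || this.pending.length === 0) return null;
    const worker = this.free.shift();
    const connection = this.pending.shift();
    this.free.push(worker); // assumption: the worker accepts and is ready again
    return { worker, connection };
  }
}

const rr = new RoundRobin([1, 2, 3]);
const order = ['a', 'b', 'c', 'd'].map((c) => rr.distribute(c).worker);
console.log(order); // [ 1, 2, 3, 1 ]
```

With three workers, the fourth connection wraps around to the first worker again.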

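6. How pm2 works

pm2 is a process manager for Node. It simplifies many tedious Node application management tasks, such as performance monitoring, automatic restarts, and load balancing.

pm2 itself is built on top of the cluster module. This section mainly covers pm2's Satan process, the God Daemon process, and the remote procedure calls (RPC) between the two.

Satan mainly refers to the fallen angel in the Bible, regarded as the source of evil and darkness opposed to the power of God; in other words, the opposite of God.

Satan.js provides methods for exiting and killing programs, while God.js is responsible for keeping processes running. Once started, the God process keeps running; it plays the role of the master process in cluster and keeps the worker processes running normally.

RPC (Remote Procedure Call) means calling a procedure remotely. Say there are two servers, A and B, and an application deployed on A wants to call a function or method provided by an application on B. Because the two do not share a memory space, the call cannot be made directly; the semantics of the call and its data have to be conveyed over the network. Method calls between different processes on the same machine also fall within the scope of RPC. The execution flow is as follows:

Every command entered on the command line runs the Satan program once. If the God process is not running, it has to be started first. Then, depending on the command, Satan calls the corresponding method in God over RPC to execute the relevant logic.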
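The RPC idea itself fits in a short sketch. This is a generic illustration and not pm2's implementation: createRpcServer, createRpcClient, and the start and list methods are hypothetical names, and the transport is a direct function call where a real setup would use a socket between two processes.

```javascript
// Server side: a table of callable methods (stand-ins for what a daemon exposes).
function createRpcServer(methods) {
  return function handle(requestText) {
    const { id, method, args } = JSON.parse(requestText);
    const known = Object.prototype.hasOwnProperty.call(methods, method);
    if (!known || typeof methods[method] !== 'function') {
      return JSON.stringify({ id, error: `unknown method: ${method}` });
    }
    return JSON.stringify({ id, result: methods[method](...args) });
  };
}

// Client side: serializes the call, passes it to a transport, unwraps the reply.
function createRpcClient(transport) {
  let nextId = 0;
  return function call(method, ...args) {
    const request = JSON.stringify({ id: ++nextId, method, args });
    const reply = JSON.parse(transport(request));
    if (reply.error) throw new Error(reply.error);
    return reply.result;
  };
}

// The "daemon" keeps a process table; the "CLI" only sends method names and arguments.
const processes = [];
const server = createRpcServer({
  start(script, instances) {
    for (let i = 0; i < instances; i++) processes.push({ script, status: 'online' });
    return processes.length;
  },
  list() {
    return processes.map((p) => p.script);
  },
});
const call = createRpcClient(server);

console.log(call('start', 'app.js', 4)); // 4
console.log(call('list'));
```

The caller never touches the process table directly; it only names a method and supplies arguments, which is the property that lets a short-lived command-line process drive a long-running daemon.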

Taking pm2 start app.js -i 4 as an example, God configures cluster on first run and listens for cluster events:

// Configure cluster
cluster.setupMaster({
  exec: path.resolve(path.dirname(module.filename), 'ProcessContainer.js')
});

// Listen for cluster events
(function initEngine() {
  cluster.on('online', function(clu) {
    // The worker process is running
    God.clusters_db[clu.pm_id].status = 'online';
  });

  // `kill pid` on the command line triggers the exit event; process.kill does not
  cluster.on('exit', function(clu, code, signal) {
    // Restart the process; if it restarts too frequently, mark it as stopped
    God.clusters_db[clu.pm_id].status = 'starting';

    // logic
    // ...
  });
})();

After God starts, the RPC connection between Satan and God is established, and then the prepare method is called. prepare calls cluster.fork to start the cluster:

God.prepare = function(opts, cb) {
  // ...
  return execute(opts, cb);
};

function execute(env, cb) {
  // ...
  var clu = cluster.fork(env);
  // ...
  God.clusters_db[id] = clu;

  clu.once('online', function() {
    God.clusters_db[id].status = 'online';
    if (cb) return cb(null, clu);
    return true;
  });

  return clu;
}

7. Summary

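This article covered the basic usage and events of cluster, then its underlying implementation, and finally how pm2 manages processes on top of cluster, taking you from the basics through the internals to an advanced application. I hope it helps.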


From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

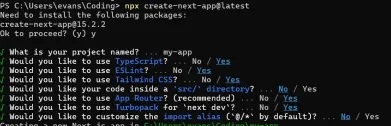

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AM

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AMJavaScript is the cornerstone of modern web development, and its main functions include event-driven programming, dynamic content generation and asynchronous programming. 1) Event-driven programming allows web pages to change dynamically according to user operations. 2) Dynamic content generation allows page content to be adjusted according to conditions. 3) Asynchronous programming ensures that the user interface is not blocked. JavaScript is widely used in web interaction, single-page application and server-side development, greatly improving the flexibility of user experience and cross-platform development.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

WebStorm Mac version

Useful JavaScript development tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool