This article analyzes file streams in Node.js. I hope it is helpful to everyone!

File stream

The storage media in a computer differ in read and write speed and in capacity, so during an operation one side can be left waiting for a long time. Streams ease this by moving data in small pieces instead of all at once.

There are three main types of file streams: the input stream (Readable), the output stream (Writable), and the duplex stream (Duplex). A fourth type, the transform stream (Transform), is used less often.
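Since the transform stream is the least familiar of the four, here is a minimal sketch of one. The upper-casing logic, the variable names, and the in-memory input built with Readable.from() are illustrative assumptions and do not come from the original article.

```javascript
const { Readable, Transform } = require('stream');

// A Transform is both writable and readable: every chunk written to it
// goes through transform(), and whatever transform() emits can be read out.
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  },
});

const pieces = [];
upperCase.on('data', (chunk) => pieces.push(chunk.toString()));

// Resolves with the concatenated output once the transform has finished.
const transformed = new Promise((resolve, reject) => {
  upperCase.on('end', () => resolve(pieces.join('')));
  upperCase.on('error', reject);
});

// Readable.from() builds a small in-memory input stream for the demo.
Readable.from(['file ', 'stream']).pipe(upperCase);

transformed.then((text) => console.log(text));
```

In a file-processing program the same transform could sit between a read stream and a write stream.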

Node provides the stream module. It exports two base classes, Readable and Writable. Concrete streams inherit from these classes, so they share many common methods.
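The inheritance relationship can be checked directly. The sketch below assumes a scratch file in the system temp directory (the file name is hypothetical) and only inspects the stream objects without reading or writing anything.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { Readable, Writable } = require('stream');

// Hypothetical scratch file, created only for this demonstration.
const demoFile = path.join(os.tmpdir(), 'stream-classes-demo.txt');
fs.writeFileSync(demoFile, 'hello');

const rs = fs.createReadStream(demoFile);
const ws = fs.createWriteStream(demoFile, { flags: 'a' });

// File streams are instances of the base classes from the stream module.
const rsIsReadable = rs instanceof Readable;
const wsIsWritable = ws instanceof Writable;

// Both also share the event methods such as on().
const bothHaveOn = typeof rs.on === 'function' && typeof ws.on === 'function';

console.log(rsIsReadable, wsIsWritable, bothHaveOn);

// Release the file handles; nothing was read or written.
rs.destroy();
ws.destroy();
```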

Readable stream (Readable)

Input stream: data flows from the source into memory. For a file, the data on disk is transferred into memory.

createReadStream

fs.createReadStream(path, configuration)

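The configuration object includes: encoding (how the data is decoded), start (the byte offset at which reading starts), end (the byte offset at which reading ends), and highWaterMark (the amount read each time). Both start and end are inclusive and count from 0.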

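highWaterMark: the maximum amount of data fetched from the file per read, counted in bytes (64 KiB by default for fs.createReadStream). If encoding is null, each chunk arrives as a Buffer of at most that many bytes; if encoding has a value, the same bytes are decoded into a string before the chunk is delivered.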
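A small experiment shows the effect of highWaterMark when no encoding is set. This is only a sketch: the scratch file name and its 8-byte content are assumptions made for the demonstration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file holding 8 single-byte characters.
const sample = path.join(os.tmpdir(), 'hwm-demo.txt');
fs.writeFileSync(sample, 'abcdefgh');

// No encoding is set, so every chunk arrives as a Buffer.
const rs = fs.createReadStream(sample, { highWaterMark: 3 });

const sizes = [];
rs.on('data', (chunk) => sizes.push(chunk.length));

// Resolves with the byte length of every chunk once reading is done.
const chunkSizes = new Promise((resolve, reject) => {
  rs.on('end', () => resolve(sizes));
  rs.on('error', reject);
});

chunkSizes.then((list) => console.log(list));
```

No chunk should be larger than 3 bytes, and the sizes should add up to the 8 bytes in the file.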

It returns a ReadStream, which is a subclass of Readable.

const readable = fs.createReadStream(filename, {
  encoding: 'utf-8',
  start: 1,
  end: 2,
  // highWaterMark:
});
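To see what start: 1 and end: 2 select, the following sketch reads that range from a scratch file. The file name and its content 'abcdef' are assumptions for illustration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file: byte 0 is 'a', byte 1 is 'b', and so on.
const sample = path.join(os.tmpdir(), 'range-demo.txt');
fs.writeFileSync(sample, 'abcdef');

// start and end are zero-based and inclusive, so this reads bytes 1 and 2.
const rs = fs.createReadStream(sample, {
  encoding: 'utf-8',
  start: 1,
  end: 2,
});

let text = '';
rs.on('data', (chunk) => {
  text += chunk;
});

// Resolves with everything that was read from the selected range.
const rangeText = new Promise((resolve, reject) => {
  rs.on('end', () => resolve(text));
  rs.on('error', reject);
});

rangeText.then((result) => console.log(result)); // prints "bc"
```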

Register event

readable.on(event name, handler function)

readable.on('open', (fd) => {
  // 'open' receives the file descriptor
  // console.log(fd);
  console.log('File opened');
})

readable.on('error', (err) => {
  // 'error' receives a single Error argument
  console.log(err);
  console.log('An error occurred while reading the file');
})

readable.on('close', () => {
  // 'close' passes no arguments
  console.log('File closed');
})

// 'close' fires when readable.close() is called manually, or automatically once
// the file has been fully read (the autoClose option defaults to true)
readable.close()

readable.on('data', (data) => {
  console.log(data);
  console.log('Reading the file');
})

readable.on('end', () => {
  console.log('File reading finished');
})

Pause reading

readable.pause() pauses reading and triggers the pause event.

Resume reading

readable.resume() resumes reading and triggers the resume event.
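The sketch below puts pause() and resume() together: it pauses after every chunk and resumes a little later, as a slow consumer might. The scratch file, its content, the 10 ms delay, and the small highWaterMark are all assumptions chosen to keep the demo short.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file for the demonstration.
const sample = path.join(os.tmpdir(), 'pause-demo.txt');
const content = 'abcdefghij';
fs.writeFileSync(sample, content);

const rs = fs.createReadStream(sample, {
  encoding: 'utf-8',
  highWaterMark: 4, // small reads so that several chunks arrive
});

const chunks = [];
let pauseCount = 0;
rs.on('pause', () => {
  pauseCount += 1;
});

rs.on('data', (chunk) => {
  chunks.push(chunk);
  rs.pause(); // stop the flow of 'data' events
  setTimeout(() => rs.resume(), 10); // simulate slow work, then continue
});

// Resolves once everything has been read despite the pauses.
const finished = new Promise((resolve, reject) => {
  rs.on('end', () =>
    resolve({ text: chunks.join(''), chunkCount: chunks.length, pauseCount })
  );
  rs.on('error', reject);
});

finished.then((result) => console.log(result));
```

Pausing never loses data: the stream buffers at most highWaterMark bytes and delivers them after resume().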

Writable stream

const ws = fs.createWriteStream(filename[, configuration])

ws.write(data)

Writes one piece of data. The data can be a string or a Buffer, and the call returns a Boolean.

If it returns true, the write channel is not yet full and the next piece of data can be written right away. The capacity of the write channel is the size given by highWaterMark in the configuration.

If it returns false, the write channel is full and the remaining data has to wait, which creates back pressure.
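One way to see why write() returns what it does is to log the number of buffered bytes next to each return value. The sketch assumes a scratch output file (hypothetical name) and uses the writableLength property to inspect the buffer.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file for the demonstration.
const target = path.join(os.tmpdir(), 'write-demo.txt');
const ws = fs.createWriteStream(target, { highWaterMark: 2 });

// The channel is measured in bytes, and one Chinese character takes
// several bytes in UTF-8.
const bytesForOneChar = Buffer.byteLength('刘');
console.log(bytesForOneChar);

const log = [];
for (let i = 0; i < 3; i += 1) {
  const ok = ws.write('a');
  // writableLength: bytes currently waiting in the write buffer
  log.push({ ok, buffered: ws.writableLength });
}
console.log(log);

ws.end();
```

write() returns true only while the buffered amount stays below highWaterMark, so with a limit of 2 the second single-byte write already returns false.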

const ws = fs.createWriteStream(filename, {
  encoding: 'utf-8',
  highWaterMark: 2
})

const flag = ws.write('刘');
// false: '刘' takes 3 bytes in UTF-8, which already exceeds highWaterMark (2)
console.log(flag); // false

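Although write() is executed only once here, the data keeps being written as soon as the channel has free space, and no further value is returned.

ws.write() returns a value only once per call.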

const flag = ws.write('a');
console.log(flag);

const flag1 = ws.write('a');
console.log(flag1);

const flag2 = ws.write('a');
console.log(flag2);

const flag3 = ws.write('a');
console.log(flag3);

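Output order: true, false, false, false

After the second write the channel already holds two bytes, which reaches highWaterMark, so that call and every later one returns false.

Use streams to copy files and solve back pressure problems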

const fs = require('fs');
const path = require('path');

const filename = path.resolve(__dirname, './file/write.txt');
const wsfilename = path.resolve(__dirname, './file/writecopy.txt');

const ws = fs.createWriteStream(wsfilename);
const rs = fs.createReadStream(filename);

rs.on('data', chunk => {
  const flag = ws.write(chunk);
  if (!flag) {
    // The write channel is full: stop reading until it drains
    rs.pause();
  }
})

ws.on('drain', () => {
  // The write channel has emptied: continue reading
  rs.resume();
})

rs.on('close', () => {
  ws.end();
  console.log('copy end');
})

pipe

With pipe you can connect a readable stream directly to a writable stream. Chaining streams this way also takes care of the back pressure problem.

rs.pipe(ws);

rs.on('close', () => {
  // pipe() ends ws automatically when rs ends, so no ws.end() call is needed
  console.log('copy end');
})

Having worked through this, I find file streams very convenient for reading and writing large amounts of file data: the work gets done quickly and efficiently. Compared with writeFile and readFile, streams are much more efficient, and handled correctly they cause no significant blocking.
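As a follow-up to the pipe example, the sketch below copies a file with stream.pipeline, which pipes the streams together like pipe() and additionally reports an error from either stream in one place. The helper name copyWithStreams and the scratch file names are hypothetical, chosen only for this illustration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream');
const { promisify } = require('util');

const pipelineAsync = promisify(pipeline);

// copyWithStreams is a hypothetical helper name, not part of Node's API.
async function copyWithStreams(from, to) {
  // pipeline() connects the streams, handles back pressure,
  // and rejects if either stream fails.
  await pipelineAsync(fs.createReadStream(from), fs.createWriteStream(to));
}

// Hypothetical scratch files for the demonstration.
const source = path.join(os.tmpdir(), 'pipeline-src.txt');
const dest = path.join(os.tmpdir(), 'pipeline-dest.txt');
fs.writeFileSync(source, 'copy me with a stream');

// Resolves with the content of the copy once the pipeline has finished.
const copied = copyWithStreams(source, dest).then(() =>
  fs.readFileSync(dest, 'utf-8')
);

copied.then((text) => console.log(text));
```

Unlike readFile followed by writeFile, this never holds the whole file in memory, only one chunk at a time.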

The above is the detailed content of An in-depth analysis of file flow in node.js. For more information, please follow other related articles on the PHP Chinese website!

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

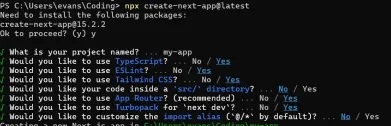

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver Mac version

Visual web development tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool